This blog post focuses on new features and improvements. For a complete list, including bug fixes, please see the release notes.

Trinity Mini – A New U.S.-Built Open-Weight Reasoning Model on Clarifai

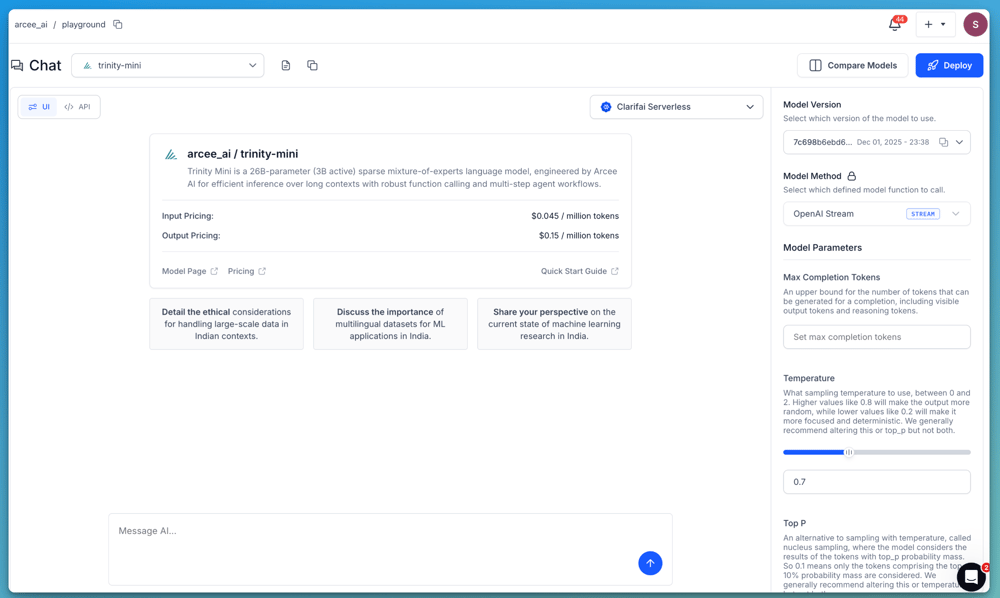

This release brings Trinity Mini, the latest open-weight model from Arcee AI, now available directly on Clarifai. Trinity Mini is a 26B-parameter Mixture-of-Experts (MoE) model with 3B active parameters, designed for reasoning-heavy workloads and agentic AI applications.
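To put the 26B-total, 3B-active split in perspective, here is a back-of-the-envelope sketch. It assumes the common approximation that a forward pass costs roughly 2 FLOPs per active parameter per token and ignores attention and expert-routing overhead, so treat the numbers as rough orders of magnitude rather than measured figures.

```python
# Back-of-the-envelope MoE arithmetic for Trinity Mini, using the figures
# from the post: 26B total parameters, 3B active per token.
# Assumption: ~2 FLOPs per active parameter per token for a forward pass,
# ignoring attention and expert-routing overhead.

TOTAL_PARAMS = 26e9
ACTIVE_PARAMS = 3e9


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters used for each token."""
    return active / total


def forward_flops_per_token(params: float) -> float:
    """Approximate forward-pass FLOPs per token (2 * parameters)."""
    return 2 * params


if __name__ == "__main__":
    frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
    moe = forward_flops_per_token(ACTIVE_PARAMS)
    # Hypothetical dense model with the same total parameter count, for comparison.
    dense = forward_flops_per_token(TOTAL_PARAMS)
    print(f"Active fraction: {frac:.1%}")
    print(f"~{moe / 1e9:.0f} GFLOPs/token (MoE) vs ~{dense / 1e9:.0f} GFLOPs/token (dense)")
```

This prints an active fraction of about 11.5% and roughly 6 versus 52 GFLOPs per token. Note that all 26B parameters still have to be held in memory; the saving is in per-token compute, not in storage.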

The model builds on the success of AFM-4.5B, incorporating advanced math, code, and reasoning capabilities. Training was carried out on 512 H200 GPUs using Prime Intellect's infrastructure, ensuring efficiency and scalability at every stage.

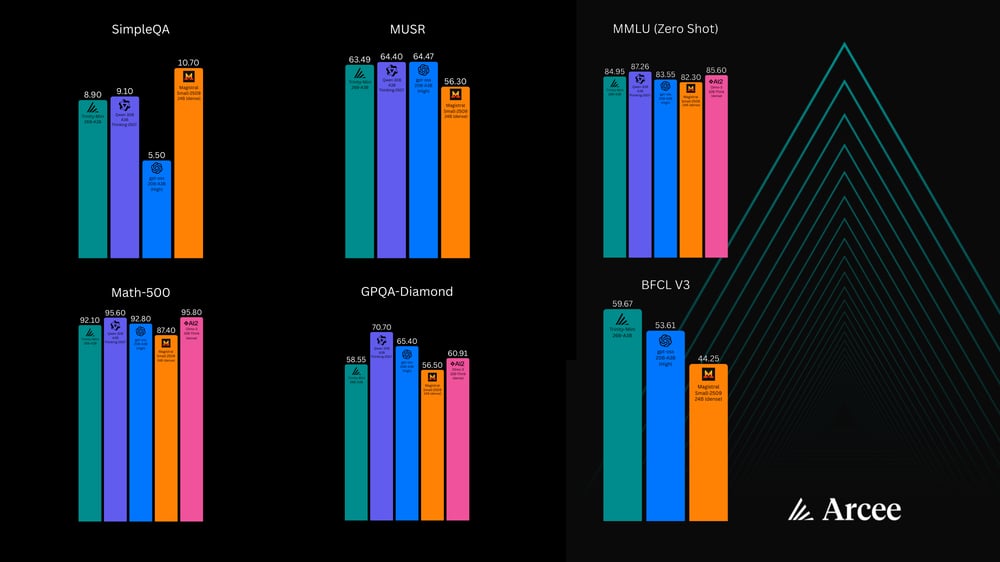

On benchmarks, Trinity Mini shows strong reasoning performance across multiple evaluation suites. It scores 8.90 on SimpleQA, 63.49 on MUSR, and 84.95 on MMLU (Zero Shot). For math-focused workloads, the model reaches 92.10 on Math-500. It also scores 58.55 on GPQA-Diamond, a graduate-level science benchmark, and 59.67 on BFCL V3, a function-calling benchmark. These results place Trinity Mini close to larger instruction-tuned models while retaining the efficiency benefits of its compact MoE design.

We have partnered with Arcee to make Trinity Mini fully accessible through Clarifai, giving you an easy way to explore the model, evaluate its outputs, compare it against other reasoning models available on the platform, and integrate it into your own applications.

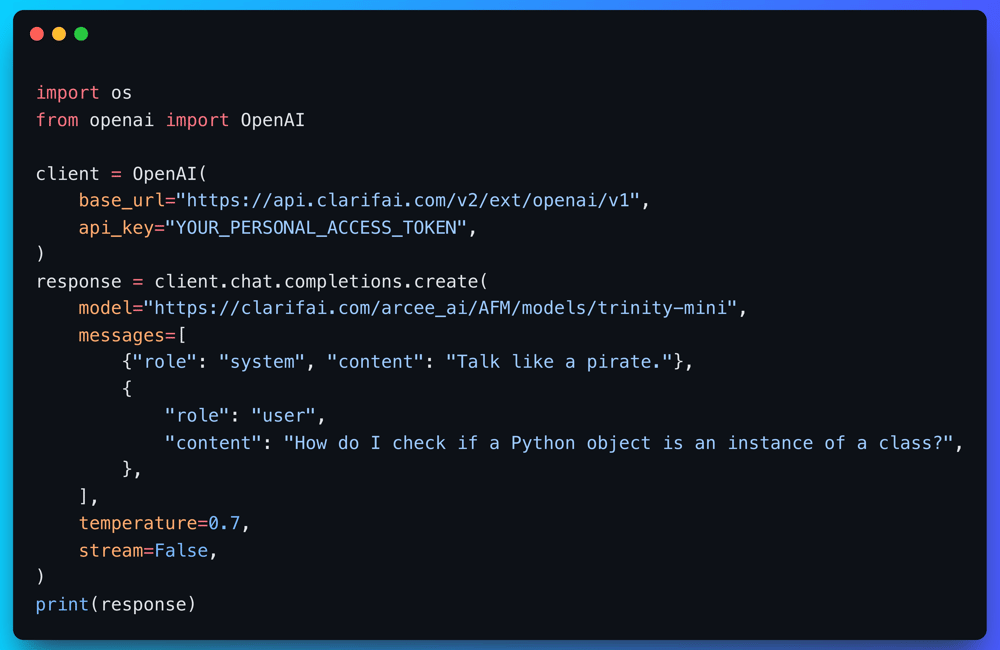

Developers can also start using Trinity Mini right away through Clarifai's OpenAI-compatible API. Check out the guide here.
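As a minimal sketch of what a call through the OpenAI-compatible API could look like, the snippet below builds a standard OpenAI-style chat-completions request using only Python's standard library. The base URL reflects Clarifai's OpenAI-compatible endpoint as we understand it, and the model identifier is a hypothetical placeholder; check both against the guide before use. Pointing the official `openai` Python client at the same base URL is the more common route.

```python
import json
import os
import urllib.request

# Assumption: Clarifai's OpenAI-compatible base URL; confirm it in the guide.
BASE_URL = "https://api.clarifai.com/v2/ext/openai/v1"
# Hypothetical placeholder: copy the real Trinity Mini model URL from its model page.
MODEL = "https://clarifai.com/<user-id>/<app-id>/models/<trinity-mini-model-id>"


def build_chat_request(prompt: str, pat: str, model: str = MODEL,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions POST request without sending it."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {pat}",  # Clarifai Personal Access Token (PAT)
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Only hits the network when a PAT is available in the environment.
if __name__ == "__main__" and "CLARIFAI_PAT" in os.environ:
    req = build_chat_request("Explain mixture-of-experts in two sentences.",
                             os.environ["CLARIFAI_PAT"])
    print(send(req))
```

Keeping request construction separate from sending makes it easy to inspect the payload before anything goes over the network. Because the body follows the OpenAI chat-completions shape, the same builder should in principle also work against OpenRouter by swapping in its base URL and model slug.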

You can also access Trinity Mini via Clarifai on OpenRouter. Check it out here.

Model Decommissioning

We're upgrading our infrastructure, and several legacy models will be decommissioned on December 31, 2025. These older task-specific models are being retired as we transition to newer, more capable model families that now cover the same workflows with better performance and stability.

All affected functionality remains fully supported on the platform through existing models. If you need help choosing replacements, we have prepared a detailed guide covering recommended alternatives for text classification, image recognition, image moderation, OCR, and document workflows. Check out the decommissioning and alternatives guide.

Published Models

Ministral-3-14B-Reasoning-2512

Ministral-3-14B-Reasoning-2512 is now available on Clarifai. It is the largest model in the Ministral 3 family and is designed for math, coding, STEM workloads, and multi-step reasoning. The model combines a ~13.5B-parameter language backbone with a ~0.4B-parameter vision encoder, allowing it to accept both text and image inputs.

It supports context lengths of up to 256k tokens, making it suitable for long documents and extended reasoning pipelines. The model runs efficiently in BF16 on 32 GB of VRAM, with quantized versions requiring less memory, which makes it practical for private deployments and custom agent use cases.
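The 32 GB figure follows from simple arithmetic on the weights. The sketch below estimates weight memory only, from the ~13.5B + ~0.4B parameter counts quoted above; it ignores the KV cache, activations, and runtime overhead, which grow with context length and matter a great deal near 256k tokens. The 8-bit and 4-bit entries are illustrative quantization levels, not specific released variants.

```python
# Rough weight-memory estimate for Ministral-3-14B-Reasoning-2512.
# Parameter counts are the approximate figures from the post; KV cache,
# activations, and framework overhead are deliberately NOT included.

PARAMS = 13.5e9 + 0.4e9  # language backbone + vision encoder

BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit weights
    "int8": 1.0,  # illustrative 8-bit quantization
    "int4": 0.5,  # illustrative 4-bit quantization
}


def weight_gb(params: float, bytes_per_param: float) -> float:
    """Weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for dtype, size in BYTES_PER_PARAM.items():
        print(f"{dtype}: ~{weight_gb(PARAMS, size):.1f} GB of weights")
```

In BF16 this comes to roughly 27.8 GB of weights, so a 32 GB card leaves only a few gigabytes for the KV cache and activations; quantized weights free up correspondingly more headroom.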

Try Ministral-3-14B-Reasoning-2512

GLM-4.6

GLM-4.6 is now available on Clarifai. This model unifies reasoning, coding, and agentic capabilities in a single system, making it suitable for multi-skill assistants, tool-using agents, and complex automation workflows. It supports a 200k-token context window, allowing it to handle long documents, extended plans, and multi-step tasks in a single sequence.

The model delivers strong performance across reasoning and coding evaluations, with improvements in real-world coding agents such as Claude Code, Cline, Roo Code, and Kilo Code. GLM-4.6 is designed to interact cleanly with tools, generate structured outputs, and operate reliably inside agent frameworks.
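Tool use in agent frameworks typically goes through the OpenAI-style `tools` field of a chat-completions request. The sketch below builds such a payload and parses the resulting tool calls; it assumes GLM-4.6 accepts tool definitions through Clarifai's OpenAI-compatible API, and both the model identifier and the `get_weather` tool are hypothetical placeholders.

```python
import json

# Hypothetical placeholder: use the real GLM-4.6 model URL from its Clarifai model page.
MODEL = "https://clarifai.com/<user-id>/<app-id>/models/<glm-4.6-model-id>"

# A hypothetical tool, described with a JSON Schema for its arguments.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
            },
            "required": ["city"],
        },
    },
}


def build_tool_payload(prompt: str, tools: list) -> dict:
    """Build an OpenAI-style chat-completions body that offers tools to the model."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "tools": tools,
        "tool_choice": "auto",  # let the model decide whether to call a tool
    }


def parse_tool_calls(message: dict) -> list:
    """Extract (name, arguments) pairs from an assistant message's tool_calls."""
    calls = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        # In the OpenAI format, arguments arrive as a JSON-encoded string.
        calls.append((fn["name"], json.loads(fn["arguments"])))
    return calls


if __name__ == "__main__":
    payload = build_tool_payload("What's the weather in Paris?", [WEATHER_TOOL])
    print(json.dumps(payload, indent=2))
```

`parse_tool_calls` covers the other half of the loop: when the model decides to call a tool, the response message carries `tool_calls` whose `arguments` field is a JSON string that your code decodes, executes, and returns to the model as a `tool` message.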

Control Center

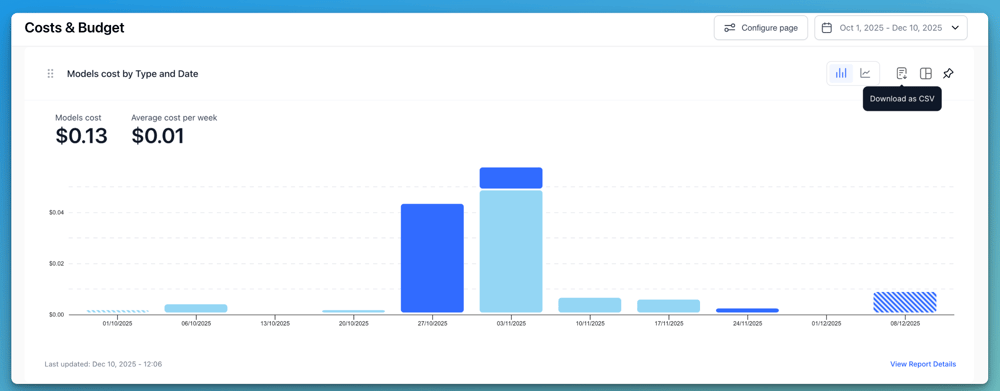

The Control Center gives users a unified view of usage, performance, and activity across their models and deployments. It is designed to help teams track consumption patterns, monitor workloads, and better understand how their applications are running on Clarifai.

In this release, we added a new option to export each chart's data as a CSV file, making it easier to analyze metrics outside the dashboard or feed them into custom reporting workflows.
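Once a chart is exported, the CSV can be processed with standard tooling. The sketch below totals a metric per model using Python's `csv` module; the column names (`date`, `model`, `requests`) and the inline sample rows are hypothetical, since the actual export schema depends on the chart, so adjust them to match the headers in your file.

```python
import csv
import io
from collections import defaultdict


def total_by_key(rows, key_col: str, value_col: str) -> dict:
    """Sum a numeric column grouped by another column."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key_col]] += float(row[value_col])
    return dict(totals)


def load_csv(path: str) -> list:
    """Read an exported chart CSV into a list of dicts keyed by header."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    # Inline sample standing in for an exported file (hypothetical columns and values).
    sample = io.StringIO(
        "date,model,requests\n"
        "2025-12-01,model-a,120\n"
        "2025-12-01,model-b,80\n"
        "2025-12-02,model-a,95\n"
    )
    rows = list(csv.DictReader(sample))
    print(total_by_key(rows, "model", "requests"))
```

For a real export, replace the inline sample with `load_csv("path/to/export.csv")`.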

Additional Changes

Platform Updates

- Updated account navigation with a cleaner layout and reordered menu items.
- Added support for custom profile images across the platform.
- PAT values in code snippets are now masked by default.

This release also includes several more usability and account-management improvements. Check them out here.

Python SDK

- Added platform specification support in `config.yaml` and the new `--platform` CLI option.
- Improved model loading validation for more reliable initialization.
- Refactored Dockerfile templates into a cleaner multi-stage build to simplify runner image creation.

Additional SDK improvements include better dependency parsing, improved environment handling, OpenAI Responses API support, and several runner reliability fixes. Learn more here.

Ready to Start Building?

You can start building with Trinity Mini on Clarifai today. Test the model in the Playground and integrate it into your workflows through the OpenAI-compatible API. Trinity Mini is fully open-weight and production-ready, making it easy to build agents, reasoning pipelines, or long-context applications without extra setup.

If you need help choosing the right configuration or want to deploy the model at scale, our team can guide you. Reach out to the team here.