In this tutorial, we demonstrate how to use Ibis to build a portable, in-database feature engineering pipeline that looks and feels like Pandas but executes entirely inside the database. We show how to connect to DuckDB, register data safely inside the backend, and define complex transformations using window functions and aggregations without ever pulling raw data into local memory. By keeping all transformations lazy and backend-agnostic, we demonstrate how to write analytics code once in Python and rely on Ibis to translate it into efficient SQL.

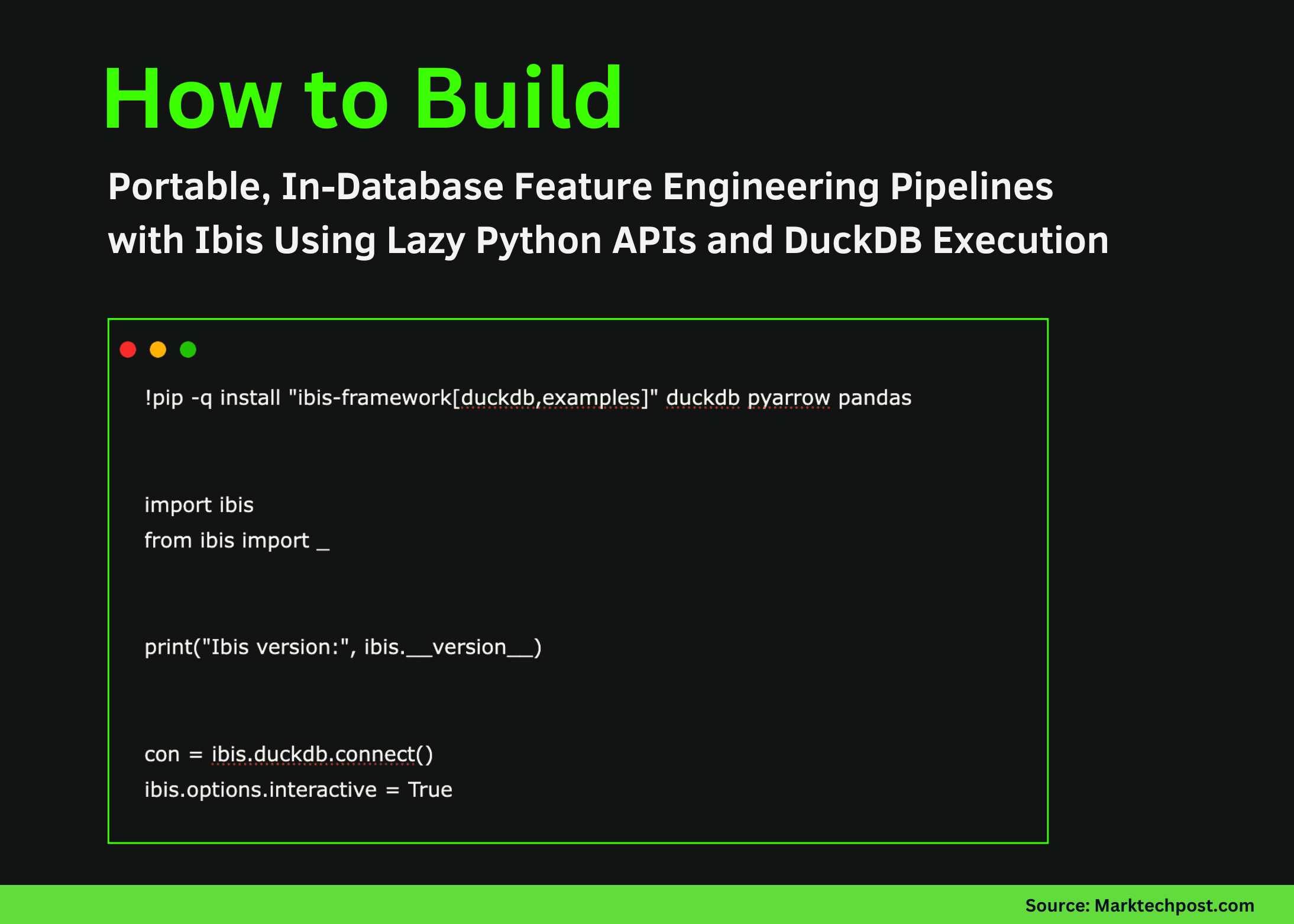

!pip -q install "ibis-framework[duckdb,examples]" duckdb pyarrow pandas

import ibis
from ibis import _

print("Ibis version:", ibis.__version__)

con = ibis.duckdb.connect()
ibis.options.interactive = True

We install the required libraries and initialize the Ibis environment. We open an in-memory DuckDB connection and enable interactive mode, which executes an expression only when it is displayed, so the pipeline definitions themselves stay lazy and backend-driven.

try:
    # Load the example dataset directly into our DuckDB connection when supported.
    base_expr = ibis.examples.penguins.fetch(backend=con)
except TypeError:
    # Fall back to the default backend if this Ibis version rejects the argument.
    base_expr = ibis.examples.penguins.fetch()

if "penguins" not in con.list_tables():
    try:
        con.create_table("penguins", base_expr, overwrite=True)
    except Exception:
        # Last resort: materialize locally, then upload the result.
        con.create_table("penguins", base_expr.execute(), overwrite=True)

t = con.table("penguins")
print(t.schema())

We load the Penguins dataset and explicitly register it in the DuckDB catalog so that it is available for SQL execution. We then print the table schema to confirm that the data now lives inside the database rather than in local memory.

def penguin_feature_pipeline(penguins):
    # Row-level derived features.
    base = penguins.mutate(
        bill_ratio=_.bill_length_mm / _.bill_depth_mm,
        is_male=(_.sex == "male").ifelse(1, 0),
    )

    # Drop rows with missing values in any column used downstream.
    cleaned = base.filter(
        _.bill_length_mm.notnull()
        & _.bill_depth_mm.notnull()
        & _.body_mass_g.notnull()
        & _.flipper_length_mm.notnull()
        & _.species.notnull()
        & _.island.notnull()
        & _.year.notnull()
    )

    w_species = ibis.window(group_by=[cleaned.species])
    # Frame of the current row plus the two preceding rows per island, ordered by year.
    w_island_year = ibis.window(
        group_by=[cleaned.island],
        order_by=[cleaned.year],
        preceding=2,
        following=0,
    )

    # Window features: per-species statistics, per-island rank, rolling island mean.
    feat = cleaned.mutate(
        species_avg_mass=cleaned.body_mass_g.mean().over(w_species),
        species_std_mass=cleaned.body_mass_g.std().over(w_species),
        mass_z=(
            cleaned.body_mass_g
            - cleaned.body_mass_g.mean().over(w_species)
        ) / cleaned.body_mass_g.std().over(w_species),
        island_mass_rank=cleaned.body_mass_g.rank().over(
            ibis.window(group_by=[cleaned.island])
        ),
        rolling_3yr_island_avg_mass=cleaned.body_mass_g.mean().over(
            w_island_year
        ),
    )

    # Aggregate to one row per (species, island, year).
    return feat.group_by(["species", "island", "year"]).agg(
        n=feat.count(),
        avg_mass=feat.body_mass_g.mean(),
        avg_flipper=feat.flipper_length_mm.mean(),
        avg_bill_ratio=feat.bill_ratio.mean(),
        avg_mass_z=feat.mass_z.mean(),
        avg_rolling_3yr_mass=feat.rolling_3yr_island_avg_mass.mean(),
        pct_male=feat.is_male.mean(),
    ).order_by(["species", "island", "year"])

We define a reusable feature engineering pipeline using pure Ibis expressions. We compute derived features, apply data cleaning, and use window functions and grouped aggregations to build advanced, database-native features while keeping the entire pipeline lazy.
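To make the per-species z-score concrete, here is a minimal plain-Python sketch of the same arithmetic on a handful of made-up rows. The toy data and the helper name `group_z_scores` are hypothetical, and we assume the sample standard deviation; this only illustrates the calculation and is not how Ibis or DuckDB evaluates the window.

```python
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical toy rows (species, body_mass_g); not taken from the Penguins data.
rows = [
    ("A", 3000.0),
    ("A", 3500.0),
    ("A", 4000.0),
    ("B", 5000.0),
    ("B", 5200.0),
]


def group_z_scores(rows):
    """Return (species, mass, z) where z uses the species' own mean and sample std."""
    by_species = defaultdict(list)
    for species, mass in rows:
        by_species[species].append(mass)
    # One (mean, sample std) pair per species, analogous to a window partitioned by species.
    stats = {s: (mean(m), stdev(m)) for s, m in by_species.items()}
    return [(s, m, (m - stats[s][0]) / stats[s][1]) for s, m in rows]


z_rows = group_z_scores(rows)
for species, mass, z in z_rows:
    print(species, mass, round(z, 3))
```

Each row keeps its identity and gains a value computed from its whole group, which is what distinguishes a window function from a grouped aggregation that collapses rows.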

features = penguin_feature_pipeline(t)

# Inspect the SQL that Ibis generates for DuckDB before running anything.
print(con.compile(features))

try:
    df = features.to_pandas()
except Exception:
    df = features.execute()

display(df.head())

We invoke the feature pipeline and compile it into DuckDB SQL to validate that all transformations are pushed down to the database. We then run the pipeline and return only the final aggregated results for inspection.
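To give a feel for the kind of SQL a rolling window turns into, the sketch below runs a hand-written window query with Python's built-in `sqlite3` module as a stand-in backend. This is an assumption-laden illustration: the table `obs`, its rows, and the query are made up, it requires a SQLite build with window-function support (3.25 or later), and it is not the SQL that Ibis actually emits for DuckDB.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in for a SQL backend.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE obs (island TEXT, year INTEGER, body_mass_g REAL)")
conn.executemany(
    "INSERT INTO obs VALUES (?, ?, ?)",
    [
        ("X", 2007, 1000.0),
        ("X", 2008, 2000.0),
        ("X", 2009, 3000.0),
        ("X", 2010, 4000.0),
        ("Y", 2007, 500.0),
        ("Y", 2008, 700.0),
    ],
)

# Average over the current row and the two preceding rows within each island.
query = """
SELECT island, year,
       AVG(body_mass_g) OVER (
           PARTITION BY island
           ORDER BY year
           ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
       ) AS rolling_avg_mass
FROM obs
ORDER BY island, year
"""
rolling = conn.execute(query).fetchall()
for row in rolling:
    print(row)
conn.close()
```

Note that the frame counts rows, not calendar years, so it matches a three-year window only when each island has exactly one row per year.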

con.create_table("penguin_features", features, overwrite=True)
feat_tbl = con.table("penguin_features")

try:
    preview = feat_tbl.limit(10).to_pandas()
except Exception:
    preview = feat_tbl.limit(10).execute()

display(preview)

# Export the materialized table to Parquet with DuckDB's COPY statement.
out_path = "/content/penguin_features.parquet"
con.raw_sql(f"COPY penguin_features TO '{out_path}' (FORMAT PARQUET);")
print(out_path)

We materialize the engineered features as a table directly inside DuckDB and query it lazily for verification. We also export the results to a Parquet file, demonstrating how we can hand off database-computed features to downstream analytics or machine learning workflows.
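Because the export statement is assembled with an f-string, a path containing a single quote would break the SQL. The following is a small sketch of one way to guard against that by doubling single quotes, the standard SQL string-literal escape. The helper names `sql_string_literal` and `build_copy_statement` are hypothetical and not part of Ibis or DuckDB, and the table name is assumed to be a trusted identifier.

```python
def sql_string_literal(value: str) -> str:
    """Quote a Python string as a SQL string literal by doubling single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_copy_statement(table_name: str, out_path: str) -> str:
    """Build a COPY ... TO ... (FORMAT PARQUET) statement with an escaped path.

    table_name is assumed to be a trusted identifier and is inserted as-is.
    """
    return f"COPY {table_name} TO {sql_string_literal(out_path)} (FORMAT PARQUET);"


stmt = build_copy_statement("penguin_features", "/content/penguin_features.parquet")
print(stmt)
```

The resulting string could then be passed to `con.raw_sql(...)` in place of the inline f-string.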

In conclusion, we built, compiled, and executed an advanced feature engineering workflow entirely inside DuckDB using Ibis. We demonstrated how to inspect the generated SQL, materialized the results directly in the database, and exported them for downstream use while preserving portability across analytical backends. This approach reinforces the core idea behind Ibis: we keep computation close to the data, minimize unnecessary data movement, and maintain a single, reusable Python codebase that scales from local experimentation to production databases.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.