How to Build Supplier Analytics With Salesforce SAP Integration on Databricks

Supplier data touches nearly every part of an organization, from procurement and supply chain management to finance and analytics. Yet it is often spread across systems that don't communicate with each other. For example, Salesforce holds vendor profiles, contacts, and account details, while SAP S/4HANA manages invoices, payments, and general ledger entries. Because these systems operate independently, teams lack a complete view of supplier relationships. The result is slow reconciliation, duplicate records, and missed opportunities to optimize spend.

Databricks solves this by connecting both systems on one governed data and AI platform. Using Lakeflow Connect for Salesforce data ingestion and SAP Business Data Cloud (BDC) Connect, teams can unify CRM and ERP data without duplication. The result is a single, trusted view of vendors, payments, and performance metrics that supports procurement and finance use cases as well as analytics.

In this how-to, you'll learn how to connect both data sources, build a blended pipeline, and create a gold layer that powers analytics and conversational insights through AI/BI Dashboards and Genie.

Why Zero-Copy SAP Salesforce Data Integration Works

Most enterprises try to connect SAP and Salesforce through traditional ETL or third-party tools. These methods create multiple data copies, introduce latency, and make governance difficult. Databricks takes a different approach.

-

Zero-copy SAP entry: The SAP BDC Connector for Databricks offers you ruled, real-time entry to SAP S/4HANA knowledge merchandise by means of Delta Sharing. No exports or duplication.

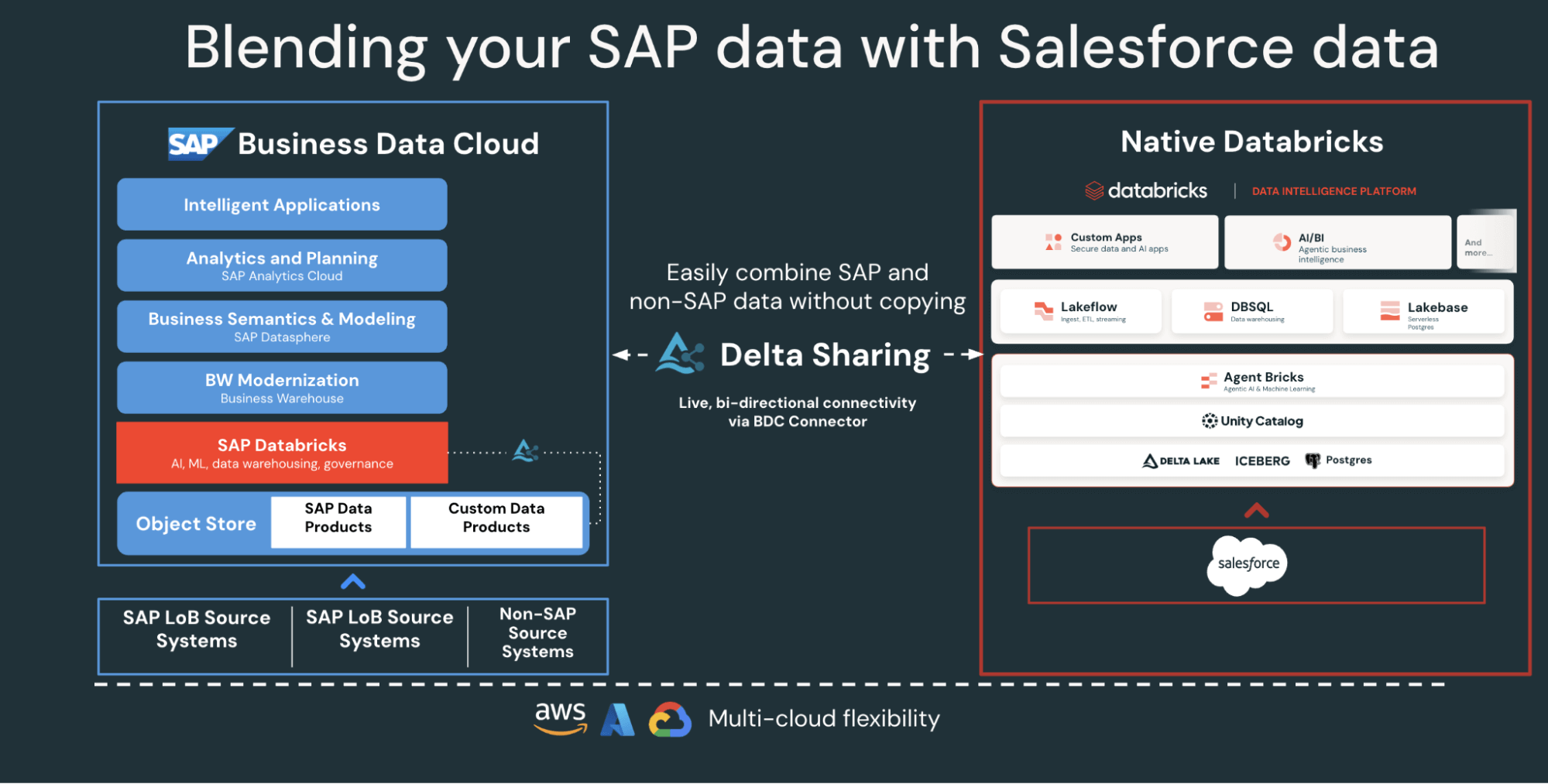

Determine: SAP BDC Connector to Native Databricks(Bi-directional) - Quick Salesforce Incremental ingestion: Lakeflow connects and ingests Salesforce knowledge repeatedly, conserving your datasets recent and constant.

- Unified governance: Unity Catalog enforces permissions, lineage, and auditing across both SAP and Salesforce sources.

- Declarative pipelines: Lakeflow Spark Declarative Pipelines simplify ETL design and orchestration, with automatic optimizations for better performance.

Together, these capabilities let data engineers combine SAP and Salesforce data on one platform, reducing complexity while maintaining enterprise-grade governance.

SAP Salesforce Data Integration Architecture on Databricks

Before building the pipeline, it's useful to understand how these components fit together in Databricks.

At a high level, SAP S/4HANA publishes business data as curated, business-ready, SAP-managed data products in SAP Business Data Cloud (BDC). SAP BDC Connect for Databricks enables secure, zero-copy access to these data products using Delta Sharing. Meanwhile, Lakeflow Connect handles Salesforce ingestion, capturing account, contact, and opportunity data through incremental pipelines.

All incoming data, whether from SAP or Salesforce, is governed in Unity Catalog, which manages lineage and permissions. Data engineers then use Lakeflow Declarative Pipelines to join and transform these datasets into a medallion architecture (bronze, silver, and gold layers). Finally, the gold layer serves as the foundation for analytics and exploration in AI/BI Dashboards and Genie.

This architecture keeps data from both systems synchronized, governed, and ready for analytics and AI, without the overhead of replication or external ETL tools.

How to Build Unified Supplier Analytics

The following steps outline how to connect, blend, and analyze SAP and Salesforce data on Databricks.

Step 1: Ingesting Salesforce Data with Lakeflow Connect

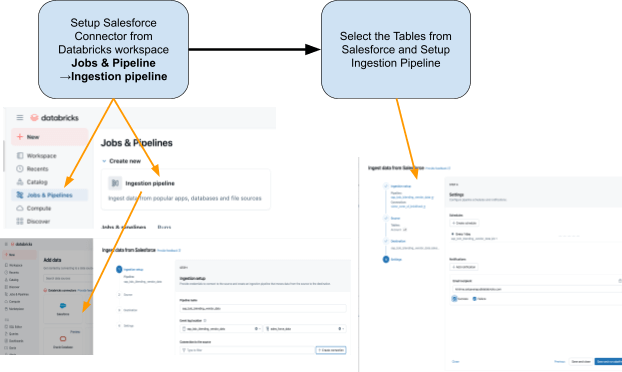

Use Lakeflow Connect to bring Salesforce data into Databricks. You can configure pipelines through the UI or the API. These pipelines manage incremental updates automatically, so data stays current without manual refreshes.

The connector is fully integrated with Unity Catalog governance, Lakeflow Spark Declarative Pipelines for ETL, and Lakeflow Jobs for orchestration.

These are the tables we plan to ingest from Salesforce:

- Account: vendor/supplier details (fields include AccountId, Name, Industry, Type, BillingAddress)

- Contact: vendor contacts (fields include ContactId, AccountId, FirstName, LastName, Email)
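Lakeflow Connect tracks incremental changes for you, so you don't write this logic yourself. As a conceptual sketch only (not Lakeflow Connect's implementation), the high-watermark pattern behind incremental ingestion looks roughly like this: remember the latest change timestamp seen, apply only records modified after it, and upsert them by primary key. It assumes each record carries the standard Salesforce `Id` and `SystemModstamp` fields; `upsert_batch` is a hypothetical helper.

```python
from datetime import datetime
from typing import Any, Dict, List, Optional, Tuple

Record = Dict[str, Any]


def upsert_batch(
    table: Dict[str, Record],
    changes: List[Record],
    watermark: Optional[datetime],
) -> Tuple[Dict[str, Record], Optional[datetime]]:
    """Hypothetical helper: apply records changed after `watermark`, keyed by Salesforce Id."""
    new_watermark = watermark
    for rec in changes:
        modified = rec["SystemModstamp"]
        # Skip records that an earlier run already captured.
        if watermark is not None and modified <= watermark:
            continue
        # Upsert, but never let an older version overwrite a newer one.
        current = table.get(rec["Id"])
        if current is None or current["SystemModstamp"] < modified:
            table[rec["Id"]] = rec
        # Advance the watermark to the newest change seen in this batch.
        if new_watermark is None or modified > new_watermark:
            new_watermark = modified
    return table, new_watermark


accounts: Dict[str, Record] = {}
first_run = [
    {"Id": "001A", "Name": "Acme Supplies", "SystemModstamp": datetime(2024, 1, 1)},
    {"Id": "001B", "Name": "Globex", "SystemModstamp": datetime(2024, 1, 2)},
]
accounts, wm = upsert_batch(accounts, first_run, None)

# A later run sees an update to 001A and an unchanged copy of 001B.
second_run = [
    {"Id": "001A", "Name": "Acme Supplies Ltd", "SystemModstamp": datetime(2024, 1, 5)},
    {"Id": "001B", "Name": "Globex", "SystemModstamp": datetime(2024, 1, 2)},
]
accounts, wm = upsert_batch(accounts, second_run, wm)
print(accounts["001A"]["Name"], wm)  # Acme Supplies Ltd 2024-01-05 00:00:00
```

A production connector also has to handle deleted records and changes that share a timestamp with the watermark; this sketch ignores both.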

Step 2: Access SAP S/4HANA Data with the SAP BDC Connector

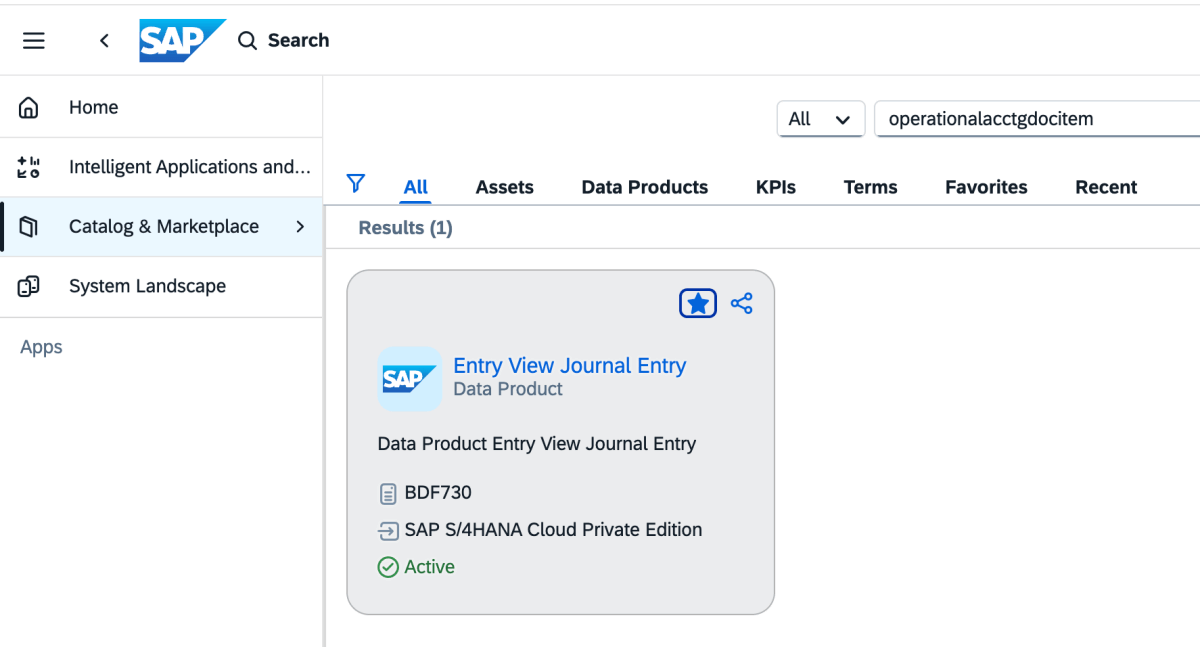

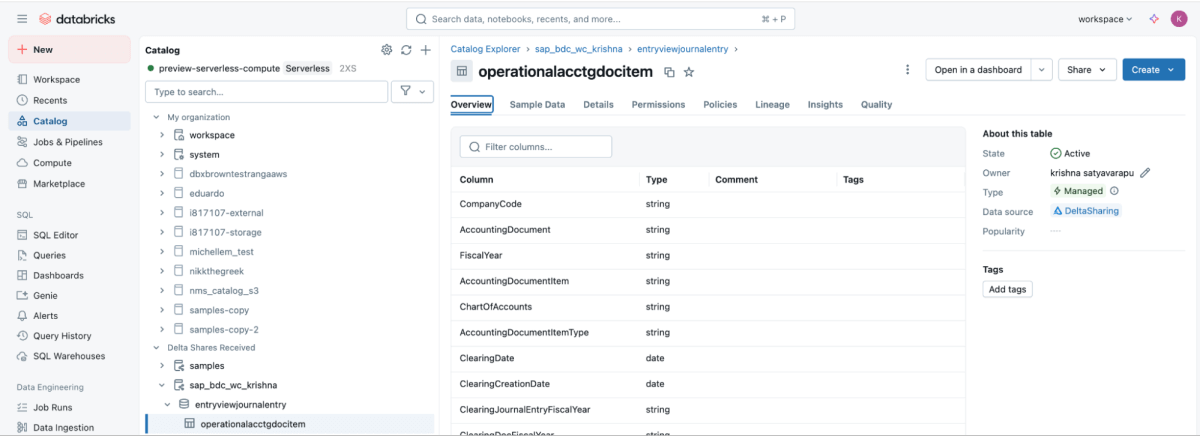

SAP BDC Connect provides live, governed access to SAP S/4HANA vendor payment data directly in Databricks, eliminating traditional ETL. It does this through the SAP BDC data product sap_bdc_working_capital.entryviewjournalentry.operationalacctgdocitem, the Universal Journal line-item view.

This BDC data product maps directly to the SAP S/4HANA CDS view I_JournalEntryItem (Operational Accounting Document Item) on ACDOCA.

For context, in ECC the closest physical structures were BSEG (FI line items) with headers in BKPF, CO postings in COEP, and the open/cleared item indexes BSIK/BSAK (vendors) and BSID/BSAD (customers). In SAP S/4HANA, these BS** objects are part of the simplified data model, in which vendor and G/L line items are centralized in the Universal Journal (ACDOCA), replacing the ECC approach that often required joining multiple separate finance tables.

These are the steps to perform in the SAP BDC cockpit.

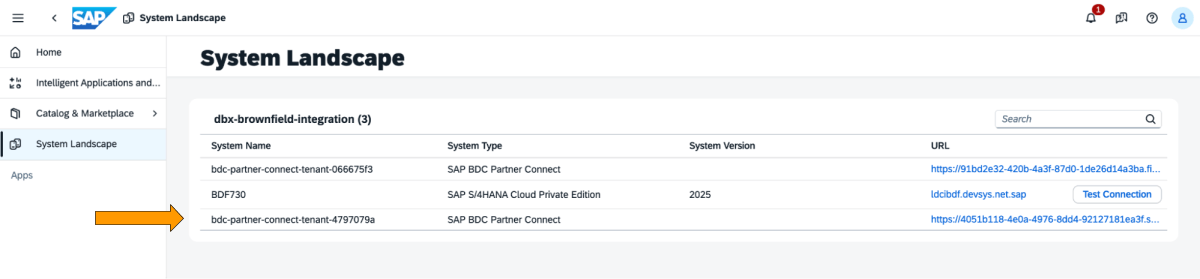

1: Log in to the SAP BDC cockpit and review the SAP BDC formation in the System Landscape. Connect Native Databricks through the SAP BDC Delta Sharing connector. For more information, see the documentation on connecting Native Databricks to SAP BDC so that it becomes part of the formation.

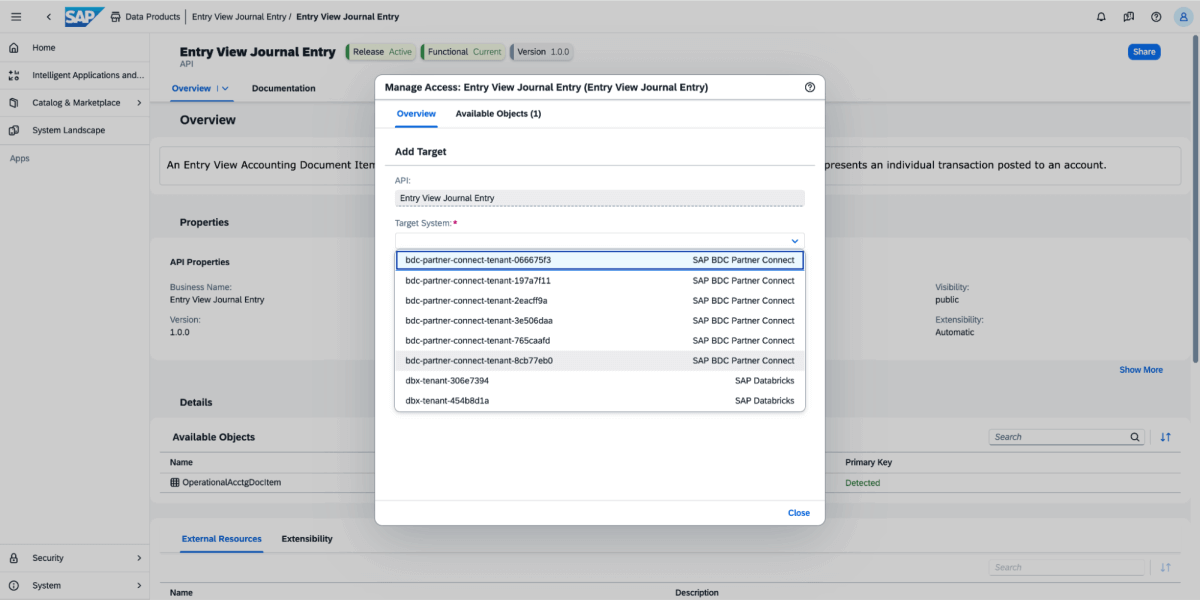

2: Go to Catalog and look for the data product Entry View Journal Entry, as shown below.

3: On the data product, select Share, and then select the target system, as shown in the image below.

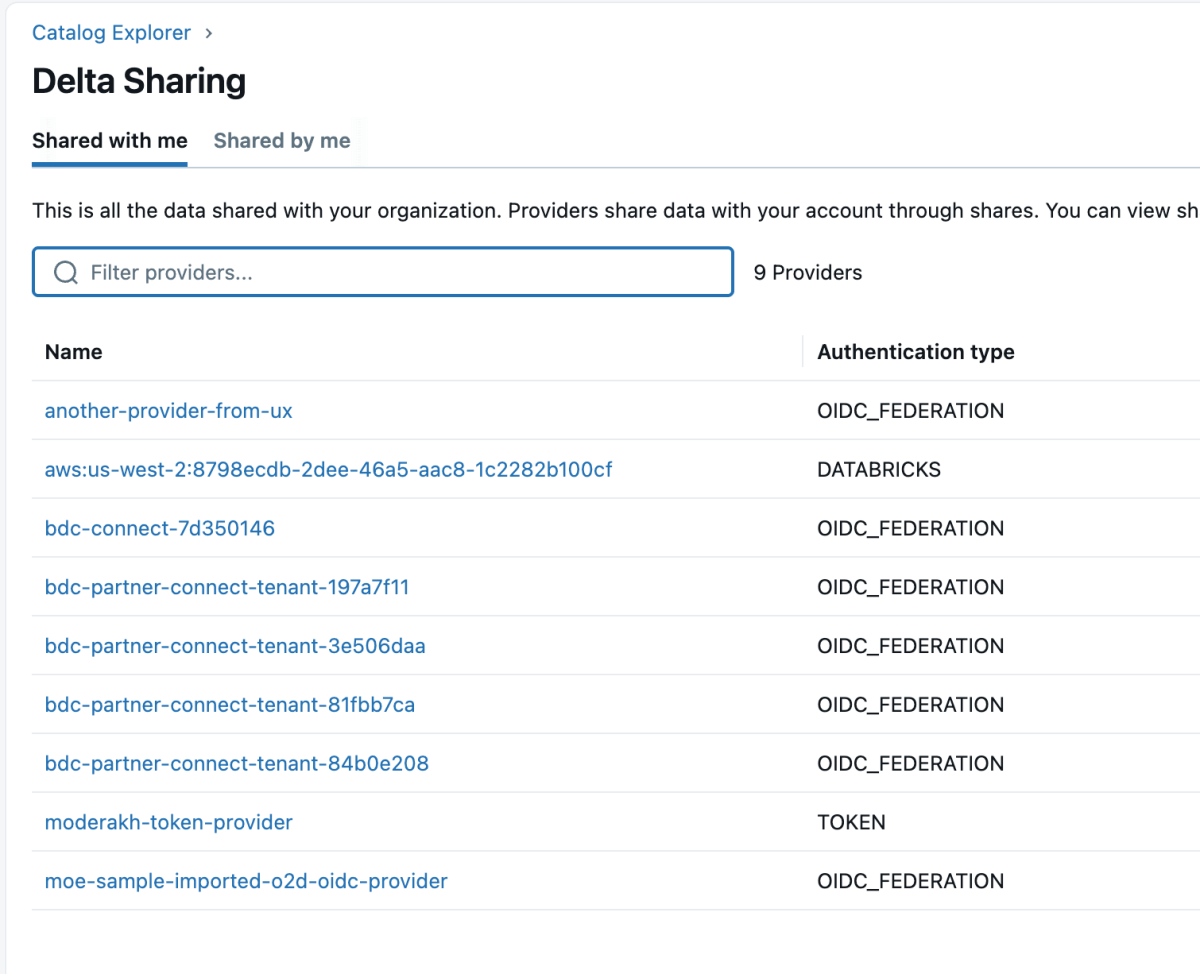

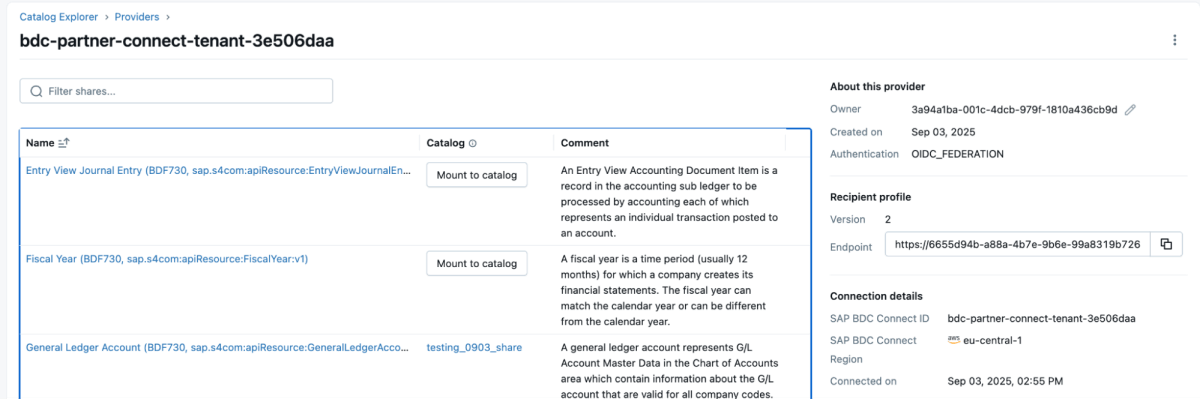

4: Once the data product is shared, it appears as a Delta Share in the Databricks workspace, as shown below. Make sure you have the USE PROVIDER privilege so that you can see these providers.

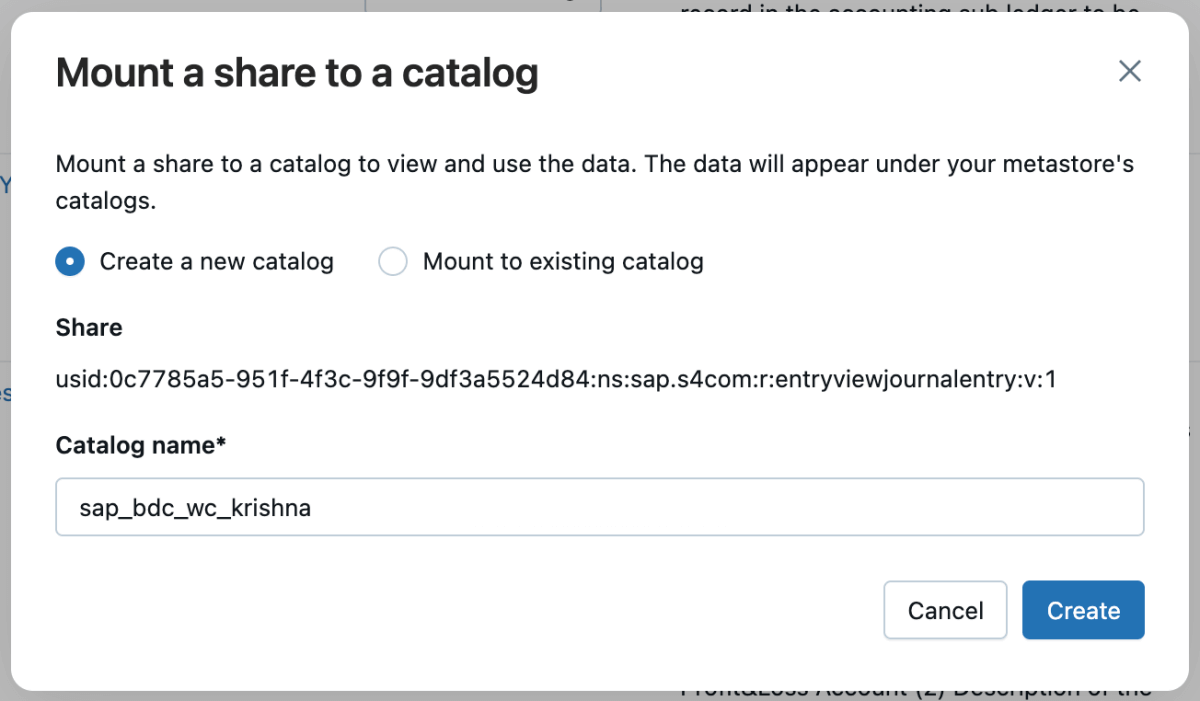

5: You can then mount the share to a catalog, either by creating a new catalog or by mounting it to an existing one.

6: Once the share is mounted, it appears in the catalog.

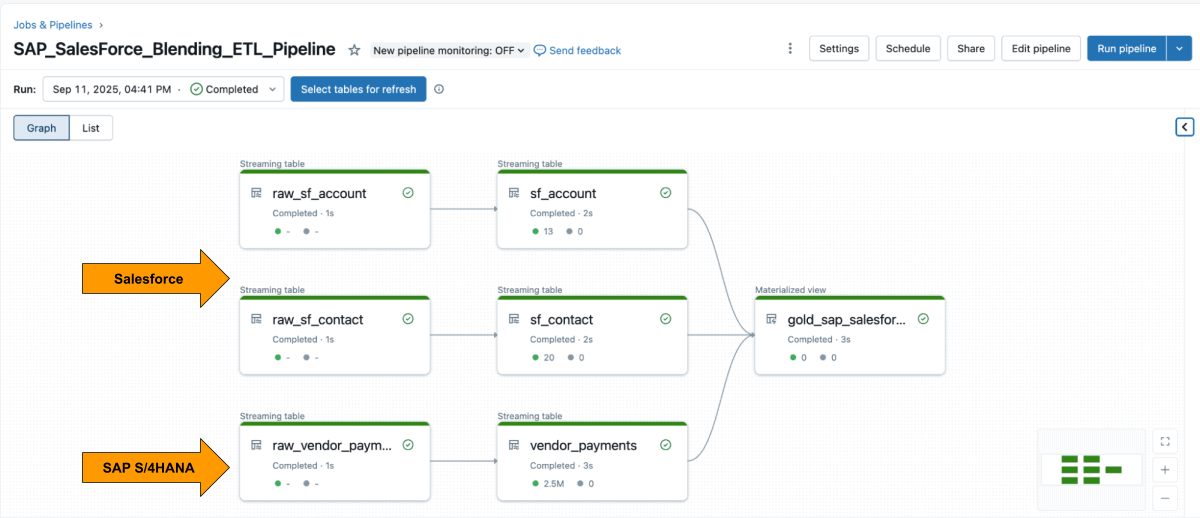
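If you prefer SQL to the UI for steps 4 through 6, Databricks SQL provides `SHOW PROVIDERS`, `SHOW SHARES IN PROVIDER`, and `CREATE CATALOG ... USING SHARE` for the same tasks. The Python sketch below only assembles those statements so you could run them with `spark.sql` in a notebook. The provider and share names are hypothetical placeholders (use the names shown in your workspace), the catalog and schema names are chosen to match the data product path used in this article, and it covers only the "new catalog" option.

```python
from typing import List


def quote_ident(name: str) -> str:
    """Backtick-quote a SQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def mount_share_statements(provider: str, share: str, catalog: str, schema: str) -> List[str]:
    """Assemble SQL that locates a Delta Sharing provider and mounts one share as a new catalog."""
    p, s, c = quote_ident(provider), quote_ident(share), quote_ident(catalog)
    return [
        # List the providers visible to you (see the USE PROVIDER note in step 4).
        "SHOW PROVIDERS",
        # List the shares this provider has shared with you.
        f"SHOW SHARES IN PROVIDER {p}",
        # Mount the share as a new, read-only catalog.
        f"CREATE CATALOG IF NOT EXISTS {c} USING SHARE {p}.{s}",
        # Confirm the data product tables are visible in the catalog.
        f"SHOW TABLES IN {c}.{quote_ident(schema)}",
    ]


statements = mount_share_statements(
    provider="sap_bdc_provider",    # hypothetical: use the provider name from your workspace
    share="working_capital_share",  # hypothetical: use the share name from your workspace
    catalog="sap_bdc_working_capital",
    schema="entryviewjournalentry",
)
for stmt in statements:
    print(stmt)  # in a Databricks notebook you could run: spark.sql(stmt)
```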

Step 3: Blending the ETL Pipeline in Databricks Using Lakeflow Declarative Pipelines

With both sources available, use Lakeflow Declarative Pipelines to build an ETL pipeline that blends Salesforce and SAP data.

In this example, the Salesforce Account table includes the custom field SAP_ExternalVendorId__c, which holds the matching vendor ID from SAP. This field becomes the primary join key for your silver layer.
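The join only works if both sides store the key in the same format. Here is a common mismatch, assumed for illustration: SAP keeps purely numeric supplier numbers in a 10-character field padded with leading zeros (its ALPHA conversion), while a Salesforce text field may hold the unpadded value, so `100234` never equals `0000100234`. The hypothetical helper below normalizes the Salesforce value, assuming the SAP side exposes the zero-padded internal format. In a SQL pipeline, the same rule could be expressed with `trim` and `lpad`.

```python
from typing import Optional

SAP_VENDOR_ID_LENGTH = 10  # SAP supplier numbers (LIFNR) are 10 characters long


def normalize_vendor_id(raw: Optional[str]) -> Optional[str]:
    """Hypothetical helper: convert a vendor ID to SAP's zero-padded internal format.

    Mirrors SAP's ALPHA conversion rule: purely numeric IDs are left-padded with
    zeros to 10 characters; alphanumeric IDs are kept as-is after trimming.
    """
    if raw is None:
        return None
    value = raw.strip()
    if not value:
        return None  # a blank field means no SAP vendor is linked
    if value.isascii() and value.isdigit() and len(value) <= SAP_VENDOR_ID_LENGTH:
        return value.zfill(SAP_VENDOR_ID_LENGTH)
    return value


print(normalize_vendor_id(" 100234 "))    # 0000100234
print(normalize_vendor_id("0000100234"))  # 0000100234
print(normalize_vendor_id("EXT-77"))      # EXT-77
```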

Lakeflow Spark Declarative Pipelines let you define transformation logic in SQL while Databricks handles optimization and orchestrates the pipelines automatically.

Instance: Construct curated business-level tables

This query creates a curated, business-level materialized view that unifies vendor payment records from SAP with vendor details from Salesforce, ready for analytics and reporting.

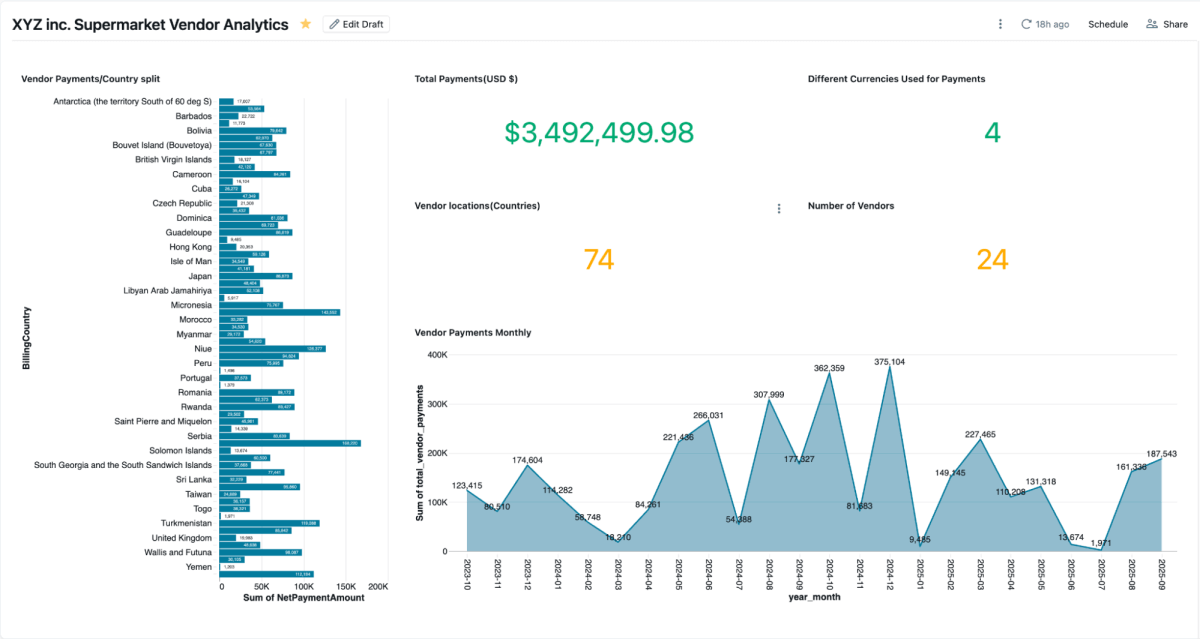
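As a rough model of what a materialized view like this computes, here is a plain-Python sketch. It inner-joins SAP vendor line items to Salesforce accounts on the SAP vendor ID, attaches one contact per account, and splits spend into paid and open amounts. Note the assumptions. The SAP fields `supplier`, `amount`, and `clearing_date` are simplified stand-ins rather than the data product's actual column names. Amounts are positive, an item with a clearing date counts as paid, and vendor IDs are already normalized. `build_vendor_gold` and `VendorSummary` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class VendorSummary:
    """One row of a hypothetical gold table: SAP spend plus Salesforce vendor details."""
    vendor_id: str
    name: str
    billing_country: Optional[str]
    contact_email: Optional[str]
    paid_amount: float = 0.0
    open_amount: float = 0.0


def build_vendor_gold(
    sap_items: List[dict], sf_accounts: List[dict], sf_contacts: List[dict]
) -> Dict[str, VendorSummary]:
    # Index Salesforce accounts by the SAP vendor ID they reference.
    account_by_vendor = {
        a["SAP_ExternalVendorId__c"]: a for a in sf_accounts if a.get("SAP_ExternalVendorId__c")
    }
    # Keep the first contact seen per account (a simplification).
    contact_by_account: Dict[str, dict] = {}
    for c in sf_contacts:
        contact_by_account.setdefault(c["AccountId"], c)

    gold: Dict[str, VendorSummary] = {}
    for item in sap_items:
        account = account_by_vendor.get(item["supplier"])
        if account is None:
            continue  # inner join: drop SAP items with no matching Salesforce account
        summary = gold.get(item["supplier"])
        if summary is None:
            contact = contact_by_account.get(account["Id"])  # Id is the Account primary key
            summary = VendorSummary(
                vendor_id=item["supplier"],
                name=account["Name"],
                billing_country=account.get("BillingCountry"),
                contact_email=contact["Email"] if contact else None,
            )
            gold[item["supplier"]] = summary
        # Cleared items count as paid; uncleared items are still open.
        if item["clearing_date"] is not None:
            summary.paid_amount += item["amount"]
        else:
            summary.open_amount += item["amount"]
    return gold


sap_items = [
    {"supplier": "0000100234", "amount": 1200.0, "clearing_date": "2024-03-05"},
    {"supplier": "0000100234", "amount": 300.0, "clearing_date": None},
    {"supplier": "0000100999", "amount": 50.0, "clearing_date": "2024-03-06"},
]
sf_accounts = [
    {"Id": "001A", "Name": "Acme Supplies", "BillingCountry": "Germany",
     "SAP_ExternalVendorId__c": "0000100234"},
]
sf_contacts = [{"AccountId": "001A", "Email": "ap@acme.example"}]

gold = build_vendor_gold(sap_items, sf_accounts, sf_contacts)
print(gold["0000100234"])
```

The inner join drops SAP vendors that have no Salesforce account (vendor 0000100999 in the sample). A left join would keep them with empty CRM fields, which may suit spend reporting better.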

Step 4: Analyze with AI/BI Dashboards and Genie

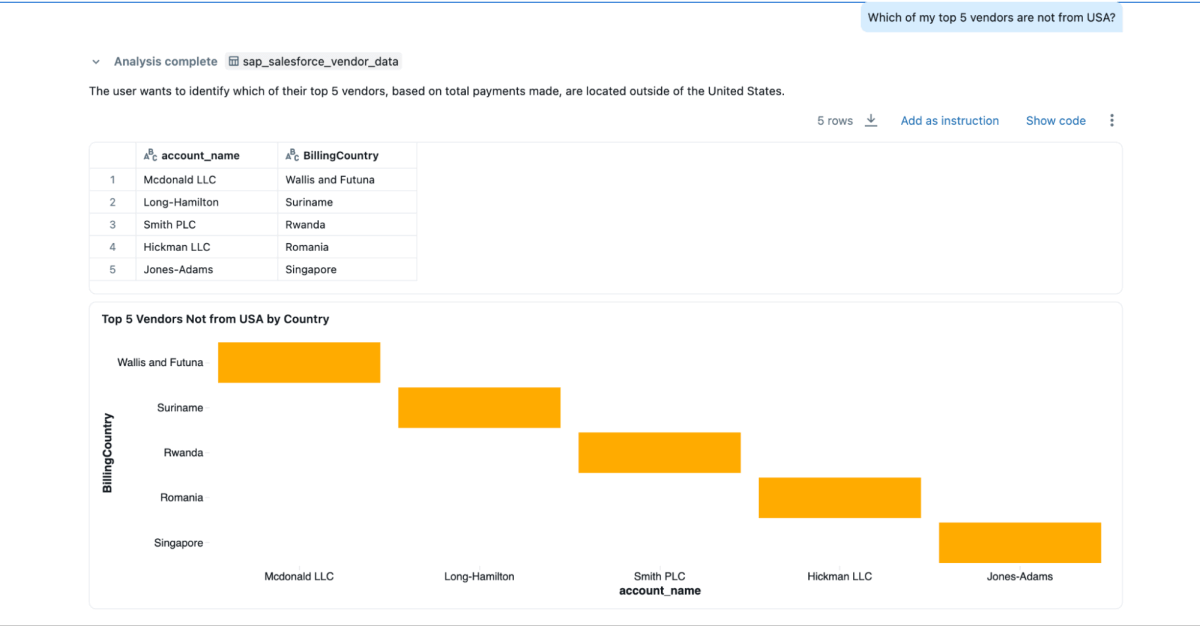

Once the materialized view is created, you can explore it directly in AI/BI Dashboards, which let teams visualize vendor payments, outstanding balances, and spend by region. Dashboards support dynamic filtering, search, and collaboration, all governed by Unity Catalog. Genie enables natural-language exploration of the same data.

You can create Genie spaces on this blended data and ask questions that couldn't be answered if the data were siloed in Salesforce and SAP:

- “Who are my top 3 vendors that I pay the most, and what is their contact information?”

- “What are the billing addresses for the top 3 vendors?”

- “Which of my top 5 vendors are not from the USA?”
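Genie translates questions like these into SQL over the governed tables. To make the logic concrete, the plain-Python sketch below answers the first and third questions over a hypothetical blended table. It only works because spend (from SAP) and contact and address details (from Salesforce) sit in the same row. The `VendorRow` fields and sample values are illustrative assumptions, and this is not the SQL Genie generates.

```python
from typing import List, NamedTuple


class VendorRow(NamedTuple):
    """A row of a hypothetical blended gold table."""
    vendor_id: str        # SAP supplier number
    name: str             # Salesforce Account name
    billing_country: str  # from the Salesforce billing address
    contact_email: str    # from the Salesforce Contact
    total_paid: float     # from SAP line items


def top_vendors(rows: List[VendorRow], n: int) -> List[VendorRow]:
    """The n vendors with the highest total paid, highest first."""
    return sorted(rows, key=lambda r: r.total_paid, reverse=True)[:n]


def top_vendors_outside(rows: List[VendorRow], n: int, country: str) -> List[VendorRow]:
    """Among the top-n vendors, those billed outside `country`."""
    return [r for r in top_vendors(rows, n) if r.billing_country != country]


rows = [
    VendorRow("0000100001", "Acme Supplies", "Germany", "ap@acme.example", 9000.0),
    VendorRow("0000100002", "Globex", "USA", "billing@globex.example", 7500.0),
    VendorRow("0000100003", "Initech", "USA", "finance@initech.example", 6200.0),
    VendorRow("0000100004", "Umbrella", "Japan", "ar@umbrella.example", 4100.0),
    VendorRow("0000100005", "Stark Parts", "USA", "pay@stark.example", 3900.0),
    VendorRow("0000100006", "Wayne Metals", "Canada", "ap@wayne.example", 1200.0),
]

# "Who are my top 3 vendors ... and what is their contact information?"
print([(r.name, r.contact_email) for r in top_vendors(rows, 3)])
# "Which of my top 5 vendors are not from the USA?"
print([r.name for r in top_vendors_outside(rows, 5, "USA")])  # ['Acme Supplies', 'Umbrella']
```

Salesforce billing countries are often free text ("USA", "US", "United States"), so country values need standardizing before a filter like this is reliable.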

Business Outcomes

By combining SAP and Salesforce data on Databricks, organizations gain a complete and trusted view of supplier performance, payments, and relationships. This unified approach delivers both operational and strategic benefits:

- Faster dispute resolution: Teams can view payment details and supplier contact information side by side, making it easier to investigate issues and resolve them quickly.

- Early-pay savings: With payment terms, clearing dates, and net amounts in one place, finance teams can easily identify opportunities for early payment discounts.

- Cleaner vendor master: Joining on the SAP_ExternalVendorId__c field helps identify and resolve duplicate or mismatched supplier records, keeping vendor data accurate and consistent across systems.

- Audit-ready governance: Unity Catalog ensures all data is governed with consistent lineage, permissions, and auditing, so analytics, AI models, and reports rely on the same trusted source.
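The duplicate and mismatch detection described in the vendor-master bullet can be sketched in plain Python as three set comparisons on the join key. This assumes keys are already normalized and that you have extracted the set of supplier IDs present in the SAP data. The function name and the three categories are illustrative choices, not a prescribed procedure.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set


def reconcile_vendor_master(sf_accounts: List[dict], sap_vendor_ids: Set[str]) -> dict:
    """Hypothetical data-quality check on the SAP_ExternalVendorId__c join key."""
    accounts_by_vendor: Dict[str, List[str]] = defaultdict(list)
    for acct in sf_accounts:
        vendor_id: Optional[str] = acct.get("SAP_ExternalVendorId__c")
        if vendor_id:
            accounts_by_vendor[vendor_id].append(acct["Id"])
    linked = set(accounts_by_vendor)
    return {
        # Several Salesforce accounts claim the same SAP vendor: likely duplicates.
        "duplicates": {v: ids for v, ids in accounts_by_vendor.items() if len(ids) > 1},
        # Salesforce points at an ID that SAP doesn't have: a typo or stale reference.
        "unknown_in_sap": sorted(linked - sap_vendor_ids),
        # SAP vendors that no Salesforce account references.
        "unlinked_sap_vendors": sorted(sap_vendor_ids - linked),
    }


sf_accounts = [
    {"Id": "001A", "SAP_ExternalVendorId__c": "0000100234"},
    {"Id": "001B", "SAP_ExternalVendorId__c": "0000100234"},
    {"Id": "001C", "SAP_ExternalVendorId__c": "0000199999"},
    {"Id": "001D", "SAP_ExternalVendorId__c": None},
]
report = reconcile_vendor_master(sf_accounts, {"0000100234", "0000100500"})
print(report)
```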

Together, these outcomes help organizations streamline vendor management and improve financial efficiency while maintaining the governance and security required for enterprise systems.

Conclusion

Unifying supplier data across SAP and Salesforce doesn't have to mean rebuilding pipelines or managing duplicate systems.

With Databricks, teams can work from a single, governed foundation that integrates ERP and CRM data in real time. The combination of zero-copy SAP BDC access, incremental Salesforce ingestion, unified governance, and declarative pipelines replaces integration overhead with insight.

The result goes beyond faster reporting. It delivers a connected view of supplier performance that improves purchasing decisions, strengthens vendor relationships, and unlocks measurable savings. And because it's built on the Databricks Data Intelligence Platform, the same SAP data that feeds payments and invoices can also drive dashboards, AI models, and conversational analytics, all from one trusted source.

SAP data is often the backbone of enterprise operations. By integrating SAP Business Data Cloud, Delta Sharing, and Unity Catalog, organizations can extend this architecture beyond supplier analytics into working-capital optimization, inventory management, and demand forecasting.

This approach turns SAP data from a system of record into a system of intelligence, where every dataset is live, governed, and ready for use across the enterprise.