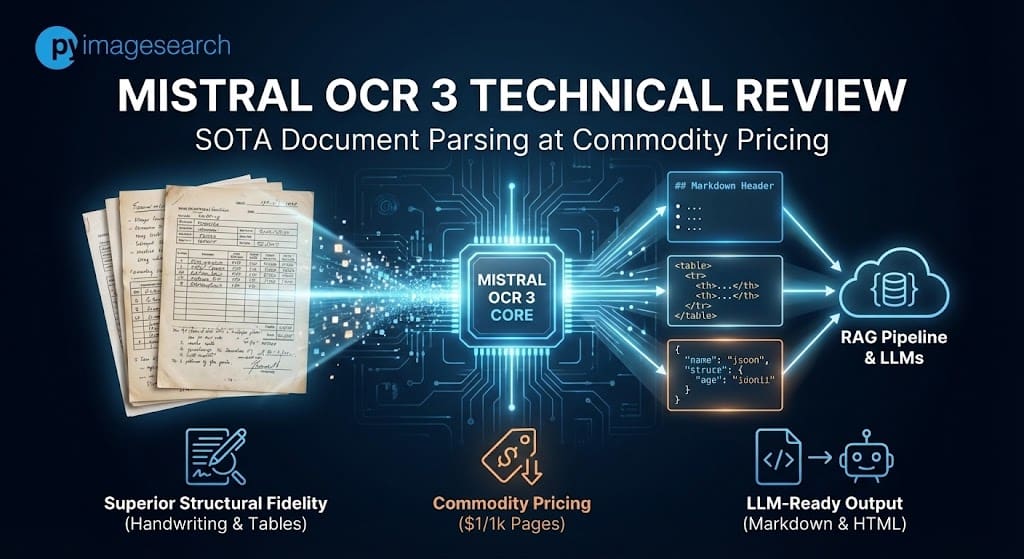

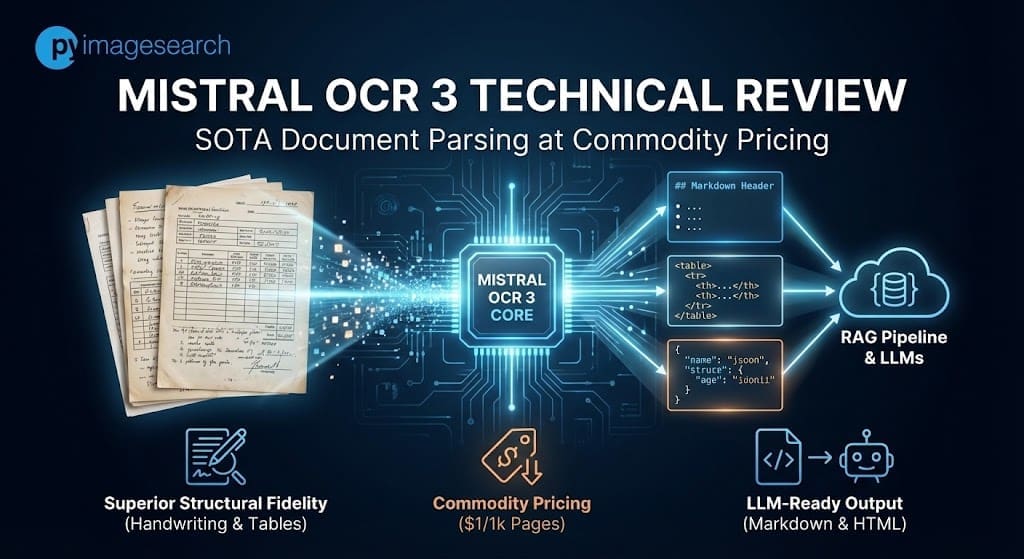

The commoditization of Optical Character Recognition (OCR) has traditionally been a race to the bottom on price, often at the expense of structural fidelity. The release of Mistral OCR 3, however, signals a distinct shift in the market. By claiming state-of-the-art accuracy on complex tables and handwriting while undercutting AWS Textract and Google Document AI by significant margins, Mistral is positioning its proprietary model not merely as a cheaper alternative but as a technically superior parsing engine for RAG (Retrieval-Augmented Generation) pipelines.

This technical analysis dissects the architecture, benchmark performance against the hyperscalers, and the operational realities of deploying Mistral OCR 3 in production environments.

The Innovation: Structure-Aware Architecture

Mistral OCR 3 is a proprietary, efficient model optimized specifically for converting document layouts into LLM-ready Markdown and HTML. Unlike general-purpose multimodal LLMs, it focuses on structure preservation, particularly table reconstruction and dense form parsing, and is available via the mistral-ocr-2512 endpoint.

While traditional OCR engines (like Tesseract or early AWS Textract iterations) focused primarily on bounding-box coordinates and raw text extraction, Mistral OCR 3 is architected to solve the "structure loss" problem that plagues modern RAG pipelines.

Mistral describes the model as much smaller than competing solutions [1], yet it outperforms larger vision-language models on specific dense-document tasks. Its primary innovation lies in its output modality: rather than returning JSON coordinates (which require post-processing to reconstruct the layout), Mistral OCR 3 outputs Markdown enriched with HTML-based table reconstruction [1].

This implies the model is trained to recognize document semantics, identifying that a grid of numbers is a table.
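Because tables arrive as HTML inside the Markdown, turning them into row data needs only a standard HTML parser rather than any coordinate geometry. The sketch below uses Python's built-in `html.parser` and assumes tables appear as plain `<table>`/`<tr>`/`<td>`/`<th>` markup; `TableGridParser` and `html_table_to_rows` are hypothetical names, merged cells are expanded only for `colspan` (not `rowspan`), and unclosed cells are not handled.

```python
from html.parser import HTMLParser
from typing import List


class TableGridParser(HTMLParser):
    """Collect <table> cells into rows, repeating colspan cells so columns stay aligned."""

    def __init__(self):
        super().__init__()
        self.rows: List[List[str]] = []  # finished rows of cell text
        self._row = None                 # row currently being built
        self._cell = None                # text fragments of the current cell
        self._colspan = 1

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []
            span = dict(attrs).get("colspan") or "1"
            self._colspan = int(span) if span.isdigit() else 1

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            text = " ".join("".join(self._cell).split())  # normalize whitespace
            # A merged (colspan) cell's text is repeated once per spanned column.
            self._row.extend([text] * self._colspan)
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Markdown text outside table cells is ignored.
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_rows(markdown: str) -> List[List[str]]:
    """Extract every HTML table row found in an OCR Markdown string."""
    parser = TableGridParser()
    parser.feed(markdown)
    parser.close()
    return parser.rows
```

Expanding merged cells this way keeps every row the same width, which is what downstream chunking or DataFrame conversion usually expects.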

| Metric (%) | Mistral OCR 3 | Azure Document Intelligence | DeepSeek OCR | Google DocAI |
|---|---|---|---|---|
| Handwritten Accuracy | 88.9 | 78.2 | 57.2 | 73.9 |
| Historical Scanned Accuracy | 96.7 | 83.7 | 81.1 | 87.1 |

Note: The 57.2 score for DeepSeek highlights that general-purpose open-weight models still struggle with cursive variance compared to specialized proprietary endpoints.

Section 2: Structural Integrity (Tables & Forms)

For financial analysis and RAG, table fidelity is binary: a table is either usable or it isn't. Mistral OCR 3 demonstrates superior detection of merged cells and headers.
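To make the usable-or-not idea concrete, here is a minimal pass/fail check, assuming a table has already been converted into a list of rows (for example, by parsing the HTML tables in the OCR output). The criteria (at least one data row, a rectangular grid, a non-empty header) and the name `is_usable_table` are our own illustrative choices, not part of Mistral's API or its benchmarks.

```python
from typing import List


def is_usable_table(rows: List[List[str]]) -> bool:
    """Hypothetical structural check: a table is usable only if its grid is intact."""
    if len(rows) < 2:  # need a header row plus at least one data row
        return False
    width = len(rows[0])
    # Ragged rows usually mean a merged cell or a column was lost in extraction.
    if width == 0 or any(len(row) != width for row in rows):
        return False
    # The header must carry at least some text to label the columns.
    return any(cell.strip() for cell in rows[0])
```

A check like this only catches broken structure; it says nothing about whether the digits inside the cells are correct.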

| Metric (%) | Mistral OCR 3 | AWS Textract | Azure Document Intelligence |
|---|---|---|---|
| Complex Tables Accuracy | 96.6 | 84.8 | 85.9 |
| Forms Accuracy | 95.9 | 84.5 | 86.2 |
| Multilingual (English) | 98.6 | 93.9 | 93.5 |

Figure 2: Comparative accuracy across document tasks. Note the significant delta in the "Complex Tables" and "Handwritten" categories.

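To put numbers on that delta, the short script below computes Mistral OCR 3's lead in percentage points over each competitor, using only the scores reported in the benchmark tables; it adds no new measurements. `SCORES` and `deltas` are our own names.

```python
from typing import Dict

# Accuracy scores (%) as reported in the benchmark tables of this article.
SCORES = {
    "Handwritten": {"Mistral OCR 3": 88.9, "Azure Document Intelligence": 78.2,
                    "DeepSeek OCR": 57.2, "Google DocAI": 73.9},
    "Complex Tables": {"Mistral OCR 3": 96.6, "AWS Textract": 84.8,
                       "Azure Document Intelligence": 85.9},
}


def deltas(scores: Dict[str, float], ref: str = "Mistral OCR 3") -> Dict[str, float]:
    """Percentage-point lead of `ref` over every other system, rounded to 0.1."""
    base = scores[ref]
    return {name: round(base - s, 1) for name, s in scores.items() if name != ref}


for task, task_scores in SCORES.items():
    print(task, deltas(task_scores))
```

For example, the handwriting gap over DeepSeek OCR works out to 31.7 points, and the complex-table gap over AWS Textract to 11.8 points.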

Balanced Critique: Edge Cases and Failure Modes

Despite high aggregate scores, early adopters report inconsistency on complex multi-column layouts and sensitivity to input image format. While the model excels at logical structure, developers should be aware of specific quirks in how it handles PDF vs. JPEG input.
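One practical way to test this format sensitivity on your own documents is to submit the same page once as a PDF and once as a JPEG and compare the outputs. The sketch below only builds the two request bodies and makes no network call. It assumes the OCR endpoint accepts base64 data URLs inside a `document` field, typed `document_url` for PDFs and `image_url` for images; that schema is an assumption to verify against Mistral's API reference, and the helper names are hypothetical. Rasterizing the PDF page to JPEG is left to your imaging library of choice.

```python
import base64
from pathlib import Path

# MIME types for the two input formats being compared.
MIME_TYPES = {".pdf": "application/pdf", ".jpg": "image/jpeg", ".jpeg": "image/jpeg"}


def build_document_payload(filename: str, data: bytes) -> dict:
    """Wrap raw file bytes as a base64 data URL.

    ASSUMPTION: PDFs go in a "document_url" field and images in an "image_url"
    field; confirm the exact schema against Mistral's OCR API reference.
    """
    suffix = Path(filename).suffix.lower()
    mime = MIME_TYPES.get(suffix)
    if mime is None:
        raise ValueError(f"unsupported file type: {suffix!r}")
    data_url = f"data:{mime};base64,{base64.b64encode(data).decode('ascii')}"
    if mime == "application/pdf":
        return {"type": "document_url", "document_url": data_url}
    return {"type": "image_url", "image_url": data_url}


def build_ocr_request(filename: str, data: bytes, model: str = "mistral-ocr-2512") -> dict:
    """Hypothetical request body for one OCR call; the model ID is the one cited above."""
    return {"model": model, "document": build_document_payload(filename, data)}
```

Sending both variants of the same page through an otherwise identical request keeps the comparison fair: only the input format changes.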

At PyImageSearch, we emphasize that benchmark scores rarely tell the whole story. Analysis of early-adopter feedback and community testing reveals specific constraints:

- Format Sensitivity (PDF vs. Image): Developers have noted a "JPEG vs. PDF" inconsistency. In some instances, converting a PDF page to a high-resolution JPEG before submission yielded better table extraction than submitting the raw PDF. This suggests that the API's internal PDF rasterization step may introduce noise.

- Multi-Column Hallucinations: While table extraction is state-of-the-art, "complex multi-column layouts" (such as magazine-style formatting with irregular text flows) remain a challenge. The model occasionally tries to force a table structure onto non-tabular columnar text.

- The "Black Box" Limitation: Unlike open-weight alternatives, this is a strictly SaaS offering. You cannot fine-tune the model on niche proprietary datasets (e.g., specific medical forms) as you could with a local Vision Transformer.

- Production Supervision: Despite a 74% win rate over version 2, enterprise users caution that "clean" structured output can mask OCR hallucination errors. High-fidelity Markdown looks correct to the human eye even when specific digits are flipped, which makes Human-in-the-Loop (HITL) verification necessary for financial data.
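One lightweight safeguard against flipped digits is an arithmetic cross-check: many financial tables include a total, so the extracted line items should add up to it. A minimal sketch, assuming you have already pulled the line-item and total cells out of the OCR output as strings; `parse_amount`, `needs_review`, and the one-cent tolerance are illustrative choices. A failed check routes the table to a human rather than correcting it, and the check cannot catch errors that happen to cancel out, so it complements HITL review instead of replacing it.

```python
from decimal import Decimal, InvalidOperation
from typing import List, Optional


def parse_amount(text: str) -> Optional[Decimal]:
    """Convert a cell such as '1,234.50', '$80' or '(12.00)' to a Decimal; None if unparseable."""
    cleaned = text.strip().replace(",", "").replace("$", "")
    negative = cleaned.startswith("(") and cleaned.endswith(")")  # accounting-style negatives
    if negative:
        cleaned = cleaned[1:-1]
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        return None
    if not value.is_finite():  # reject strings like "NaN" or "Infinity"
        return None
    return -value if negative else value


def needs_review(line_items: List[str], stated_total: str, tolerance: str = "0.01") -> bool:
    """Hypothetical HITL trigger: True when the line items do not add up to the stated total."""
    amounts = [parse_amount(cell) for cell in line_items]
    total = parse_amount(stated_total)
    if total is None or any(amount is None for amount in amounts):
        return True  # an unreadable number is itself a reason to escalate
    return abs(sum(amounts) - total) > Decimal(tolerance)
```

`Decimal` is used instead of `float` so that currency values such as 0.10 compare exactly.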

Pricing & Deployment Specs

Mistral OCR 3 aggressively disrupts the market with a Batch API price of $1 per 1,000 pages, undercutting legacy providers by up to 97%. It is a purely SaaS model, which eliminates local VRAM requirements but introduces data-privacy considerations for regulated industries.

The economic argument for Mistral OCR 3 is as strong as the technical one. For high-volume archival digitization, the cost difference is non-trivial.

| Feature | Spec / Cost |
|---|---|
| Model ID | mistral-ocr-2512 |
| Standard API Cost | $2 per 1,000 pages [1] |
| Batch API Cost | $1 per 1,000 pages (50% discount) [1] |
| Hardware Requirements | None (SaaS). Available via the API or the Document AI Playground. |
| Output Format | Markdown, Structured JSON, HTML (for tables) |

Figure 3: Improvement rates. Mistral OCR 3 achieves a 74% overall win rate against its predecessor, v2.


The Batch API pricing is particularly notable for developers migrating from AWS Textract, where complex table and form extraction can cost significantly more per page depending on the region and the feature flags used.
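The cost comparison is simple per-page arithmetic, sketched below. The Mistral prices come from the pricing table; the one-million-page backlog is hypothetical, and the $15 competitor rate is an assumed input taken from the top of the $1.50 to $15.00 range cited in the FAQ, not a quote for any specific provider configuration.

```python
from decimal import Decimal

# Per-1,000-page prices (USD) from the pricing table.
MISTRAL_STANDARD_PER_1K = Decimal("2")
MISTRAL_BATCH_PER_1K = Decimal("1")


def ocr_cost(pages: int, price_per_1k: Decimal) -> Decimal:
    """Total cost for `pages` pages at a per-1,000-page price."""
    return price_per_1k * pages / 1000


def savings_fraction(ours: Decimal, theirs: Decimal) -> Decimal:
    """Fraction saved by paying `ours` instead of `theirs` per 1,000 pages."""
    return 1 - ours / theirs


pages = 1_000_000                   # hypothetical archival backlog
competitor_per_1k = Decimal("15")   # assumed competitor rate (top of the FAQ range)

print("Batch:", ocr_cost(pages, MISTRAL_BATCH_PER_1K))
print("Standard:", ocr_cost(pages, MISTRAL_STANDARD_PER_1K))
print("Competitor:", ocr_cost(pages, competitor_per_1k))
saved = savings_fraction(MISTRAL_BATCH_PER_1K, competitor_per_1k)
print(f"Savings vs competitor: {float(saved):.1%}")
```

With these inputs, the backlog costs $1,000 in batch mode versus $15,000 at the assumed competitor rate, roughly 93% less. Reaching the 97% figure would require a competitor rate of about $33 or more per 1,000 pages, since 1 - 1/33.3 is approximately 0.97.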

FAQ: Mistral OCR 3

How does Mistral OCR 3 pricing compare to AWS Textract and Google Document AI? Mistral OCR 3 costs $1 per 1,000 pages via the Batch API [1]. By comparison, AWS Textract and Google Document AI can cost between $1.50 and $15.00 per 1,000 pages depending on advanced features (like Tables or Forms), making Mistral significantly cheaper for high-volume processing.

Can Mistral OCR 3 recognize cursive and messy handwriting? Yes. Benchmarks show it achieves 88.9% accuracy on handwriting, outperforming Azure (78.2%) and DeepSeek (57.2%). Community tests, such as the "Santa Letter" demo, confirmed its ability to parse messy cursive.

What are the differences between Mistral OCR 3 and Pixtral Large? Mistral OCR 3 is a specialized model optimized for document parsing, table reconstruction, and Markdown output [1]. Pixtral Large is a general-purpose multimodal LLM. OCR 3 is smaller, faster, and cheaper for dedicated document tasks.

How do I use the Mistral OCR 3 Batch API to lower costs? Developers can specify the batch processing endpoint when making API requests. Documents are then processed asynchronously (ideal for archival backlogs) at a 50% discount, bringing the cost to $1 per 1,000 pages [1].
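Batch jobs are typically submitted as a file of individual requests. A minimal sketch that writes such a file in JSON Lines format, one request per document; the `custom_id`/`body` record layout, the use of document URLs, and the assumption that the model ID (mistral-ocr-2512) is chosen when the batch job is created are all assumptions to verify against Mistral's batch API documentation. Uploading the file and creating the job are not shown, and `build_batch_jsonl` is a hypothetical helper.

```python
import json
from typing import List


def build_batch_jsonl(document_urls: List[str]) -> str:
    """Serialize one OCR request per document as JSON Lines.

    ASSUMPTION: each line holds a "custom_id" and the OCR request "body";
    check Mistral's batch documentation for the exact schema.
    """
    records = (
        {
            "custom_id": str(index),  # lets you match results back to inputs
            "body": {"document": {"type": "document_url", "document_url": url}},
        }
        for index, url in enumerate(document_urls)
    )
    return "".join(json.dumps(record) + "\n" for record in records)
```

Writing the returned string to a `.jsonl` file produces one self-contained request per line, which keeps very large backlogs easy to split across several jobs.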

Is Mistral OCR 3 available as an open-weight model? No. Mistral OCR 3 is currently a proprietary model available only via the Mistral API and the Document AI Playground.

Citations

[1] Mistral AI, “Introducing Mistral OCR 3”.
