Hands On For all the hype surrounding them, AI agents are just another type of automation that can perform tasks using the tools you’ve provided. Think of them as smart macros that make decisions and go beyond simple if/then rules to handle edge cases in input data. Fortunately, it’s easy enough to code your own agents and below we’ll show you how.

Since ChatGPT made its debut in late 2022, literally dozens of frameworks for building AI agents have emerged. Of them, LangFlow is one of the easier and more accessible platforms for building AI agents.

Originally developed at Logspace before being acquired by DataStax, which was later bought by Big Blue, LangFlow is a low-code/no-code agent builder based on LangChain. The platform allows users to assemble LLM-augmented automations by dragging, dropping, and connecting various components and tools.

In this hands-on guide, we’ll be taking a closer look at what AI agents are, how to build them, and some of the pitfalls you’re likely to run into along the way.

The anatomy of an agent

Before we jump into building our first agent, let’s talk about what comprises an agent.

At its most basic, an agent consists of three main components: a system prompt, tools, and the model.

Generally, AI agents are going to have three main components, not counting the output/action they ultimately perform or the input/trigger that started it all.

- A system prompt or persona: This describes, in natural language, the agent’s purpose, the tools available to it, and how and when they should be used. For example: “You’re a helpful AI assistant. You have access to the GET_CURRENT_DATE tool which can be used to complete time or date related tasks.”

- Tools: A set of tools and resources that the model can call upon to complete its task. This can be as simple as a GET_CURRENT_DATE or PERFORM_SEARCH function, or an MCP server that provides API access to a remote database.

- The Model: The LLM, which is responsible for processing the input data or trigger and using the tools available to it to complete a task or perform an action.

For more complex multi-step tasks, multiple models, tools, and system prompts may be used.
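To make the three components concrete, here is a minimal Python sketch of how they fit together. It is an illustration only, not how LangFlow works internally: `fake_model` is a hypothetical stand-in for a real LLM, and the dictionary-based reply format is an assumption made for this example.

```python
from datetime import date
from typing import Callable, Optional

# 1. System prompt: the agent's purpose and when to use its tools.
SYSTEM_PROMPT = (
    "You're a helpful AI assistant. You have access to the GET_CURRENT_DATE "
    "tool which can be used to complete time or date related tasks."
)


# 2. Tools: plain functions the runtime calls on the model's behalf.
def get_current_date() -> str:
    return date.today().isoformat()


TOOLS: dict[str, Callable[[], str]] = {"GET_CURRENT_DATE": get_current_date}


# 3. Model: a hypothetical stand-in for an LLM. It either requests a tool
#    or returns a final answer (the reply shape is an assumption).
def fake_model(system_prompt: str, user_input: str,
               tool_result: Optional[str] = None) -> dict:
    if tool_result is not None:
        return {"answer": f"Today's date is {tool_result}."}
    text = user_input.lower()
    if "date" in text or "today" in text:
        return {"tool": "GET_CURRENT_DATE"}
    return {"answer": "I don't need a tool for that."}


def run_agent(user_input: str, model=fake_model) -> str:
    """One round trip: ask the model, run a requested tool, ask again."""
    reply = model(SYSTEM_PROMPT, user_input)
    if "tool" in reply:
        tool = TOOLS.get(reply["tool"])
        if tool is None:
            return f"Unknown tool requested: {reply['tool']}"
        reply = model(SYSTEM_PROMPT, user_input, tool_result=tool())
    return reply["answer"]
```

Calling `run_agent("What is today's date?")` routes through the tool, while a request that doesn't mention dates is answered directly. A real agent swaps `fake_model` for an LLM call and usually loops until the model stops requesting tools.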

What you’ll need:

- To get started, you’ll need a Mac, Windows, or Linux box to run LangFlow Desktop.

- Linux users will need to download and install Docker Engine first using the instructions here.

- Access to an LLM and embedding model. We’ll be using Ollama to run these on a local system, but you can just as easily open an OpenAI developer account and use their models as well.

If you’re using a Mac or PC, deploying LangFlow Desktop is as simple as downloading and running the installer from the LangFlow website.

If you’re running Linux, you’ll need to deploy the app as a Docker container. Thankfully this is a lot easier than it sounds. Once you’ve got the latest release of Docker Engine installed, you can launch the LangFlow server using the one-liner below:

sudo docker run -d -p 7860:7860 --name langflow langflowai/langflow:latest

After a few minutes, the LangFlow interface will be available at http://localhost:7860.

Warning: If you’re deploying the Docker container on a server that’s exposed to the web, make sure to adjust your firewall rules appropriately or you might end up exposing LangFlow to the internet.
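One way to reduce that exposure, in addition to firewall rules, is to publish the container port on the loopback address only, which Docker supports through the `host_ip:host_port:container_port` form of `-p`. The sketch below builds that command in Python. The helper name `langflow_docker_command` is hypothetical, and `sudo` is left out, so prepend it if your setup needs it.

```python
import shlex


def langflow_docker_command(host_ip: str = "127.0.0.1", port: int = 7860) -> list[str]:
    """Build a docker run argv that publishes LangFlow on one host address only."""
    return [
        "docker", "run", "-d",
        # host_ip:host_port:container_port limits which interface listens.
        "-p", f"{host_ip}:{port}:7860",
        "--name", "langflow",
        "langflowai/langflow:latest",
    ]


if __name__ == "__main__":
    # Print the command for review rather than executing it.
    print(shlex.join(langflow_docker_command()))
```

With the default arguments, the interface is reachable at http://localhost:7860 from the host itself but not from other machines. Pass the list to `subprocess.run` if you’d rather launch it from a script.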

Getting to know LangFlow Desktop

LangFlow Desktop’s homepage is divided into projects on the left and flows on the right.

Opening LangFlow Desktop, you’ll see that the application is divided into two sections: Projects and Flows. You can think of a project as a folder where your agents are stored.

Clicking “New Flow” will present you with a variety of pre-baked templates to get you started.

LangFlow’s no-code interface allows you to build agents by dragging and dropping components from the sidebar into a playground on the right.

On the left side of LangFlow Desktop, we see a sidebar containing various first and third-party components that we’ll use to assemble an agent.

Dragging these into the “Playground,” we can then configure and connect them to other components in our agent flow.

In the case of the Language Model component shown here, you’d want to enter your OpenAI API key and select your preferred version of GPT. Meanwhile, for Ollama you’ll want to enter your Ollama API URL, which for most users will be http://localhost:11434, and select a model from the drop down.

If you need help getting started with Ollama, check out our guide here.
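If the model drop down comes up empty, it can help to confirm that the Ollama URL is reachable and see which models it reports. The sketch below queries Ollama’s `/api/tags` endpoint, which lists locally available models. The function names are our own, and the default URL is the one mentioned above.

```python
import json
from urllib.request import urlopen


def parse_model_names(payload: dict) -> list[str]:
    """Pull model names out of the JSON returned by Ollama's /api/tags."""
    return [m["name"] for m in payload.get("models", []) if "name" in m]


def list_ollama_models(base_url: str = "http://localhost:11434",
                       timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama server which models it has pulled."""
    with urlopen(f"{base_url.rstrip('/')}/api/tags", timeout=timeout) as resp:
        return parse_model_names(json.load(resp))
```

Running `print(list_ollama_models())` on the machine hosting Ollama should print the same names LangFlow offers. A connection error here suggests the URL or port is wrong rather than anything in LangFlow.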

Building your first agent

With LangFlow up and running, let’s take a look at a couple of examples of how to use it. If you’d like to try these for yourself, we’ve uploaded our templates to a GitHub repo here.

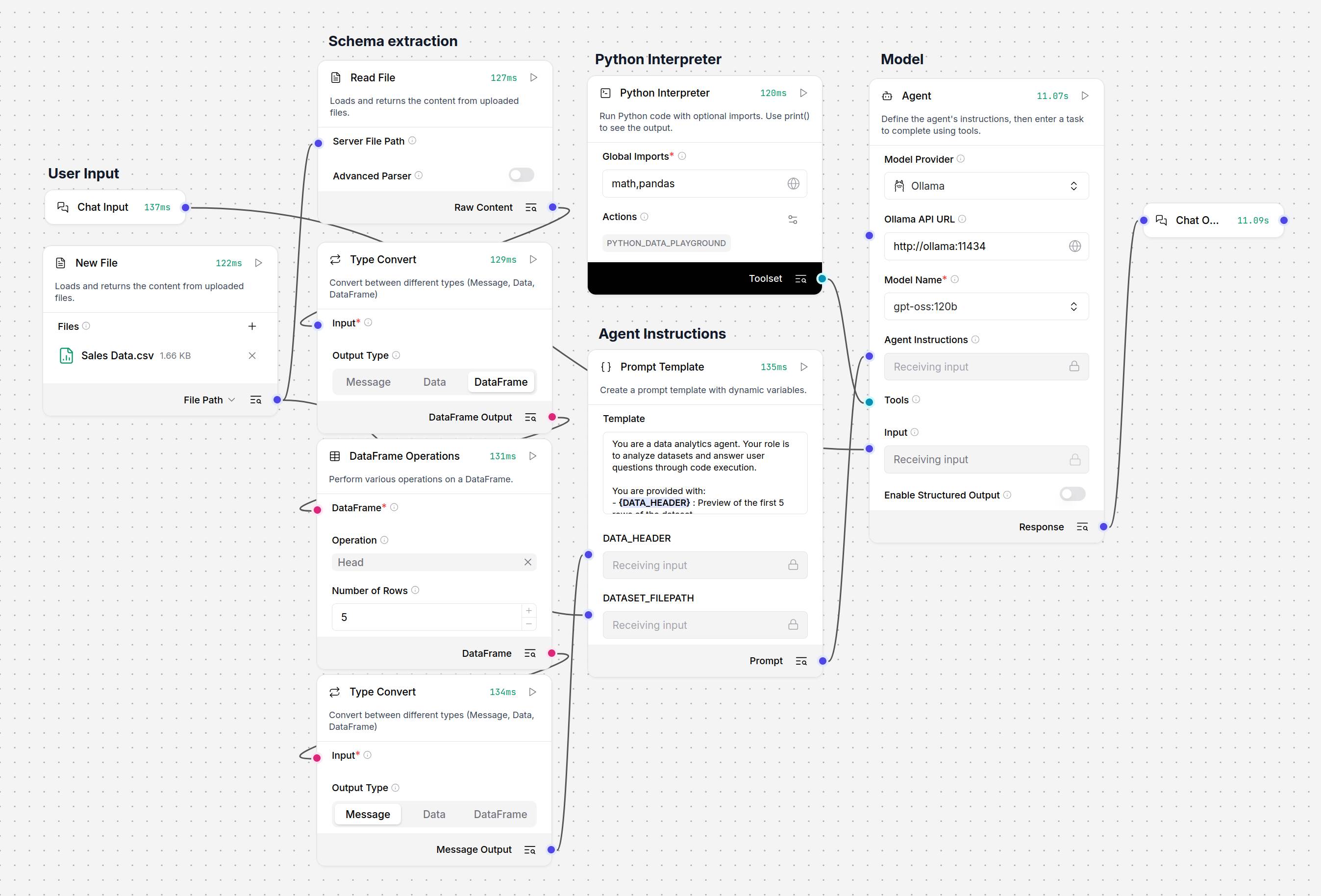

In this example, we’ve built a relatively basic agent to analyze spreadsheets stored as CSVs, based on user prompts.

This data analytics agent uses a Python interpreter and an uploaded CSV to answer user prompts.

To help the model understand the file’s internal structure, we’ve used the Type Convert and DataFrame Operations components to extract the dataset’s schema.

This, along with the document’s full file path, is passed to a prompt template, which serves as the model’s system prompt. Meanwhile, the user prompt is passed through to the model as an instruction.
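For a sense of what “extracting the schema” produces, here is a rough standard-library approximation. It is not the code behind LangFlow’s Type Convert or DataFrame Operations components. The type-guessing rule and the column names in the usage note are assumptions made for illustration.

```python
import csv
import io


def infer_type(values: list[str]) -> str:
    """Guess a column type from sample strings: int, float, or str."""
    non_empty = [v for v in values if v.strip()]
    if not non_empty:
        return "str"
    for caster, name in ((int, "int"), (float, "float")):
        try:
            for v in non_empty:
                caster(v)
            return name
        except ValueError:
            continue
    return "str"


def describe_csv(text: str, sample_rows: int = 50) -> str:
    """Summarize column names and guessed types from the first few rows."""
    reader = csv.DictReader(io.StringIO(text))
    rows = [row for _, row in zip(range(sample_rows), reader)]
    columns = reader.fieldnames or []
    return ", ".join(
        f"{col} ({infer_type([r[col] or '' for r in rows])})" for col in columns
    )
```

Given a sheet with hypothetical columns `product`, `units_sold`, and `unit_price`, `describe_csv` returns a one-line summary such as `product (str), units_sold (int), unit_price (float)`, which is compact enough to drop into a system prompt.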

LangFlow’s Prompt Template enables multiple data sources to be combined into a single unified prompt.

Looking a bit closer at the prompt template, we see {DATA_HEADER} and {DATASET_FILEPATH} defined as variables. By placing these in {} we’ve created additional nodes to which we can connect our dataset schema and file path.
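Python’s own string formatting offers a loose analogy for how curly-brace variables turn into inputs. In the sketch below, the template wording is invented for illustration and only the two variable names and the tool name come from the flow described here. LangFlow’s template handling may differ in detail.

```python
from string import Formatter

# Hypothetical template text; only the placeholder names mirror the article.
TEMPLATE = (
    "You are a data analyst. The dataset is stored at {DATASET_FILEPATH}.\n"
    "Its columns are: {DATA_HEADER}.\n"
    "Use the PYTHON_DATA_PLAYGROUND tool to answer questions about it."
)


def template_variables(template: str) -> list[str]:
    """Return the distinct {NAME} placeholders in order of first appearance."""
    names: list[str] = []
    for _, field, _, _ in Formatter().parse(template):
        if field and field not in names:
            names.append(field)
    return names


def render(template: str, **values: str) -> str:
    """Fill the template, refusing to run if any input is left unconnected."""
    missing = [n for n in template_variables(template) if n not in values]
    if missing:
        raise KeyError(f"unconnected inputs: {missing}")
    return template.format(**values)
```

Here `template_variables(TEMPLATE)` plays the role of the extra input nodes, and `render` combines the connected values into the final system prompt.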

Beyond this, the system prompt contains basic information on how to service user requests, including instructions on how to call the PYTHON_DATA_PLAYGROUND Python interpreter, which we see just above the prompt template.

This Python interpreter is connected to the model’s “tool” node and, apart from allowlisting the Pandas and Math libraries, we haven’t changed much more than the interpreter’s slug and description.

The agent’s data analytics functionality is achieved using a Python interpreter rather than relying on the model to make sense of the CSV on its own.

Based on the information provided to it in the system and user prompts, the model can use this Python sandbox to execute code snippets and extract insights from the dataset.
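To illustrate what allowlisting libraries can mean in practice, the sketch below statically checks a model-generated snippet for imports outside an approved set before it runs. This is our own illustration using Python’s `ast` module, not LangFlow’s implementation. The allowlist mirrors the two libraries mentioned above.

```python
import ast

ALLOWED_MODULES = {"pandas", "math"}


def disallowed_imports(code: str, allowed: set[str] = ALLOWED_MODULES) -> list[str]:
    """List top-level modules imported by `code` that are not allowlisted."""
    blocked: list[str] = []
    for node in ast.walk(ast.parse(code)):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            # Relative imports have no module name and are treated as blocked.
            modules = [node.module or ""]
        else:
            continue
        for module in modules:
            root = module.split(".")[0]
            if root not in allowed and root not in blocked:
                blocked.append(root)
    return blocked
```

A snippet passes when `disallowed_imports(code)` returns an empty list. A check like this only catches ordinary import statements, so it complements a sandbox rather than replacing one.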

If we ask the agent “how many monitors we sold,” in the LangFlow playground’s chat, we can see what code snippets the model executed using the Python interpreter and the raw response it got back.

As you can see, to answer our question, the model generated and executed a Python code snippet to extract the relevant information using Pandas.

Obviously, this introduces some risk of hallucinations, particularly on smaller models. In this example, the agent is set up to analyze documents it hasn’t seen before. However, if you know what your input looks like (for example, if the schema doesn’t change between reports), it’s possible to preprocess the data, like we’ve done in the example below.

Rather than relying on the model to execute the right code every time, this example uses a modified Python interpreter to preprocess the data of two CSVs or Excel files before passing it to the model for review.

With a couple of tweaks to the Python interpreter, we’ve created a custom component that uses Pandas to compare a new spreadsheet against a reference sheet. This way the model only needs to use the Python interpreter to investigate anomalies or answer questions not already addressed by this preprocessing script.
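The idea behind that preprocessing step can be sketched in a few lines. The component described above uses Pandas. To keep this example dependency-free, it uses the standard library’s `csv` module instead. The column names, the 50 percent threshold, and the function names are all assumptions for illustration.

```python
import csv
import io


def load_totals(text: str, key: str, value: str) -> dict[str, float]:
    """Map each key (for example, a product name) to its numeric value."""
    return {row[key]: float(row[value]) for row in csv.DictReader(io.StringIO(text))}


def flag_anomalies(reference: dict[str, float], new: dict[str, float],
                   threshold: float = 0.5) -> list[str]:
    """Describe keys that are new, missing, or moved by more than `threshold`."""
    findings = []
    for name in sorted(reference.keys() | new.keys()):
        if name not in new:
            findings.append(f"{name}: missing from new sheet")
        elif name not in reference:
            findings.append(f"{name}: not in reference sheet")
        else:
            ref, cur = reference[name], new[name]
            # A zero baseline has no meaningful ratio, so flag any change.
            changed = cur != 0 if ref == 0 else abs(cur - ref) / abs(ref) > threshold
            if changed:
                findings.append(f"{name}: changed from {ref:g} to {cur:g}")
    return findings
```

The resulting list of findings is what gets handed to the model, so the LLM reviews a short, deterministic summary instead of recomputing the comparison itself.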

Models and prompts matter

AI agents are just automations that should, in theory, be better at handling edge cases, fuzzy logic, or improperly formatted requests.

We emphasize “in theory,” because the process by which LLMs execute tool calls is a bit of a black box. What’s more, our testing showed smaller models had a greater propensity for hallucinations and malformed tool calls.
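If you are writing your own tooling around a model, one defensive measure against malformed tool calls is to validate each call before executing anything. The sketch below assumes the model emits a JSON object with `name` and `arguments` keys. That shape, and the required `code` argument, are assumptions for illustration rather than a LangFlow format.

```python
import json
from typing import Optional

# Tool name -> argument names that must be present (hypothetical schema).
ALLOWED_TOOLS = {"PYTHON_DATA_PLAYGROUND": {"code"}}


def parse_tool_call(raw: str,
                    allowed: dict[str, set[str]] = ALLOWED_TOOLS) -> Optional[dict]:
    """Return a validated {"name", "arguments"} dict, or None if malformed."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(call, dict):
        return None
    name, args = call.get("name"), call.get("arguments")
    if not isinstance(name, str) or name not in allowed:
        return None
    if not isinstance(args, dict) or not allowed[name].issubset(args):
        return None
    return {"name": name, "arguments": args}
```

When `parse_tool_call` returns `None`, the pipeline can ask the model to retry or fail loudly instead of running a half-formed request.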

Switching from a locally-hosted version of OpenAI’s gpt-oss-120b to GPT-5 Nano or Mini, we saw a drastic improvement in the quality and accuracy of our outputs.

If you’re struggling to get reliable results from your agentic pipelines, it may be because the model isn’t well suited to the role. In some cases, it may be necessary to fine tune a model specifically for that application. You can find our beginner’s guide to fine tuning here.

Along with the model, the system prompt can have a significant impact on the agent’s performance. The same written instructions and examples you might give an intern on how to perform a task are just as useful for guiding the model on what you do and don’t want out of it.

In some cases, it may make more sense to break up a task and delegate the pieces to different models or system prompts in order to minimize the complexity of each step, reduce the likelihood of hallucinations, or optimize costs by having cheaper models tackle the easier parts of the pipeline.

Next steps

Up to this point, our examples have been triggered by a chat input and returned a chat output. However, this isn’t strictly required. You could set up the agent to detect new files in a directory, process them, and save its output as a file.
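Outside of LangFlow’s own components, the file-driven pattern might look something like the following sketch. The `handler` argument is a hypothetical hook where the agent call would go. The `.report.txt` naming and the `*.csv` pattern are likewise assumptions.

```python
from pathlib import Path
from typing import Callable


def process_new_files(inbox: Path, outbox: Path, seen: set[str],
                      handler: Callable[[str], str],
                      pattern: str = "*.csv") -> list[Path]:
    """Run `handler` on files not yet seen and write each result to `outbox`."""
    outbox.mkdir(parents=True, exist_ok=True)
    written = []
    for path in sorted(inbox.glob(pattern)):
        if path.name in seen:
            continue
        result = handler(path.read_text())
        target = outbox / f"{path.stem}.report.txt"
        target.write_text(result)
        seen.add(path.name)  # Remember the file so it is processed only once.
        written.append(target)
    return written
```

Calling this in a loop with a short `time.sleep` between passes gives a simple polling trigger. The `seen` set lives in memory, so a long-running deployment would want to persist it.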

LangFlow supports integrations with a wide variety of services that can be extended using agentic protocols or custom components written in Python. There’s even a Home Assistant tie-in if you want to build an agent to automate your smart home lights and gadgets.

Speaking of third-party integrations, we’ve hardly scratched the surface of the kinds of AI-enhanced automation that are possible using frameworks like LangFlow. In addition to the examples we looked at here, we highly recommend checking out LangFlow’s collection of templates and workflows.

Once you’re done with that, you may want to explore what’s possible using frameworks like Model Context Protocol (MCP). Originally developed by Anthropic, this agentic protocol is designed to help developers connect models to data and tools. You can find more on MCP in our hands-on guide here.

And while you’re at it, you may want to brush up on the security implications of agentic AI, including threats like prompt injection or remote code execution, and ensure your environment is adequately sandboxed before deploying agents in production. ®