Job descriptions for data engineering roles have changed drastically over time. In 2026, they read much less like data plumbing and more like production engineering. You’re expected to ship pipelines that don’t break at 2 AM, scale cleanly, and stay compliant while they do it. So, no, “I know Python and Spark” alone doesn’t cut it anymore.

Instead, today’s stack is centred around cloud warehouses and ELT, dbt-led transformations, orchestration, data quality tests that actually fail pipelines, and boring-but-critical disciplines like schema evolution, data contracts, IAM, and governance. Add lakehouse table formats, streaming, and containerised deployments, and the skill bar goes very high, very fast.

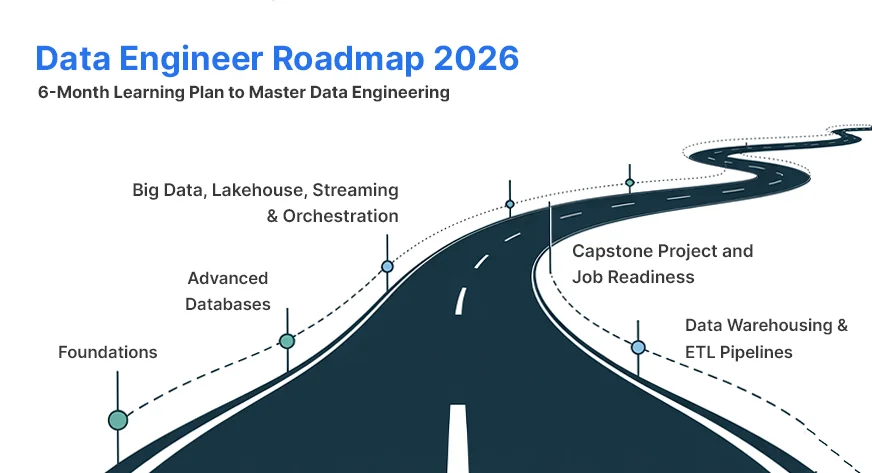

So, if you’re starting in 2026 (or even revisiting your plan to become a data engineer), this roadmap is for you. Here, we cover a month-by-month path to learning the skills that today’s data engineering roles require. If you master these, you’ll become employable and not just “tool-aware.” Let’s jump right in and work through what matters in the most systematic way possible.

Month 1: Foundations

To Learn: CS Fundamentals, Python, SQL, Linux, Git

Let’s be honest. Month 1 isn’t the “exciting” month. There is no Spark cluster, no shiny dashboards, no Kafka streams. But if you skip this foundation, everything that follows in this roadmap becomes harder than it needs to be. So start early, start strong!

CS Fundamentals

Start with computer science fundamentals. Learn core data structures like arrays, linked lists, trees, and hash tables. Add basic algorithms like sorting and searching. Learn time complexity so you can judge whether your code will scale well. Also learn object-oriented programming (OOP) concepts, because most real pipelines are built as reusable modules and not single-use scripts.
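
To make time complexity concrete, here is a minimal sketch that compares a membership check on a list (a linear scan) with the same check on a set (a hash lookup). The data and the function names `in_list` and `in_set` are made up for illustration, and the timings will vary by machine.

```python
from timeit import timeit

# Hypothetical data: the same 100,000 integer IDs stored two ways.
ids_list = list(range(100_000))
ids_set = set(ids_list)


def in_list(target: int) -> bool:
    # Linear scan: O(n) in the worst case.
    return target in ids_list


def in_set(target: int) -> bool:
    # Hash lookup: O(1) on average.
    return target in ids_set


if __name__ == "__main__":
    missing = -1  # worst case for the list: every element is compared
    print("list:", timeit(lambda: in_list(missing), number=50))
    print("set: ", timeit(lambda: in_set(missing), number=50))
```

Both functions return the same answer. The difference only shows up in how the cost grows as the data grows, which is the question time complexity helps you answer.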

Python Fundamentals

Next, get solid with Python programming. Focus on clean syntax, functions, control flow, and writing readable code. Learn basic OOP, but more importantly, build the habit of documenting what you write. In 2026, your code will be reviewed, maintained, and reused. So treat it like a product, not a notebook experiment.

SQL Fundamentals

Alongside Python, start on SQL fundamentals. Learn SELECT, JOIN, GROUP BY, subqueries, and aggregations. SQL is still the language that runs the data world, and the sooner you get comfortable with it, the easier every later month becomes. Use something like PostgreSQL or MySQL, load a sample dataset, and practise daily.
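
If you want to try these statements before installing a database server, a rough sketch like the one below works with Python’s built-in `sqlite3` module. SQLite stands in for PostgreSQL or MySQL here, and the `customers` and `orders` tables are invented sample data.

```python
import sqlite3

# In-memory SQLite database used as a stand-in for PostgreSQL/MySQL.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben');
INSERT INTO orders VALUES (1, 1, 50.0), (2, 1, 25.0), (3, 2, 10.0);
""")

# JOIN the two tables, then aggregate per customer with GROUP BY.
query = """
SELECT c.name, COUNT(o.id) AS order_count, SUM(o.amount) AS total_spent
FROM customers AS c
JOIN orders AS o ON o.customer_id = c.id
GROUP BY c.name
ORDER BY total_spent DESC;
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('Asha', 2, 75.0), ('Ben', 1, 10.0)]
```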

Support Tools

Finally, don’t ignore the “supporting tools.” Learn basic Linux/Unix shell commands (bash) because servers and pipelines live there. Set up Git and start pushing everything to GitHub/GitLab from day one. Version control isn’t optional in production teams.

Month 1 Goal

By the end of Month 1, your goal is simple: you should be able to write clean Python, query data confidently with SQL, and work comfortably in a terminal with Git. That baseline will carry you through the entire roadmap.

Month 2: Advanced Databases

To Learn: Advanced SQL, RDBMS Practice, NoSQL, Schema Evolution & Data Contracts, Mini ETL Project

Month 2 is where you move from “I can write queries” to “I can design and query databases properly.” In most data engineering roles, SQL is one of the main filters. So this month is about becoming genuinely strong at it, while also expanding into NoSQL and modern schema practices.

Advanced SQL

Start upgrading your SQL skill set. Practise complex multi-table JOINs, window functions (ROW_NUMBER, RANK, LEAD/LAG), and CTEs. Also learn basic query tuning: how indexes work, how to read query plans, and how to spot slow queries. Efficient SQL at scale is one of the most repeated requirements in data engineering job interviews.
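
As a small illustration of a CTE combined with window functions, the sketch below finds the best sale per region and the previous day’s amount. It runs on SQLite through Python’s `sqlite3` module, which assumes the bundled SQLite is version 3.25 or newer (window functions were added then). The `sales` table is invented sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (region TEXT, sale_date TEXT, amount REAL);
INSERT INTO sales VALUES
  ('north', '2026-01-01', 100), ('north', '2026-01-02', 150),
  ('south', '2026-01-01', 80),  ('south', '2026-01-02', 60);
""")

# The CTE ranks sales inside each region and looks one row back by date.
query = """
WITH ranked AS (
  SELECT region, sale_date, amount,
         ROW_NUMBER() OVER (PARTITION BY region ORDER BY amount DESC) AS rn,
         LAG(amount) OVER (PARTITION BY region ORDER BY sale_date) AS prev_amount
  FROM sales
)
SELECT region, sale_date, amount, prev_amount
FROM ranked
WHERE rn = 1
ORDER BY region;
"""
top_sales = conn.execute(query).fetchall()
for row in top_sales:
    print(row)
```

The south region’s best sale falls on the first day, so its `prev_amount` comes back as `None`: LAG has no earlier row to look at.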

RDBMS Practice

Pick a relational database like PostgreSQL or MySQL and build proper schemas. Try designing a simple analytics-friendly structure, such as a star schema (fact and dimension tables), using a realistic dataset like sales, transactions, or sensor logs. This gives you hands-on practice with data modelling.

NoSQL Introduction

Now add one NoSQL database to your toolkit. Choose something like MongoDB (document store) or DynamoDB (key-value). Learn what makes NoSQL different: flexible schemas, horizontal scaling, and faster writes in many real-time systems. Also understand the trade-off: you often give up complex joins and rigid structure for speed and flexibility.

Schema Evolution & Data Contracts

This is a 2026 skill that matters much more than it did earlier. Learn how to handle schema changes safely: adding columns, renaming fields, maintaining backward/forward compatibility, and using versioning. Alongside that, understand the idea of data contracts, which are clear agreements between data producers and consumers, so pipelines don’t break when data formats change.
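
One way to picture a data contract is as a small, versioned description of the fields a consumer can rely on. The sketch below is a hand-rolled illustration, not a standard library or tool. `CONTRACT_V1`, `CONTRACT_V2`, and `violations` are hypothetical names, and real teams often use schema registries or validation libraries instead.

```python
from typing import Any

# Hypothetical contract format: field name -> (expected type, required?)
CONTRACT_V1 = {"order_id": (int, True), "amount": (float, True)}

# v2 only adds an OPTIONAL field, so records written against v1 still pass.
CONTRACT_V2 = {**CONTRACT_V1, "currency": (str, False)}


def violations(record: dict[str, Any], contract: dict[str, tuple[type, bool]]) -> list[str]:
    """Return a list of human-readable contract violations (empty if valid)."""
    problems = []
    for field, (expected_type, required) in contract.items():
        if field not in record:
            if required:
                problems.append(f"missing required field: {field}")
            continue
        if not isinstance(record[field], expected_type):
            problems.append(f"{field}: expected {expected_type.__name__}")
    return problems


old_record = {"order_id": 1, "amount": 9.99}                     # produced under v1
new_record = {"order_id": 2, "amount": 5.0, "currency": "EUR"}   # produced under v2
bad_record = {"order_id": "3"}                                   # wrong type, missing field

print(violations(old_record, CONTRACT_V2))  # []
print(violations(bad_record, CONTRACT_V2))
```

Adding an optional field is the safe kind of change. Renaming or removing a required field would make `old_record` fail, which is exactly the kind of break a contract is meant to surface before it reaches consumers.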

Mini ETL Project

End the month with a small but complete ETL pipeline. Extract data from a CSV or public API, clean and transform it using Python, then load it into your SQL database. Automate it with a simple script or scheduler. Don’t aim for complexity. The goal, instead, is to build confidence in moving data end-to-end.
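
A minimal version of such a pipeline could look like the sketch below. It assumes an inline CSV string in place of a real file or API, and SQLite in place of your SQL database. The `extract`, `transform`, and `load` function names and the `orders` table are illustrative choices.

```python
import csv
import io
import sqlite3

# Hypothetical raw input; in a real project this would be a file or API response.
RAW_CSV = """order_id,customer,amount
1, Asha ,50.5
2,Ben,
3,Chen,20
"""


def extract(text: str) -> list[dict]:
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Drop rows without an amount, trim whitespace, and cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete rows instead of loading bad data
        cleaned.append((int(row["order_id"]), row["customer"].strip(), float(row["amount"])))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Insert rows and return the table's row count after loading."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    # INSERT OR REPLACE keeps the load idempotent when the script is re-run.
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


conn = sqlite3.connect(":memory:")
loaded = load(transform(extract(RAW_CSV)), conn)
print(f"{loaded} rows loaded")  # 2 rows loaded
```

Keeping the three steps as separate functions makes each one easy to test on its own, and it mirrors how the pipeline will later be split into tasks under an orchestrator.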

Month 2 Goal

By the end of Month 2, you should be able to write strong SQL queries, design sensible schemas, understand when to use SQL vs NoSQL, and build a small, reliable ETL pipeline.

Month 3: Data Warehousing & ETL Pipelines

To Learn: Data Modelling, Cloud Warehouses, ELT with dbt, Airflow Orchestration, Data Quality Checks, Pipeline Project

Month 3 in this roadmap is where you start working like a modern data engineer. You move beyond databases and enter real-world analytics, building warehouse-based pipelines at scale. This is also the month where you learn two important tools that show up everywhere in 2026 data teams: dbt and Airflow.

Data Modelling

Start with data modelling for analytics. Learn star and snowflake schemas, and understand why fact tables and dimension tables make reporting faster and simpler. You don’t need to become a modelling expert in one month, but you should understand how good modelling reduces confusion for downstream teams.
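
Here is a rough sketch of a tiny star schema, with one fact table surrounded by two dimension tables, and a typical reporting query on top of it. It uses SQLite through Python’s `sqlite3` module for convenience. The table and column names (`fact_sales`, `dim_product`, `dim_date`) are illustrative, not a prescribed standard.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month INTEGER, year INTEGER);

-- The fact table holds measurements plus keys pointing at the dimensions.
CREATE TABLE fact_sales (
  sale_id INTEGER PRIMARY KEY,
  product_key INTEGER REFERENCES dim_product(product_key),
  date_key INTEGER REFERENCES dim_date(date_key),
  quantity INTEGER,
  revenue REAL
);

INSERT INTO dim_product VALUES (1, 'Laptop', 'electronics'), (2, 'Desk', 'furniture');
INSERT INTO dim_date VALUES (20260101, '2026-01-01', 1, 2026);
INSERT INTO fact_sales VALUES
  (1, 1, 20260101, 2, 2000.0),
  (2, 2, 20260101, 1, 300.0),
  (3, 1, 20260101, 1, 1000.0);
""")

# A typical report: join the fact table to its dimensions, then aggregate.
revenue_by_category = conn.execute("""
SELECT p.category, d.year, SUM(f.revenue) AS revenue
FROM fact_sales AS f
JOIN dim_product AS p ON p.product_key = f.product_key
JOIN dim_date AS d ON d.date_key = f.date_key
GROUP BY p.category, d.year
ORDER BY p.category;
""").fetchall()
print(revenue_by_category)  # [('electronics', 2026, 3000.0), ('furniture', 2026, 300.0)]
```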

Cloud Warehouses

Next, get hands-on with a cloud data warehouse. Pick one: BigQuery, Snowflake, or Redshift. Learn how to load data, run queries, and manage tables. These warehouses are built for OLAP workloads and are central to most modern analytics stacks.

ELT and dbt

Now shift from classic ETL thinking to ELT. In 2026, most teams load raw data first and do transformations inside the warehouse. This is where dbt becomes important. Learn how to:

- create models (SQL transformations)

- manage dependencies between models

- write tests (null checks, uniqueness, accepted values)

- document your models so others can use them confidently

Airflow Orchestration

Once you have ingestion and transformations, you need automation. Install Airflow (locally or with Docker) and build simple Directed Acyclic Graphs (DAGs). Learn how scheduling works, how retries work, and how to monitor pipeline runs. Airflow is not just a “scheduler.” It’s the control centre for production pipelines.
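
Before touching Airflow itself, it can help to see what “directed acyclic graph” means in plain Python. The sketch below is not Airflow’s API. It uses the standard library’s `graphlib` (Python 3.9+) to order a set of hypothetical task names by their dependencies, which is the core idea an orchestrator builds on.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dag = {
    "extract": set(),
    "load_raw": {"extract"},
    "transform": {"load_raw"},
    "quality_checks": {"transform"},
    "publish": {"quality_checks"},
}

# A valid run order places every task after all of its dependencies.
run_order = list(TopologicalSorter(dag).static_order())
print(run_order)

# "Acyclic" matters: a cycle means no valid run order exists.
cyclic = {"a": {"b"}, "b": {"a"}}
try:
    list(TopologicalSorter(cyclic).static_order())
    cycle_detected = False
except CycleError:
    cycle_detected = True
print("cycle detected:", cycle_detected)
```

Airflow adds scheduling, retries, logging, and a UI on top of this idea, but the dependency graph is what decides which task may run next.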

Data Quality Checks

This is a non-negotiable 2026 skill. Add automated checks for:

- nulls and missing values

- freshness (data arriving on time)

- ranges and invalid values

Use dbt tests, and if you want deeper validation, try Great Expectations. The key point: when data is bad, the pipeline should fail early.
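
The three checks listed above can be sketched in plain Python to show the “fail early” behaviour. This is not how dbt or Great Expectations express tests. `DataQualityError`, `check_batch`, the `amount` field, and the thresholds are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone


class DataQualityError(Exception):
    """Raised to stop the pipeline when a batch fails validation."""


def check_batch(rows, loaded_at, now, max_age=timedelta(hours=24)):
    """Raise DataQualityError if the batch has nulls, bad ranges, or is stale."""
    failures = []
    amounts = [row.get("amount") for row in rows]

    # Null check: every row must carry an amount.
    if any(a is None for a in amounts):
        failures.append("null amount")

    # Range check: amounts outside 0..10,000 are treated as invalid (assumed limit).
    if any(a is not None and not 0 <= a <= 10_000 for a in amounts):
        failures.append("amount out of range")

    # Freshness check: the batch must have been loaded within max_age.
    if now - loaded_at > max_age:
        failures.append("stale batch")

    if failures:
        raise DataQualityError("; ".join(failures))


now = datetime(2026, 1, 2, 12, 0, tzinfo=timezone.utc)
good_batch = [{"amount": 10.0}, {"amount": 250.0}]
check_batch(good_batch, loaded_at=now - timedelta(hours=1), now=now)  # passes silently
```

Raising an exception, rather than logging a warning, is the design choice that matters: an orchestrator marks the task as failed and downstream tasks never see the bad batch.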

Pipeline Project

End Month 3 with a complete warehouse pipeline:

- fetch data daily from an API or files

- load raw data into your warehouse

- transform it with dbt into clean tables

- schedule everything with Airflow

- add data tests so failures are visible

This project becomes a strong portfolio piece because it resembles a real workplace workflow.

Month 3 Goal

By the end of Month 3, you should be able to load data into a cloud warehouse, transform it using dbt, automate the workflow with Airflow, and add data quality checks that prevent bad data from quietly entering your system.

Month 4: Cloud Platforms & Containerisation

To Learn: Cloud Practice, IAM Fundamentals, Security & Governance, Cloud Data Tools, Docker, DevOps Integration

Month 4 is where you stop thinking only about pipelines and start thinking about how those pipelines run in the real world. In 2026, data engineers are expected to understand cloud environments, basic security, and how to deploy and maintain workloads in consistent environments, and this month prepares you for just that.

Cloud Practice

Pick one cloud platform: AWS, GCP, or Azure. Learn the core services that data teams use:

- storage (S3 / GCS / Blob Storage)

- compute (EC2 / Compute Engine / VMs)

- managed databases (RDS / Cloud SQL)

- basic querying tools (Athena, BigQuery, Synapse-style querying)

Also learn basic cloud concepts like regions, networking fundamentals (high-level is fine), and cost awareness.

IAM, Security, Privacy, and Governance

Now focus on access control and safety. Learn IAM fundamentals: roles, policies, least privilege, and service accounts. Understand how teams handle secrets (API keys, credentials). Learn what PII is and how it’s protected using masking and access restrictions. Also get familiar with governance concepts like:

- row/column-level security

- data catalogues

- governance tools (Lake Formation, Unity Catalog)

You don’t need to become a security specialist, but you do need to understand what “secure by default” looks like.
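
To give masking a concrete shape, here is a small sketch with two common approaches: partial masking for display and salted hashing for pseudonymised join keys. The function names and the salt value are hypothetical. In a real system the salt would come from a secrets manager and would never be hard-coded.

```python
import hashlib


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def pseudonymise(value: str, salt: str) -> str:
    """Replace a value with a stable, non-reversible token (salted SHA-256, truncated)."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:16]


# Hypothetical salt for illustration only; load it from a secret store in practice.
DEMO_SALT = "not-a-real-secret"

print(mask_email("asha@example.com"))  # a***@example.com
token = pseudonymise("asha@example.com", DEMO_SALT)
print(token == pseudonymise("asha@example.com", DEMO_SALT))  # True: same input, same token
```

Masking is for humans reading the data. Pseudonymisation keeps rows joinable across tables without exposing the raw value.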

Cloud Data Tools

Explore one or two managed data services in your chosen cloud. Examples:

- AWS Glue, EMR, Redshift

- GCP Dataflow, Dataproc, BigQuery

- Azure Data Factory, Synapse

Even if you don’t master them, understand what they do and what they’re replacing (self-managed Spark clusters, manual scripts, and so on).

Docker Fundamentals

Now learn Docker. The goal is simple: package your data workload so it runs the same everywhere. Containerise one thing you have already built, such as:

- your Python ETL job

- your Airflow setup

- a small dbt project runner

Learn how to write a Dockerfile, build an image, and run containers locally.

DevOps Integration

Finally, connect your work to a basic engineering workflow:

- use Docker Compose to run multi-service setups (Airflow + Postgres, and so on)

- set up a simple CI pipeline (GitHub Actions) that runs checks/tests on each commit

This is how modern teams keep pipelines stable.

Month 4 Goal

By the end of Month 4, you should be able to use one cloud platform comfortably, understand IAM and basic governance, run a data workflow in Docker, and apply simple CI practices to keep your pipeline code reliable.

Month 5: Big Data, Lakehouse, Streaming, and Orchestration

To Learn: Spark (PySpark), Lakehouse Architecture, Table Formats (Delta/Iceberg/Hudi), Kafka, Advanced Airflow

Month 5 is about handling scale. Even if you are not processing massive datasets on day one, most teams still expect data engineers to understand distributed processing, lakehouse storage, and streaming systems. This month builds that layer.

Hadoop (Optional, High-Level Only)

In 2026, you don’t need deep Hadoop expertise. But you should know what it is and why it existed. Learn what HDFS, YARN, and MapReduce were built for, and what problems they solved at the time. Study these for awareness only. Don’t try to master them, because most modern stacks have moved towards Spark and lakehouse systems.

Apache Spark (PySpark)

Spark is still the default choice for batch processing at scale. Learn how Spark works with DataFrames, what transformations and actions mean, and how Spark SQL fits into real pipelines. Spend time understanding the basics of partitioning and shuffles, because these two concepts explain most performance issues. Practise by processing a dataset larger than what you would normally handle with pandas, and compare the workflow.
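
Partitioning and shuffles are easier to reason about with a toy model. The sketch below is plain Python, not PySpark, and it does not reproduce Spark’s actual partitioner. It only shows the idea that a shuffle regroups records so that rows with the same key end up in the same partition. `partition_for` and `shuffle_by_key` are hypothetical helper names.

```python
import zlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Pick a partition from a deterministic hash of the key."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def shuffle_by_key(records, num_partitions):
    """Regroup (key, value) records so equal keys share one partition."""
    partitions = defaultdict(list)
    for key, value in records:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return dict(partitions)


# Hypothetical sales records keyed by region.
records = [("north", 100), ("south", 80), ("north", 150), ("east", 40), ("south", 60)]
shuffled = shuffle_by_key(records, num_partitions=4)

for partition_id, rows in sorted(shuffled.items()):
    print(partition_id, rows)
```

In a real cluster each partition lives on a different executor, so this regrouping means moving data over the network. That is why wide operations such as joins and group-bys tend to dominate runtime, and why one very frequent key can overload a single partition.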

Lakehouse Architecture

Now move to lakehouse architecture. Many teams want the low-cost storage of a data lake, but with the reliability of a warehouse. Lakehouse systems aim to provide that middle ground. Learn what changes when you treat data on object storage as a structured analytics system, especially around reliability, versioning, and schema handling.

Delta Lake / Iceberg / Hudi

These table formats are a big part of why the lakehouse works in practice. Learn what they add on top of raw files: better metadata management, ACID-style reliability, schema enforcement, and support for schema evolution. You don’t need to master all three, but you should understand why they exist and what problems they solve in production pipelines.

Streaming and Kafka Fundamentals

Streaming matters because many organisations want data to arrive continuously rather than in daily batches. Start with Kafka and learn how topics, partitions, producers, and consumers work together. Understand how teams use streaming pipelines for event data, clickstreams, logs, and real-time monitoring. The goal is to understand the architecture clearly, not to become a Kafka operator.
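
The relationship between topics, partitions, producers, and consumers can be mimicked with a few lines of plain Python. This is an in-memory teaching model, not the Kafka client API, and the class names `InMemoryTopic` and `Consumer` are invented for the sketch.

```python
import zlib


class InMemoryTopic:
    """A topic is a set of append-only partition logs."""

    def __init__(self, num_partitions: int):
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key: str, value: str) -> int:
        # Same key -> same partition, which preserves per-key ordering.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition


class Consumer:
    """A consumer remembers how far it has read in each partition (its offsets)."""

    def __init__(self, topic: InMemoryTopic):
        self.topic = topic
        self.offsets = [0] * len(topic.partitions)

    def poll(self) -> list:
        batch = []
        for partition, log in enumerate(self.topic.partitions):
            batch.extend(log[self.offsets[partition]:])
            self.offsets[partition] = len(log)  # advance past what was read
        return batch


topic = InMemoryTopic(num_partitions=3)
for i in range(3):
    topic.produce("user-1", f"click-{i}")

consumer = Consumer(topic)
first_batch = consumer.poll()
second_batch = consumer.poll()
print(first_batch)
print(second_batch)  # [] because nothing new arrived since the last poll
```

Real Kafka adds replication, consumer groups, and durable storage, but the mental model of keyed partitions plus tracked offsets carries over.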

Advanced Airflow Orchestration

Finally, level up your orchestration skills by writing more production-style Airflow DAGs. You can try to:

- add retries and alerting

- run Spark jobs through Airflow operators

- set up failure notifications

- schedule batch and near-real-time jobs

This is very close to what production orchestration looks like.
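
Retries and failure notifications follow a simple pattern that is worth understanding independently of any orchestrator. The sketch below is plain Python, not an Airflow operator or callback. `run_with_retries` and its parameters are hypothetical names chosen for the example.

```python
import time


def run_with_retries(task, retries=3, delay_seconds=0.0, on_failure=None):
    """Run task(); retry up to `retries` times, then alert and re-raise."""
    last_error = None
    for attempt in range(1, retries + 2):  # one initial try plus `retries` retries
        try:
            return task()
        except Exception as exc:
            last_error = exc
            if attempt <= retries:
                time.sleep(delay_seconds)  # wait before the next attempt
    # All attempts failed: notify once, then surface the original error.
    if on_failure is not None:
        on_failure(last_error)
    raise last_error


# Hypothetical flaky task: fails twice, then succeeds.
attempts = {"count": 0}


def flaky_task():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("temporary network error")
    return "ok"


result = run_with_retries(flaky_task, retries=3)
print(result, "after", attempts["count"], "attempts")  # ok after 3 attempts
```

Orchestrators typically expose the same two knobs as task settings: how many times to retry and what to call when the final attempt fails. Seeing the loop written out makes those settings easier to choose.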

Month 5 Goal

By the end of Month 5, you should be able to run batch transformations in Spark, explain how lakehouse systems work, understand why Delta/Iceberg/Hudi matter, and describe how Kafka-based streaming pipelines operate. You should also be able to orchestrate these workflows with Airflow in a reliable, production-minded way.

Month 6: Capstone Project and Job Readiness

To Learn: End-to-End Pipeline Design, Documentation, Fundamentals Revision, Interview Preparation

Month 6 is where everything comes together. The goal is to build one complete project that proves you can work like a real data engineer. This single capstone will matter more than ten small tutorials, because it demonstrates full ownership of a pipeline.

Capstone Project

Build an end-to-end pipeline that covers the modern 2026 stack. Here’s what your Month 6 capstone should include. Keep it simple, but make sure every part is present.

- Ingest data in batch (daily files/logs) or as a stream (API events)

- Land raw data in cloud storage such as S3 or GCS

- Transform the data using Spark or Python

- Load cleaned outputs into a cloud warehouse like Snowflake or BigQuery

- Orchestrate the workflow using Airflow

- Run key components in Docker so the project is reproducible

- Add data quality checks for nulls, freshness, duplicates, and invalid values

Make sure your pipeline fails clearly when data breaks. This is one of the strongest signals that your project is production-minded and not just a demo.

Documentation

Documentation isn’t an extra task. It’s part of the project. Create a clear README that explains what your pipeline does, why you made certain choices, and how someone else can run it. Add a simple architecture diagram, a data dictionary, and clear code comments. In real teams, strong documentation often separates good engineers from average ones.

Fundamentals Review

Now revisit the fundamentals. Review SQL joins, window functions, schema design, and common query patterns. Refresh Python fundamentals, especially data manipulation and writing clean functions. You should be able to explain key trade-offs such as ETL vs ELT, OLTP vs OLAP, and SQL vs NoSQL without hesitation.

Interview Preparation

Spend time practising interview-style questions. Solve SQL puzzles, work on Python coding exercises, and prepare to discuss your capstone in detail. Be ready to explain how you handle retries, failures, schema changes, and data quality issues. In 2026 interviews, companies care less about whether you “used a tool” and more about whether you understand how to build reliable pipelines.

Month 6 Goal

By the end of Month 6, you should have a complete, well-documented data engineering project, strong fundamentals in SQL and Python, and clear answers to common interview questions. At that point, you have moved past the learning stage and are ready to put your skills to use in a real job.

Conclusion

As I said earlier, in 2026, data engineering is no longer just about knowing tools. It now revolves around building pipelines that are reliable, secure, and easy to operate at scale. If you follow this six-month roadmap diligently and finish it with a strong capstone, there is no way you won’t be ready to work as a modern data engineer.

And not just on paper: you’ll have the skills that modern teams actually look for, including solid SQL and Python, warehouse-first ELT, orchestration, data quality, governance awareness, and the ability to ship end-to-end systems. By that point in this roadmap, you will already have become a data engineer. All you’ll then need is a job to make it official.
