In this tutorial, we show how to design a contract-first agentic decision system using PydanticAI, treating structured schemas as non-negotiable governance contracts rather than optional output formats. We define a strict decision model that encodes policy compliance, risk assessment, confidence calibration, and actionable next steps directly into the agent's output schema. By combining Pydantic validators with PydanticAI's retry and self-correction mechanisms, we ensure that the agent cannot produce logically inconsistent or non-compliant decisions. Throughout the workflow, we focus on building an enterprise-grade decision agent that reasons under constraints, making it suitable for real-world risk, compliance, and governance scenarios rather than toy prompt-based demos. Check out the full code here.

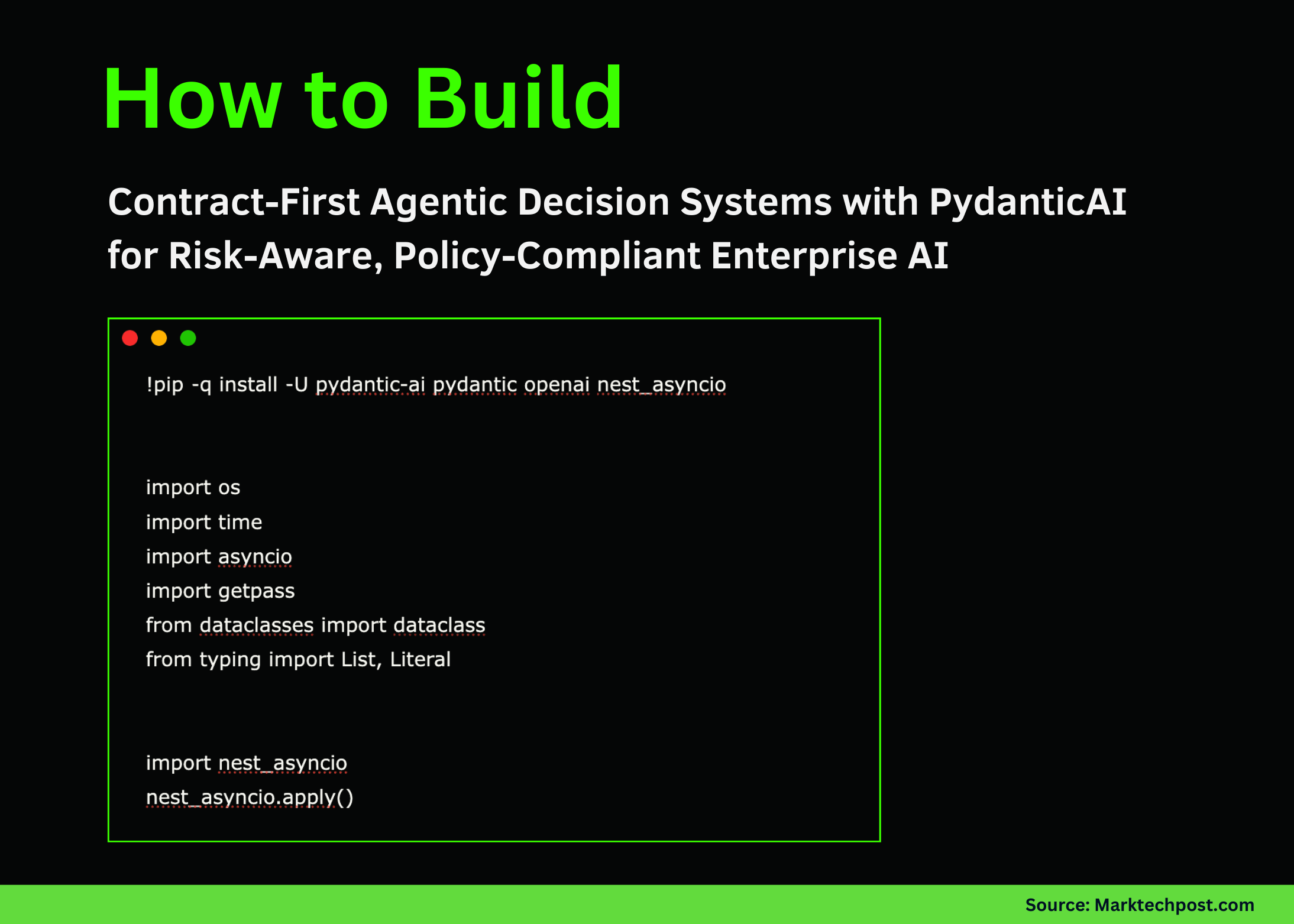

    !pip -q install -U pydantic-ai pydantic openai nest_asyncio

    import os
    import time
    import asyncio
    import getpass
    from dataclasses import dataclass
    from typing import List, Literal

    import nest_asyncio
    nest_asyncio.apply()  # allow asyncio.run() inside Colab's already-running event loop

    from pydantic import BaseModel, Field, field_validator
    from pydantic_ai import Agent
    from pydantic_ai.models.openai import OpenAIChatModel
    from pydantic_ai.providers.openai import OpenAIProvider

    # Resolve the API key: environment variable, then Colab secrets, then an interactive prompt.
    OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
    if not OPENAI_API_KEY:
        try:
            from google.colab import userdata
            OPENAI_API_KEY = userdata.get("OPENAI_API_KEY")
        except Exception:
            OPENAI_API_KEY = None
    if not OPENAI_API_KEY:
        OPENAI_API_KEY = getpass.getpass("Enter OPENAI_API_KEY: ").strip()

We set up the execution environment by installing the required libraries and configuring asynchronous execution for Google Colab. We load the OpenAI API key without hard-coding it and ensure the runtime is ready to handle async agent calls. This establishes a stable foundation for running the contract-first agent without environment-related issues.

    class RiskItem(BaseModel):
        risk: str = Field(..., min_length=8)
        severity: Literal["low", "medium", "high"]
        mitigation: str = Field(..., min_length=12)

    class DecisionOutput(BaseModel):
        # Pydantic validates fields in declaration order, and info.data only holds
        # fields validated so far, so inputs to cross-field rules are declared first.
        identified_risks: List[RiskItem] = Field(..., min_length=2)
        compliance_passed: bool
        decision: Literal["approve", "approve_with_conditions", "reject"]
        confidence: float = Field(..., ge=0.0, le=1.0)
        rationale: str = Field(..., min_length=80)
        # validate_default=True runs the validator even when conditions is omitted.
        conditions: List[str] = Field(default_factory=list, validate_default=True)
        next_steps: List[str] = Field(..., min_length=3)
        timestamp_unix: int = Field(default_factory=lambda: int(time.time()))

        @field_validator("confidence")
        @classmethod
        def confidence_vs_risk(cls, v, info):
            # Cap confidence when any high-severity risk has been identified.
            risks = info.data.get("identified_risks") or []
            if any(r.severity == "high" for r in risks) and v > 0.70:
                raise ValueError("confidence too high given high-severity risks")
            return v

        @field_validator("decision")
        @classmethod
        def reject_if_non_compliant(cls, v, info):
            # A failed compliance check leaves "reject" as the only valid decision.
            if info.data.get("compliance_passed") is False and v != "reject":
                raise ValueError("non-compliant decisions must be reject")
            return v

        @field_validator("conditions")
        @classmethod
        def conditions_required_for_conditional_approval(cls, v, info):
            d = info.data.get("decision")
            if d == "approve_with_conditions" and (not v or len(v) < 2):
                raise ValueError("approve_with_conditions requires at least 2 conditions")
            if d == "approve" and v:
                raise ValueError("approve must not include conditions")
            return v

We define the core decision contract using strict Pydantic models that precisely describe a valid decision. We encode logical constraints such as confidence–risk alignment, compliance-driven rejection, and conditional approvals directly into the schema. Because a field validator can only read fields declared before its own through `info.data`, the fields each rule depends on are declared first. This ensures that any agent output must satisfy business logic, not just syntactic structure.
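As a hedged illustration of cross-field rules, the sketch below expresses two of the same constraints with Pydantic's `model_validator(mode="after")`, which runs once every field has been validated and therefore does not depend on declaration order. `MiniDecision`, `has_high_risk`, and `try_build` are hypothetical names introduced only for this example; they are not part of the tutorial's `DecisionOutput` contract.

```python
from typing import Literal

from pydantic import BaseModel, Field, ValidationError, model_validator


class MiniDecision(BaseModel):
    """Hypothetical trimmed-down contract, used only for this illustration."""

    decision: Literal["approve", "approve_with_conditions", "reject"]
    confidence: float = Field(..., ge=0.0, le=1.0)
    compliance_passed: bool
    has_high_risk: bool = False  # assumption: stands in for a full risk list

    @model_validator(mode="after")
    def check_cross_field_rules(self) -> "MiniDecision":
        # Runs after all fields are validated, so every attribute is available.
        if not self.compliance_passed and self.decision != "reject":
            raise ValueError("non-compliant decisions must be reject")
        if self.has_high_risk and self.confidence > 0.70:
            raise ValueError("confidence too high given high-severity risks")
        return self


def try_build(**payload) -> str:
    """Return 'ok', or the first validation error message for the payload."""
    try:
        MiniDecision(**payload)
        return "ok"
    except ValidationError as exc:
        return exc.errors()[0]["msg"]


print(try_build(decision="reject", confidence=0.4, compliance_passed=False))
print(try_build(decision="approve", confidence=0.4, compliance_passed=False))
```

Field validators keep each rule next to the field it guards, while a model-level validator trades that locality for independence from field order; either can serve as the contract.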

    @dataclass
    class DecisionContext:
        company_policy: str
        risk_threshold: float = 0.6

    model = OpenAIChatModel(
        "gpt-5",
        provider=OpenAIProvider(api_key=OPENAI_API_KEY),
    )

    # deps_type declares the business context; output_type binds the agent to the contract.
    agent = Agent(
        model=model,
        deps_type=DecisionContext,
        output_type=DecisionOutput,
        system_prompt="""
    You are a corporate decision analysis agent.
    You must evaluate risk, compliance, and uncertainty.
    All outputs must strictly satisfy the DecisionOutput schema.
    """,
    )

We inject business context through a typed dependency object and initialize the OpenAI-backed PydanticAI agent. We configure the agent to produce only structured decision outputs that conform to the predefined contract. This step formalizes the separation between business context and model reasoning.

    from pydantic_ai import ModelRetry

    @agent.output_validator
    def ensure_risk_quality(result: DecisionOutput) -> DecisionOutput:
        # Raising ModelRetry sends the message back to the model and asks it to try again.
        if len(result.identified_risks) < 2:
            raise ModelRetry("minimum two risks required")
        if not any(r.severity in ("medium", "high") for r in result.identified_risks):
            raise ModelRetry("at least one medium or high risk required")
        return result

    @agent.output_validator
    def enforce_policy_controls(result: DecisionOutput) -> DecisionOutput:
        # CURRENT_DEPS is a module-level global set in run_decision() before the agent runs.
        policy = CURRENT_DEPS.company_policy.lower()
        # Join with spaces so words from adjacent fields do not run together.
        text = " ".join(
            [result.rationale, *result.next_steps, *result.conditions]
        ).lower()
        if result.compliance_passed:
            if not any(k in text for k in ["encryption", "audit", "logging", "access control", "key management"]):
                raise ModelRetry("missing concrete security controls")
        return result

We add output validators that act as governance checkpoints after the model generates a response. We force the agent to identify meaningful risks and to explicitly reference concrete security controls when claiming compliance. If these constraints are violated, the validators raise `ModelRetry`, which triggers an automatic retry so the agent can self-correct.
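To make the validate-and-retry idea concrete, here is a minimal, library-free sketch of the control flow. It is an assumption-laden illustration, not PydanticAI's actual implementation: `RetryRequested`, `run_with_validation`, `require_security_controls`, and `fake_model` are all hypothetical names, and `fake_model` stands in for a real LLM call.

```python
from typing import Callable, List, Optional


class RetryRequested(Exception):
    """Hypothetical signal a validator raises to request another attempt."""


def run_with_validation(
    generate: Callable[[Optional[str]], dict],
    validators: List[Callable[[dict], dict]],
    max_retries: int = 2,
) -> dict:
    """Call `generate`, validate its output, and retry with feedback on failure."""
    feedback: Optional[str] = None
    for _attempt in range(max_retries + 1):
        candidate = generate(feedback)
        try:
            for validate in validators:
                candidate = validate(candidate)
            return candidate
        except RetryRequested as exc:
            # The validator's message becomes feedback for the next attempt.
            feedback = str(exc)
    raise RuntimeError(f"validation failed after {max_retries} retries: {feedback}")


def require_security_controls(output: dict) -> dict:
    """Toy governance check: a compliant decision must name a concrete control."""
    text = output["rationale"].lower()
    if output["compliance_passed"] and not any(k in text for k in ("encryption", "audit")):
        raise RetryRequested("missing concrete security controls")
    return output


def fake_model(feedback: Optional[str]) -> dict:
    """Stand-in for an LLM call: corrects its answer once it receives feedback."""
    if feedback is None:
        return {"compliance_passed": True, "rationale": "Looks fine."}
    return {"compliance_passed": True, "rationale": "Encryption and audit logging are enabled."}


result = run_with_validation(fake_model, [require_security_controls])
print(result["rationale"])
```

The key design point is that a failed check is not a dead end: the error text is fed back into the next generation, and the loop gives up with a hard error only after the retry budget is spent.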

    async def run_decision():
        # Publish the context globally so the output validators can read it.
        global CURRENT_DEPS
        CURRENT_DEPS = DecisionContext(
            company_policy=(
                "No deployment of systems handling personal data or transaction metadata "
                "without encryption, audit logging, and least-privilege access control."
            )
        )

        prompt = """
    Decision request:
    Deploy an AI-powered customer analytics dashboard using a third-party cloud vendor.
    The system processes user behavior and transaction metadata.
    Audit logging is not implemented and customer-managed keys are uncertain.
    """

        result = await agent.run(prompt, deps=CURRENT_DEPS)
        return result.output

    decision = asyncio.run(run_decision())

    from pprint import pprint
    pprint(decision.model_dump())

We run the agent on a realistic decision request and capture the validated structured output. We demonstrate how the agent evaluates risk, policy compliance, and confidence before producing a final decision. This completes the end-to-end contract-first decision workflow in a production-style setup.
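Because `model_dump()` returns a plain dictionary, downstream code can branch on the validated fields without re-parsing free text. The sketch below shows one possible way to route and log such a dictionary. The `route_decision` and `to_audit_record` helpers, the queue names, and the `sample` values are all made up for illustration; they are not output from the agent.

```python
import json


def route_decision(decision: dict) -> str:
    """Map a validated decision dict to a hypothetical downstream queue name."""
    if decision["decision"] == "reject":
        return "rejections"
    if decision["decision"] == "approve_with_conditions":
        return "conditional-approvals"
    return "approvals"


def to_audit_record(decision: dict) -> str:
    """Serialize with sorted keys so records are stable and easy to diff."""
    return json.dumps(decision, sort_keys=True)


# Illustrative payload only; a real one would come from decision.model_dump().
sample = {
    "decision": "reject",
    "confidence": 0.55,
    "compliance_passed": False,
    "conditions": [],
    "timestamp_unix": 1700000000,
}

queue = route_decision(sample)
record = to_audit_record(sample)
print(queue)
print(record)
```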

In conclusion, we demonstrate how to move from free-form LLM outputs to governed, reliable decision systems using PydanticAI. We show that by enforcing hard contracts at the schema level, we can automatically align decisions with policy requirements, risk severity, and confidence realism without manual prompt tuning. This approach allows us to build agents that fail safely, self-correct when constraints are violated, and produce auditable, structured outputs that downstream systems can trust. Ultimately, contract-first agent design enables us to deploy agentic AI as a dependable decision layer within production and enterprise environments.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.