We've reached the end of our series on deploying to OCI using the Hackathon Starter Kit.

For this final article, we will see how to deploy an application using Helidon (Java), the MySQL REST Service, and OCI GenAI with LangChain4j.

We use Helidon because it's a cool, open-source framework developed by Oracle. It's lightweight and fast. The latest version, 4.3.0, includes AI-related features such as LangChain4j integration, which allows us to add AI capabilities to our application via OCI AI Services.

Prerequisites

The first thing we need to do is verify that we have the required Java and Maven versions: Java 21 and a Maven installation that runs on Java 21.

We also need our OCI config file to access the AI Services.

We need the Sakila sample database.

And finally, we need the code of our application, which is available on GitHub: helidon-mrs-ai.

Java 21 and Maven

We check what is installed on our compute instance (see part 3 of the series):

```
[opc@webserver ~]$ java -version
openjdk version "17.0.17" 2025-10-21 LTS
OpenJDK Runtime Environment (Red_Hat-17.0.17.0.10-1.0.1) (build 17.0.17+10-LTS)
OpenJDK 64-Bit Server VM (Red_Hat-17.0.17.0.10-1.0.1) (build 17.0.17+10-LTS, mixed mode, sharing)
```

Helidon 4.3.0 requires Java 21, so, as we saw in part 3, we need to change the default Java to 21:

```
[opc@webserver ~]$ sudo update-alternatives --config java

There are 2 programs which provide 'java'.

  Selection    Command
-----------------------------------------------
*+ 1           java-17-openjdk.aarch64 (/usr/lib/jvm/java-17-openjdk-17.0.17.0.10-1.0.1.el9.aarch64/bin/java)
   2           java-21-openjdk.aarch64 (/usr/lib/jvm/java-21-openjdk-21.0.9.0.10-1.0.1.el9.aarch64/bin/java)

Enter to keep the current selection[+], or type selection number: 2

[opc@webserver ~]$ java -version
openjdk version "21.0.9" 2025-10-21 LTS
OpenJDK Runtime Environment (Red_Hat-21.0.9.0.10-1.0.1) (build 21.0.9+10-LTS)
OpenJDK 64-Bit Server VM (Red_Hat-21.0.9.0.10-1.0.1) (build 21.0.9+10-LTS, mixed mode, sharing)
```

Now, let's verify the Maven version:

```
[opc@webserver ~]$ mvn -version
Apache Maven 3.6.3 (Red Hat 3.6.3-22)
Maven home: /usr/share/maven
Java version: 17.0.17, vendor: Red Hat, Inc., runtime: /usr/lib/jvm/java-17-openjdk-17.0.17.0.10-1.0.1.el9.aarch64
Default locale: en_US, platform encoding: UTF-8
OS name: "linux", version: "6.12.0-105.51.5.el9uek.aarch64", arch: "aarch64", family: "unix"
```

Maven still runs on Java 17, which isn't what we need, so we have to install the Maven package built for Java 21:

```
[opc@webserver ~]$ sudo dnf install maven-openjdk21
...
[opc@webserver ~]$ mvn -version
Apache Maven 3.6.3 (Red Hat 3.6.3-22)
Maven home: /usr/share/maven
Java version: 21.0.9, vendor: Red Hat, Inc., runtime: /usr/lib/jvm/java-21-openjdk-21.0.9.0.10-1.0.1.el9.aarch64
Default locale: en_US, platform encoding: UTF-8
OS name: "linux", version: "6.12.0-105.51.5.el9uek.aarch64", arch: "aarch64", family: "unix"
```

Excellent, this is what we need; we can proceed to the next step.
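If you want to double-check from Java itself which runtime the java command now points to, here is a minimal sketch (the class name JavaVersionCheck is just for illustration, it is not part of the project). It relies only on Runtime.version() and compares the feature release with the 21 that Helidon 4.3.0 needs.

```java
public class JavaVersionCheck {
    // Helidon 4.x needs at least this feature (major) release.
    static final int REQUIRED_FEATURE = 21;

    // True if the feature release of 'version' is at least 'minimum'.
    static boolean meetsMinimum(Runtime.Version version, int minimum) {
        return version.feature() >= minimum;
    }

    public static void main(String[] args) {
        Runtime.Version current = Runtime.version();
        if (meetsMinimum(current, REQUIRED_FEATURE)) {
            System.out.println("OK: running on Java " + current);
        } else {
            System.err.println("Java " + REQUIRED_FEATURE + "+ required, found " + current);
            System.exit(1);
        }
    }
}
```

Saved as JavaVersionCheck.java, it can be run directly with java JavaVersionCheck.java (the single-file source launcher); it exits with status 1 when the default java is still older than 21.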

OCI Config

To access the GenAI Services on OCI, we need to provide some information to authenticate. The easiest way is to generate an OCI config file.

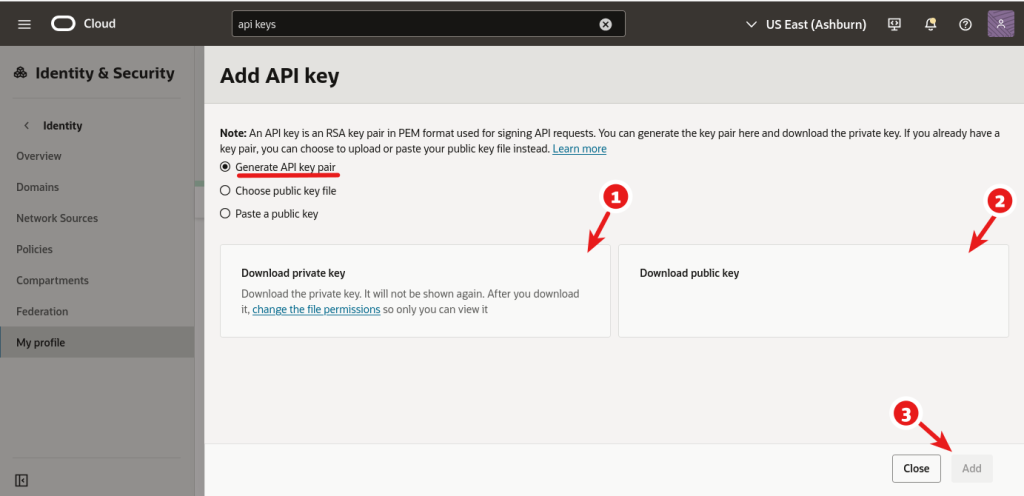

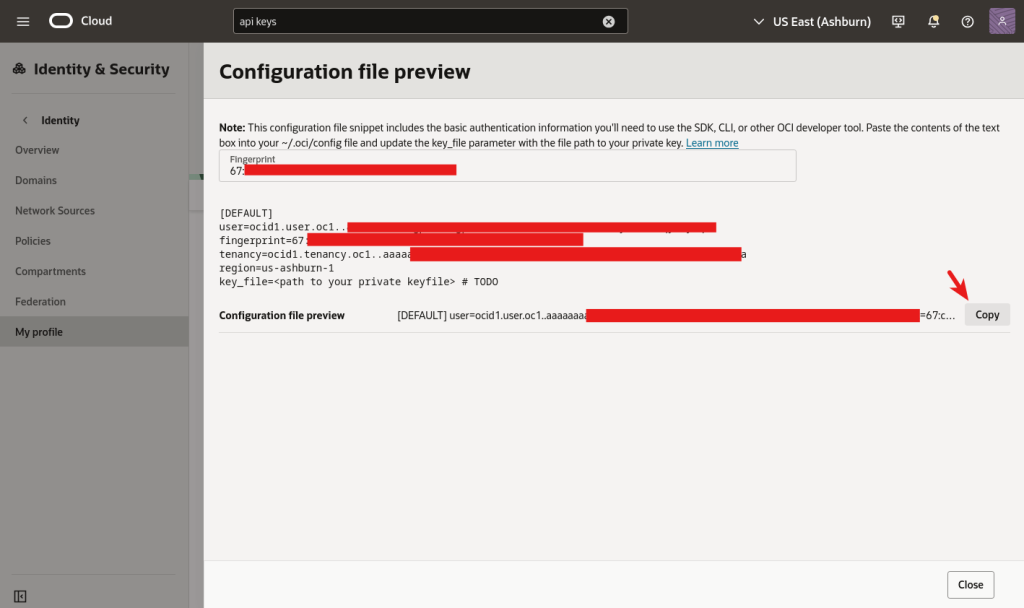

It’s worthwhile to use the OCI Console and use an present or create a brand new API Key:

Now, we need to copy the private key to our compute instance (using ssh, see part 2). On the compute instance, we create the ~/.oci folder and the config file, into which we paste the configuration:

```
[laptop]$ scp -i key.pem opc@:
[laptop]$ ssh -i key.pem opc@
[opc@webserver ~]$ mkdir ~/.oci
[opc@webserver ~]$ vi ~/.oci/config
[opc@webserver ~]$ chmod 600
```

Don't forget to update the key_file entry in the ~/.oci/config file to match the path of the API private key, such as /home/opc/my_priv.pem.
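Before moving on, it can be handy to check that the [DEFAULT] profile is complete and that key_file points to a readable, private key. The following is a minimal sketch, not part of helidon-mrs-ai: it assumes the usual API-key profile keys (user, fingerprint, tenancy, region, key_file) and a Linux file system with POSIX permissions, and its INI parsing is deliberately simplistic.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.PosixFilePermission;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class OciConfigCheck {
    // Keys expected in an API-key profile of ~/.oci/config.
    static final List<String> REQUIRED = List.of("user", "fingerprint", "tenancy", "region", "key_file");

    // Minimal INI-style reader: returns the key=value pairs of one [profile] section.
    static Map<String, String> readProfile(List<String> lines, String profile) {
        Map<String, String> values = new LinkedHashMap<>();
        String section = null;
        for (String raw : lines) {
            String line = raw.strip();
            if (line.isEmpty() || line.startsWith("#")) continue; // skip blanks and comments
            if (line.startsWith("[") && line.endsWith("]")) {
                section = line.substring(1, line.length() - 1).strip();
            } else if (profile.equals(section)) {
                int eq = line.indexOf('=');
                if (eq > 0) values.put(line.substring(0, eq).strip(), line.substring(eq + 1).strip());
            }
        }
        return values;
    }

    // Lists the required keys that are absent or empty, in REQUIRED order.
    static List<String> missingKeys(Map<String, String> values) {
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED) {
            if (!values.containsKey(key) || values.get(key).isEmpty()) missing.add(key);
        }
        return missing;
    }

    // Expands a leading "~/" to the user's home directory.
    static Path expandHome(String path) {
        return path.startsWith("~/")
                ? Path.of(System.getProperty("user.home"), path.substring(2))
                : Path.of(path);
    }

    public static void main(String[] args) throws IOException {
        Path config = Path.of(System.getProperty("user.home"), ".oci", "config");
        Map<String, String> values = readProfile(Files.readAllLines(config), "DEFAULT");
        List<String> missing = missingKeys(values);
        if (!missing.isEmpty()) {
            System.err.println("Missing keys in [DEFAULT]: " + missing);
            System.exit(1);
        }
        Path key = expandHome(values.get("key_file"));
        if (!Files.isReadable(key)) {
            System.err.println("key_file is not readable: " + key);
            System.exit(1);
        }
        // POSIX only (Linux compute instance): warn if group or others can read the key.
        Set<PosixFilePermission> perms = Files.getPosixFilePermissions(key);
        if (perms.contains(PosixFilePermission.GROUP_READ) || perms.contains(PosixFilePermission.OTHERS_READ)) {
            System.err.println("Warning: " + key + " is readable by group/others, consider chmod 600");
        }
        System.out.println("Profile DEFAULT looks complete, key_file = " + key);
    }
}
```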

Sakila Database

We'll use the Sakila database in our application. This is how to install it:

```
[opc@webserver ~]$ wget https://downloads.mysql.com/docs/sakila-db.tar.gz
[opc@webserver ~]$ tar zxvf sakila-db.tar.gz
[opc@webserver ~]$ mysqlsh admin@
SQL > source 'sakila-db/sakila-schema.sql'
SQL > source 'sakila-db/sakila-data.sql'
```

Installing the code

Finally, we will deploy the code to our compute instance. We first need to install git:

```
[opc@webserver ~]$ sudo dnf install git -y
```

And we fetch the code:

```
[opc@webserver ~]$ git clone https://github.com/lefred/helidon-mrs-ai.git
```

The Database

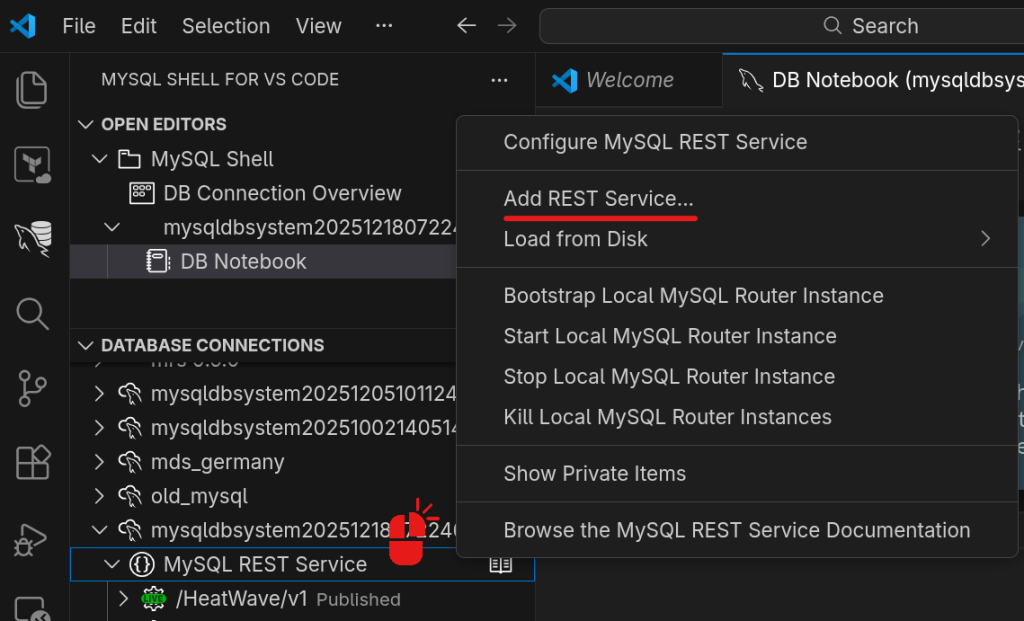

Now we have to add the Sakila schema to the REST Service (see half 8) and create a brand new desk that may retailer our customers’ credentials:

SQL > use sakila;

SQL> CREATE TABLE `customers` (

`user_id` int unsigned NOT NULL AUTO_INCREMENT,

`firstname` varchar(30) DEFAULT NULL,

`lastname` varchar(30) DEFAULT NULL,

`username` varchar(30) DEFAULT NULL,

`electronic mail` varchar(50) DEFAULT NULL,

`password_hash` varchar(60) DEFAULT NULL,

`updated_at` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP

ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`user_id`)

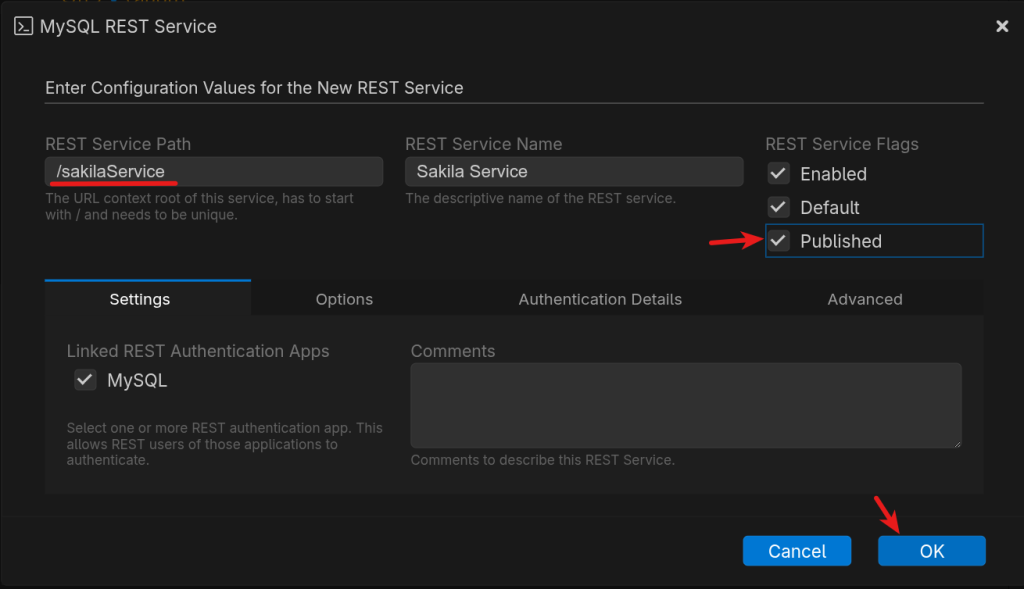

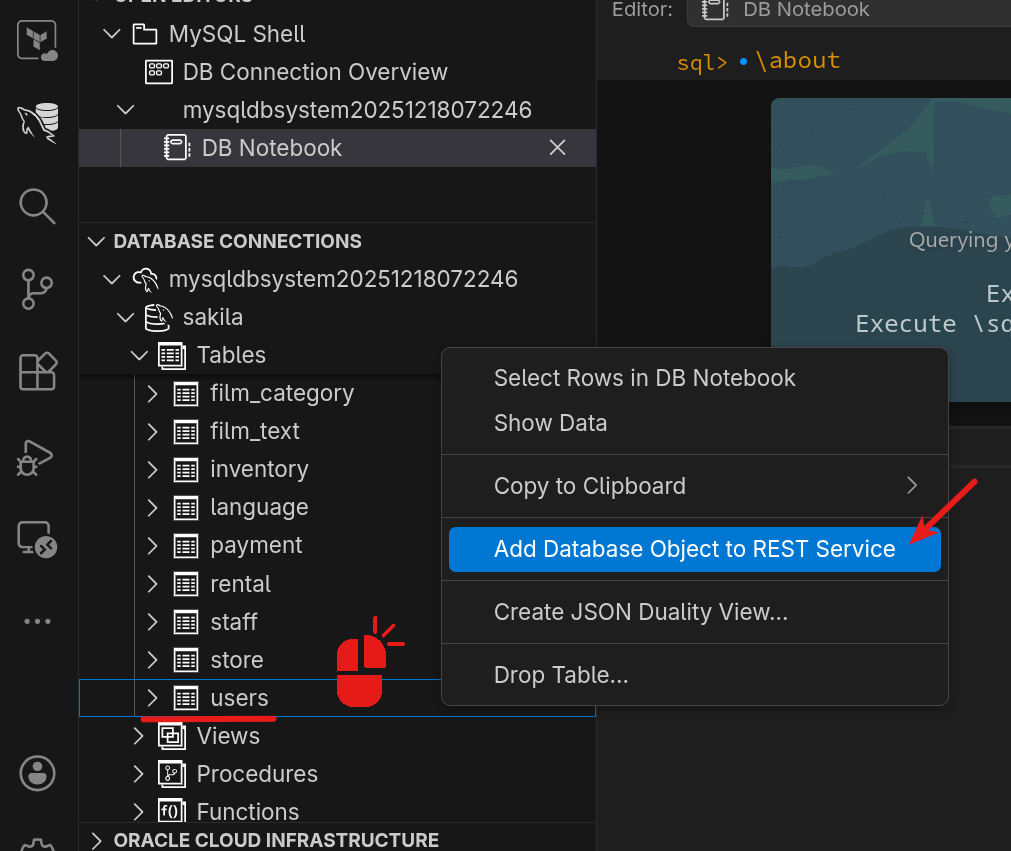

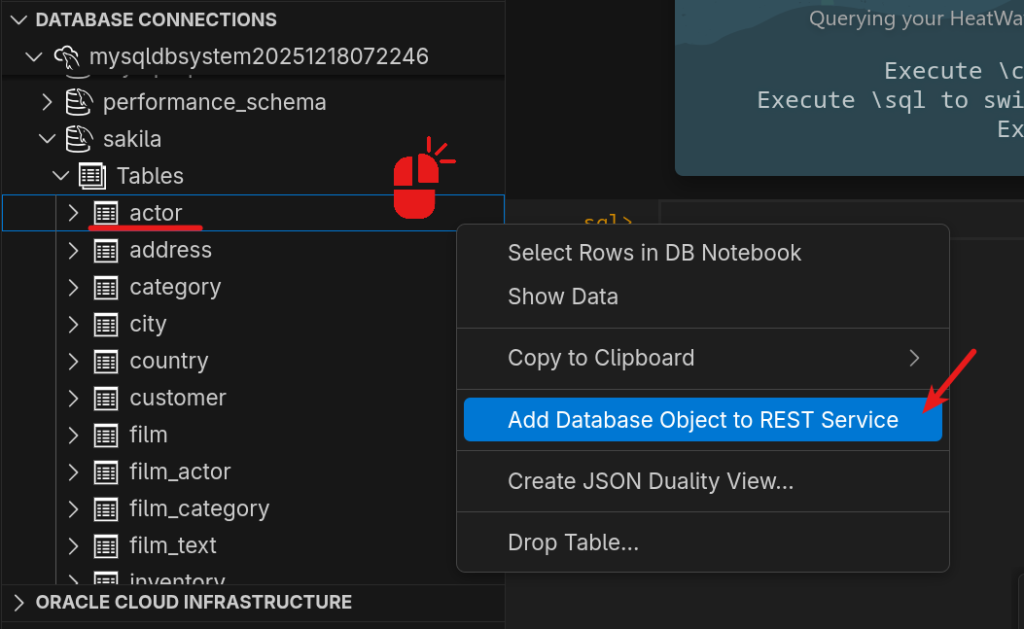

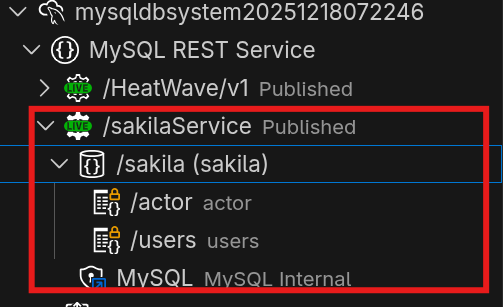

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ciWe create a brand new service, and we add the brand new customers and the actor desk into it:

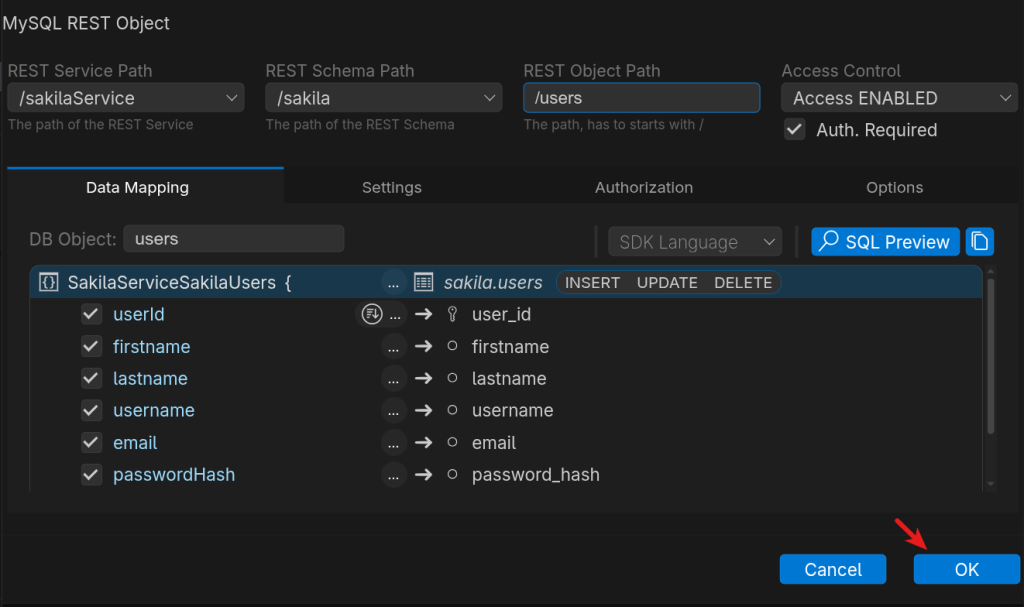

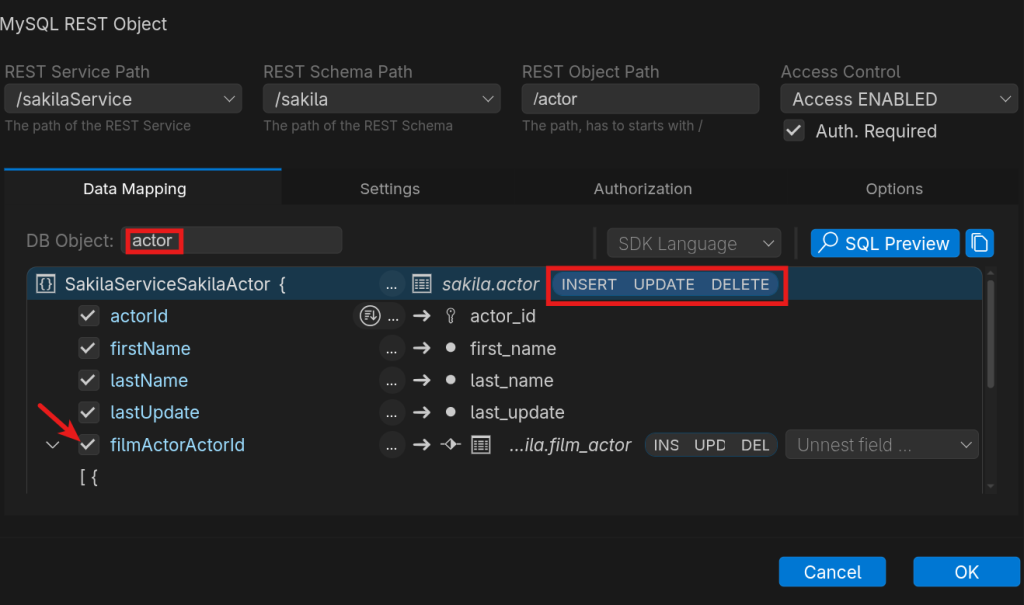

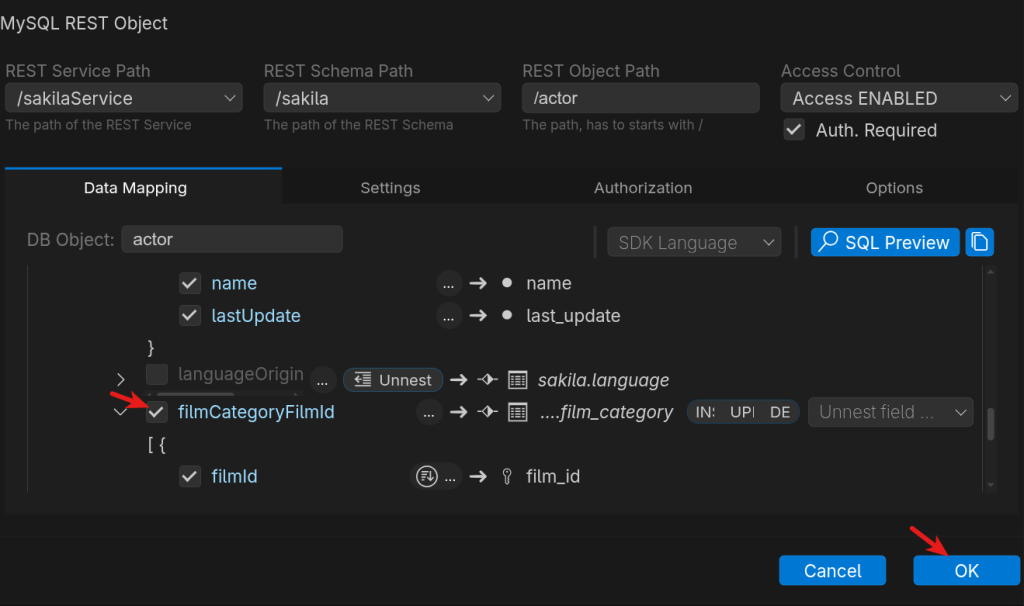

For the actor table, we also allow DML (INSERT, UPDATE, DELETE), and we select several extra links to other tables, such as the films, the language, and the category:

And then, everything is ready for the REST Service:

Configuring the app

Now, we will configure the application before building it. In the helidon-mrs-ai/src/main/resources/ directory, there's a template (application.yaml.template) that we will use:

```
[opc@webserver ~]$ cd helidon-mrs-ai/src/main/resources
[opc@webserver resources]$ cp application.yaml.template application.yaml
```

We'll now modify the content of the application.yaml file with the correct information for our environment.

In the mrs section, the URL must match the MySQL HeatWave DB System's IP and the service path we created. In my case, it is:

```
mrs:
  url: "https://10.0.1.74/sakilaService"
```

For the langchain4j entries, pay attention to your region and compartment-id. You can find this information in the OCI Console, in the Analytics & AI section (see part 6, where we looked at the View code output of the chat example).

Don't forget to use a model that is also available in your region.
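Once application.yaml is filled in, you may want to confirm from the compute instance that the mrs url actually answers before building the app. This minimal sketch uses only the JDK's java.net.http client. The schema path /sakila and the object path /actor are assumptions based on the default request paths MRS proposes, so adjust them to what you configured; offset and limit are assumed to be the MRS paging query parameters. If the endpoint presents a self-signed certificate, the JVM will reject it unless that certificate is imported into a truststore, and if the objects require authentication, expect an HTTP 401 rather than data; the sketch deliberately does not disable any TLS checks.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class MrsSmokeTest {
    // Joins the service URL (mrs.url), schema path and object path, then adds paging parameters.
    static URI objectUri(String serviceUrl, String schemaPath, String objectPath, int offset, int limit) {
        String base = serviceUrl.endsWith("/")
                ? serviceUrl.substring(0, serviceUrl.length() - 1)
                : serviceUrl;
        return URI.create(base + schemaPath + objectPath + "?offset=" + offset + "&limit=" + limit);
    }

    public static void main(String[] args) throws Exception {
        // Defaults to the value used in this article; pass your own mrs.url as the first argument.
        String serviceUrl = args.length > 0 ? args[0] : "https://10.0.1.74/sakilaService";
        // ASSUMPTION: schema exposed as /sakila and the actor table as /actor.
        URI uri = objectUri(serviceUrl, "/sakila", "/actor", 0, 5);

        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(5))
                .build();
        HttpRequest request = HttpRequest.newBuilder(uri)
                .header("Accept", "application/json")
                .GET()
                .build();

        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(uri + " -> HTTP " + response.statusCode());
        System.out.println(response.body());
    }
}
```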

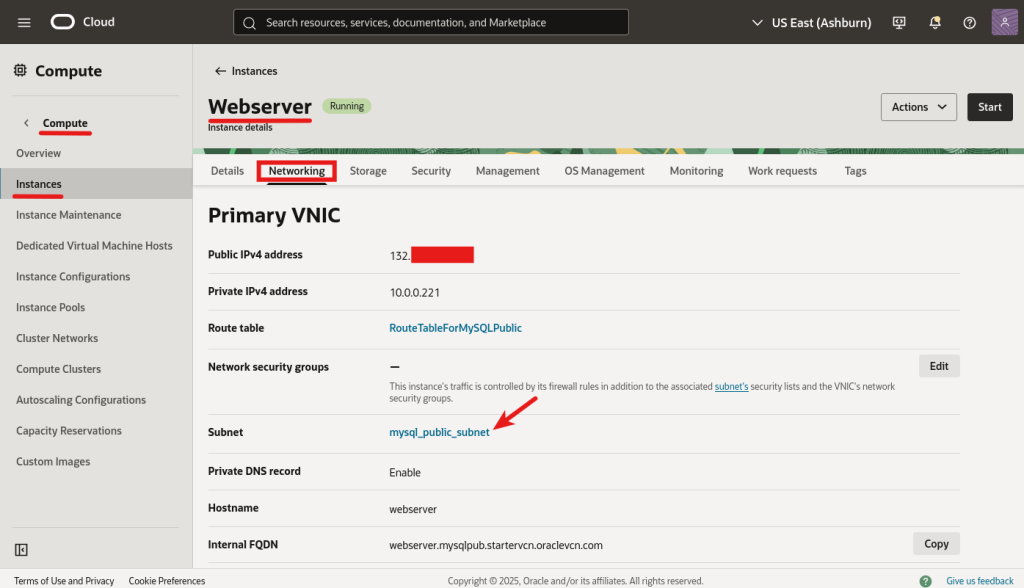

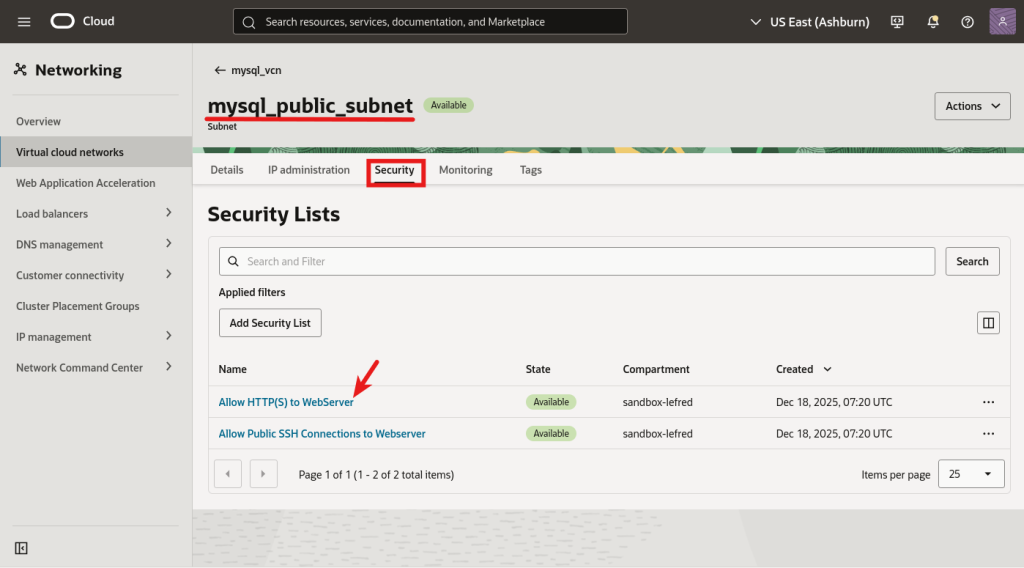

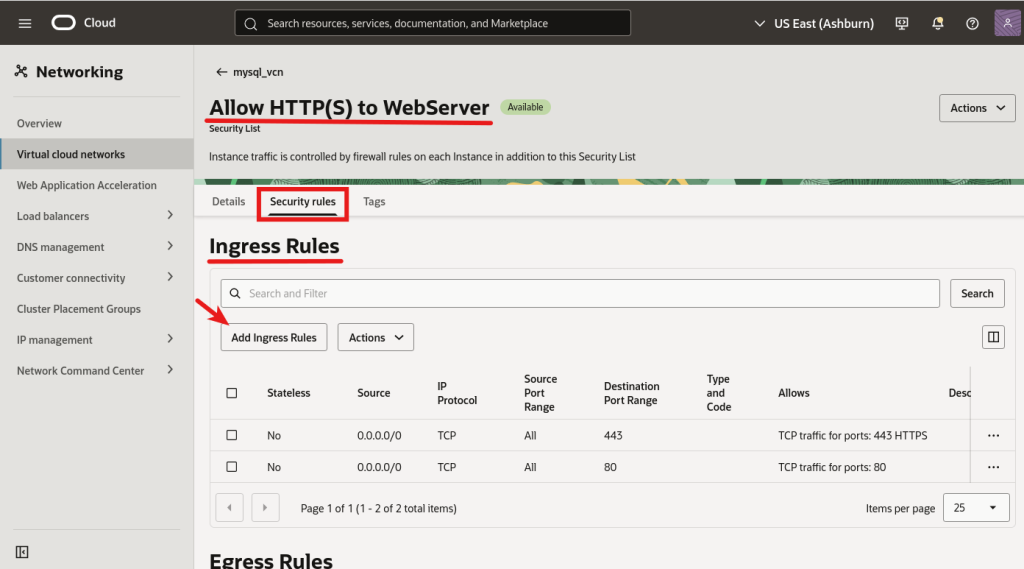

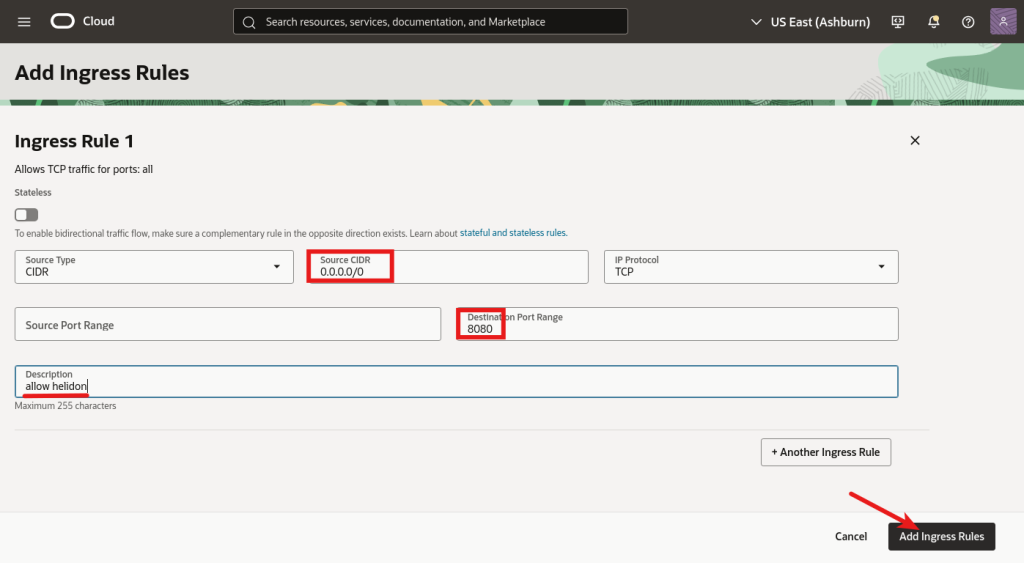

You may have noticed in the configuration file that port 8080 is used, so you need to allow connections to it from the Internet in OCI.

Once access is allowed in OCI, we also need to allow it in the compute instance's local firewall:

```
[opc@webserver ~]$ sudo firewall-cmd --zone=public --permanent --add-port=8080/tcp
success
[opc@webserver ~]$ sudo firewall-cmd --reload
success
```

Helidon

Now we’re able to deploy our Helidon software:

[opc@webserver ~]$ cd ~/helidon-mrs-ai/

[opc@webserver helidon-mrs-ai]$ mvn clear set up -DskipTests bundleAs soon as constructed, we are able to run it:

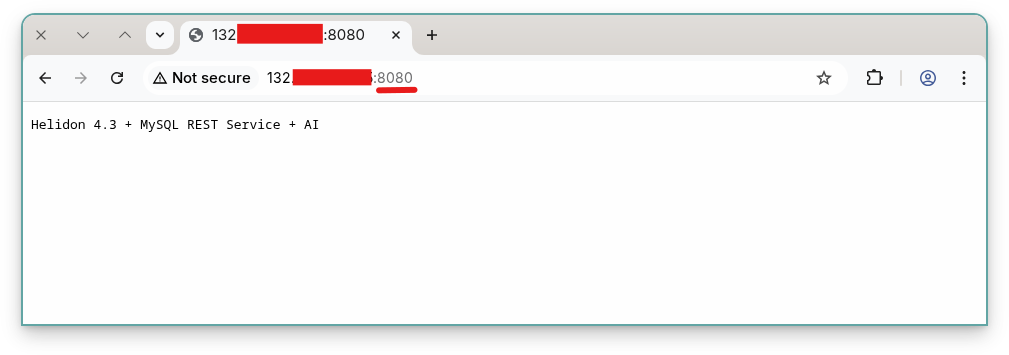

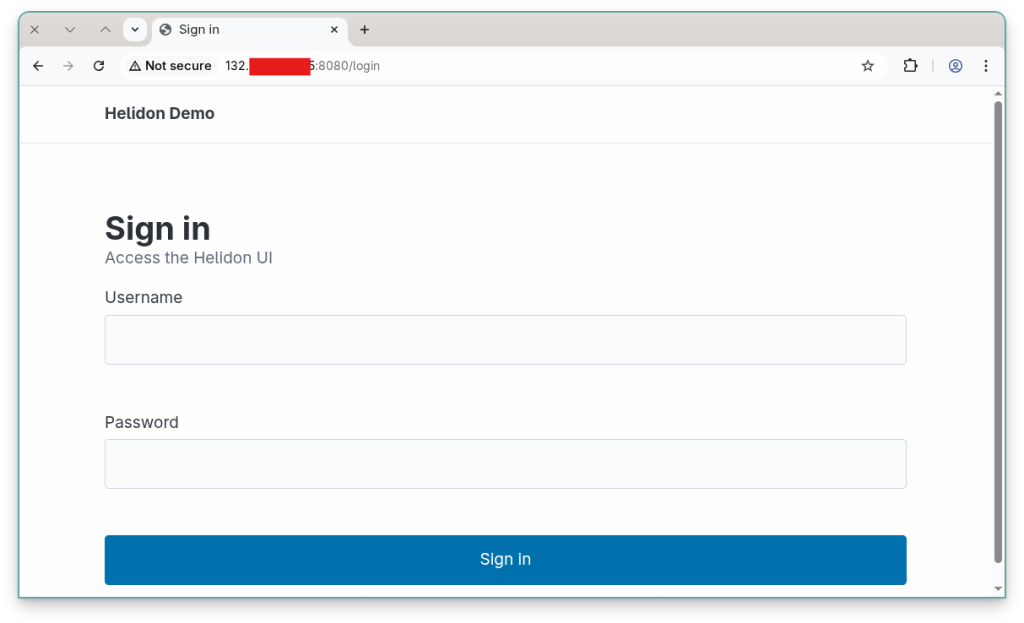

[opc@webserver helidon-mrs-ai]$ java -jar goal/helidon-mrs-ai.jarWe will entry the general public IP of our webserver (the compute occasion) on a browser utilizing port 8080:

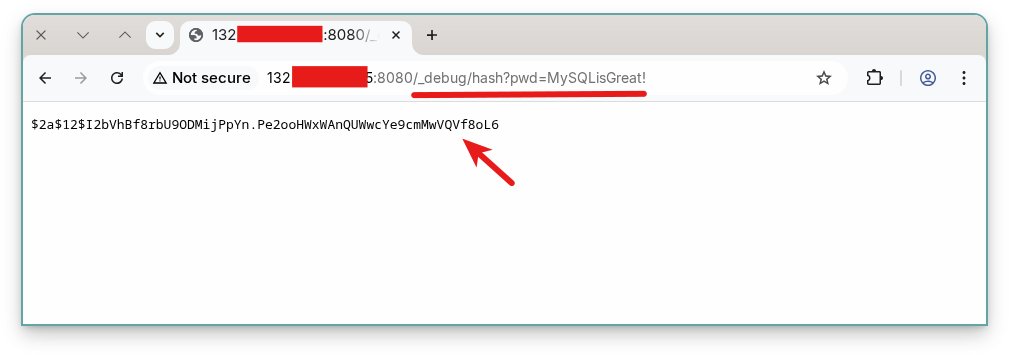
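If the page doesn't load, it helps to separate a network problem from an application problem. The hypothetical PortCheck class below simply tries to open a TCP connection with a timeout: run it on the compute instance against localhost to confirm that Helidon is listening, then from your laptop against the public IP to confirm that both the OCI ingress rule and firewalld let port 8080 through.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {
    // True if a TCP connection to host:port is established within timeoutMillis.
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or unknown host: treat all as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 8080;
        boolean open = isOpen(host, port, 3000);
        System.out.println(host + ":" + port + (open ? " is reachable" : " is NOT reachable"));
    }
}
```

For example, java PortCheck.java PUBLIC_IP 8080 from the laptop (PUBLIC_IP being a placeholder for your instance's address). A successful check on localhost combined with a failure from outside points at the OCI or firewalld rules rather than at the application.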

As you may remember, we created a users table to store our users; we still need to create one user. To store its password, we first need to generate its hash. We have an endpoint that can generate such a hash: _debug/hash?pwd=
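The hash used below when creating the user starts with $2a$12$, which is the bcrypt format: a version, a two-digit cost factor (12 means 2^12 key-expansion rounds), a 22-character salt and a 31-character checksum, always 60 characters in total, which is why password_hash is a varchar(60). The minimal sketch below (BcryptInfo is a hypothetical class, not taken from the project) splits such a string into its parts. It also URL-encodes a password for the pwd query parameter, since characters such as &, # or + would otherwise be misread in the request.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class BcryptInfo {
    // $<version>$<2-digit cost>$<22-char salt><31-char checksum>, using bcrypt's ./A-Za-z0-9 alphabet.
    private static final Pattern BCRYPT =
            Pattern.compile("^\\$(2[abxy]?)\\$(\\d{2})\\$([./A-Za-z0-9]{22})([./A-Za-z0-9]{31})$");

    record Parts(String version, int cost, String salt, String checksum) {
        // Number of key-expansion rounds implied by the cost factor.
        long rounds() { return 1L << cost; }
    }

    static Parts parse(String hash) {
        Matcher m = BCRYPT.matcher(hash);
        if (!m.matches()) throw new IllegalArgumentException("not a bcrypt hash: " + hash);
        return new Parts(m.group(1), Integer.parseInt(m.group(2)), m.group(3), m.group(4));
    }

    // Query string for the hash endpoint; the password is URL-encoded as UTF-8.
    static String hashQuery(String password) {
        return "pwd=" + URLEncoder.encode(password, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String hash = "$2a$12$I2bVhBf8rbU9ODMijPpYn.Pe2ooHWxWAnQUWwcYe9cmMwVQVf8oL6";
        Parts p = parse(hash);
        System.out.println("length=" + hash.length() + " version=" + p.version()
                + " cost=" + p.cost() + " rounds=" + p.rounds());
        System.out.println(hashQuery("s3cret&more#1"));
    }
}
```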

We use that hash when creating our user:

```
SQL > use sakila
SQL > INSERT INTO users (firstname, username, password_hash)
      VALUES ('lefred', 'fred',
              '$2a$12$I2bVhBf8rbU9ODMijPpYn.Pe2ooHWxWAnQUWwcYe9cmMwVQVf8oL6');
```

We can now access the /ui endpoint, which redirects us to the login page:

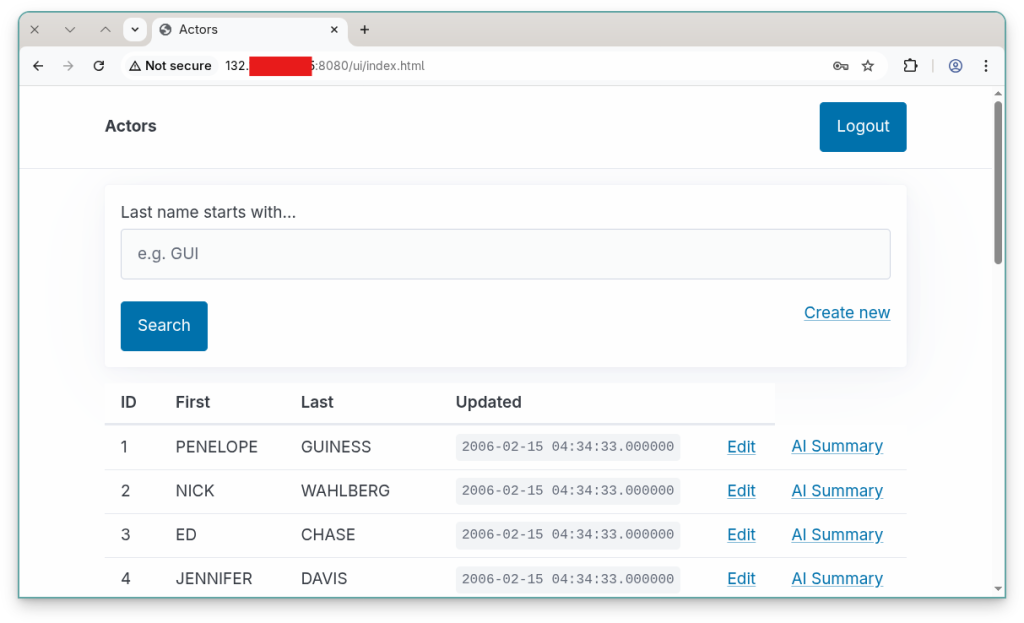

And once successfully logged in, we can access the application:

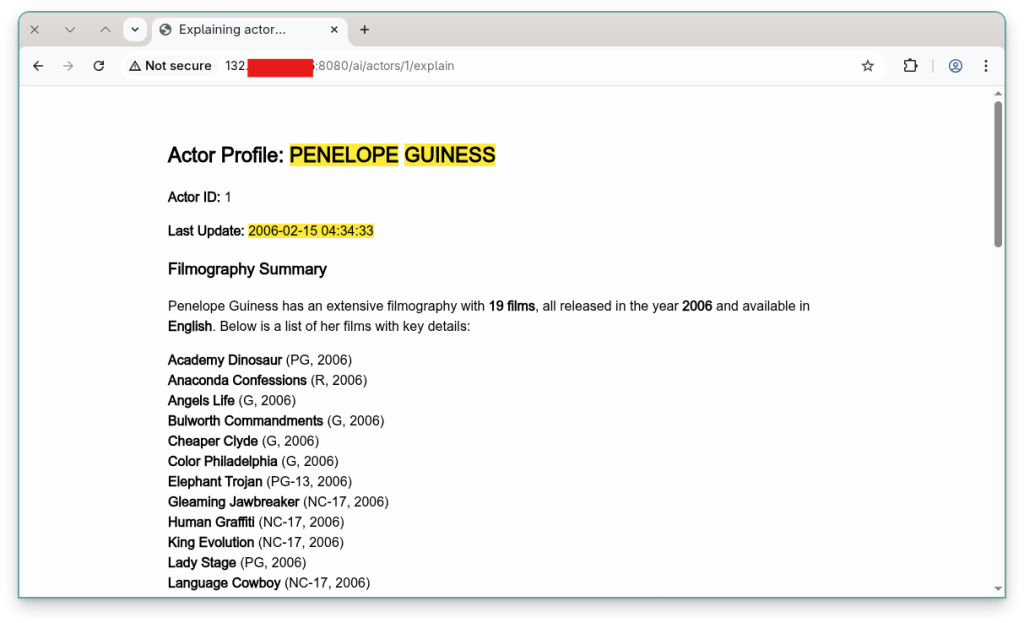

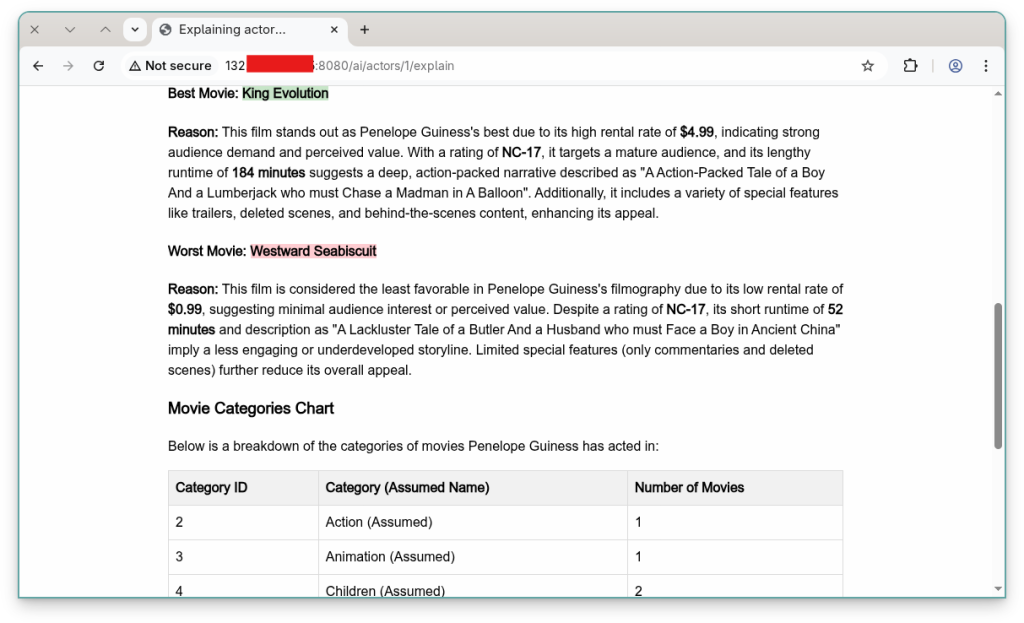

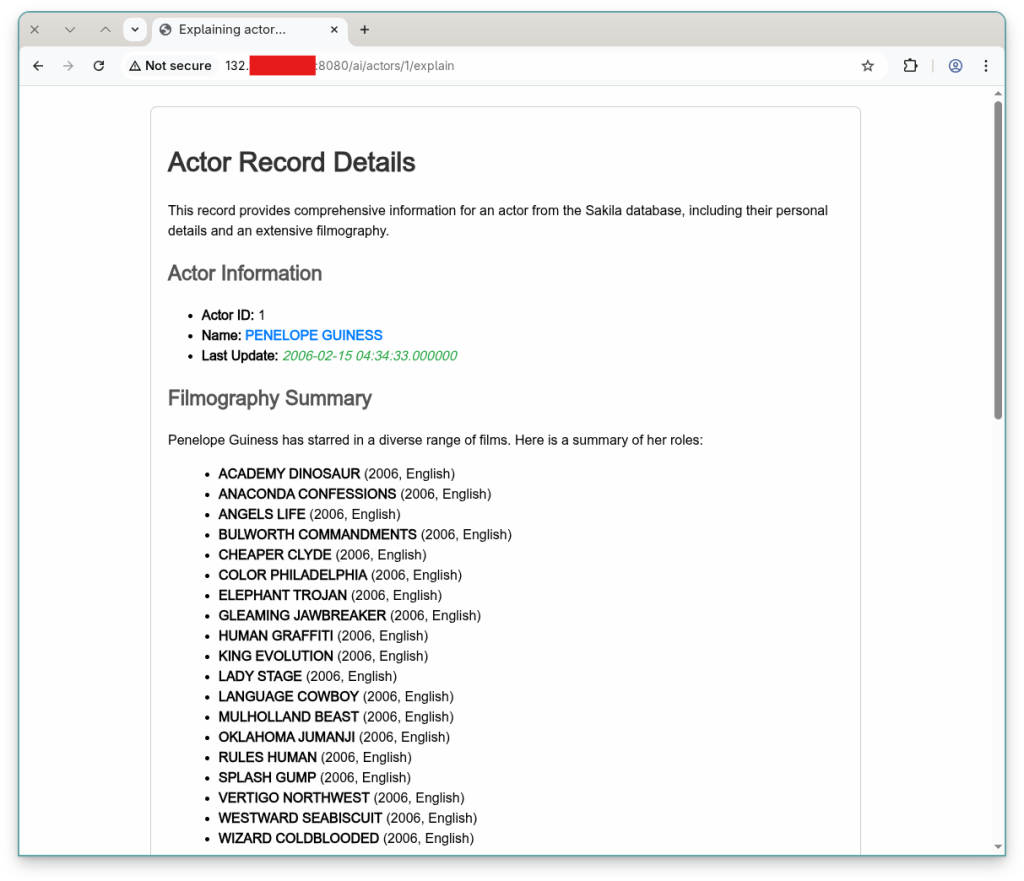

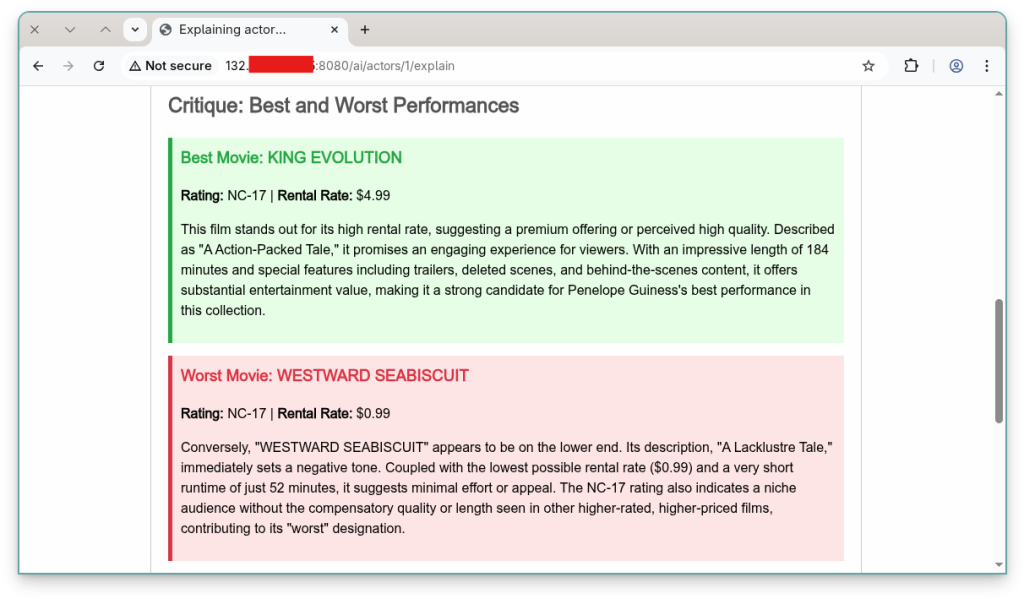

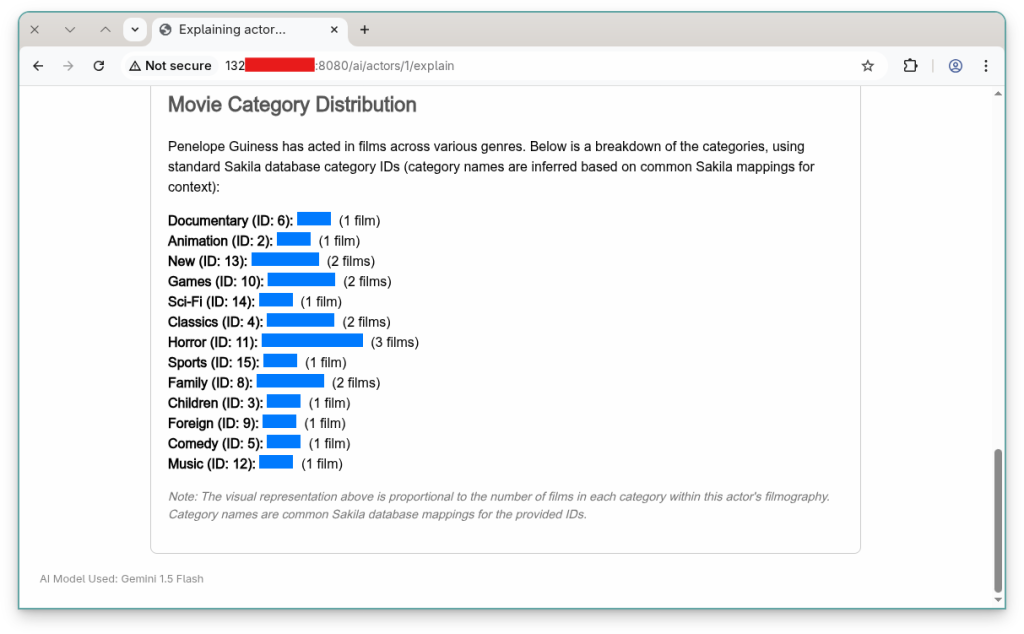
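Behind the AI Summary button, the application has to turn the actor data returned by the REST Service into a prompt before LangChain4j sends it to the OCI GenAI model. The actual prompt lives in the helidon-mrs-ai repository and may look quite different; the hypothetical helper below only illustrates the idea, uses placeholder names rather than real Sakila rows, and does not call any LangChain4j API.

```java
import java.util.List;

public class ActorSummaryPrompt {
    // HYPOTHETICAL helper, not taken from helidon-mrs-ai: builds a plain-text prompt
    // from an actor's name and film titles, keeping at most maxFilms titles.
    static String buildPrompt(String firstName, String lastName, List<String> filmTitles, int maxFilms) {
        List<String> shown = filmTitles.subList(0, Math.min(maxFilms, filmTitles.size()));
        StringBuilder sb = new StringBuilder();
        sb.append("Write a short, neutral summary of the actor ")
          .append(firstName).append(' ').append(lastName)
          .append(", based only on the films listed below.\n");
        sb.append("Films (").append(shown.size()).append(" of ").append(filmTitles.size()).append("):\n");
        for (String title : shown) {
            sb.append("- ").append(title).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Placeholder values, not real Sakila data.
        System.out.print(buildPrompt("JANE", "DOE", List.of("FILM A", "FILM B", "FILM C"), 2));
    }
}
```

Capping the list of films is a simple way to bound the prompt size, and therefore the token usage, for actors with a long filmography.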

If we select the AI Summary for an actor, we get a summary generated by the model we configured. The following example was generated using xai.grok-3:

The following summary was generated using google.gemini-2.5-flash:

Conclusion

This last step wraps up the whole "Deploying on OCI with the Starter Kit" journey by putting all the building blocks together: a Helidon (Java) application running on a compute instance, a MySQL HeatWave database exposed through the MySQL REST Service, and OCI GenAI consumed via LangChain4j to add an AI-powered summary feature.

We saw how to deploy and start writing an application on OCI and take advantage of the power of the available LLMs.

I hope you enjoyed the journey and that you're ready to start writing your own app… happy coding!