Ten years ago this week, I wrote a provocative and bold post that blew up and made it to the top spot on HackerNews. I had just joined Magic Pony, a nascent startup, and I remember the founders Rob and Zehan scolding me for offending the very community we were part of, and – of course – the deep learning developers we wanted to recruit.

Deep Learning is Easy – Learn Something Harder

Caveat: This post is meant to address people who are completely new to deep learning and are planning an entry into this field. The intention is to help them think critically about the complexity of the field, and to help them tell apart things that are trivial from things that are…

The post aged in some very, hmm, interesting ways. So I thought it would be good to reflect on what I wrote, things I got very wrong, and how things turned out.

- 🤡 Hilariously poor predictions on low hanging fruit and the impact of architecture tweaks

- 🎯 some insightful thoughts on how simplicity = power

- 🤡 predictions on development of Bayesian deep learning, MCMC

- 🎯 some good advice nudging people to generative models

- ⚖️ PhD vs company residency: What do I think now?

- 🍿 Who’s Wrong Today? Am I wrong? Are We All Wrong?

Let’s start with the most obvious blind spot in hindsight:

🤡’s predictions on architecture and scaling

There’s also a feeling in the field that low-hanging fruit for deep learning is disappearing. […] Insight into how to make these methods actually work are unlikely to come in the form of improvements to neural network architectures alone.

Ouch. Now this one has aged like my great-uncle-in-law’s wine (he didn't have barrels, so he cleaned up an old wheelie bin to serve as a fermentation vat). Of course today, 40% of people credit the transformer architecture for everything that's happening, and 60% credit scaling laws, which are essentially existence proofs of stupendously expensive low hanging fruit.

But there’s more I didn't see back then: I – and others – wrote a lot about why GANs don't work, how to understand them better, and how to fix them using maths. In the end, what made them work well in practice was ideas like BigGAN, which largely used architectural tweaks rather than foundational mathematical changes. On the other hand, what made SRGAN work was thinking deeply about the loss function and making a fundamental change – a change that has been universally adopted in almost all follow-on work.

Generally, lots of beautiful ideas were steamrolled by the unexplainably and unreasonably good inductive biases of the simplest methods. I – and many others – wrote about modeling invariances and sure, geometric deep learning is a thing in the community, but evidence is mounting that deliberate, theoretically inspired model designs play a limited role. Even something as successful as the convolution, once thought to be indispensable for image processing, is at risk of going the way of the dodo – at least at the largest scales.

In hindsight: There’s a lot of stuff in deep learning that we don't understand nearly enough. Yet it works. Some simple things have a surprisingly huge impact, and mathematical rigour doesn't always help. The bitter lesson is bitter for a reason (maybe it was the wheelie bin). Sometimes things work for reasons completely unrelated to why we thought they would work. Sometimes people are right for the wrong reason. I was certainly wrong, and for the wrong reason, multiple times. Have we run out of low hanging fruit now? Are we entering “the era of research with big compute” as Ilya said? Is Yann LeCun right to call LLMs a dead end today? (Pop some 🍿 in the microwave and read till the end for more)

🎯 “Deep learning is powerful exactly because it makes hard things easy”

Okay, this was a great insight. And good insight is often completely obvious in hindsight. The incredible power of deep learning, defined as the holy trinity of automatic differentiation, stochastic gradient descent and GPU libraries, is that it took something PhD students did and turned it into something 16-year-olds can play with. They don't need to know what a gradient is, not really, much less implement one. You don't need to open The Matrix Cookbook 100 times a day to remember which way the transpose is supposed to be.
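
To make two thirds of that trinity concrete, here is a minimal sketch in plain Python (no GPU library, so the third leg is missing). It is only an illustration under stated assumptions: the names `Dual`, `grad`, `loss` and `fit` are hypothetical, the toy task (recovering the slope 3 of a line) is made up, and real frameworks use reverse-mode differentiation over tensors rather than this scalar forward-mode trick. The point is that the person writing `loss` never writes down a derivative.

```python
import random


class Dual:
    """A number that carries its value and its derivative w.r.t. one chosen input."""

    def __init__(self, value, deriv=0.0):
        self.value = value
        self.deriv = deriv

    @staticmethod
    def _lift(other):
        # Plain numbers are constants: their derivative is zero.
        return other if isinstance(other, Dual) else Dual(other)

    def __add__(self, other):
        other = self._lift(other)
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __sub__(self, other):
        other = self._lift(other)
        return Dual(self.value - other.value, self.deriv - other.deriv)

    def __mul__(self, other):
        other = self._lift(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def grad(f):
    """Return a function that evaluates df/dw at w (forward-mode autodiff)."""
    def df(w, *args):
        return f(Dual(w, 1.0), *args).deriv
    return df


def loss(w, x, y):
    # Squared error of the one-parameter model y_hat = w * x.
    err = w * x - y
    return err * err


def fit(steps=200, lr=0.1, seed=0):
    """Stochastic gradient descent: one random example per step."""
    rng = random.Random(seed)
    dloss = grad(loss)
    w = 0.0
    for _ in range(steps):
        x = rng.uniform(-1.0, 1.0)
        y = 3.0 * x  # hypothetical ground truth: slope 3
        w -= lr * dloss(w, x, y)
    return w
```

Calling `fit()` should return a value close to 3. Swapping in a different `loss` requires no change to `grad` or `fit`, which is the lego-like property the paragraph above describes.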

At the start of my career, in 2007, I attended a Machine Learning Summer School. It was meant for PhD students and postdocs. I was among the youngest participants, only a Master’s student. Today, we run AI retreats for 16-18 year olds who work on projects like RL-based solutions to the no-three-in-line problem, or testing OOD behaviour of diffusion language models. Three projects are not far from publishable work, and one student is first author on a NeurIPS paper, though I had nothing to do with that.

In hindsight: the impact of making hard things easy should not be underestimated. This is where the biggest impact opportunities are. LLMs, too, are powerful because they make hard things a lot easier. This is also our core thesis at Affordable: LLMs will make extremely difficult types of programming – ones which kind of needed a specialised PhD to really understand – “easy”. Or at least accessible to mortal human software engineers.

🤡 Strikes Again? Probabilistic Programming and MCMC

OK so one of the big predictions I made is that

probabilistic programming could do for Bayesian ML what Theano has done for neural networks

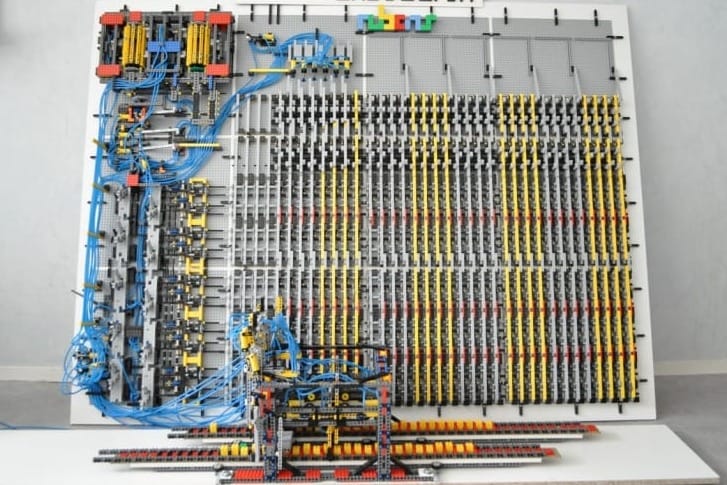

To say the least, that didn’t happen (if you’re wondering, Theano was an early deep learning framework, a precursor to today's PyTorch and JAX). But it was an appealing idea. If the main thing with deep learning is that it democratized “PhD level” machine learning by hiding complexity under lego-like simplicity, wouldn't it be great to do just that with the even more PhD level topic of Bayesian/probabilistic inference? Gradient descent and high dimensional vectors are hard enough to explain to a teenager, but good luck explaining KL divergences and Hamiltonian Monte Carlo. If we could abstract these things out the same way, and unlock their power, it could be great. Well, we couldn't abstract things to the same degree.

In hindsight: Commenters called it self-serving of me to predict that the areas in which I had expertise would happen to be the most important topics to work on in the future. And they were right! My background in information theory and probabilities did turn out to be quite useful, but it took me some time to let go of my Bayesian upbringing. I’ve reflected on this in my post on secular Bayesianism in 2019.

🎯 Generative Modeling

In the post I suggested people learn “something harder” instead of – or in addition to – deep learning. One of those areas I encouraged people to look at was generative modelling. I gave GANs and Variational Autoencoders as examples. Of course, neither of these plays a role in LLMs, arguably the crown jewels of deep learning. Furthermore, generative modelling in autoregressive models is actually super simple, and can be explained without any probabilistic language as simply “predicting the next token”.

In hindsight: Generative modelling continues to be influential, so at least it wasn’t super bad advice to tell people to focus on it in 2016. Diffusion models, early versions of which were emerging by 2015, power most image and video generative models today, and diffusion language models may one day be influential, too. Here, at least, it is true that deeper knowledge of topics like score matching and variational methods came in handy.

⚖️ PhD vs Company Residency

On this interesting topic, I wrote

A couple companies now offer residency programmes, extended internships, which supposedly allow you to kickstart a successful career in machine learning without a PhD. What the best option is depends largely on your circumstances, but also on what you want to achieve.

I wrote this in 2015. If you went and did a PhD in Europe (lasting 3-4 years) starting then, assuming you're great, you would have done well. You graduated just in time to see LLMs unfold – didn't miss too much. Plus, you’d likely have done one interesting internship every single summer of your degree. But things have changed. Frontier research is no longer published. Internships at frontier labs are hard to get unless you're in your final year and the companies can see a clear path to hiring you full time. Gone are the days of publishing papers as an intern.

In the frontier LLM space, the field is so fast moving that it's really difficult to pick a research question there that won't look obsolete by the time you write your thesis. If you pick something fundamental and ambitious enough – say adding an interesting form of memory to LLMs – your lab will likely lack the resources to demonstrate it at scale, and even if your idea is a good one, by the time you're done, the problem will be considered “essentially solved” and people will start copying whatever algorithm DeepSeek or Google happened to talk about first. Of course, you can choose not to engage with the frontier questions and do something

Times have changed. Depending on what your goals and interests are and what you're good at, I'm not so sure a PhD is the best choice. And what's more! I claim that

most undergraduate computer science programs, even some elite ones, fail to match the learning speed of the best students.

I'm not saying you should skip a rigorous degree program. My observation is that top talent can and do successfully engage with what used to be considered graduate-level content in their teenage years. While back then I was deeply skeptical of ‘college dropouts’ and the Thiel fellowship, my views have shifted considerably after spending time with brilliant young students.

🍿 Section: Are We Wrong Today?

The great thing about science is that scientists are allowed to be wrong. Progress happens when people take different perspectives, provided we admit we were wrong and update on evidence. So here you have it, clearly

I was wrong on a great many things.

But this raises questions: Where do I stand today? Am I wrong today? Who else is wrong today? Which position is going to look like my 2016 blog post in retrospect?

In 2016 I warned against the herd mentality of “lego-block” deep learning. In 2026, I’m marching with the herd. The herd, according to Yann LeCun, is sprinting towards a dead end, mistaking the fluency of language models for a true foundation for intelligence.

Is Yann LeCun right to call LLMs a dead end? I recall that Yann’s technical criticism of LLMs started with a fairly mathematics-based theoretical argument about how errors accumulate, and how autoregressive LLMs are exponentially diverging diffusers. Such an argument was especially interesting to see from Yann, who likes to remind us that naysayers doubted neural networks and put forward arguments like “they have too many parameters, they will overfit” or “non-convex optimization will get stuck in local optima”. Arguments that he blamed for standing in the way of progress. Like others, I don't now buy these arguments.
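
A rough sketch of how such an error-accumulation argument is usually stated, under the simplifying (and contestable) assumption that each generated token independently has some fixed probability $e$ of leaving the set of acceptable continuations, with no way to recover afterwards:

$$P(\text{still correct after } n \text{ tokens}) = (1 - e)^n$$

This decays exponentially in the sequence length $n$: with $e = 0.01$, for instance, $(0.99)^{100} \approx 0.37$. The symbols $e$ and $n$ and the numbers are illustrative only, and the conclusion leans heavily on the independence and no-recovery assumptions.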

What is the herd not seeing? According to Yann, true intelligence requires an understanding of the physical world. That in order to achieve human level intelligence, we first need to have cat or dog level intelligence. Fair enough. There are different aspects of intelligence and LLMs only capture some of them. But this is not reason enough to call them a dead end unless the goal is to create something indistinguishable from a human. A non-embodied, language-based intelligence has an infinitely deep rabbit-hole of knowledge and intelligence to conquer: an inability to catch a mouse or climb a tree won't prevent language-based intelligence from having profound impact.

On other things the herd is not seeing, Yann argues true intelligence needs “real” memory, reasoning and planning. I don't think anyone disagrees. But why could these not be built on or plugged into the language model substrate? It's no longer true that LLMs are statistical pattern matching devices that learn to mimic what's on the internet. Increasingly, LLMs learn from exploration, and reason and plan quite robustly. Rule learning, continual learning and memory are at the top of the research agenda of every single LLM company. These are going to get done.

I celebrate Yann going out there to make and prove his points, and wish him luck. I respect him and his career tremendously, even as I often find myself taking a perspective that just happens to be in anti-phase to his – as avid readers of this blog no doubt know.

But for now, I'm proudly marching with the herd.