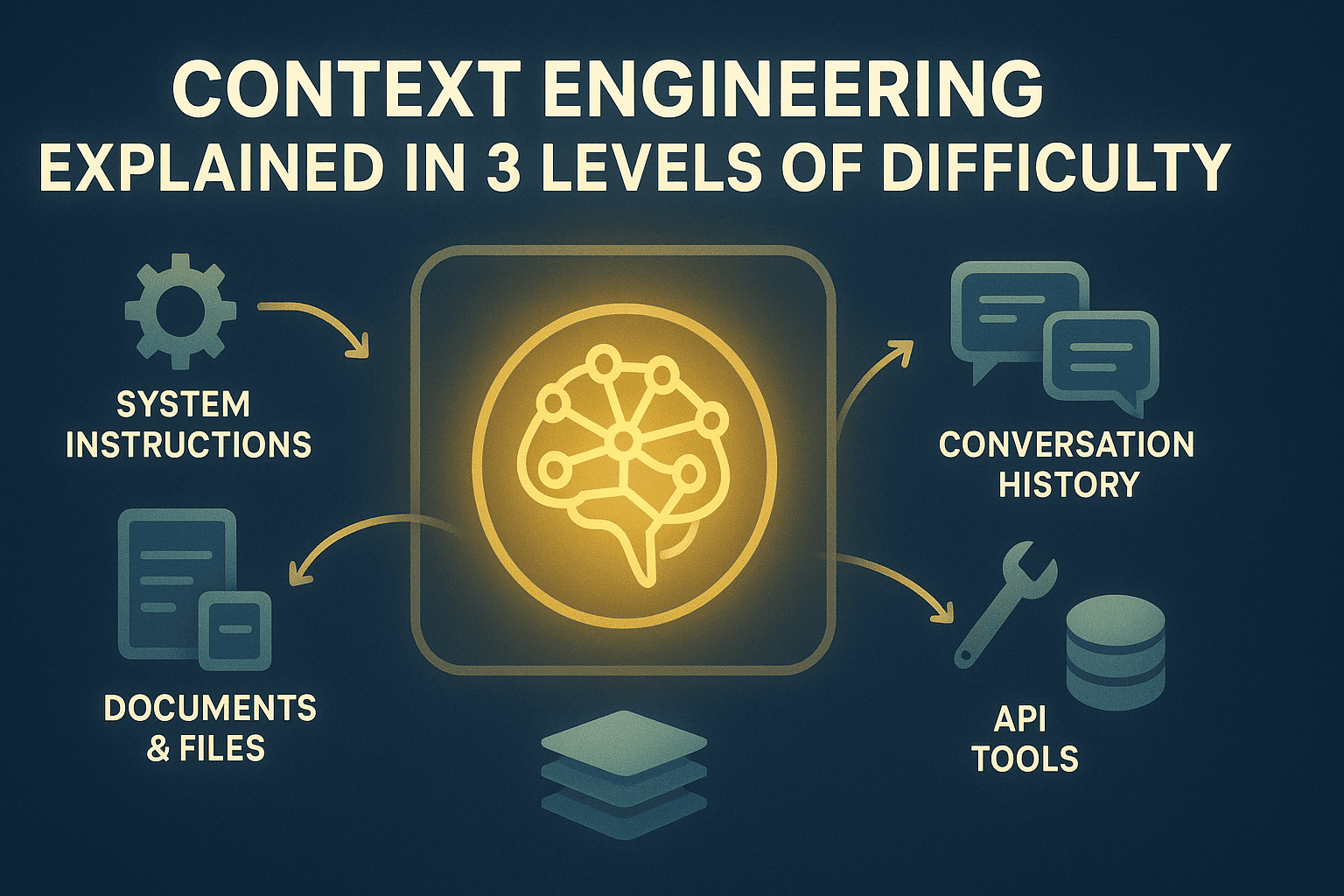

Context Engineering Explained in 3 Levels of Difficulty | Image by Author

# Introduction

Large language model (LLM) applications hit context window limits constantly. The model forgets earlier instructions, loses track of relevant information, or degrades in quality as interactions extend. This happens because LLMs have fixed token budgets, but applications generate unbounded information: conversation history, retrieved documents, file uploads, application programming interface (API) responses, and user data. Without management, important information gets randomly truncated or never enters context at all.

Context engineering treats the context window as a managed resource with explicit allocation policies and memory systems. You decide what information enters context, when it enters, how long it stays, and what gets compressed or archived to external memory for retrieval. This orchestrates information flow across the application's runtime rather than hoping everything fits or accepting degraded performance.

This article explains context engineering at three levels:

- Understanding the fundamental necessity of context engineering

- Implementing practical optimization strategies in production systems

- Reviewing advanced memory architectures, retrieval systems, and optimization techniques

The following sections explore these levels in detail.

# Level 1: Understanding The Context Bottleneck

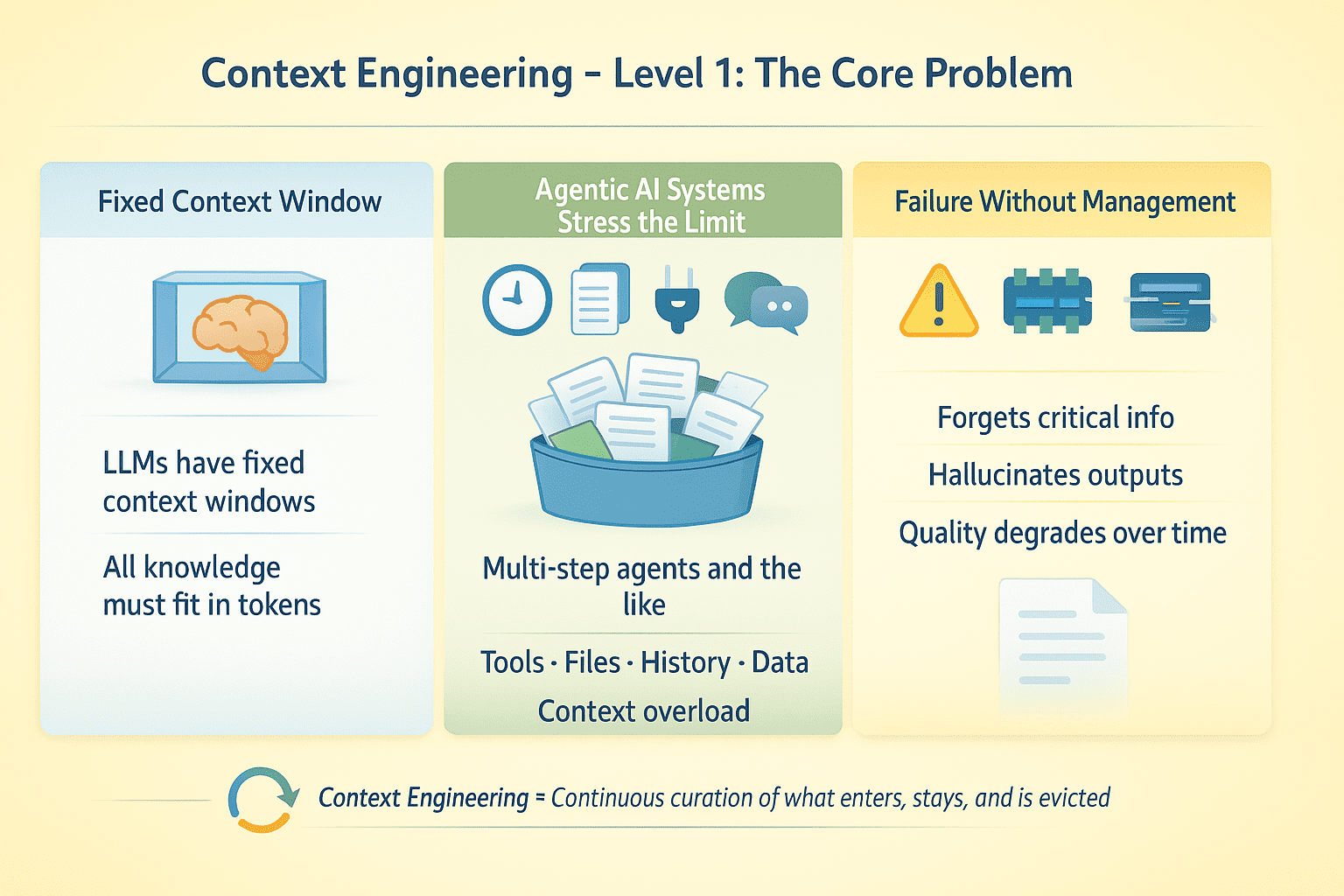

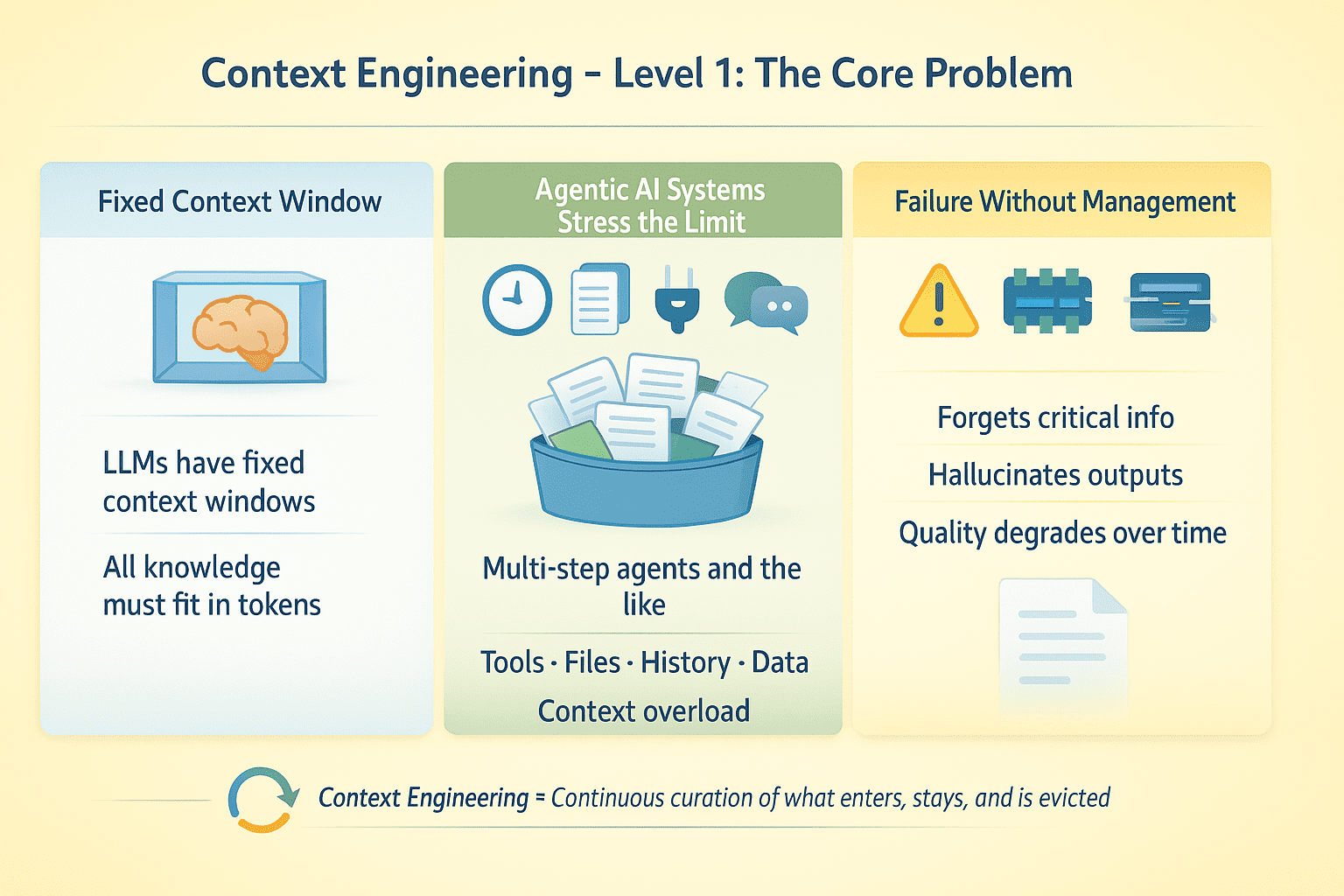

LLMs have fixed context windows. Everything the model knows at inference time must fit in those tokens. This is not much of a problem for single-turn completions. For retrieval-augmented generation (RAG) applications and AI agents running multi-step tasks with tool calls, file uploads, conversation history, and external data, it creates an optimization problem: what information gets attention and what gets discarded?

Say you have an agent that runs for several steps, makes 50 API calls, and processes 10 documents. Such an agentic AI system will likely fail without explicit context management. The model forgets critical information, hallucinates tool outputs, or degrades in quality as the conversation extends.

Context Engineering Level 1 | Image by Author

Context engineering is about designing for continuous curation of the information environment around an LLM throughout its execution. This includes managing what enters context, when, for how long, and what gets evicted when space runs out.

# Level 2: Optimizing Context In Practice

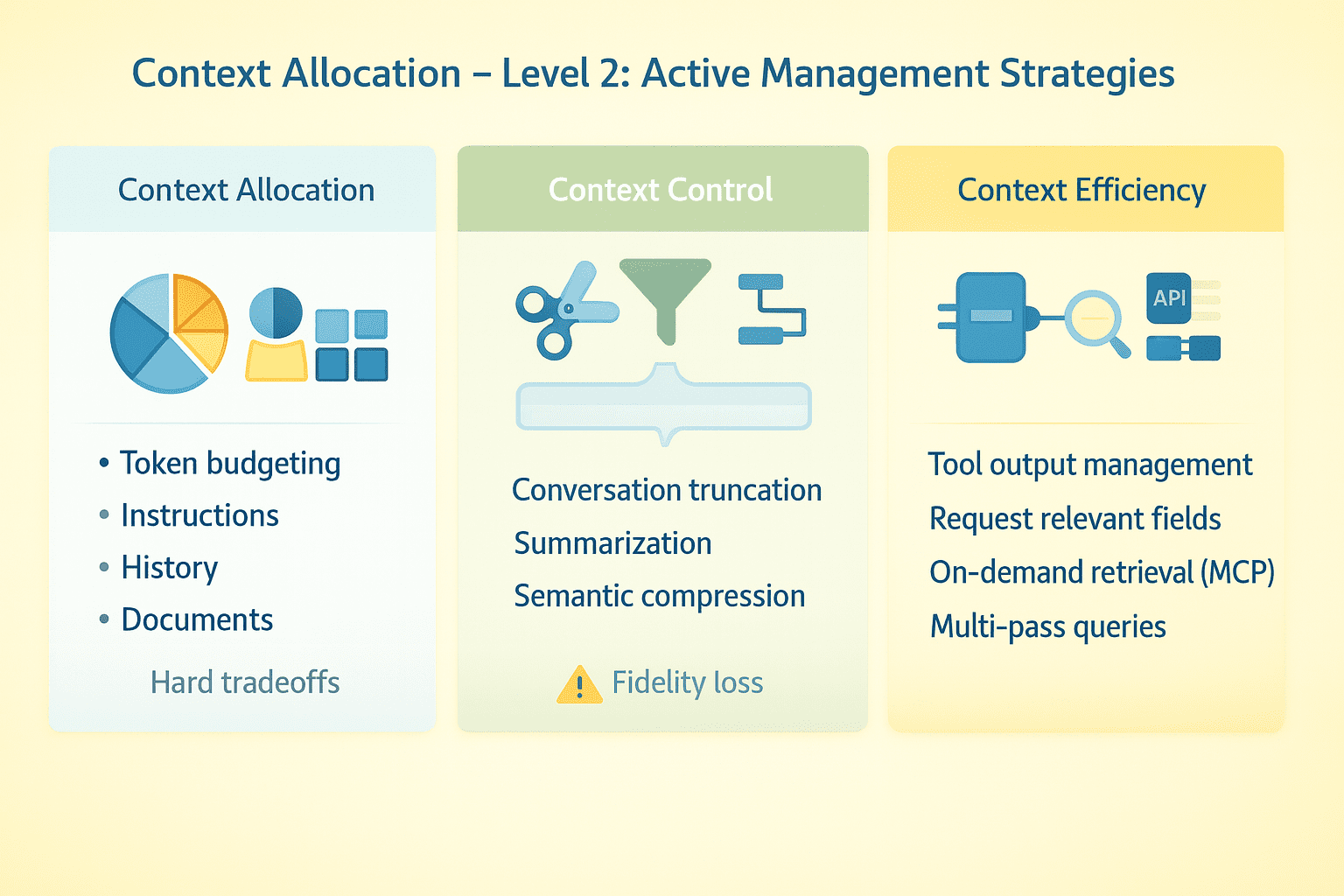

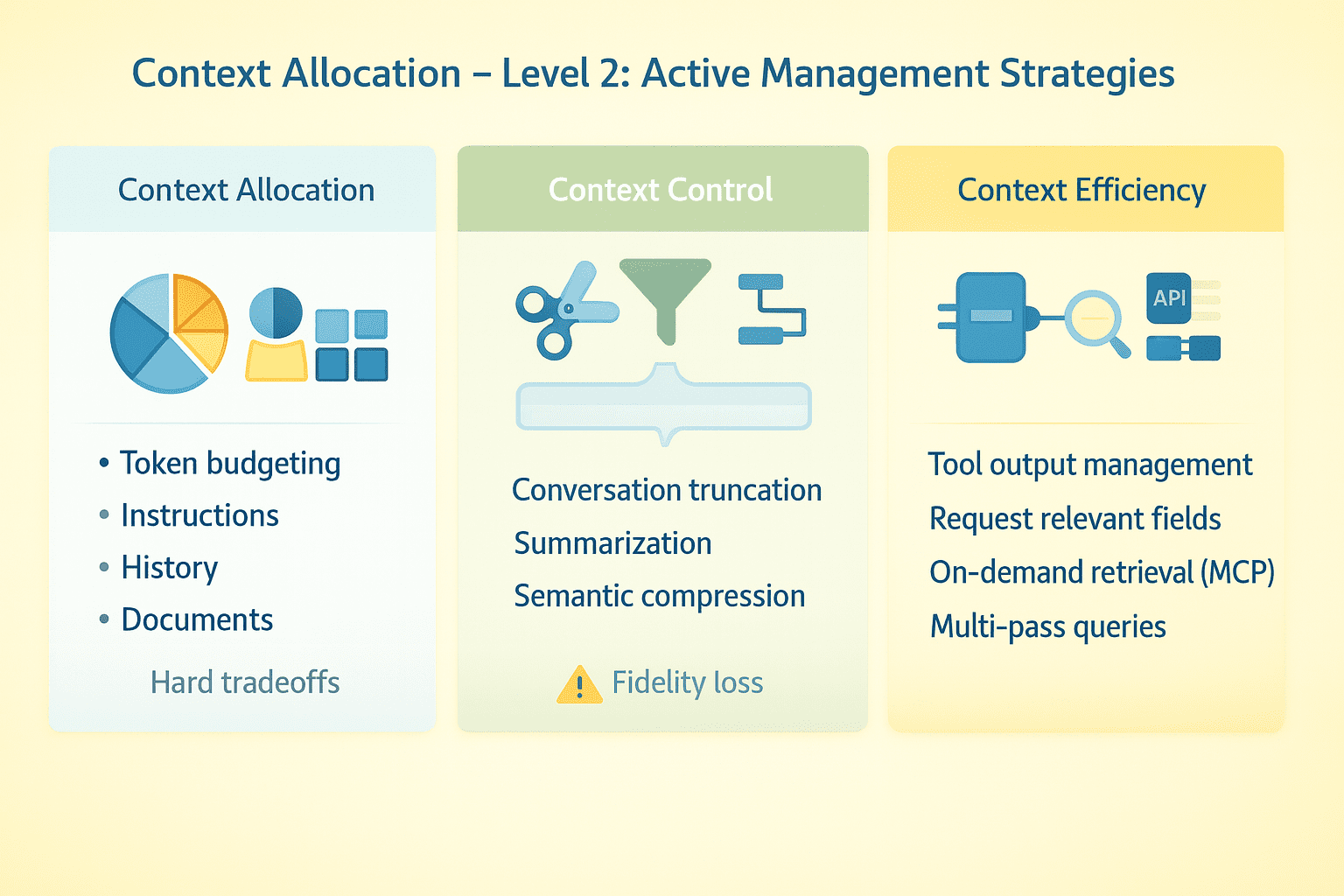

Effective context engineering requires explicit strategies across several dimensions.

// Budgeting Tokens

Allocate your context window deliberately. System instructions might take 2K tokens. Conversation history, tool schemas, retrieved documents, and real-time data can all add up quickly. With a very large context window, there is plenty of headroom. With a much smaller window, you are forced to make hard tradeoffs about what to keep and what to drop.
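A minimal sketch of a priority-based token budget, assuming a whitespace word count as a crude stand-in for a real tokenizer (in practice you would count with your model's own tokenizer). The `Budget` class and the section names are hypothetical; the point is that each section has to earn its place against a fixed total, with room reserved for the model's reply.

```python
from __future__ import annotations

from dataclasses import dataclass


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


@dataclass
class Budget:
    window: int               # total context window, in tokens
    reserved_for_output: int  # head-room kept free for the model's reply

    def allocate(self, sections: dict[str, str], priority: list[str]) -> dict[str, str]:
        """Admit sections in priority order; skip any section that no longer fits."""
        remaining = self.window - self.reserved_for_output
        kept: dict[str, str] = {}
        for name in priority:
            cost = count_tokens(sections[name])
            if cost <= remaining:
                kept[name] = sections[name]
                remaining -= cost
        return kept


budget = Budget(window=20, reserved_for_output=5)
sections = {"system": "a " * 6, "history": "b " * 8, "docs": "c " * 4}
kept = budget.allocate(sections, priority=["system", "docs", "history"])
print(sorted(kept))  # ['docs', 'system']
```

With 15 usable tokens, the 6-token system prompt and 4-token documents fit, but the 8-token history does not, so it is dropped whole rather than cut off mid-text.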

// Truncating Conversations

Keep recent turns, drop middle turns, and preserve critical early context. Summarization works but loses fidelity. Some systems implement semantic compression, extracting key facts rather than preserving verbatim text. Test where your agent breaks as conversations extend.
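The keep-early, keep-recent, drop-the-middle policy can be sketched in a few lines. `truncate_history` is a hypothetical helper, not a library API; it leaves a marker in place of the dropped turns so the model knows something was elided instead of assuming the conversation was continuous.

```python
from __future__ import annotations


def truncate_history(turns: list[str], keep_first: int = 1, keep_last: int = 4) -> list[str]:
    """Keep the earliest turns (e.g. the original task statement) and the most
    recent ones, replacing the dropped middle with a one-line marker."""
    if len(turns) <= keep_first + keep_last:
        return list(turns)
    dropped = len(turns) - keep_first - keep_last
    marker = f"[{dropped} earlier turns omitted]"
    # Slice with an explicit start index so keep_last=0 keeps no recent turns.
    return turns[:keep_first] + [marker] + turns[len(turns) - keep_last:]


history = [f"turn {i}" for i in range(10)]
print(truncate_history(history, keep_first=1, keep_last=3))
# ['turn 0', '[6 earlier turns omitted]', 'turn 7', 'turn 8', 'turn 9']
```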

// Managing Tool Outputs

Large API responses consume tokens fast. Request specific fields instead of full payloads, truncate results, summarize before returning to the model, or use multi-pass strategies where the agent first gets metadata and then requests details for relevant items only.
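A sketch of field projection and truncation applied to a tool result before it reaches the model. The `{"results": [...]}` payload shape and `shrink_tool_output` are assumptions for illustration; real APIs differ, and many let you request only the needed fields server-side, which is cheaper still.

```python
import json


def shrink_tool_output(payload: dict, fields: list, max_items: int = 5, max_chars: int = 200) -> str:
    """Project a raw API payload down to the fields the task needs."""
    items = payload.get("results", [])  # assumes a hypothetical {"results": [...]} shape
    trimmed = []
    for item in items[:max_items]:
        row = {}
        for name in fields:
            if name in item:
                value = item[name]
                # Cap long strings so one verbose field cannot flood the context.
                if isinstance(value, str) and len(value) > max_chars:
                    value = value[:max_chars] + "..."
                row[name] = value
        trimmed.append(row)
    # Report how much was left out, so the model knows it can ask for more.
    summary = {"total_results": len(items), "shown": len(trimmed), "results": trimmed}
    return json.dumps(summary, separators=(",", ":"))


raw = {"results": [{"id": i, "title": f"Ticket {i}", "body": "x" * 1000, "audit_log": []} for i in range(8)]}
compact = shrink_tool_output(raw, fields=["id", "title"], max_items=3)
print(compact)
```

Including `total_results` alongside the trimmed rows supports the multi-pass pattern: the agent sees that five more items exist and can request them only if they matter.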

// Using The Model Context Protocol And On-demand Retrieval

Instead of loading everything upfront, use the Model Context Protocol (MCP) to connect the model to external data sources that it queries when needed. The agent decides what to fetch based on task requirements. This shifts the problem from "fit everything in context" to "fetch the right things at the right time."
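The fetch-on-demand shape can be illustrated without any protocol machinery. The sketch below is not the MCP SDK or its API; `OnDemandSources` is a hypothetical in-process registry where only the list of source names goes into the prompt and content is pulled in when the agent asks for it. MCP standardizes this kind of interaction between a client and external servers. The fetchers are toy lambdas.

```python
from typing import Callable, Dict, List, Tuple


class OnDemandSources:
    """Hypothetical registry: sources are registered up front, but nothing is
    loaded into context until the agent asks for it."""

    def __init__(self) -> None:
        self._sources: Dict[str, Callable[[str], str]] = {}
        self.fetch_log: List[Tuple[str, str]] = []

    def register(self, name: str, fetch: Callable[[str], str]) -> None:
        self._sources[name] = fetch

    def catalog(self) -> List[str]:
        # Only these names go into the prompt, which costs a handful of tokens.
        return sorted(self._sources)

    def fetch(self, name: str, query: str) -> str:
        # Called only when the model decides it needs this source for the current step.
        if name not in self._sources:
            return f"Unknown source '{name}'. Available: {', '.join(self.catalog())}"
        self.fetch_log.append((name, query))
        return self._sources[name](query)


sources = OnDemandSources()
sources.register("orders", lambda q: f"Order {q}: shipped on 2024-05-02")
sources.register("policies", lambda q: "Refunds are accepted within 30 days.")
print(sources.catalog())  # ['orders', 'policies']
result = sources.fetch("orders", "A-17")
```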

// Separating Structured States

Put stable instructions in system messages. Put variable data in user messages, where it can be updated or removed without touching core directives. Treat conversation history, tool outputs, and retrieved documents as separate streams with independent management policies.
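One way to keep the streams separate is to store them independently and assemble the prompt at each step. This sketch assumes the common chat format of `{"role": ..., "content": ...}` dictionaries; `ContextState`, `tail`, and the per-stream caps are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


def tail(items: list, n: int) -> list:
    # Last n items; n=0 yields an empty list (plain items[-0:] would return everything).
    return items[max(len(items) - n, 0):]


@dataclass
class ContextState:
    system: str                                            # stable directives
    history: list[dict] = field(default_factory=list)      # conversation turns
    tool_outputs: list[str] = field(default_factory=list)  # results from tool calls
    documents: list[str] = field(default_factory=list)     # retrieved passages

    def build_messages(self, max_history: int = 6, max_tools: int = 2, max_docs: int = 3) -> list[dict]:
        """Assemble the prompt; each stream is capped by its own policy."""
        messages = [{"role": "system", "content": self.system}]
        messages += tail(self.history, max_history)
        variable = []
        if self.documents:
            variable.append("Retrieved documents:\n" + "\n---\n".join(tail(self.documents, max_docs)))
        if self.tool_outputs:
            variable.append("Recent tool outputs:\n" + "\n".join(tail(self.tool_outputs, max_tools)))
        if variable:
            # Variable data rides in a user message, so it can change without touching the system prompt.
            messages.append({"role": "user", "content": "\n\n".join(variable)})
        return messages


state = ContextState(
    system="You are a support agent. Be concise.",
    history=[{"role": "user", "content": f"question {i}"} for i in range(10)],
    documents=["Refund policy: 30 days."],
    tool_outputs=["lookup_order -> status=shipped"],
)
messages = state.build_messages(max_history=2)
```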

Context Engineering Level 2 | Image by Author

The practical shift here is to treat context as a dynamic resource that needs active management throughout an agent's runtime, not a static thing you configure once.

# Level 3: Implementing Context Engineering In Production

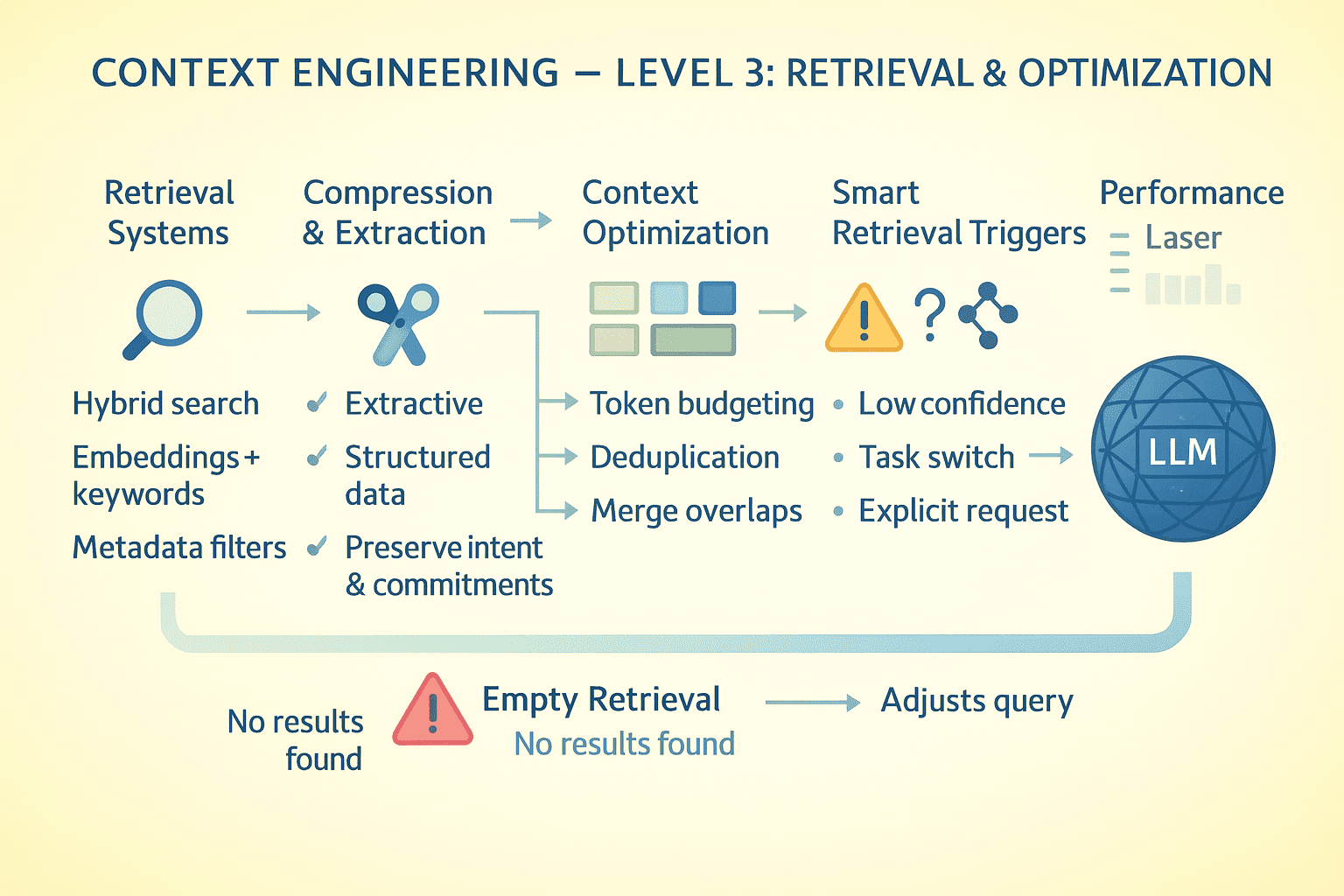

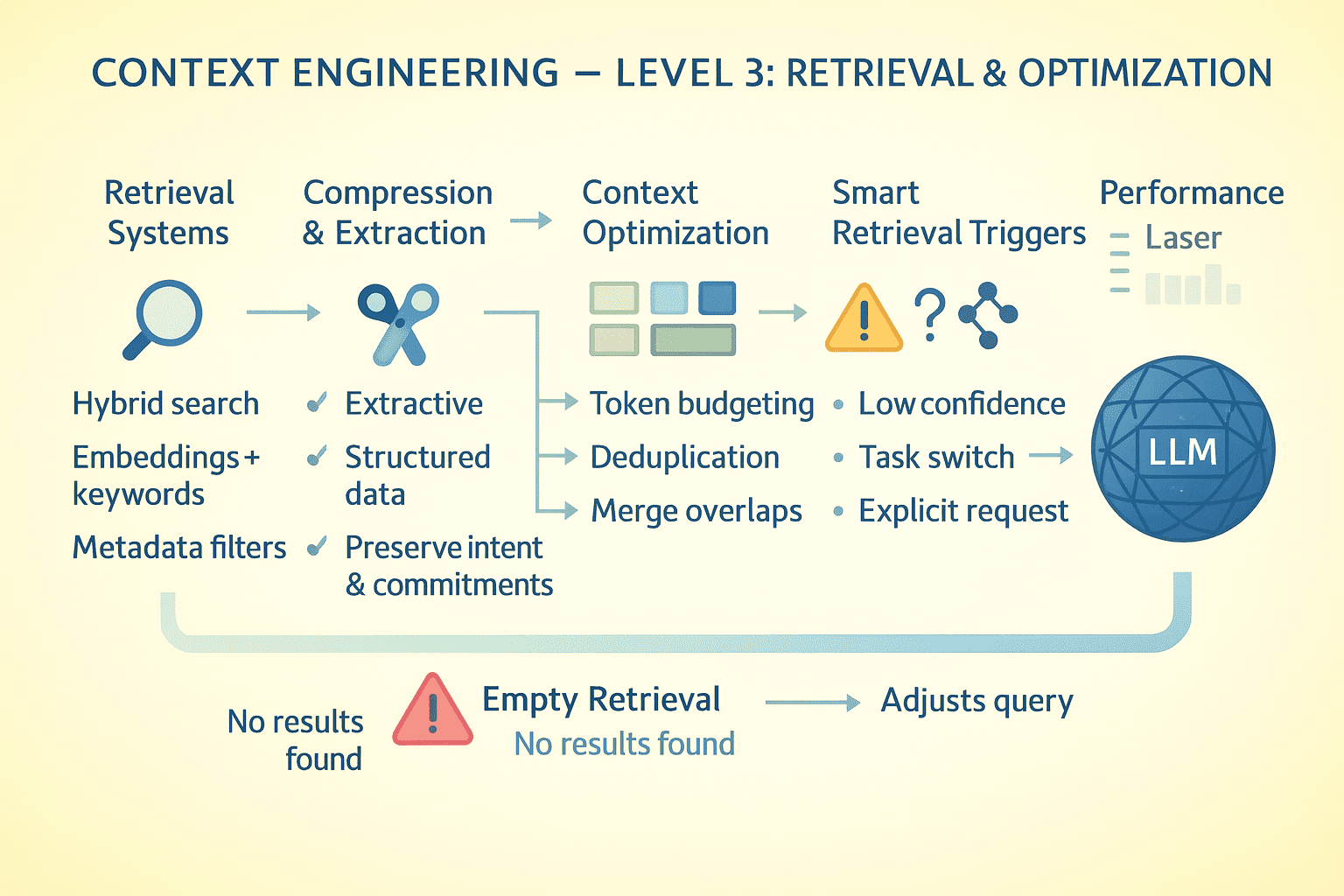

Context engineering at scale requires sophisticated memory architectures, compression strategies, and retrieval systems working in concert. Here is how to build production-grade implementations.

// Designing Memory Architecture Patterns

Separate memory in agentic AI systems into tiers:

- Working memory (active context window)

- Episodic memory (compressed conversation history and task state)

- Semantic memory (facts, documents, knowledge base)

- Procedural memory (instructions)

Working memory is what the model sees now, and it should be optimized for immediate task needs. Episodic memory stores what happened. You can compress it aggressively, but preserve temporal relationships and causal chains. For semantic memory, store indexes by topic, entity, and relevance for fast retrieval.
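The four tiers can be sketched as a small data structure. This is an illustrative assumption rather than a standard design: `TieredMemory` demotes the oldest working-memory item into episodic memory when the working set overflows, keeping its step number and cause so temporal and causal links survive, and it uses plain truncation as a placeholder for real compression.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TieredMemory:
    procedural: str                                                # standing instructions
    working_limit: int = 4                                         # max items kept verbatim
    working: list[dict] = field(default_factory=list)             # what the model sees now
    episodic: list[dict] = field(default_factory=list)            # compressed, ordered history
    semantic: dict[str, list[str]] = field(default_factory=dict)  # facts indexed by topic/entity

    def observe(self, step: int, event: str, cause: Optional[str] = None) -> None:
        self.working.append({"step": step, "event": event, "cause": cause})
        while len(self.working) > self.working_limit:
            oldest = self.working.pop(0)
            # Placeholder compression: truncate the text, but keep step order and cause.
            self.episodic.append({**oldest, "event": oldest["event"][:60]})

    def remember_fact(self, topic: str, fact: str) -> None:
        self.semantic.setdefault(topic, []).append(fact)

    def recall(self, topic: str) -> list[str]:
        return self.semantic.get(topic, [])

    def context_view(self) -> list[str]:
        # By default only procedural and working memory go into the prompt.
        return [self.procedural] + [w["event"] for w in self.working]


memory = TieredMemory(procedural="Plan trips within budget.", working_limit=2)
memory.observe(1, "Searched flights to Lisbon", cause="user asked for a May trip")
memory.observe(2, "Chose flight TP123", cause="cheapest option")
memory.observe(3, "Booked hotel near Baixa")
memory.remember_fact("user", "prefers aisle seats")
```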

// Applying Compression Techniques

Naive summarization loses critical details. A better approach is extractive compression, where you identify and preserve high-information-density sentences while discarding filler.

- For tool outputs, extract structured data (entities, metrics, relationships) rather than prose summaries.

- For conversations, preserve user intents and agent commitments exactly while compressing reasoning chains.
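A toy version of extractive compression, assuming a hand-written stopword list and a simple density heuristic: the share of non-filler words, with numbers and capitalized words weighted up as likely metrics and entities. A production system would typically use a learned scorer; the structure (score, keep the top sentences, restore original order) is the part that carries over.

```python
import re

# Hand-picked filler words; an assumption, not a standard list.
STOPWORDS = {"the", "a", "an", "and", "or", "but", "so", "of", "to", "in", "on", "is",
             "it", "was", "we", "you", "that", "this", "just", "really", "basically"}


def density(sentence: str) -> float:
    """Share of informative words; numbers and capitalized words count double."""
    words = re.findall(r"\w+", sentence)
    if not words:
        return 0.0
    informative = [w for w in words if w.lower() not in STOPWORDS]
    bonus = sum(1 for w in informative if w[0].isdigit() or w[0].isupper())
    return (len(informative) + bonus) / len(words)


def extractive_compress(text: str, keep: int = 2) -> str:
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    ranked = sorted(range(len(sentences)), key=lambda i: density(sentences[i]), reverse=True)
    chosen = sorted(ranked[:keep])  # restore the original order of the kept sentences
    return " ".join(sentences[i] for i in chosen)


sample = ("So yeah, we basically looked at it. Revenue grew 12% to $4.1M in Q3. "
          "It was, you know, really a lot. Churn fell from 5% to 3% after the March release.")
print(extractive_compress(sample, keep=2))
# Revenue grew 12% to $4.1M in Q3. Churn fell from 5% to 3% after the March release.
```

The kept sentences are copied verbatim, so figures like `12%` and `$4.1M` survive exactly, which is the advantage over a paraphrasing summary.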

// Designing Retrieval Systems

When the model needs information that is not in context, retrieval quality determines success. Implement hybrid search: dense embeddings for semantic similarity, BM25 for keyword matching, and metadata filters for precision.

Rank results by recency, relevance, and information density. Return the top K, but also surface near-misses; the model should know what almost matched. Retrieval happens in-context, so the model sees both the query formulation and the results. Bad queries produce bad results; expose this to enable self-correction.
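A dependency-free sketch of hybrid retrieval. BM25 is implemented directly, with the common `k1=1.5`, `b=0.75` defaults and a Lucene-style IDF. The "dense" side is a placeholder: `embed` builds character-trigram count vectors so the example runs offline, where a real system would call an embedding model. The two rankings are combined with reciprocal rank fusion, one common fusion method that the text does not itself prescribe. Metadata filtering runs first, and the results just past the top K are returned separately as near-misses.

```python
from __future__ import annotations

import math
from collections import Counter
from typing import Callable, Optional


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def bm25_scores(query: str, texts: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    docs = [tokenize(t) for t in texts]
    n = len(docs)
    avgdl = (sum(len(d) for d in docs) / n) or 1.0
    df = Counter(term for d in docs for term in set(d))  # document frequency per term
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            score += idf * tf[term] * (k1 + 1) / (tf[term] + k1 * (1 - b + b * len(d) / avgdl))
        scores.append(score)
    return scores


def embed(text: str) -> Counter:
    # Placeholder for an embedding model: character-trigram counts.
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(u: Counter, v: Counter) -> float:
    dot = sum(count * v[gram] for gram, count in u.items())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def hybrid_search(query: str, docs: list[dict], k: int = 2, near_misses: int = 1,
                  where: Optional[Callable[[dict], bool]] = None, rrf_k: int = 60) -> dict:
    candidates = [d for d in docs if where is None or where(d)]  # metadata filter first
    if not candidates:
        return {"results": [], "near_misses": []}
    texts = [d["text"] for d in candidates]
    q = embed(query)
    rankings = [bm25_scores(query, texts), [cosine(q, embed(t)) for t in texts]]
    fused = [0.0] * len(candidates)
    for scores in rankings:
        order = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
        for rank, i in enumerate(order):
            fused[i] += 1.0 / (rrf_k + rank + 1)  # reciprocal rank fusion
    ranked = sorted(range(len(candidates)), key=lambda i: fused[i], reverse=True)
    return {"results": [candidates[i] for i in ranked[:k]],
            "near_misses": [candidates[i] for i in ranked[k:k + near_misses]]}


kb_docs = [
    {"id": 1, "text": "refund policy for annual plans", "source": "kb"},
    {"id": 2, "text": "how to reset your password", "source": "kb"},
    {"id": 3, "text": "refunds are processed within 5 days", "source": "kb"},
    {"id": 4, "text": "refund policy draft", "source": "slack"},
]
out = hybrid_search("refund policy", kb_docs, k=2, near_misses=1, where=lambda d: d["source"] == "kb")
```

Rank fusion avoids having to calibrate BM25 scores against cosine similarities, which live on different scales.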

// Optimizing At The Token Level

Profile your token usage continuously.

- System instructions consuming 5K tokens that could be 1K? Rewrite them.

- Tool schemas verbose? Use compact JSON schemas instead of full OpenAPI specs.

- Conversation turns repeating similar content? Deduplicate.

- Retrieved documents overlapping? Merge them before adding to context.

Every token saved is a token available for task-critical information.
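Two of the checks above, sketched with the standard library: near-duplicate removal using `difflib.SequenceMatcher` similarity, and stitching retrieved chunks whose text overlaps (as happens with overlapping chunk windows). The thresholds and function names are illustrative assumptions; at scale you would likely use hashing or embeddings, since pairwise string comparison is quadratic.

```python
from __future__ import annotations

from difflib import SequenceMatcher
from typing import Optional


def normalize(text: str) -> str:
    return " ".join(text.lower().split())


def dedupe(chunks: list[str], threshold: float = 0.9) -> list[str]:
    """Drop any chunk that is a near-duplicate of one already kept."""
    kept: list[str] = []
    for chunk in chunks:
        if any(SequenceMatcher(None, normalize(chunk), normalize(k)).ratio() >= threshold for k in kept):
            continue
        kept.append(chunk)
    return kept


def merge_overlapping(a: str, b: str, min_overlap: int = 20) -> Optional[str]:
    """If b begins with a suffix of a (at least min_overlap chars), stitch them once."""
    for size in range(min(len(a), len(b)), min_overlap - 1, -1):
        if a.endswith(b[:size]):
            return a + b[size:]
    return None


turns = ["The API returns JSON.", "the API  returns json.", "Rate limit: 100 requests/min."]
print(dedupe(turns))  # ['The API returns JSON.', 'Rate limit: 100 requests/min.']
```

`min_overlap` should be at least 1; it guards against stitching chunks that share only a word or two by coincidence.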

// Triggering Memory Retrieval

The model should not retrieve constantly; it is expensive and adds latency. Implement smart triggers: retrieve when the model explicitly requests information, when it detects knowledge gaps, when task switches occur, or when the user references past context.

When retrieval returns nothing useful, the model should know this explicitly rather than hallucinating. Return empty results with metadata: "No documents found matching query X in knowledge base Y." This lets the model adjust its strategy by reformulating the query, searching a different source, or telling the user the information is not available.
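A sketch of rule-based retrieval triggers plus an explicit empty-result message. The cue phrases, the `low_confidence` flag (a stand-in for whatever knowledge-gap signal your system has), and the result shape are all hypothetical.

```python
from __future__ import annotations

import re

# Phrases suggesting the user is pointing back at earlier context (illustrative list).
RECALL_CUES = re.compile(r"\b(earlier|previously|last time|you said|we discussed)\b", re.IGNORECASE)


def should_retrieve(user_msg: str, model_requested: bool, task: str, previous_task: str,
                    low_confidence: bool = False) -> bool:
    return (
        model_requested                        # the model explicitly asked for information
        or low_confidence                      # stand-in for a knowledge-gap detector
        or task != previous_task               # a task switch occurred
        or bool(RECALL_CUES.search(user_msg))  # the user references past context
    )


def retrieval_result(query: str, source: str, hits: list[str]) -> dict:
    """Make empty results explicit so the model can reformulate instead of guessing."""
    if not hits:
        return {"status": "empty", "hits": [],
                "message": f"No documents found matching query '{query}' in knowledge base '{source}'."}
    return {"status": "ok", "hits": hits, "message": f"{len(hits)} document(s) found in '{source}'."}


print(retrieval_result("SLA terms", "contracts", [])["message"])
# No documents found matching query 'SLA terms' in knowledge base 'contracts'.
```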

Context Engineering Level 3 | Image by Author

// Synthesizing Multi-document Information

When reasoning requires multiple sources, process them hierarchically.

- First pass: extract key facts from each document independently (parallelizable).

- Second pass: load the extracted facts into context and synthesize.

This avoids the context exhaustion that comes from loading 10 full documents while preserving multi-source reasoning capability. For contradictory sources, preserve the contradiction. Let the model see the conflicting information and either resolve it or flag it for the user's attention.
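The two-pass pattern as a sketch. `extract_facts` is a hypothetical stand-in for a per-document LLM extraction call (here it just parses `key: value` lines). The first pass runs on a thread pool because documents are independent; the second pass merges only the compact facts and keeps conflicting values side by side with their sources instead of picking one.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def extract_facts(doc_id: str, text: str) -> list[tuple[str, str, str]]:
    """Stand-in for an LLM extraction call: pull 'key: value' lines from a document."""
    facts = []
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            facts.append((key.strip().lower(), value.strip(), doc_id))
    return facts


def synthesize(docs: dict[str, str]) -> dict:
    # Pass 1: extract from each document independently, in parallel.
    with ThreadPoolExecutor() as pool:
        per_doc = list(pool.map(lambda item: extract_facts(*item), docs.items()))
    # Pass 2: merge only the compact facts; full documents never share the context.
    merged: dict[str, dict[str, list[str]]] = {}
    for facts in per_doc:
        for key, value, doc_id in facts:
            merged.setdefault(key, {}).setdefault(value, []).append(doc_id)
    agreed = {key: next(iter(values)) for key, values in merged.items() if len(values) == 1}
    conflicts = {key: values for key, values in merged.items() if len(values) > 1}  # keep contradictions visible
    return {"facts": agreed, "conflicts": conflicts}


report = synthesize({
    "a.md": "Release date: March 3\nOwner: Dana",
    "b.md": "Release date: March 10\nRegion: EU",
})
```

The conflicting release dates come back with their source documents attached, so the model can resolve the disagreement or surface it to the user.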

// Persisting Conversation State

For agents that pause and resume, serialize context state to external storage. Save the compressed conversation history, the current task graph, tool outputs, and the retrieval cache. On resume, reconstruct only the minimum necessary context; do not reload everything.
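A minimal sketch of checkpointing, assuming JSON is an acceptable serialization format and that a simplified task graph (task name mapped to status) is enough. `AgentState`, `to_json`, `from_json`, and `resume_context` are hypothetical names. The resume step rebuilds a short prompt from the summary, the open tasks, and the last few turns, leaving tool outputs and the retrieval cache in storage until they are requested.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentState:
    summary: str                                                  # compressed conversation history
    task_graph: dict[str, str]                                    # simplified: task name -> status
    tool_outputs: dict[str, str] = field(default_factory=dict)
    retrieval_cache: dict[str, list[str]] = field(default_factory=dict)
    recent_turns: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        # Write this string to a file, database, or object store when the agent pauses.
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, data: str) -> AgentState:
        return cls(**json.loads(data))


def resume_context(state: AgentState, last_turns: int = 2) -> str:
    """Rebuild a minimal prompt; tool outputs and the cache stay in storage until needed."""
    open_tasks = [task for task, status in state.task_graph.items() if status != "done"]
    lines = [f"Summary so far: {state.summary}",
             f"Open tasks: {', '.join(open_tasks) or 'none'}"]
    lines += state.recent_turns[max(len(state.recent_turns) - last_turns, 0):]
    return "\n".join(lines)


state = AgentState(
    summary="User wants a Q3 sales report in EUR.",
    task_graph={"collect data": "done", "draft report": "open"},
    tool_outputs={"sales_query": "...rows..."},
    recent_turns=["user: use EUR", "assistant: noted", "user: add charts"],
)
restored = AgentState.from_json(state.to_json())
print(resume_context(restored))
```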

// Evaluating And Measuring Performance

Track key metrics to understand how your context engineering strategy is performing. Monitor context utilization to see the average percentage of the window in use, and eviction frequency to see how often you hit context limits. Measure retrieval precision by checking what fraction of retrieved documents are actually relevant and used. Finally, track information persistence to see how many turns important facts survive before being lost.
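The four metrics can be tracked with a small recorder. This is a sketch under stated assumptions: token counts and the "used" document IDs are supplied by the caller, and a fact's lifespan is approximated as the span from the first to the last turn it was seen in context, which assumes it stayed present in between. `ContextMetrics` is a hypothetical name.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ContextMetrics:
    window: int                                       # context window size, in tokens
    used: list[int] = field(default_factory=list)    # tokens in context, per turn
    evicted_turns: int = 0                            # turns where something was evicted
    retrieved: int = 0                                # documents retrieved in total
    retrieved_used: int = 0                           # retrieved documents the model actually used
    first_seen: dict[str, int] = field(default_factory=dict)
    last_seen: dict[str, int] = field(default_factory=dict)

    def record_turn(self, used_tokens: int, evicted: bool, retrieved_ids: set[str],
                    used_ids: set[str], facts_in_context: set[str]) -> None:
        turn = len(self.used)
        self.used.append(used_tokens)
        self.evicted_turns += int(evicted)
        self.retrieved += len(retrieved_ids)
        self.retrieved_used += len(retrieved_ids & used_ids)
        for fact in facts_in_context:
            self.first_seen.setdefault(fact, turn)
            self.last_seen[fact] = turn

    def report(self) -> dict[str, float]:
        turns = len(self.used)
        lifespans = [self.last_seen[f] - self.first_seen[f] + 1 for f in self.first_seen]
        return {
            "avg_utilization": sum(self.used) / (turns * self.window) if turns else 0.0,
            "eviction_rate": self.evicted_turns / turns if turns else 0.0,
            "retrieval_precision": self.retrieved_used / self.retrieved if self.retrieved else 0.0,
            "avg_fact_lifespan_turns": sum(lifespans) / len(lifespans) if lifespans else 0.0,
        }


metrics = ContextMetrics(window=1000)
metrics.record_turn(500, False, {"d1", "d2"}, {"d1"}, {"budget", "deadline"})
metrics.record_turn(900, True, {"d3"}, {"d3"}, {"deadline"})
metrics.record_turn(700, False, set(), set(), {"deadline"})
summary = metrics.report()
```

Here the `budget` fact disappears after the first turn while `deadline` survives all three, which is the kind of signal that shows where compression or eviction is too aggressive.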

# Wrapping Up

Context engineering is ultimately about information architecture. You are building a system where the model has access to everything in its context window and no access to anything outside it. Every design decision (what to compress, what to retrieve, what to cache, and what to discard) shapes the information environment your application operates in.

If you neglect context engineering, your system may hallucinate, forget important details, or break down over time. Get it right, and you get an LLM application that stays coherent, reliable, and effective across complex, extended interactions despite its underlying architectural limits.

Happy context engineering!


Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she's working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more. Bala also creates engaging resource overviews and coding tutorials.