Introduction

It is important that companies evaluate their engineering candidates appropriately when they’re hiring. Do other areas of your company evaluate their potential hires by limiting access to tools they will use to perform the job? I think not. Why do the same to your software engineers?

AI tooling, such as GitHub’s Copilot and now ChatGPT, gives your engineers tools they can use to do their job. During the hiring process, engineers should be evaluated on the skills they have, and that includes the ability to use all available tools. I expect AI assistance to become as commonplace as using Stack Overflow to solve a problem. ChatGPT, Copilot, and any future tools doing similar tasks are going to be used frequently in the near future.

In this article, I am testing ChatGPT against one of the most popular coding assessment services: HackerRank. I’ve already covered other assessment services like LeetCode, TestGorilla, CodeSignal and Codility in this series. I recommend taking a look at those too.

To the engineers who have found this post and are looking for solutions to your coding assessments, I want to warn you: ChatGPT is an assistant. Think of it as a junior-level engineer. You may need to check its work. ChatGPT is known to be inaccurate, which is why it was banned on Stack Overflow. Don't take its responses as gospel. Evaluate the responses and make improvements as needed. You'll see I had to do that a couple of times with the questions below.

First, a disclaimer: I was Director of Engineering at Woven Teams in 2022. Prior to that, I was a customer of Woven for over two years. HackerRank is mentioned several times in the Woven blog and is a competitor to Woven.

A final note before I begin: HackerRank presented a couple of annoyances during this test because they use LaTeX formatting in their prompts. Simply copying and pasting prompts into ChatGPT was not possible, because key parts of the problem would be missing. This meant that I spent a few minutes per prompt cleaning it up and reinserting the LaTeX characters in plain text. This accounts for the higher solve times compared to the competing services in other articles.

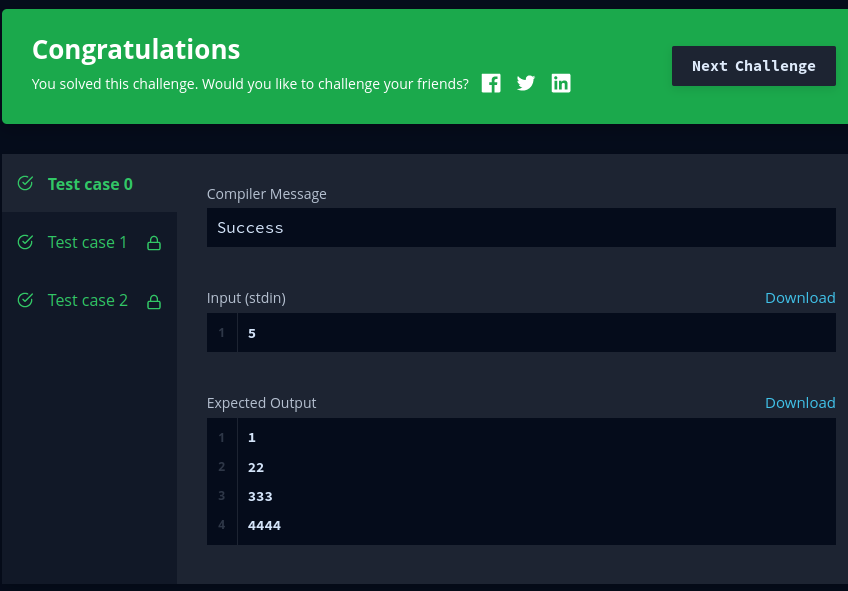

Triangle Quest

I selected a random Medium difficulty Python question for my first prompt: Triangle Quest. This one was unique in this series of tests.

The expected solution was to add only a single line of code, and I could only use arithmetic operations, a single for loop and a print statement.

As previously mentioned, there were a few LaTeX issues to clean up, but then ChatGPT returned a single line of code to add to the for loop provided.

The entire code is:

for i in range(1,int(input())): #More than 2 lines will result in 0 score. Do not leave a blank line also
    print((i * (10 ** i - 1)) // 9)

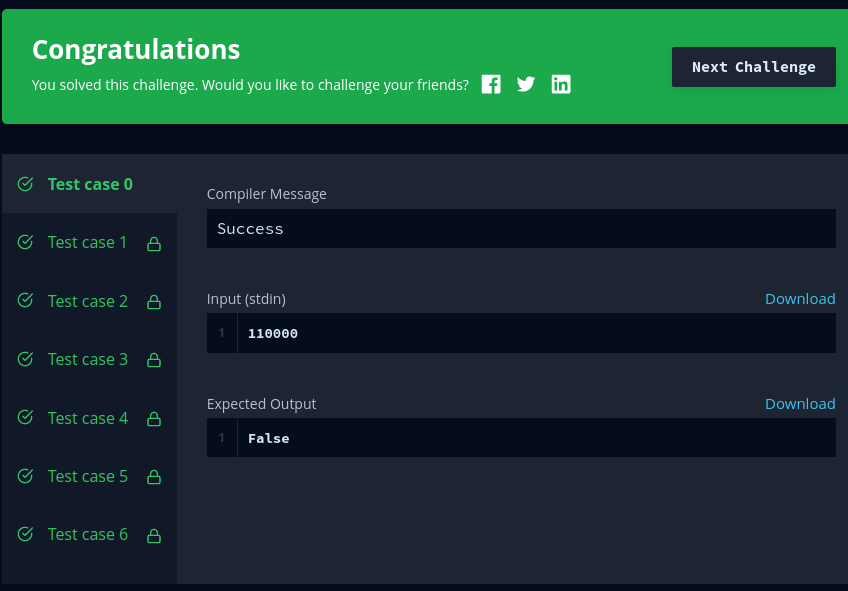

I submitted the code and passed all test cases.

I was surprised by this one. I was expecting to encounter some problems with the constraint of only being able to use arithmetic operations. It turns out ChatGPT caught that constraint and solved the problem without any issue.
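For anyone wondering why that one line works, here is a minimal sketch. The expression `(10 ** i - 1) // 9` produces the number written as `i` ones (1, 11, 111, ...), and multiplying it by `i` turns every one into the digit `i`. The function names are my own, and the string-based version exists only as a cross-check; it would not satisfy the challenge's arithmetic-only rule. The trick only holds for single-digit values of `i`.

```python
def repunit(i: int) -> int:
    """Return the integer written as i ones, e.g. repunit(3) == 111."""
    return (10 ** i - 1) // 9


def triangle_row(i: int) -> int:
    """Arithmetic-only row of the triangle: the digit i repeated i times."""
    return i * repunit(i)


def triangle_row_by_string(i: int) -> int:
    """Cross-check using string repetition (not allowed in the challenge)."""
    return int(str(i) * i)


if __name__ == "__main__":
    # Print the first few rows, one per line, as the challenge does.
    for i in range(1, 6):
        print(triangle_row(i))
```

Both versions agree for every `i` from 1 through 9, which covers the single-digit rows the trick is meant for.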

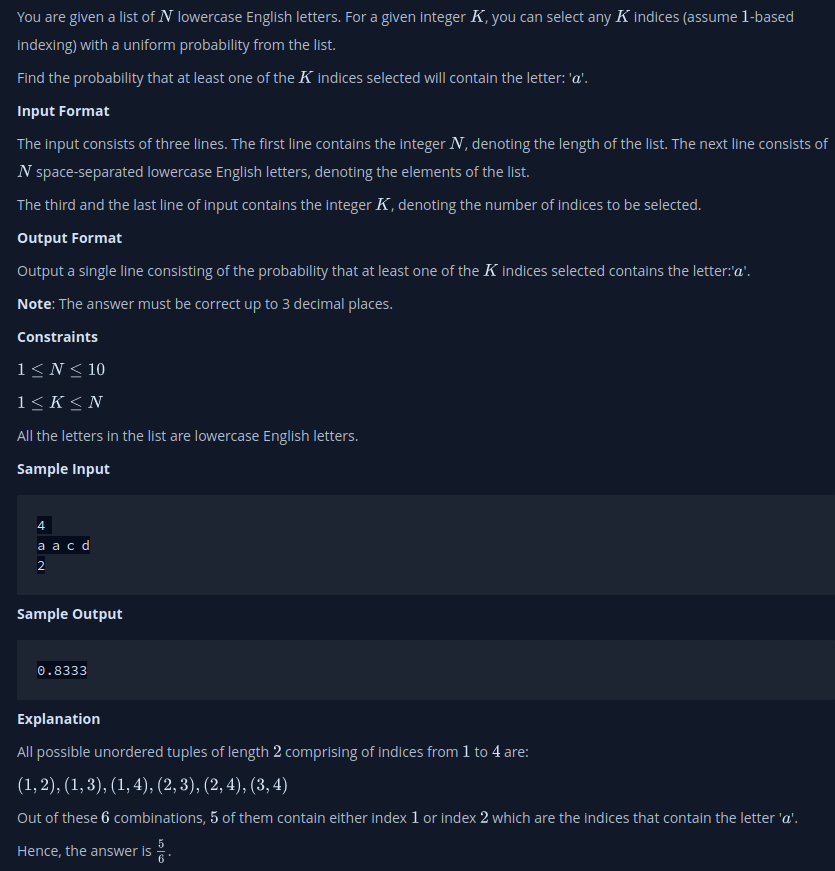

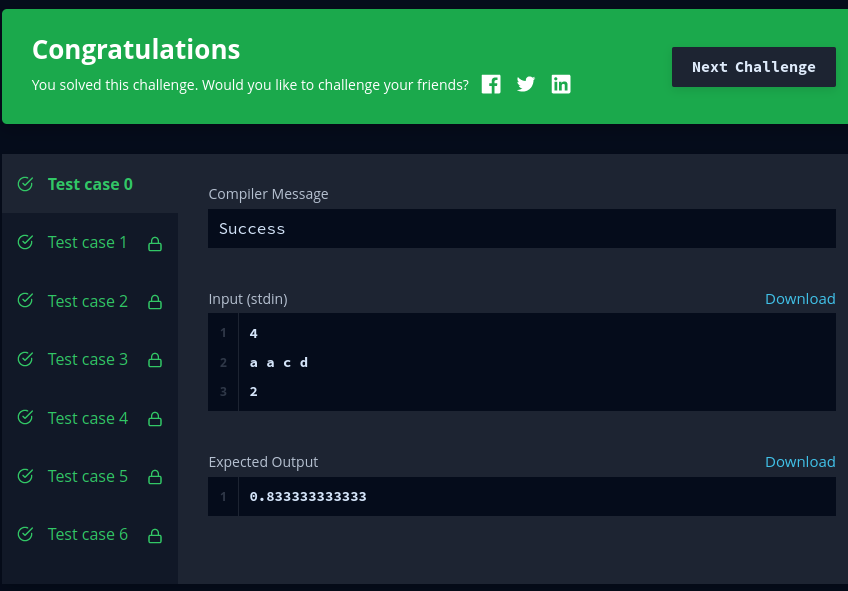

Iterables and Iterators

I wanted to try another Medium difficulty problem, one that added more than a single line of code. The constraints on the previous question put the problem well into “puzzle” territory, and I’m not a fan of puzzles in technical interviews. The second Medium question selected was Iterables and Iterators.

The first result returned didn't take input. Instead, it hard-coded values like so:

# given list of letters
letters = ['a', 'a', 'c', 'd']
# number of indices to select
k = 2

The interesting thing with this solution was that it ignored the first input row: “The first line contains the integer N, denoting the length of the list”. I don't disagree with this change. We should be able to determine the length of the list from the list itself.

However, the solution required taking input from STDIN, so I let ChatGPT know this by pasting in the only bit of information provided in the code template:

Enter your code here. Read input from STDIN. Print output to STDOUT

ChatGPT made the necessary change and provided a new code block.

import itertools

# read input from stdin
n = int(input())
letters = input().split()
k = int(input())

# number of combinations of indices
total_combinations = len(list(itertools.combinations(range(1, len(letters)+1), k)))

# combinations of indices that do not contain the letter 'a'
combinations_without_a = list(filter(lambda x: 'a' not in [letters[i-1] for i in x], itertools.combinations(range(1, len(letters)+1), k)))

# probability that none of the selected indices contain the letter 'a'
probability_without_a = len(combinations_without_a) / total_combinations

# probability that at least one of the selected indices contains the letter 'a'
probability_with_a = 1 - probability_without_a

# print the probability to three decimal places
print("{:.3f}".format(probability_with_a))

I submitted this code and it also passed each of the test cases. This solution also never used n, though it did read it from the input.
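As a sanity check on that approach, here is a minimal sketch of the same complement idea written two ways: by enumerating index combinations, as ChatGPT did, and with a closed-form count. The function names are mine, and the closed form is an alternative I'm adding for comparison, not something ChatGPT produced. It assumes Python 3.8 or newer for `math.comb`.

```python
from itertools import combinations
from math import comb


def prob_at_least_one_a_enumerated(letters: list[str], k: int) -> float:
    """Enumerate every k-sized set of indices and count those touching an 'a'."""
    all_picks = list(combinations(range(len(letters)), k))
    with_a = sum(1 for pick in all_picks if any(letters[i] == 'a' for i in pick))
    return with_a / len(all_picks)


def prob_at_least_one_a_closed_form(letters: list[str], k: int) -> float:
    """Use 1 - C(non_a, k) / C(n, k): subtract the picks that avoid every 'a'."""
    n = len(letters)
    non_a = sum(1 for letter in letters if letter != 'a')
    # math.comb returns 0 when k > non_a, so the probability becomes 1.0.
    return 1 - comb(non_a, k) / comb(n, k)


if __name__ == "__main__":
    sample = ['a', 'a', 'c', 'd']
    print("{:.3f}".format(prob_at_least_one_a_enumerated(sample, 2)))
    print("{:.3f}".format(prob_at_least_one_a_closed_form(sample, 2)))
```

For the hard-coded example from earlier (letters a, a, c, d with k = 2), only one of the six possible index pairs avoids every 'a', so both functions give 5/6. The closed form skips building the full list of combinations, which matters only if the input list gets large.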

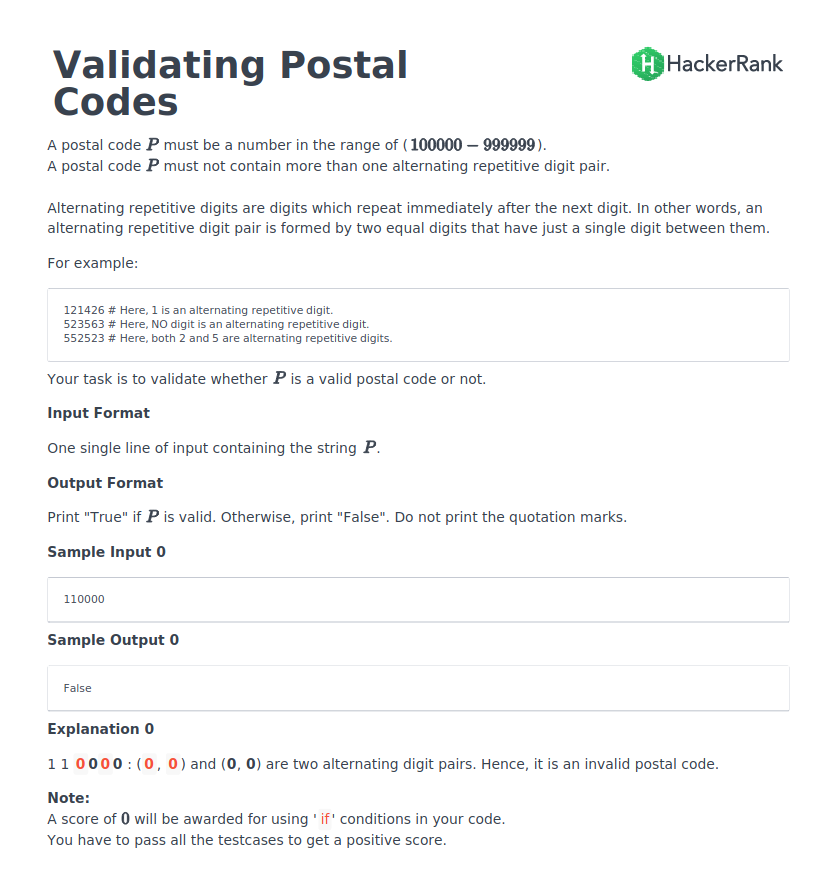

Validating Postal Codes

At this point, I’ve shown that ChatGPT can do Medium level problems. So, I selected a Hard problem: Validating Postal Codes.

The interesting constraint on this one is that if statements aren’t allowed. The goal here is to provide two regular expressions to solve the problem.

I’ve built some nasty regular expressions over the years. I’ve talked about SmokeDetector in the past, and its role in removing spam from the Stack Exchange network. It’s built on regular expressions. Lots and lots of regular expressions. So, I felt pretty comfortable with this question and wanted to see how ChatGPT would handle it.

The second regular expression, the alternating repetitive digit pair, didn't work the first time.

regex_alternating_repetitive_digit_pair = r'(\d)\d\1'

For those who know regular expressions, that’s not going to work. I pointed this out and it corrected itself, and explained the meaning of the new regular expression. The two final regular expressions are:

regex_integer_in_range = r"^[1-9]\d{5}$" # Do not delete 'r'.
regex_alternating_repetitive_digit_pair = r"(\d)(?=\d\1)" # Do not delete 'r'.

This uses a positive lookahead assertion, which ChatGPT explains as:

This regular expression will match any digit (\d) followed by another digit, with the same digit (\1) appearing immediately after that. The (?=...) syntax is a positive lookahead assertion, which means that it will only match a digit if it is followed by the specified pattern, but the pattern will not be included in the overall match. The parentheses around \d capture the digit so that it can be referred to as \1 later on.

I submitted the two regexes above, and passed the test cases.
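To see why the lookahead matters, here is a minimal sketch comparing it with a version that consumes the characters it matches. I'm assuming the problem's rule that a valid code is a six-digit number from 100000 to 999999 with fewer than two alternating repetitive digit pairs. The `consuming_pair` pattern and the `is_valid_postal_code` helper are my own names for illustration; the real challenge supplies its own checking code.

```python
import re

regex_integer_in_range = r"^[1-9]\d{5}$"
regex_alternating_repetitive_digit_pair = r"(\d)(?=\d\1)"

# Hypothetical consuming variant: it swallows all three digits of each match,
# so overlapping pairs such as the two in "552523" are counted only once.
consuming_pair = r"(\d)\d\1"


def is_valid_postal_code(code: str) -> bool:
    """Assumed rule: in range, and fewer than two alternating repetitive pairs."""
    in_range = bool(re.match(regex_integer_in_range, code))
    pairs = re.findall(regex_alternating_repetitive_digit_pair, code)
    return in_range and len(pairs) < 2


if __name__ == "__main__":
    for code in ["121426", "523563", "552523", "110000"]:
        lookahead_count = len(re.findall(regex_alternating_repetitive_digit_pair, code))
        consuming_count = len(re.findall(consuming_pair, code))
        print(code, lookahead_count, consuming_count, is_valid_postal_code(code))
```

Because the lookahead only peeks at the next two digits without consuming them, the search resumes at the very next character and finds both overlapping pairs in a code like 552523. The consuming version finds only one, which would let that code through.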

Closing Thoughts

The majority of my time on these was spent cleaning up the LaTeX formatting. I mentioned a few prompt clarifications needed to solve problems in the returned code too: one required accepting user input and the other was an invalid regular expression. Pointing this out to ChatGPT got the problem resolved immediately.

This is exactly the type of thing that I'd expect an engineer to need to do with an AI assistant (or a more junior engineer). Hiring processes must adapt to the existence of AI assistants. Evaluate your candidates and their ability to do more than simply write code.