Black Forest Labs releases FLUX.2 [klein], a compact image model family that targets interactive visual intelligence on consumer hardware. FLUX.2 [klein] extends the FLUX.2 line with sub-second generation and editing, a unified architecture for text-to-image and image-to-image, and deployment options that range from local GPUs to cloud APIs, while keeping state-of-the-art image quality.

From FLUX.2 [dev] to interactive visual intelligence

FLUX.2 [dev] is a 32 billion parameter rectified flow transformer for text-conditioned image generation and editing, including composition with multiple reference images, and it runs mainly on data center class accelerators. It is tuned for maximum quality and flexibility, with long sampling schedules and high VRAM requirements.

FLUX.2 [klein] takes the same design direction and compresses it into smaller rectified flow transformers with 4 billion and 9 billion parameters. These models are distilled to very short sampling schedules, support the same text-to-image and multi-reference editing tasks, and are optimized for response times under one second on modern GPUs.
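To make "rectified flow" and "short sampling schedule" concrete, here is a toy Python sketch, not Black Forest Labs code. It assumes the common rectified flow convention x_t = (1 - t) * x0 + t * x1, with t = 1 as pure noise and t = 0 as data. The transformer is replaced by an exact analytic velocity for a one-point "dataset". The helper names `toy_velocity` and `euler_sample` are hypothetical.

```python
import numpy as np


def toy_velocity(x_t: np.ndarray, t: float, target: np.ndarray) -> np.ndarray:
    """Exact rectified flow velocity when the 'data distribution' is one point.

    Stand-in for the learned transformer (an assumption for illustration).
    With x_t = (1 - t) * x0 + t * x1 and x0 = target, the velocity
    v = x1 - x0 simplifies to (x_t - target) / t.
    """
    return (x_t - target) / t


def euler_sample(noise: np.ndarray, target: np.ndarray, num_steps: int) -> np.ndarray:
    """Integrate dx/dt = v from t=1 (noise) down to t=0 (data) with Euler steps."""
    ts = np.linspace(1.0, 0.0, num_steps + 1)
    x = noise.copy()
    for t_cur, t_next in zip(ts[:-1], ts[1:]):
        v = toy_velocity(x, t_cur, target)  # only evaluated at t > 0
        x = x + (t_next - t_cur) * v        # t_next < t_cur, so x moves toward the data
    return x


rng = np.random.default_rng(0)
target = np.array([0.5, -1.0, 2.0])
noise = rng.standard_normal(3)
for steps in (4, 50):
    out = euler_sample(noise, target, steps)
    print(steps, "steps, max error:", np.abs(out - target).max())
```

The toy paths are perfectly straight, so 4 Euler steps land on the target as accurately as 50 do. A trained model only learns approximately straight paths, so few-step sampling accumulates error. Broadly, step distillation aims to close that gap.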

Model family and capabilities

The FLUX.2 [klein] family consists of four main open-weight variants built on a single architecture.

- FLUX.2 [klein] 4B

- FLUX.2 [klein] 9B

- FLUX.2 [klein] 4B Base

- FLUX.2 [klein] 9B Base

FLUX.2 [klein] 4B and 9B are step-distilled and guidance-distilled models. They use 4 inference steps and are positioned as the fastest options for production and interactive workloads. FLUX.2 [klein] 9B combines a 9B flow model with an 8B Qwen3 text embedder. It is described as the flagship small model on the Pareto frontier of quality versus latency across text-to-image, single-reference editing, and multi-reference generation.
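One way to see the cost difference between distilled and undistilled sampling is to count transformer evaluations. The sketch assumes standard classifier-free guidance (CFG), which runs a conditional and an unconditional pass at each step and blends them. A guidance-distilled model is assumed to need only one pass per step. The article does not say whether the Base models sample with CFG, so the 50-step-with-CFG case is a hypothetical comparison. The function names are illustrative.

```python
def cfg_combine(v_uncond: float, v_cond: float, scale: float) -> float:
    """Classifier-free guidance: push the prediction away from the unconditional one."""
    return v_uncond + scale * (v_cond - v_uncond)


def transformer_calls(num_steps: int, uses_cfg: bool) -> int:
    """Network evaluations per image; CFG doubles the work at every step."""
    return num_steps * (2 if uses_cfg else 1)


# Distilled [klein] 4B / 9B: 4 steps, guidance assumed baked in (one pass per step).
distilled = transformer_calls(num_steps=4, uses_cfg=False)
# Hypothetical Base run: 50 steps, assuming standard CFG is used.
base = transformer_calls(num_steps=50, uses_cfg=True)
print(distilled, base, base / distilled)  # 4 100 25.0
```

Under these assumptions, a 4-step guidance-distilled run needs 4 network calls, compared with 100 for a 50-step CFG run. That is roughly a 25x reduction before fixed costs such as text encoding and decoding.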

The Base variants are undistilled versions with longer sampling schedules. The documentation lists them as foundation models that preserve the complete training signal and provide higher output diversity. They are intended for fine-tuning, LoRA training, research pipelines, and custom post-training workflows where control matters more than minimal latency.
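The Base checkpoints are pitched at LoRA training, so here is a minimal NumPy sketch of the generic LoRA idea. A frozen weight W gets a trainable low-rank update (alpha / r) * B @ A. Because B is zero-initialized, the adapted layer starts out identical to the base layer. This is the textbook technique, not Black Forest Labs' training recipe. `LoRALinear`, the 1024-wide layer, and rank 16 are arbitrary illustrative choices.

```python
import numpy as np


class LoRALinear:
    """Frozen linear layer plus a trainable low-rank (LoRA) update."""

    def __init__(self, weight: np.ndarray, rank: int, alpha: float, rng: np.random.Generator):
        self.weight = weight                    # frozen, shape (out_features, in_features)
        out_f, in_f = weight.shape
        self.A = rng.standard_normal((rank, in_f)) * 0.01  # trainable down-projection
        self.B = np.zeros((out_f, rank))                   # trainable up-projection, zero-init
        self.scale = alpha / rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        base = x @ self.weight.T
        delta = (x @ self.A.T) @ self.B.T       # low-rank path: in -> rank -> out
        return base + self.scale * delta

    def trainable_params(self) -> int:
        return self.A.size + self.B.size


rng = np.random.default_rng(0)
W = rng.standard_normal((1024, 1024))
layer = LoRALinear(W, rank=16, alpha=16, rng=rng)
x = rng.standard_normal((2, 1024))
print(np.allclose(layer(x), x @ W.T))        # True: B starts at zero
print(layer.trainable_params(), W.size)      # 32768 1048576
```

During fine-tuning, only A and B would receive gradients. In this example that means 32,768 trainable parameters, compared with 1,048,576 in the frozen matrix.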

All FLUX.2 [klein] models support three core tasks in the same architecture. They can generate images from text, edit a single input image, and perform multi-reference generation and editing, where multiple input images and a prompt together define the target output.

Latency, VRAM, and quantized variants

The FLUX.2 [klein] model page gives approximate end-to-end inference times on GB200 and RTX 5090. FLUX.2 [klein] 4B is the fastest variant and is listed at about 0.3 to 1.2 seconds per image, depending on hardware. FLUX.2 [klein] 9B targets about 0.5 to 2 seconds at higher quality. The Base models take several seconds because they run 50-step sampling schedules, but they offer more flexibility for custom pipelines.
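The reported latency ranges translate directly into throughput. The sketch uses only the model page numbers quoted above. `naive_step_scaled_latency` is a hypothetical helper that scales time linearly with step count. It assumes a constant per-step cost and ignores fixed overheads and any extra guidance passes. It roughly illustrates why 50-step Base sampling falls in the multi-second range, but it is not a prediction.

```python
# Approximate end-to-end latencies (seconds per image) quoted from the model page.
REPORTED_LATENCY_S = {
    "FLUX.2 [klein] 4B": (0.3, 1.2),
    "FLUX.2 [klein] 9B": (0.5, 2.0),
}


def images_per_minute(seconds_per_image: float) -> float:
    return 60.0 / seconds_per_image


def naive_step_scaled_latency(latency_s: float, steps_from: int, steps_to: int) -> float:
    """Hypothetical helper: scale latency linearly with step count.

    Assumes a constant per-step cost and ignores fixed overheads and any extra
    guidance passes, so it is only an order-of-magnitude estimate.
    """
    return latency_s * steps_to / steps_from


for name, (fast, slow) in REPORTED_LATENCY_S.items():
    print(f"{name}: {images_per_minute(slow):.0f}-{images_per_minute(fast):.0f} images/min")
# FLUX.2 [klein] 4B: 50-200 images/min
# FLUX.2 [klein] 9B: 30-120 images/min

# Naive 4 -> 50 step scaling of the 4B range (not a measured Base number).
low_s, high_s = (naive_step_scaled_latency(t, 4, 50) for t in REPORTED_LATENCY_S["FLUX.2 [klein] 4B"])
print(f"naive 50-step estimate: {low_s:.2f}-{high_s:.2f} s")
```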

The FLUX.2 [klein] 4B model card states that 4B fits in about 13 GB of VRAM and is suitable for GPUs like the RTX 3090 and RTX 4070. The FLUX.2 [klein] 9B card reports a requirement of about 29 GB of VRAM and targets hardware such as the RTX 4090. This means a single high-end consumer card can host the distilled variants with full-resolution sampling.

To extend the reach to more devices, Black Forest Labs also releases FP8 and NVFP4 versions of all FLUX.2 [klein] variants, developed together with NVIDIA. FP8 quantization is described as up to 1.6 times faster with up to 40% lower VRAM usage, and NVFP4 as up to 2.7 times faster with up to 55% lower VRAM usage on RTX GPUs, while the core capabilities stay the same.
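Combining the quoted figures gives a rough best-case memory footprint. This sketch applies the "up to" VRAM reductions for FP8 and NVFP4 to the model card baselines of about 13 GB for 4B and 29 GB for 9B. It assumes the maximum reductions apply directly to those model card numbers, which the source does not state. The reductions were quoted for RTX GPUs, and actual savings depend on the workload.

```python
# Approximate VRAM from the model cards, in GB.
BASELINE_VRAM_GB = {"FLUX.2 [klein] 4B": 13.0, "FLUX.2 [klein] 9B": 29.0}
# Maximum ("up to") VRAM reductions quoted for the quantized variants.
MAX_VRAM_REDUCTION = {"FP8": 0.40, "NVFP4": 0.55}


def best_case_vram_gb(baseline_gb: float, reduction: float) -> float:
    """Apply a fractional reduction; a best case, not a guaranteed footprint."""
    return baseline_gb * (1.0 - reduction)


for model, baseline in BASELINE_VRAM_GB.items():
    estimates = ", ".join(
        f"{fmt} ~{best_case_vram_gb(baseline, r):.1f} GB" for fmt, r in MAX_VRAM_REDUCTION.items()
    )
    print(f"{model} (~{baseline:.0f} GB baseline): {estimates}")
```

Under that assumption, the 9B model would drop to roughly 17 GB with FP8 and roughly 13 GB with NVFP4.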

Benchmarks against other image models

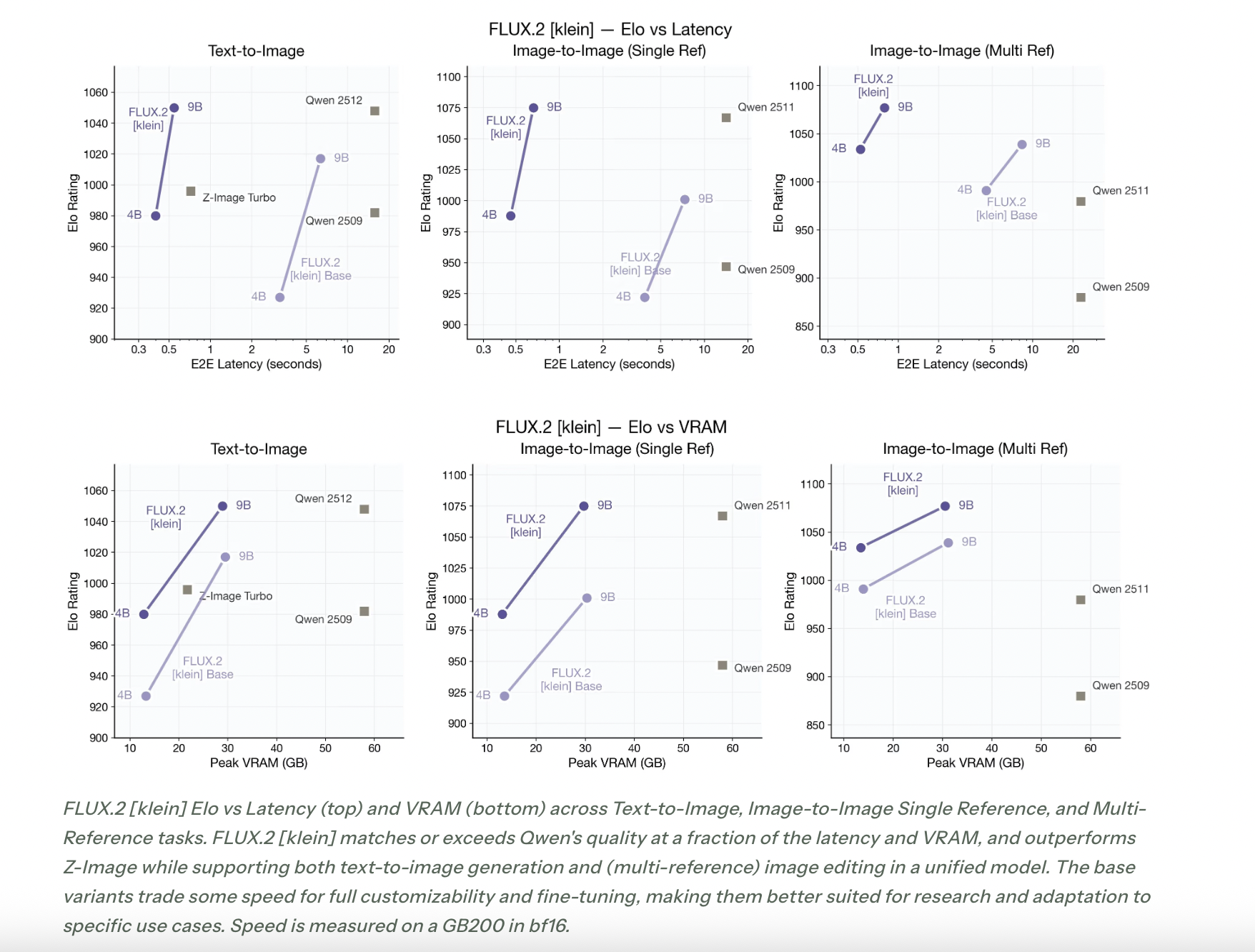

Black Forest Labs evaluates FLUX.2 [klein] with Elo-style comparisons on text-to-image, single-reference editing, and multi-reference tasks. The performance charts place FLUX.2 [klein] on the Pareto frontier of Elo score versus latency and Elo score versus VRAM. The commentary states that FLUX.2 [klein] matches or exceeds the quality of Qwen-based image models at a fraction of the latency and VRAM. It also states that FLUX.2 [klein] outperforms Z-Image while supporting unified text-to-image and multi-reference editing in a single architecture.
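Here is a sketch of the two ideas behind these charts. The first is the standard Elo update used in pairwise-preference leaderboards. The second is a Pareto frontier over (latency, Elo), where lower latency and higher Elo are both better. Black Forest Labs' exact rating procedure is not described here, so the K-factor of 32 is an assumption. The model names and numbers are made-up toy values, not benchmark results.

```python
def elo_expected(r_a: float, r_b: float) -> float:
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_update(r_a: float, r_b: float, score_a: float, k: float = 32.0) -> tuple[float, float]:
    """score_a is 1 for an A win, 0.5 for a tie, 0 for a loss; updates are zero-sum."""
    delta = k * (score_a - elo_expected(r_a, r_b))
    return r_a + delta, r_b - delta


def pareto_frontier(points: dict[str, tuple[float, float]]) -> list[str]:
    """points maps name -> (latency_s, elo); returns non-dominated names sorted by latency."""
    frontier = []
    for name, (lat, elo) in points.items():
        dominated = any(
            l2 <= lat and e2 >= elo and (l2 < lat or e2 > elo)
            for other, (l2, e2) in points.items()
            if other != name
        )
        if not dominated:
            frontier.append(name)
    return sorted(frontier, key=lambda n: points[n][0])


# Made-up toy numbers, not benchmark results.
toy = {"A": (0.5, 1000), "B": (1.0, 1050), "C": (1.5, 1020), "D": (3.0, 1100)}
print(pareto_frontier(toy))         # ['A', 'B', 'D'] (C is dominated by B)
print(elo_update(1000, 1000, 1.0))  # (1016.0, 984.0)
```

A model sits "on the Pareto frontier" when no other model is both at least as fast and at least as highly rated, with a strict improvement in at least one of the two.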

The Base variants trade some speed for full customizability and fine-tuning support, which fits their role as foundation checkpoints for new research and domain-specific pipelines.

Key Takeaways

- FLUX.2 [klein] is a compact rectified flow transformer family with 4B and 9B variants that supports text-to-image, single-image editing, and multi-reference generation in one unified architecture.

- The distilled FLUX.2 [klein] 4B and 9B models use 4 sampling steps and are optimized for sub-second inference on a single modern GPU, while the undistilled Base models use longer schedules and are intended for fine-tuning and research.

- Quantized FP8 and NVFP4 variants, built with NVIDIA, provide up to 1.6 times speedup with up to 40% VRAM reduction for FP8, and up to 2.7 times speedup with up to 55% VRAM reduction for NVFP4, on RTX GPUs.


![Black Forest Labs Releases FLUX.2 [klein]: Compact Flow Models for Interactive Visual Intelligence](https://www.marktechpost.com/wp-content/uploads/2026/01/blog-banner23-30-1024x731.png)