The future of artificial intelligence is here, and for developers it arrives in the form of new tools that change how we code, create, and solve problems. GLM-4.7 Flash, an open-source large language model from Zhipu AI, is the latest major entrant, and it is more than just another version. It pairs strong capability with striking efficiency, putting state-of-the-art code generation, multi-step reasoning, and content generation within reach of more developers than ever before. Let's take a closer look at why GLM-4.7 Flash is a game-changer.

Architecture and Evolution: Smart, Lean, and Powerful

At its core, GLM-4.7 Flash uses a Mixture-of-Experts (MoE) Transformer architecture. Think of a team of specialists: not every expert works on every problem, and only the most relevant ones are brought in for a particular task. That is how MoE models work. Although the full GLM-4.7 model contains an enormous 358 billion parameters, only a fraction of them, about 32 billion, are active for each token it processes.
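To make the routing idea concrete, here is a toy top-k gating layer in plain Python. It is a generic sketch of how MoE layers typically work, not GLM-4.7's implementation: the linear router, the four toy experts, and k=2 are illustrative assumptions. In a real model this routing happens per token inside each MoE layer, and the experts are feed-forward networks.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(token, experts, gate_weights, k=2):
    """Route one token vector to its top-k experts and blend their outputs.

    token: list of floats; experts: callables mapping a vector to a vector;
    gate_weights: one router weight vector per expert (a toy linear router).
    """
    # Router: one score per expert (dot product of router weights and token).
    scores = [sum(w * x for w, x in zip(wvec, token)) for wvec in gate_weights]
    # Keep only the k best-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Turn the selected scores into mixing weights that sum to 1.
    mix = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for weight, i in zip(mix, top):
        y = experts[i](token)
        out = [o + weight * v for o, v in zip(out, y)]
    return out, top

# Four toy "experts" that just scale their input by 1, 2, 3, or 4.
experts = [lambda v, s=s: [s * x for x in v] for s in (1, 2, 3, 4)]
gate_weights = [[0.1, 0.0], [0.5, 0.0], [0.3, 0.0], [0.9, 0.0]]
out, chosen = moe_layer([1.0, 0.0], experts, gate_weights, k=2)
print(chosen)  # [3, 1]: only experts 3 and 1 ran
```

Only k of the experts do any work for a given token, which is why an MoE model's per-token compute tracks its active parameters rather than its total.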

GLM-4.7 Flash is leaner still, with roughly 30 billion total parameters, of which only a few billion are active per token. This design makes it very efficient: it can run on comparatively modest hardware while still drawing on a large store of knowledge.
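A quick back-of-the-envelope calculation shows what these numbers mean. The sketch below uses the parameter counts quoted above and counts weights only (no KV cache or activations) at common precisions of 2, 1, and 0.5 bytes per parameter. One caveat worth keeping in mind: an MoE model still has to hold all of its experts in memory, so memory scales with total parameters, while per-token compute scales with active parameters.

```python
def active_fraction(active_params, total_params):
    """Share of parameters that do work for each token in an MoE model."""
    return active_params / total_params

def weight_memory_gb(total_params, bytes_per_param):
    """Approximate memory for the weights alone, in gigabytes (1e9 bytes)."""
    return total_params * bytes_per_param / 1e9

# Full GLM-4.7, using the figures quoted above: ~358B total, ~32B active.
print(f"Active share: {active_fraction(32e9, 358e9):.1%}")  # Active share: 8.9%

# GLM-4.7 Flash, ~30B total parameters, at a few common weight precisions.
for label, nbytes in [("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)]:
    print(f"{label}: ~{weight_memory_gb(30e9, nbytes):.0f} GB")
```

Quantizing to lower precision is what usually brings a model of this size onto a single workstation-class GPU; the exact footprint depends on the runtime and quantization format you choose.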

Easy API Access for Seamless Integration

Getting started with GLM-4.7 Flash is easy. It is available through Zhipu's Z.AI API platform, which offers an interface similar to OpenAI's or Anthropic's. Whether you prefer direct REST calls or an SDK, the model adapts to a broad range of tasks.
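Because the API follows the familiar OpenAI-style chat-completions shape, every request in the examples below has the same structure: a `model`, a list of `messages`, and sampling options, with the reply at `choices[0].message.content`. The helper sketched here factors that pattern out using only the Python standard library. The endpoint and model name come from this article; the `ZAI_API_KEY` environment variable is a hypothetical naming choice, and defaults such as `max_tokens=500` are arbitrary.

```python
import json
import os
import urllib.request

API_URL = "https://api.z.ai/api/paas/v4/chat/completions"

def build_payload(prompt, model="glm-4.7-flash", max_tokens=500, temperature=0.7):
    """Assemble an OpenAI-style chat-completions request body."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }

def extract_text(response_json):
    """Pull the assistant's reply out of an OpenAI-style response."""
    return response_json["choices"][0]["message"]["content"]

def chat(prompt, **kwargs):
    """Send one prompt and return the reply text (needs network and a key)."""
    # Hypothetical: the key is read from a ZAI_API_KEY environment variable.
    api_key = os.environ["ZAI_API_KEY"]
    body = json.dumps(build_payload(prompt, **kwargs)).encode("utf-8")
    req = urllib.request.Request(
        API_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return extract_text(json.load(resp))
```

With this in place, a call such as `chat("Write a haiku about compilers", temperature=0.9)` replaces the request boilerplate; `urlopen` raises `HTTPError` on non-2xx responses, so failures surface immediately.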

Here are some practical examples in Python:

1. Creative Text Generation

Need a spark of creativity? You can have the model write a poem or marketing copy.

```python
import requests

api_url = "https://api.z.ai/api/paas/v4/chat/completions"

headers = {
    "Authorization": "Bearer YOUR_API_KEY",  # replace with your Z.AI API key
    "Content-Type": "application/json",
}

user_message = {"role": "user", "content": "Write a short, optimistic poem about the future of technology."}

payload = {
    "model": "glm-4.7-flash",
    "messages": [user_message],
    "max_tokens": 200,
    "temperature": 0.8,  # higher temperature for more varied, creative output
}

response = requests.post(api_url, headers=headers, json=payload)
response.raise_for_status()  # raise on HTTP errors instead of failing later on a missing key
result = response.json()

print(result["choices"][0]["message"]["content"])
```

Output:

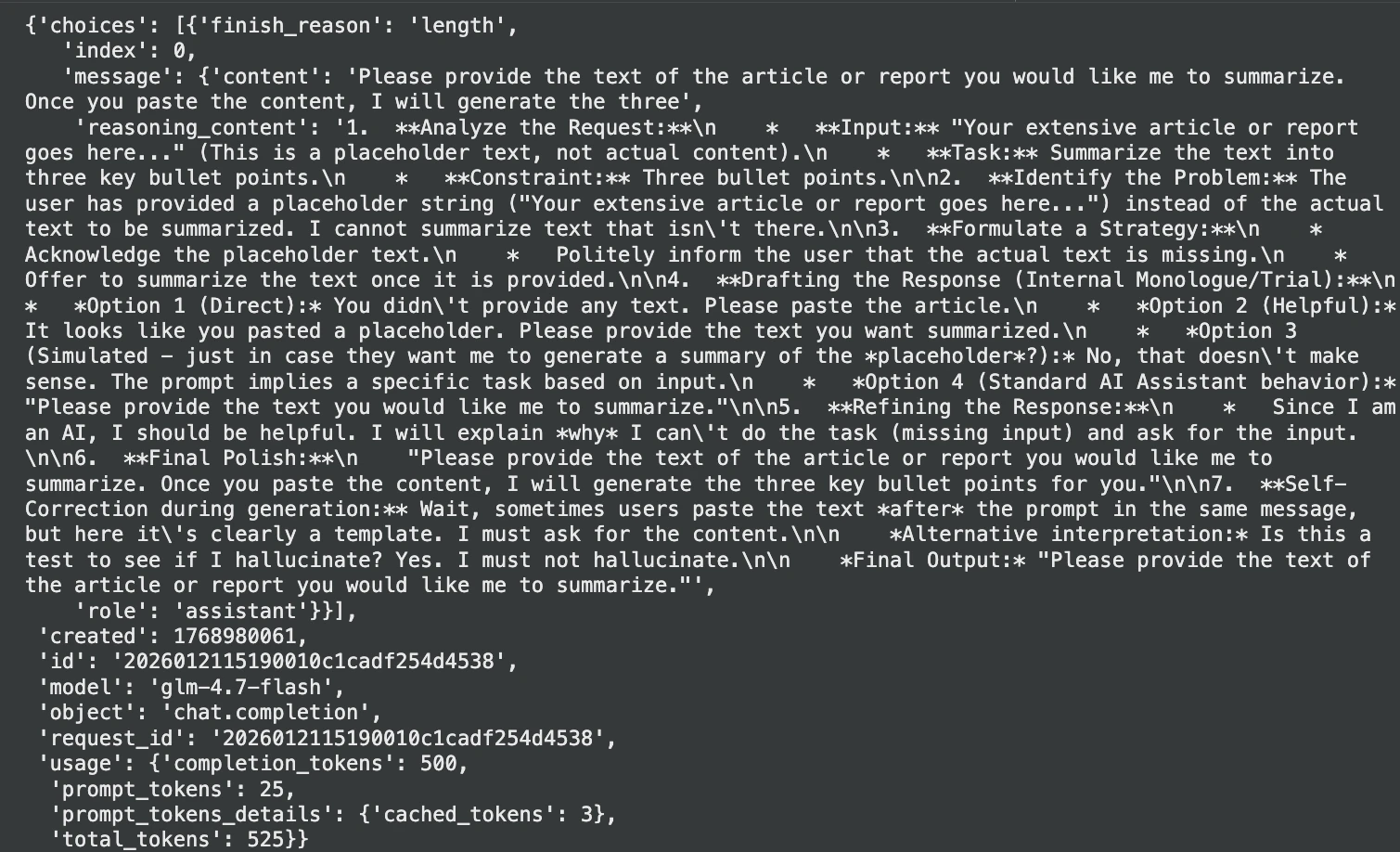

2. Document Summarization

Its large context window makes it easy to review lengthy documents.

```python
# Reuses requests, api_url, and headers from the previous example.
text_to_summarize = "Your extensive article or report goes here..."

prompt = f"Summarize the following text into three key bullet points:\n{text_to_summarize}"

payload = {
    "model": "glm-4.7-flash",
    "messages": [{"role": "user", "content": prompt}],
    "max_tokens": 500,
    "temperature": 0.3,  # lower temperature for more focused, factual output
}

response = requests.post(api_url, json=payload, headers=headers)
response.raise_for_status()
summary = response.json()["choices"][0]["message"]["content"]

print("Summary:", summary)
```

Output:
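Even a large context window has limits, and very long inputs also cost more per request. A common workaround is map-reduce summarization: split the text into chunks, summarize each one, then summarize the partial summaries. The sketch below is a minimal version of that general pattern, not a feature of the GLM API. It estimates tokens at roughly 4 characters each, a rule of thumb for English text rather than GLM's actual tokenizer, and takes the summarizer as a plain function so it can wrap a request like the one above.

```python
def chunk_text(text, max_tokens=4000, chars_per_token=4):
    """Split text into paragraph-aligned chunks under an approximate token budget.

    chars_per_token=4 is a rough heuristic, not GLM's real tokenizer.
    """
    max_chars = max_tokens * chars_per_token
    chunks, current = [], ""
    for para in text.split("\n\n"):
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
            continue
        if current:
            chunks.append(current)
        # A single paragraph longer than the budget is hard-split.
        while len(para) > max_chars:
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        current = para
    if current:
        chunks.append(current)
    return chunks

def summarize_long(text, summarize, max_tokens=4000):
    """Map-reduce: summarize each chunk, then summarize the partial summaries.

    `summarize` is any function str -> str, e.g. one wrapping the API call.
    If the combined partials are still too long, this step could be repeated.
    """
    partials = [summarize(chunk) for chunk in chunk_text(text, max_tokens)]
    if len(partials) == 1:
        return partials[0]
    return summarize("\n\n".join(partials))
```

Splitting on blank lines keeps paragraphs intact, which tends to give the model more coherent chunks than cutting at a fixed character offset.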

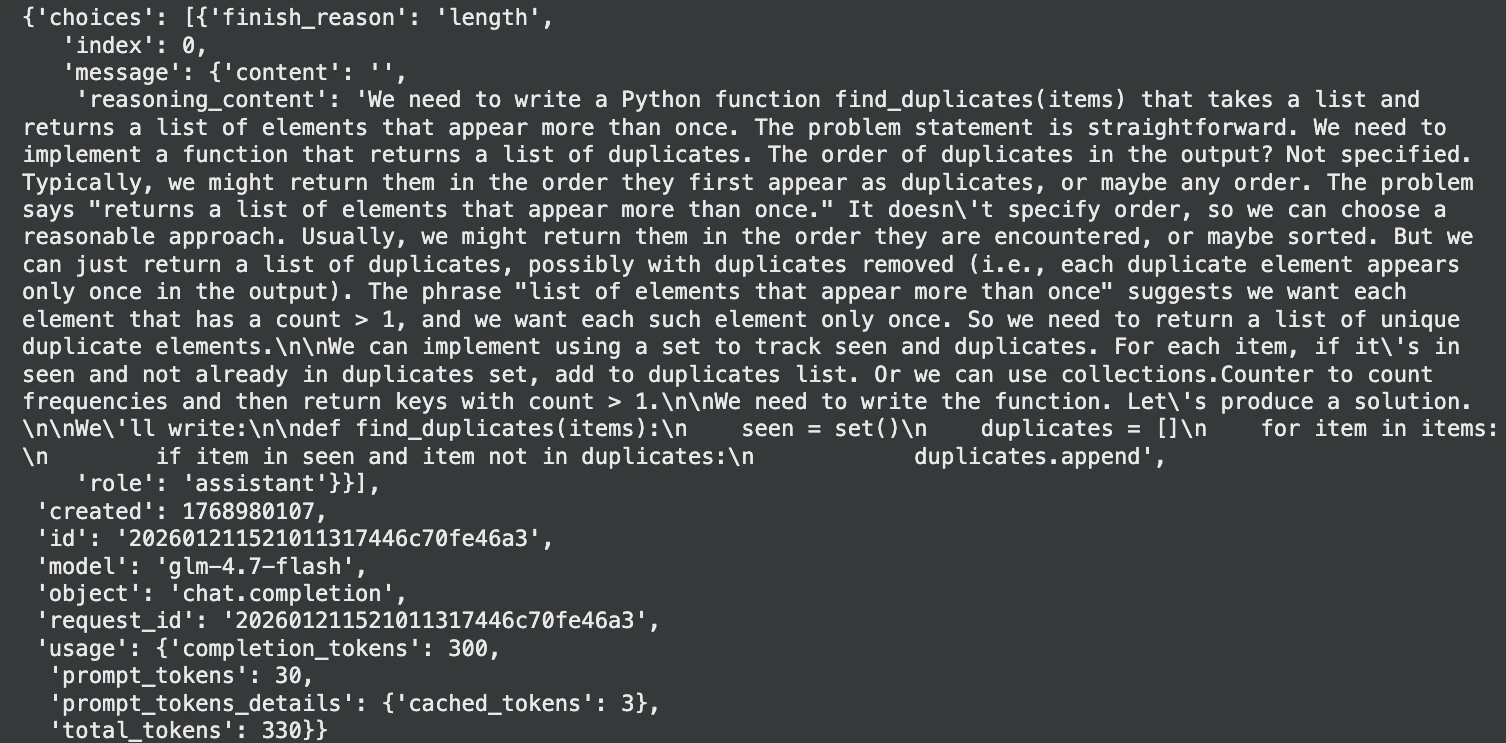

3. Advanced Coding Assistance

GLM-4.7 Flash is particularly strong at coding. You can ask it to write functions, explain complicated code, or even debug.

```python
# Reuses requests, api_url, and headers from the first example.
code_task = (
    "Write a Python function `find_duplicates(items)` that takes a list "
    "and returns a list of elements that appear more than once."
)

payload = {
    "model": "glm-4.7-flash",
    "messages": [{"role": "user", "content": code_task}],
    "temperature": 0.2,  # low temperature for more deterministic code
    "max_tokens": 300,
}

response = requests.post(api_url, json=payload, headers=headers)
response.raise_for_status()
code_answer = response.json()["choices"][0]["message"]["content"]

print(code_answer)
```
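When asking a model for code, it helps to have a reference to check its answer against. Below is one straightforward hand-written version of the requested function (not the model's output): it counts occurrences with `collections.Counter` and returns each repeated element once, in order of first appearance. It assumes the list items are hashable.

```python
from collections import Counter

def find_duplicates(items):
    """Return elements that appear more than once, in order of first appearance."""
    counts = Counter(items)
    seen = set()
    result = []
    for item in items:
        # Keep an item the first time we meet it, if it occurs more than once.
        if counts[item] > 1 and item not in seen:
            result.append(item)
            seen.add(item)
    return result

print(find_duplicates(["a", "b", "a", "c", "b", "a"]))  # ['a', 'b']
```

Comparing the model's version against a reference like this (or running both on the same inputs) is a quick way to catch subtle differences, such as returning an element once per extra occurrence.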

Key Improvements That Matter

GLM-4.7 Flash is not an ordinary upgrade; it brings substantial improvements over earlier versions.

- Enhanced Coding and "Vibe Coding": The model was optimized on large code datasets, and its performance on coding benchmarks is competitive with larger proprietary models. It also leans into "vibe coding", paying attention to code formatting, style, and even the look of generated UIs to produce a smoother, more professional result.

- Stronger Multi-Step Reasoning: Reasoning is a distinguishing strength of this release, improved in three ways:

  - Interleaved Reasoning: The model thinks through the instructions before responding or calling a tool, which makes it better at following complex instructions.

  - Preserved Reasoning: It retains its reasoning across multiple turns of a conversation, so it does not lose context during a long, complex task.

  - Turn-Level Control: Developers can set how much reasoning the model does for each query, trading speed against accuracy.

- Speed and Cost-Efficiency: The Flash version is built for speed and low cost. Zhipu AI offers it to developers for free, and its API pricing is much lower than that of most competitors, which puts powerful AI within reach of projects of any size.
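Turn-level control and multi-turn context can be sketched as request construction. Chat-completions APIs carry context by resending the conversation history in `messages`, so the sketch keeps a running history and decides per turn whether to ask for reasoning. The `thinking` field with `{"type": "enabled"}` or `{"type": "disabled"}` is an assumption based on how Z.AI has exposed this switch for GLM-4.x models; check the current API reference before relying on it. The `needs_deep_reasoning` heuristic is purely hypothetical.

```python
def build_turn_payload(history, user_text, think, model="glm-4.7-flash"):
    """Build a request body for the next turn without mutating `history`.

    Assumption: reasoning is toggled with a `thinking` field of the form
    {"type": "enabled"} / {"type": "disabled"}; verify in Z.AI's API docs.
    """
    messages = history + [{"role": "user", "content": user_text}]
    return {
        "model": model,
        "messages": messages,  # full history carries context across turns
        "thinking": {"type": "enabled" if think else "disabled"},
    }

def needs_deep_reasoning(user_text):
    """Hypothetical heuristic: reason on long or clearly multi-step requests."""
    keywords = ("step by step", "debug", "prove", "plan", "refactor")
    text = user_text.lower()
    return len(text) > 400 or any(k in text for k in keywords)

history = [
    {"role": "user", "content": "What does HTTP 404 mean?"},
    {"role": "assistant", "content": "The server could not find the requested resource."},
]
question = "Debug why my Flask route returns 404 after I added a URL prefix."
payload = build_turn_payload(history, question, think=needs_deep_reasoning(question))
print(payload["thinking"])  # {'type': 'enabled'}
```

Quick factual follow-ups can skip reasoning for lower latency, while debugging or planning turns pay for it; after each reply, append the assistant message to `history` before building the next turn.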

Use Cases: From Agentic Coding to Enterprise AI

GLM-4.7 Flash's versatility opens the door to many applications.

- Agentic Coding and Automation: The model can act as an AI software agent that is given a high-level goal and produces a complete, multi-part solution. It is especially good at rapid prototyping and generating boilerplate code.

- Long-Form Content Analysis: Its large context window is ideal for summarizing long reports, analyzing log files, or answering questions that require extensive documentation.

- Enterprise Solutions: Because GLM-4.7 Flash is open source, companies can fine-tune and self-host it on their internal data to build their own privately owned AI assistants.

Performance That Speaks Volumes

Benchmark results back up these performance claims. The GLM-4.7 family has posted top results among open-source models on demanding coding benchmarks such as SWE-Bench and LiveCodeBench.

On SWE-Bench, which involves fixing real GitHub issues, GLM-4.7 scored 73.8 percent. It also performed strongly in math and reasoning, scoring 95.7 percent on AIME (the American Invitational Mathematics Examination) and improving on its predecessor by roughly 12 percentage points on HLE (Humanity's Last Exam), a difficult reasoning benchmark. These figures are reported for the full GLM-4.7 model, and they indicate that the family does not merely compete with other models of its kind but often outperforms them.

Why GLM-4.7 Flash Is a Big Deal

This model matters for several reasons:

- High Performance at Low Cost: It offers capabilities that rival high-end proprietary models at a small fraction of the cost. This makes advanced AI available to individual developers and startups as well as large companies.

- Open Source and Flexible: GLM-4.7 Flash is free and open, which gives you full control. You can customize it for specific domains, deploy it locally to ensure data privacy, and avoid vendor lock-in.

- Developer-Centric by Design: The model integrates easily into developer workflows and supports an OpenAI-compatible API with built-in tool support.

- End-to-End Problem Solving: GLM-4.7 Flash can help work through larger, more complicated tasks as a sequence of steps. This frees developers to focus on high-level design and new ideas instead of getting lost in implementation details.
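Tool support in an OpenAI-compatible API usually means describing functions as JSON Schema under a `tools` field and reading any `tool_calls` back from the assistant message. The sketch below assumes Z.AI follows that OpenAI convention, including `arguments` arriving as a JSON-encoded string. The `run_tests` tool is hypothetical, and actually executing the tool and sending its result back as a `tool` message is left out for brevity.

```python
import json

def make_tool(name, description, properties, required):
    """Describe a callable function in the OpenAI-style `tools` format."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }

# Hypothetical tool: run the project's test suite under a given path.
run_tests = make_tool(
    name="run_tests",
    description="Run the unit tests under a directory and return the results.",
    properties={"path": {"type": "string", "description": "Directory containing tests"}},
    required=["path"],
)

payload = {
    "model": "glm-4.7-flash",
    "messages": [{"role": "user", "content": "Fix the failing tests in ./src"}],
    "tools": [run_tests],
}

def parse_tool_calls(response_json):
    """Return (name, arguments) pairs the model asked to call, if any."""
    message = response_json["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    # Assumption: arguments arrive as a JSON string, as in OpenAI's format.
    return [(c["function"]["name"], json.loads(c["function"]["arguments"])) for c in calls]
```

An agent loop then alternates: send `payload`, run whatever `parse_tool_calls` returns, append the results to `messages`, and repeat until the model answers without requesting a tool.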

Conclusion

GLM-4.7 Flash is a significant step toward AI that is strong, useful, and widely accessible. Whether you are building the next great app, automating complex processes, or simply want a smarter coding companion, GLM-4.7 Flash gives you the means to build more in less time. The era of the fully empowered developer has arrived, and open-source models such as GLM-4.7 Flash are at the forefront.

Frequently Asked Questions

Q. What is GLM-4.7 Flash?

A. GLM-4.7 Flash is an open-source, lightweight language model designed for developers, offering strong performance in coding, reasoning, and text generation with high efficiency.

Q. What is a Mixture-of-Experts (MoE) model?

A. It is a model design in which many specialized sub-networks ("experts") exist, but only a few are activated for each token, which makes the model very efficient.

Q. How large is the context window?

A. The GLM-4.7 series supports a context window of up to 200,000 tokens, allowing it to process very large amounts of text at once.
