If you are keeping up with the latest developments in AI and LLMs, you have probably noticed that much of the progress still comes from building larger models or from better computation routing. Well, what if there were another route? Enter Engram, a new method from DeepSeek AI that is changing how we think about scaling language models.

What Problem Does Engram Solve?

Consider this scenario: you type "Alexander the Great" into a language model. The model spends valuable compute reconstructing this common phrase from scratch, every single time. It's like having a brilliant mathematician who has to count out all ten digits again before solving any complex equation.

Current transformer models have no dedicated way to simply "look up" common patterns. They simulate memory retrieval through computation, which is inefficient. Engram introduces what the researchers call conditional memory, a complement to the conditional computation found in Mixture-of-Experts (MoE) models.

The results speak for themselves. In benchmark tests, Engram-27B showed clear improvements over comparable MoE models:

- 5.0-point gain on BBH reasoning tasks

- 3.4-point improvement on MMLU knowledge tests

- 3.0-point boost on HumanEval code generation

- 97.0 vs 84.2 accuracy on multi-query needle-in-a-haystack tests

Key Features of Engram:

The important thing options of Engram are:

- Sparsity Allocation: The authors identified a U-shaped scaling law that guides optimal capacity allocation, framing the split between neural computation (MoE) and static memory (Engram) as a trade-off.

- Empirical Verification: The Engram-27B model delivers consistent gains over MoE baselines across knowledge, reasoning, code, and math, under strict iso-parameter and iso-FLOPs constraints.

- Mechanistic Analysis: The authors' analysis indicates that Engram frees the early layers from reconstructing static patterns, which may help preserve the model's effective depth for complex reasoning.

- System Efficiency: The module uses deterministic addressing, which allows very large embedding tables to be offloaded to host memory with only a slight increase in inference time.
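Deterministic addressing means a sequence's lookup addresses depend only on its token IDs, not on any hidden state, so they can be computed before the forward pass reaches the Engram layer and the needed rows can be fetched ahead of time. The sketch below illustrates that idea under simplifying assumptions: a NumPy array stands in for a table held in host memory, and `toy_slot`, `plan_lookups`, and `prefetch` are hypothetical helpers, not Engram's actual code or hash function.

```python
from typing import List

import numpy as np

TABLE_ROWS = 10_007  # hypothetical number of rows in the table
DIM = 16             # hypothetical embedding width

# Stand-in for a large embedding table kept in host (CPU) memory
host_table = np.random.default_rng(0).standard_normal((TABLE_ROWS, DIM)).astype(np.float32)


def toy_slot(prev_tok: int, tok: int) -> int:
    """Hypothetical bigram hash: the address depends only on token IDs."""
    return (prev_tok * 31 + tok) % TABLE_ROWS


def plan_lookups(token_ids: List[int]) -> np.ndarray:
    """Compute every bigram address up front, before any layer runs."""
    pairs = zip(token_ids, token_ids[1:])
    return np.array([toy_slot(a, b) for a, b in pairs], dtype=np.int64)


def prefetch(token_ids: List[int]) -> np.ndarray:
    """Gather only the rows this sequence needs.

    In a real system this host-to-device copy could overlap with the
    computation of earlier layers; here it is just a NumPy gather.
    """
    return host_table[plan_lookups(token_ids)]


rows = prefetch([5, 17, 17, 42])
print(rows.shape)  # (3, 16)
```

Because `plan_lookups` needs nothing but the token IDs, the gather can be scheduled early. A router that scores hidden states, as in MoE, cannot be planned this way, since its decision only exists once the layer's input has been computed.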

How Does Engram Actually Work?

Engram has been compared to a high-speed lookup table that lets a language model access frequent patterns quickly.

The Core Architecture

Engram's approach rests on a simple but powerful idea: N-gram embeddings (embeddings for sequences of N consecutive tokens) that can be looked up in constant time, O(1). Rather than storing every possible phrase combination, it uses hash functions to map patterns to embeddings efficiently.
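A quick calculation shows why hashing is needed. The sketch below compares the number of possible trigrams for a hypothetical 50,000-token vocabulary with the size of a fixed table, then hashes each trigram of a short sequence into that table. The vocabulary size, table size, and the polynomial hash `slot` are illustrative assumptions; Engram's own hash differs.

```python
from typing import List, Tuple

VOCAB = 50_000          # hypothetical vocabulary size
TABLE_SIZE = 1_000_003  # hypothetical number of table slots (fixed memory)


def ngrams(tokens: List[int], n: int) -> List[Tuple[int, ...]]:
    """All contiguous windows of n tokens in the sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def slot(gram: Tuple[int, ...]) -> int:
    """Hypothetical polynomial hash: constant work for a fixed N,
    no matter how many distinct N-grams exist."""
    h = 0
    for t in gram:
        h = (h * 131 + t) % TABLE_SIZE
    return h


print(f"possible trigrams: {VOCAB ** 3:,}")  # 125,000,000,000,000
print(f"table slots:       {TABLE_SIZE:,}")
for g in ngrams([7, 19, 4, 88], 3):
    print(g, "->", slot(g))
```

The table stays about a million rows while the space of possible trigrams is over a hundred trillion. The price is that unrelated N-grams can land in the same slot, which is the collision problem that multi-head hashing is meant to soften.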

This architecture has three main components:

- Tokenizer Compression: Before looking up patterns, Engram normalizes tokens, so "Apple" and "apple" refer to the same concept. This shrinks the effective vocabulary by 23%, making the system more efficient.

- Multi-Head Hashing: To reduce the impact of collisions (different patterns mapping to the same location), Engram uses multiple hash functions. Think of it as having several different phone books: if one gives you the wrong number, the others have your back.

- Context-Aware Gating: This is the clever part. Not every retrieved memory is relevant, so Engram uses an attention-like mechanism to decide how much to trust each lookup given the current context. If a pattern is out of place, the gate value drops toward zero and the pattern is effectively ignored.
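The gating step can be sketched as one scalar gate per lookup, computed from how well the retrieved vector matches the current hidden state. This is a simplified illustration, not Engram's exact formulation: the projections `W_q` and `W_k` are hypothetical (random here rather than trained), and the scaled dot product followed by a sigmoid is an assumed attention-like scoring rule.

```python
from typing import Tuple

import numpy as np

rng = np.random.default_rng(0)
D = 8  # hypothetical hidden size

# Hypothetical learned projections (random here, trained in a real model)
W_q = rng.standard_normal((D, D)) / np.sqrt(D)
W_k = rng.standard_normal((D, D)) / np.sqrt(D)


def sigmoid(x: float) -> float:
    return float(1.0 / (1.0 + np.exp(-x)))


def gated_memory(hidden: np.ndarray, memory: np.ndarray) -> Tuple[float, np.ndarray]:
    """Scale a retrieved memory vector by a context-dependent gate in [0, 1]."""
    q = hidden @ W_q                   # what the current context is "asking for"
    k = memory @ W_k                   # what the retrieved memory "offers"
    score = float(q @ k) / np.sqrt(D)  # attention-like compatibility score
    g = sigmoid(score)
    return g, g * memory               # g near 0 means the lookup is effectively ignored


hidden = rng.standard_normal(D)
memory = rng.standard_normal(D)
g, out = gated_memory(hidden, memory)
print(f"gate = {g:.3f}")
```

A sigmoid is used here rather than a softmax so that each lookup is accepted or suppressed on its own, instead of competing with other lookups for a fixed share of weight.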

The Scaling Law Discovery

Among the many interesting findings, the U-shaped scaling law stands out. The researchers found that performance peaks when about 75-80% of the capacity is allocated to MoE and only 20-25% to Engram memory.

Full MoE (100%) means the model has no dedicated memory and therefore wastes computation reconstructing common patterns. No MoE (0%) means the model cannot do sophisticated reasoning because it has too little computational capacity. The sweet spot is where the two are balanced.
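As a worked example of that split, the sketch below treats "capacity" as a parameter budget and applies the reported 20-25% Engram share to it. The 20B budget is a hypothetical figure chosen for illustration, not a number from the paper.

```python
from typing import Tuple


def split_budget(total_params: float, engram_fraction: float) -> Tuple[float, float]:
    """Split a fixed parameter budget between MoE experts and Engram memory."""
    if not 0.0 <= engram_fraction <= 1.0:
        raise ValueError("engram_fraction must be between 0 and 1")
    engram = total_params * engram_fraction
    return total_params - engram, engram


BUDGET = 20e9  # hypothetical 20B-parameter budget, for illustration only
for frac in (0.20, 0.25):
    moe, mem = split_budget(BUDGET, frac)
    print(f"Engram share {frac:.0%}: MoE {moe / 1e9:.1f}B, Engram {mem / 1e9:.1f}B")
```

The two endpoints of the U-shaped curve correspond to `engram_fraction=0.0` (full MoE) and `engram_fraction=1.0` (no MoE).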

Getting Began with Engram

- Install Python 3.8 or higher.

- Install NumPy using the following command:

```bash
pip install numpy
```

Hands-On: Understanding N-gram Hashing

Let's see how Engram's core hashing mechanism works with a practical task.

Implementing a Basic N-gram Hash Lookup

In this task, we'll see how Engram uses deterministic hashing to map token sequences to embeddings, avoiding the need to store every possible N-gram separately.

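1: Setting up the environment

```python
import numpy as np
from typing import List

# Configuration
MAX_NGRAM = 3        # largest N-gram size (2-grams and 3-grams are used)
VOCAB_SIZE = 1000    # toy vocabulary size
NUM_HEADS = 4        # hash heads per N-gram size
EMBEDDING_DIM = 128  # total embedding width, split across heads
```

2: Create a simple tokenizer compression simulator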

```python
def compress_token(token_id: int) -> int:
    # Simulate normalization by mapping similar tokens to one canonical ID.
    # Toy rule: fold every ID onto the lower half of the vocabulary.
    # In real Engram, this step uses NFKC normalization.
    return token_id % (VOCAB_SIZE // 2)


def compress_sequence(token_ids: List[int]) -> np.ndarray:
    # Apply the compression to each token in the sequence
    return np.array([compress_token(tid) for tid in token_ids])
```

3: Implement the hash function

```python
def hash_ngram(tokens: List[int],
               ngram_size: int,
               head_idx: int,
               table_size: int) -> int:
    # Simplified multiplicative-XOR hash in the style used by Engram
    multipliers = [2 * i + 1 for i in range(ngram_size)]
    mix = 0
    # Hash only the last `ngram_size` tokens (the N-gram ending at this position)
    for i, token in enumerate(tokens[-ngram_size:]):
        mix ^= token * multipliers[i]
    # Add head-specific variation so each head uses a different mapping
    mix ^= head_idx * 10007
    return mix % table_size


# Test it
sample_tokens = [42, 108, 256, 512]
compressed = compress_sequence(sample_tokens)
hash_value = hash_ngram(
    compressed.tolist(),
    ngram_size=2,
    head_idx=0,
    table_size=5003,
)
print(f"Hash value for 2-gram: {hash_value}")
```

4: Build a multi-head embedding lookup

def multi_head_lookup(token_sequence: Record[int],

embedding_tables: Record[np.ndarray]) -> np.ndarray:

compressed = compress_sequence(token_sequence)

embeddings = []

for ngram_size in vary(2, MAX_NGRAM + 1):

for head_idx in vary(NUM_HEADS):

desk = embedding_tables[ngram_size - 2][head_idx]

table_size = desk.form[0]

hash_idx = hash_ngram(

compressed.tolist(),

ngram_size,

head_idx,

table_size

)

embeddings.append(desk[hash_idx])

return np.concatenate(embeddings)

# Initialize random embedding tables

tables = [

[

np.random.randn(5003, EMBEDDING_DIM // NUM_HEADS)

for _ in range(NUM_HEADS)

]

for _ in vary(MAX_NGRAM - 1)

]

consequence = multi_head_lookup([42, 108, 256], tables)

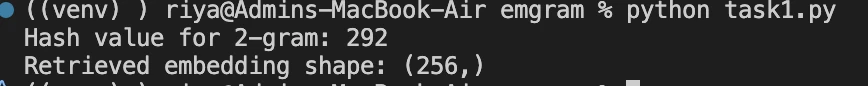

print(f"Retrieved embedding form: {consequence.form}")Output:

Understanding Your Results:

Hash value 292: Your 2-gram pattern is located at this index in the embedding table. The last two compressed tokens are 256 and 12 (since 512 mod 500 = 12), and 256 × 1 XOR 12 × 3 = 256 XOR 36 = 292. Because the index depends only on the input tokens and the head index, the same input always maps to the same slot: the mapping is deterministic.

Shape (256,): A total of 8 embeddings were retrieved (2 N-gram sizes × 4 heads each), and each embedding has a dimension of 32 (EMBEDDING_DIM=128 / NUM_HEADS=4). Concatenated: 8 × 32 = 256 dimensions.

Note: You can also explore the core logic of the Engram module in the official implementation.

Real-World Performance Gains

It is no surprise that Engram helps with knowledge tasks, but it also makes reasoning and code generation noticeably better.

Engram shifts local pattern recognition to memory lookups, which frees the attention mechanism to focus more on global context. The effect on long-context performance is substantial. On the RULER benchmark with 32k context windows, Engram achieved:

- Multi-query NIAH: 97.0 (vs 84.2 baseline)

- Variable Tracking: 89.0 (vs 77.0 baseline)

- Common Words Extraction: 99.6 (vs 73.0 baseline)
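The absolute gains can be read off directly from the scores listed above. The short calculation below only restates those numbers; no new results are introduced.

```python
# RULER (32k context) scores quoted above: (Engram, baseline)
ruler = {
    "Multi-query NIAH": (97.0, 84.2),
    "Variable Tracking": (89.0, 77.0),
    "Common Words Extraction": (99.6, 73.0),
}

# Round to one decimal to match the precision of the reported scores
gains = {task: round(engram - base, 1) for task, (engram, base) in ruler.items()}
for task, gain in gains.items():
    print(f"{task}: +{gain} points")
```

The largest jump, on Common Words Extraction, is roughly twice the size of the other two.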

Conclusion

Engram opens up very interesting research directions. Could the fixed hash functions be replaced with learned hashing? What if the memory were dynamic and updated in real time during inference? How would the approach hold up with even larger contexts?

DeepSeek-AI's Engram repository has all the technical details and code, and the method is already being adopted in real-world systems. The main takeaway is that AI progress is not only a matter of bigger models or better routing. Sometimes it is about giving models the right tools, and sometimes that tool is simply a very efficient memory system.

Frequently Asked Questions

Q1. What is Engram?

A. Engram is a memory module for language models that lets them directly look up common token patterns instead of recomputing them every time. Think of it as giving an LLM a fast, reliable memory alongside its reasoning ability.

Q2. Why do transformers need a module like Engram?

A. Traditional transformers simulate memory through computation. Even for very common phrases, the model recomputes patterns repeatedly. Engram removes this inefficiency by introducing conditional memory, freeing computation for reasoning instead of recall.

Q3. How is Engram different from Mixture-of-Experts (MoE)?

A. MoE focuses on routing computation selectively. Engram complements this by routing memory selectively. MoE decides which experts should think; Engram decides which patterns should be remembered and retrieved directly.
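The contrast between the two can be made concrete with a toy sketch. Both mechanisms are heavily simplified, and `router_weights`, `moe_route`, and `engram_slot` are hypothetical names: the MoE side picks the top-k experts from scores computed on a hidden state, so the choice depends on activations, while the Engram-style side derives a table slot from token IDs alone.

```python
from typing import List

import numpy as np

rng = np.random.default_rng(0)
D, NUM_EXPERTS, TABLE_SIZE = 8, 4, 1009  # hypothetical sizes

router_weights = rng.standard_normal((D, NUM_EXPERTS))  # hypothetical learned router


def moe_route(hidden: np.ndarray, k: int = 2) -> List[int]:
    """MoE-style: choose the top-k experts from scores on the hidden state."""
    scores = hidden @ router_weights
    return sorted(np.argsort(scores)[-k:].tolist())


def engram_slot(token_ids: List[int]) -> int:
    """Engram-style: a deterministic address from the last two token IDs only."""
    a, b = token_ids[-2:]
    return (a * 131 + b) % TABLE_SIZE


hidden = rng.standard_normal(D)
print("experts:", moe_route(hidden))
print("memory slot:", engram_slot([42, 108]))
```

Changing the hidden state can change which experts are selected, but `engram_slot` returns the same slot for the same tokens regardless of context; deciding how much that retrieved memory should count is left to the gating step.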
