We saw in part 6 how to use OCI's GenAI Service. The GenAI Service uses GPUs for the LLMs, but did you know it's also possible to use GenAI directly in MySQL HeatWave? By default, these LLMs run on CPU, which reduces the cost.

This means that when you are connected to your MySQL HeatWave database, you can call HeatWave AI procedures directly from your application.

Connecting in Python to your DB System

I'll demonstrate this using Python on the webserver. We already saw in part 5 how to install mysql-connector-python.

Before we can use the HeatWave GenAI procedures, we need to grant an extra privilege to our user:

```
SQL > grant execute on sys.* to starterkit_user;
```

Now, let's modify our previous Python script to use HeatWave GenAI:

import mysql.connector

cnx = mysql.connector.join(consumer="starterkit_user",

password='St4rt3rK4t[[',

host="10.0.1.19",

database="starterkit")

query = "select version(), @@version_comment"

cursor = cnx.cursor()

cursor.execute(query)

for (ver, ver_comm) in cursor:

print("{} {}".format(ver_comm, ver))

cursor.execute("call sys.HEATWAVE_CHAT("What is MySQL HeatWave?")")

rows = cursor.fetchall()

for row in rows:

print(row)

cursor.close()

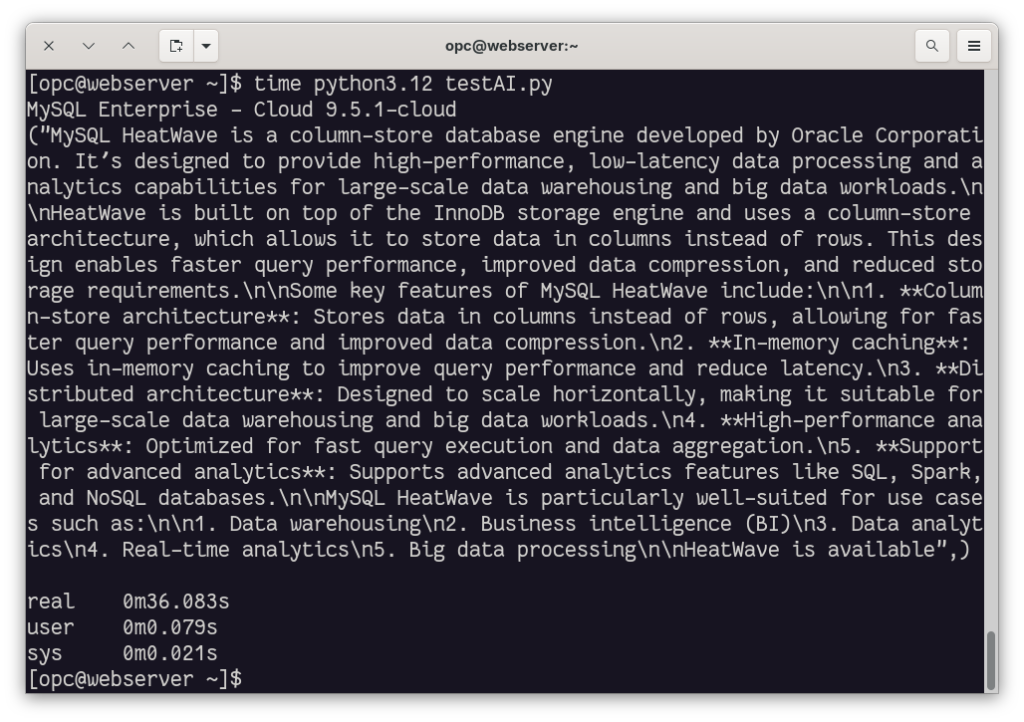

cnx.close()And we can execute it:

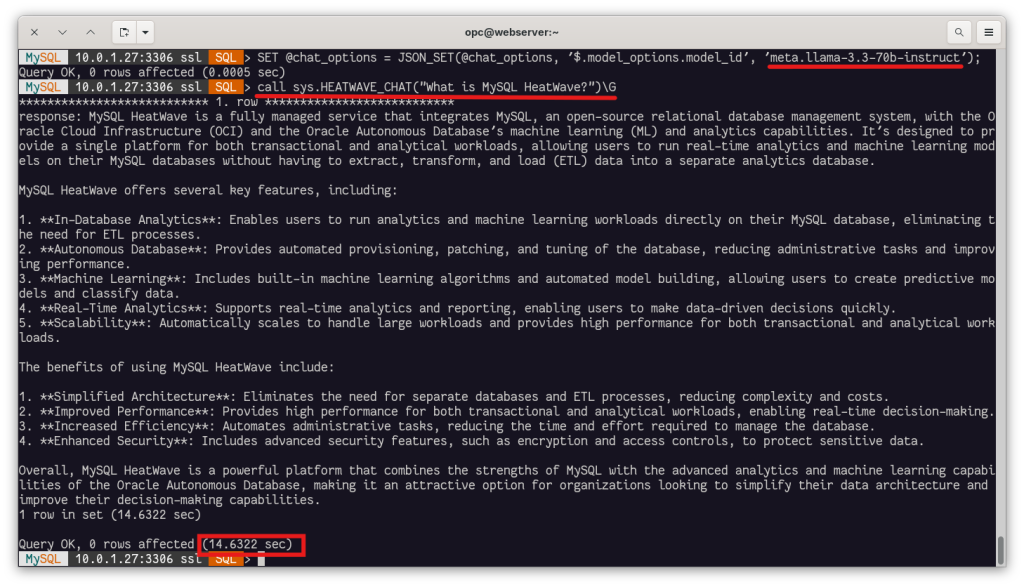

The first time, it takes some time, but after that, the execution time becomes faster:
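The script above writes the question straight into the SQL string, so any quote inside the question has to be escaped by hand. A minimal sketch that passes the question as a bound parameter instead, and times each call so you can compare the first execution with the later ones, could look like this. `ask_heatwave` is a hypothetical helper name; it assumes an open `mysql.connector` connection like `cnx` in the script.

```python
import time


def ask_heatwave(cnx, question):
    """Call sys.HEATWAVE_CHAT and return (rows, elapsed_seconds).

    `cnx` is assumed to be an open mysql.connector connection.
    """
    cursor = cnx.cursor()
    try:
        start = time.perf_counter()
        # The connector replaces %s with a properly quoted literal, so a
        # question containing quotes cannot break the CALL statement.
        cursor.execute("CALL sys.HEATWAVE_CHAT(%s)", (question,))
        rows = cursor.fetchall()
        return rows, time.perf_counter() - start
    finally:
        cursor.close()
```

Calling `ask_heatwave(cnx, "What is MySQL HeatWave?")` a few times in a row and printing the elapsed time is a simple way to observe the warm-up effect described above.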

Supported Models

You can also list the supported models in HeatWave:

```
SQL > SELECT * FROM sys.ML_SUPPORTED_LLMS\G
*************************** 1. row ***************************
         provider: HeatWave
         model_id: llama3.2-1b-instruct-v1
availability_date: 2025-05-20
     capabilities: ["GENERATION"]
    default_model: 0
*************************** 2. row ***************************
         provider: HeatWave
         model_id: llama3.2-3b-instruct-v1
availability_date: 2025-05-20
     capabilities: ["GENERATION"]
    default_model: 1
*************************** 3. row ***************************
         provider: HeatWave
         model_id: all_minilm_l12_v2
availability_date: 2024-07-01
     capabilities: ["TEXT_EMBEDDINGS"]
    default_model: 0
*************************** 4. row ***************************
         provider: HeatWave
         model_id: multilingual-e5-small
availability_date: 2024-07-24
     capabilities: ["TEXT_EMBEDDINGS"]
    default_model: 1
4 rows in set, 1 warning (1.4607 sec)
```

OCI GenAI Service in HeatWave

You can also use the OCI Generative AI Service (with GPUs) directly from HeatWave.

This gives access to more LLMs:

```
SQL > SELECT provider, model_id FROM sys.ML_SUPPORTED_LLMS;
+---------------------------+--------------------------------+
| provider                  | model_id                       |
+---------------------------+--------------------------------+
| HeatWave                  | llama2-7b-v1                   |
| HeatWave                  | llama3-8b-instruct-v1          |
| HeatWave                  | llama3.1-8b-instruct-v1        |
| HeatWave                  | llama3.2-1b-instruct-v1        |
| HeatWave                  | llama3.2-3b-instruct-v1        |
| HeatWave                  | mistral-7b-instruct-v1         |
| HeatWave                  | mistral-7b-instruct-v3         |
| HeatWave                  | all_minilm_l12_v2              |
| HeatWave                  | multilingual-e5-small          |
| OCI Generative AI Service | cohere.command-latest          |
| OCI Generative AI Service | cohere.command-plus-latest     |
| OCI Generative AI Service | cohere.command-a-03-2025       |
| OCI Generative AI Service | meta.llama-3.3-70b-instruct    |
| OCI Generative AI Service | cohere.command-r-08-2024       |
| OCI Generative AI Service | cohere.command-r-plus-08-2024  |
| OCI Generative AI Service | cohere.embed-english-v3.0      |
| OCI Generative AI Service | cohere.embed-multilingual-v3.0 |
+---------------------------+--------------------------------+
17 rows in set (1.8044 sec)
```

However, you need to give your DB System access to these services. Accessing the OCI GenAI Service from HeatWave is not part of the Always Free tier.
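To see at a glance which models run locally in HeatWave and which go through the OCI Generative AI Service (and therefore need that extra access), a small helper can group the rows of `sys.ML_SUPPORTED_LLMS` by provider and capability. This is a sketch that assumes the `provider`, `model_id` and `capabilities` columns shown in the outputs above, with `capabilities` returned as a JSON array; `models_by_provider` is a hypothetical helper name.

```python
import json
from collections import defaultdict


def models_by_provider(rows):
    """Group (provider, model_id, capabilities) rows by provider and capability.

    Returns {provider: {capability: [model_id, ...]}}. `capabilities` is
    assumed to be a JSON array such as '["GENERATION"]', as str or bytes.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for provider, model_id, capabilities in rows:
        if isinstance(capabilities, (bytes, bytearray)):
            capabilities = capabilities.decode("utf-8")
        # Parse JSON text; keep the value as-is if it is already a list.
        caps = json.loads(capabilities) if isinstance(capabilities, str) else capabilities
        for cap in caps:
            grouped[provider][cap].append(model_id)
    # Convert the nested defaultdicts into plain dicts for printing/comparison.
    return {provider: dict(caps) for provider, caps in grouped.items()}
```

For example, pass it the result of `cursor.execute("SELECT provider, model_id, capabilities FROM sys.ML_SUPPORTED_LLMS")` followed by `cursor.fetchall()`.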

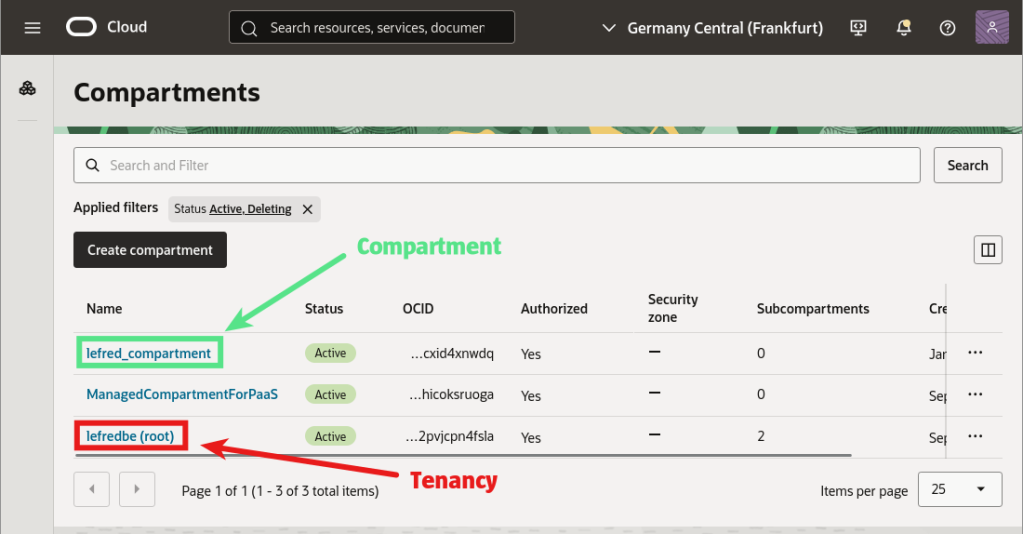

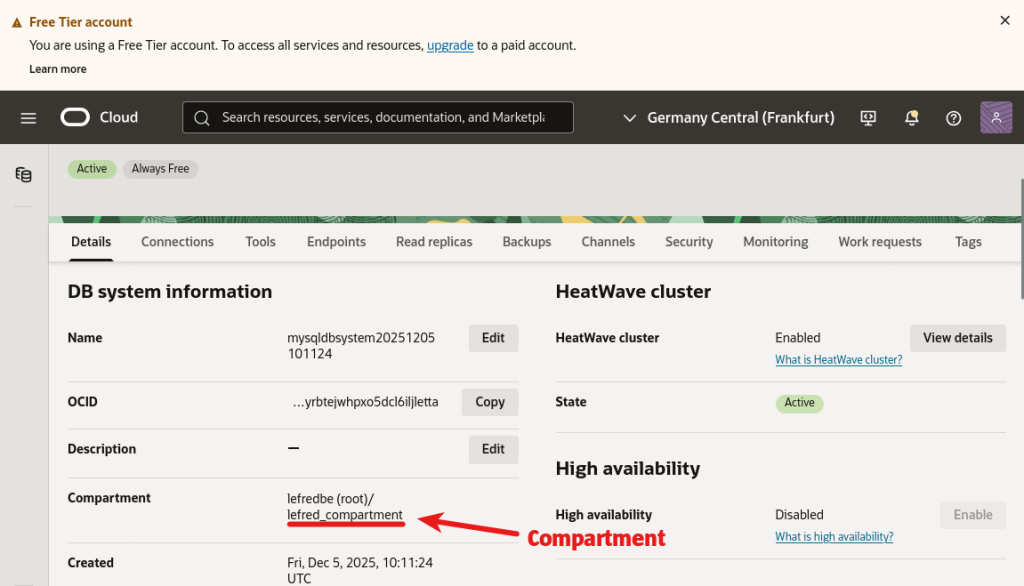

To grant such access, check whether your DB System is in your tenancy or in a dedicated compartment (such as a sandbox).

In our case, the DB System is in a compartment.

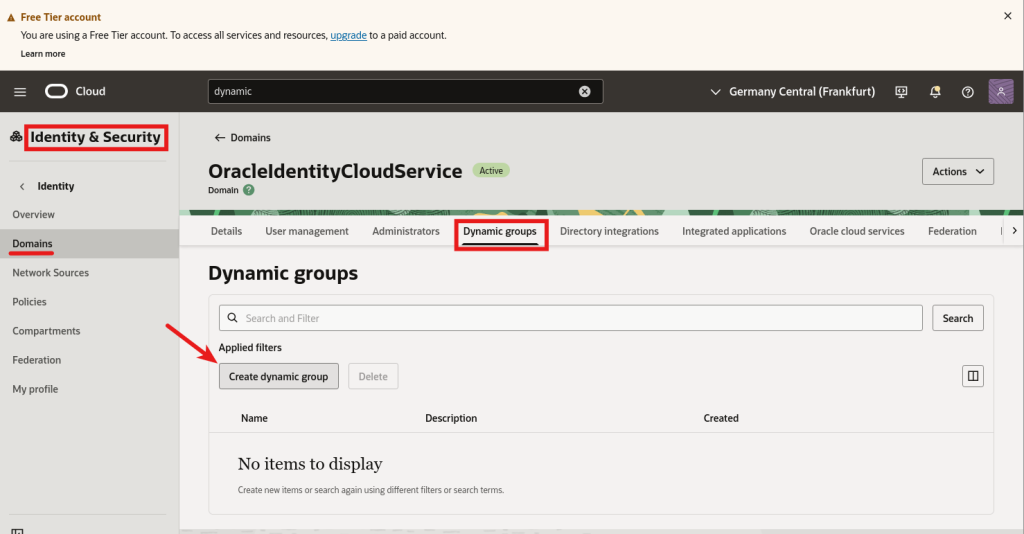

Dynamic Group

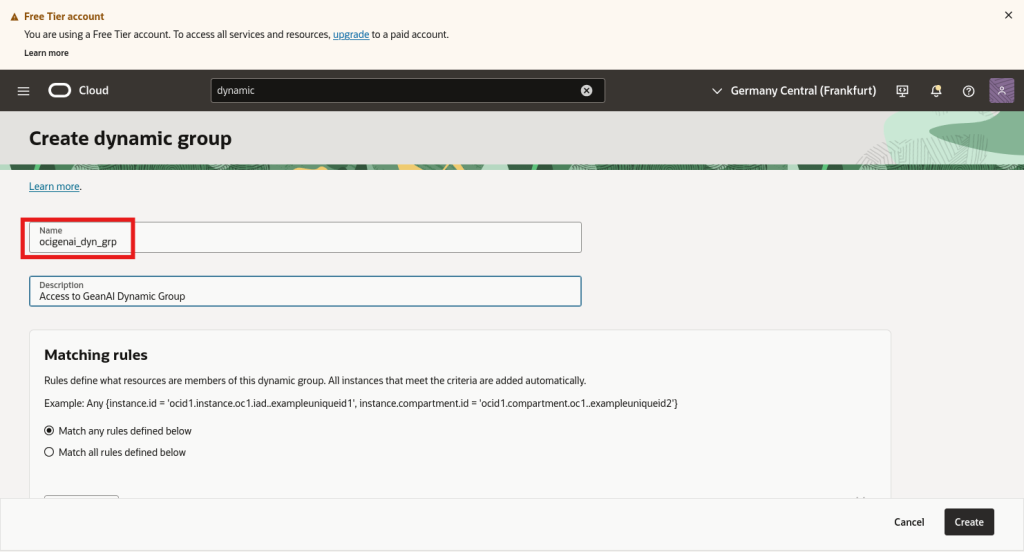

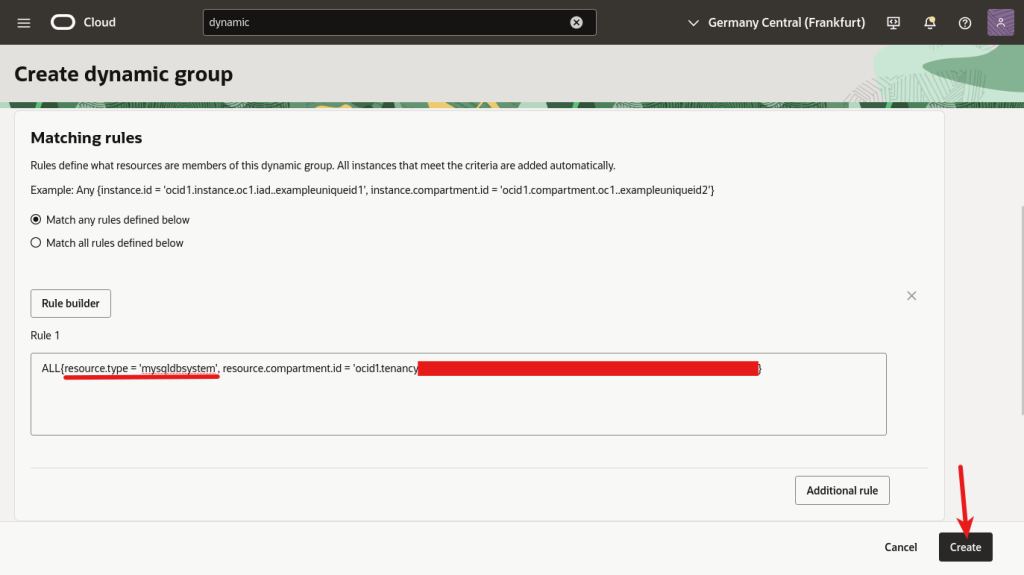

We have to create a dynamic group to which we'll provide access to the GenAI Service:

The group's name is important, as we'll use it later when creating the policies.

If the DB System were not part of a specific compartment but deployed directly in the tenancy, we would remove the `,resource.compartment.id= 'ocid1.tenancy….` part.
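The two variants of the matching rule differ only in that compartment condition. A sketch that builds either variant is shown below; it assumes HeatWave DB Systems are matched with `resource.type='mysqldbsystem'` (compare with the rule in your own console), and `dynamic_group_rule` is a hypothetical helper name.

```python
def dynamic_group_rule(compartment_ocid=None):
    """Build a dynamic-group matching rule for MySQL HeatWave DB Systems.

    ASSUMPTION: DB Systems are matched with resource.type='mysqldbsystem'.
    Pass the compartment OCID when the DB System lives in a compartment;
    omit it when the DB System is deployed directly in the tenancy.
    """
    conditions = ["resource.type='mysqldbsystem'"]
    if compartment_ocid:
        conditions.append(f"resource.compartment.id = '{compartment_ocid}'")
    # ALL {...} requires every listed condition to match.
    return "ALL {" + ", ".join(conditions) + "}"
```

Calling it without an argument gives the tenancy-level rule; calling it with your compartment's OCID gives the compartment-scoped one.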

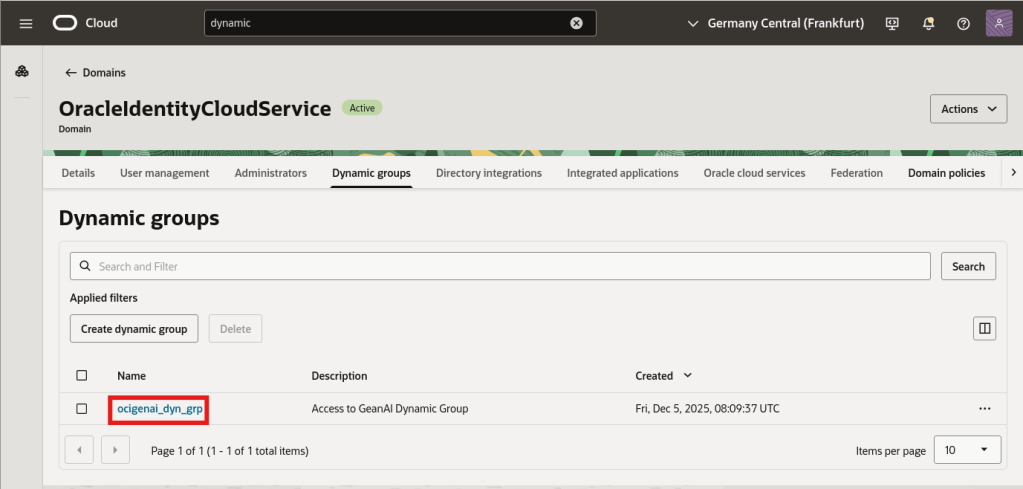

Once created, the group should be visible:

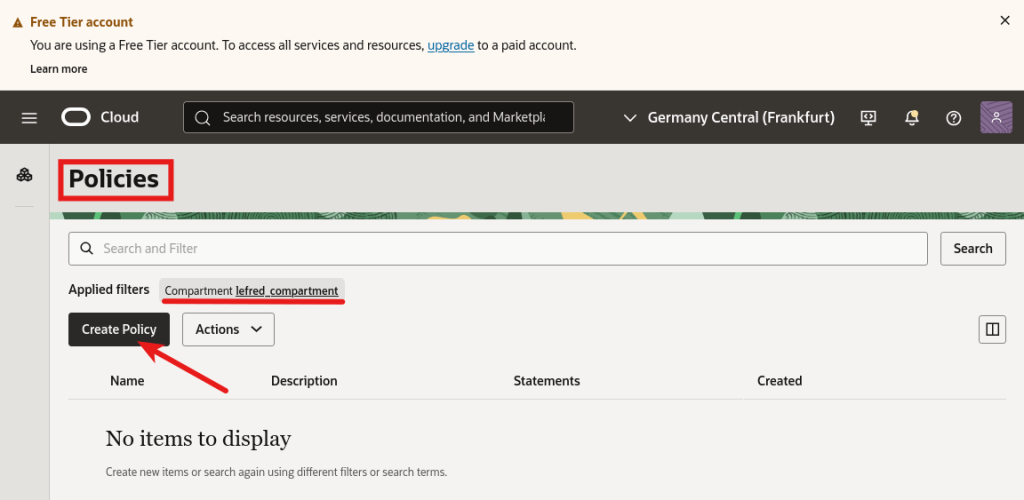

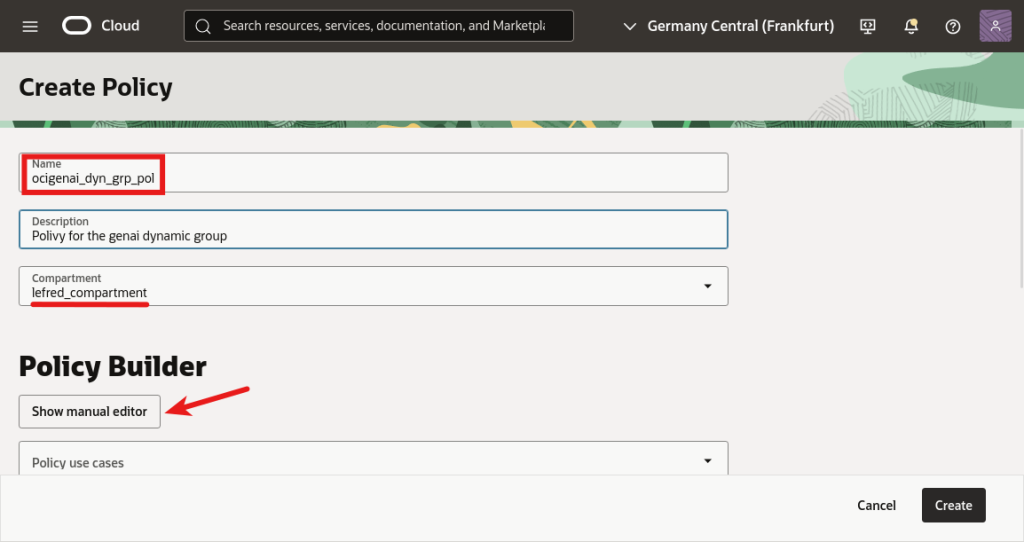

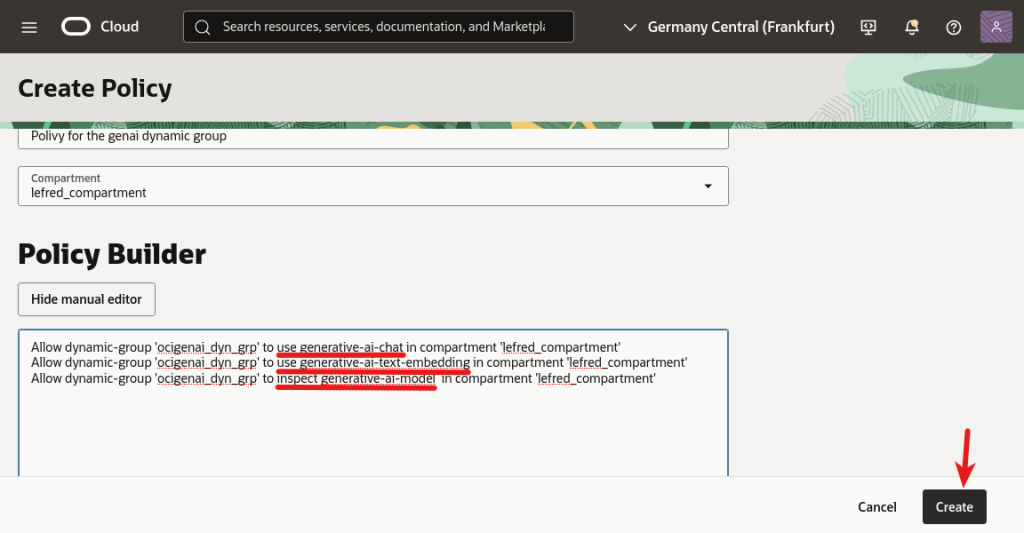

Policy

Finally, we can create the policy:

We need to add the three required rules:

- use generative-ai-chat

- use generative-ai-text-embedding

- inspect generative-ai-model

These rules must be granted to the dynamic group we created.

If the DB System runs in a compartment, the three rules are scoped to that compartment (`in compartment <name of the compartment>`). Otherwise, if the DB System runs in the root compartment of the tenancy, the rules should be scoped to the tenancy (`in tenancy`).
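Put together, each rule becomes one policy statement for the dynamic group, with the scope chosen as described above. The sketch below generates the three statements; `genai_policy_statements` is a hypothetical helper name, and depending on your tenancy the dynamic group name may need to be qualified with its identity domain.

```python
# The three permissions listed above, in "verb resource-type" form.
REQUIRED_PERMISSIONS = (
    "use generative-ai-chat",
    "use generative-ai-text-embedding",
    "inspect generative-ai-model",
)


def genai_policy_statements(dynamic_group, compartment=None):
    """Return the policy statements granting a dynamic group GenAI access.

    With a compartment name the scope is 'in compartment <name>';
    without one (DB System in the root compartment) it is 'in tenancy'.
    """
    scope = f"in compartment {compartment}" if compartment else "in tenancy"
    return [
        f"Allow dynamic-group {dynamic_group} to {permission} {scope}"
        for permission in REQUIRED_PERMISSIONS
    ]
```

For instance, with a hypothetical dynamic group `heatwave-genai-dg` in a compartment named `sandbox`, the first statement is `Allow dynamic-group heatwave-genai-dg to use generative-ai-chat in compartment sandbox`.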

Using OCI GenAI with HeatWave

Now we can specify a model from the OCI Generative AI Service and call the procedure sys.HEATWAVE_CHAT() directly from our database connection:
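From Python, selecting the model could be sketched as follows. This assumes that `sys.HEATWAVE_CHAT()` reads the model from the `@chat_options` session variable under `model_options.model_id`, as described in the HeatWave GenAI documentation; `chat_with_model` is a hypothetical helper name, and `cnx` is an open `mysql.connector` connection.

```python
def chat_with_model(cnx, question, model_id):
    """Ask sys.HEATWAVE_CHAT a question using an explicitly chosen model.

    ASSUMPTION: HEATWAVE_CHAT picks the model from the @chat_options session
    variable, under the key model_options.model_id.
    """
    cursor = cnx.cursor()
    try:
        # Build @chat_options server-side; the model id is a bound parameter.
        cursor.execute(
            "SET @chat_options = JSON_OBJECT('model_options', "
            "JSON_OBJECT('model_id', %s))",
            (model_id,),
        )
        # The question is also bound, so quotes in it are escaped safely.
        cursor.execute("CALL sys.HEATWAVE_CHAT(%s)", (question,))
        return cursor.fetchall()
    finally:
        cursor.close()
```

For example, `chat_with_model(cnx, "What is MySQL HeatWave?", "meta.llama-3.3-70b-instruct")` would send the question to one of the OCI-hosted models listed earlier, provided the dynamic group and policy are in place.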

Let's see how to use HeatWave GenAI in a video:

Conclusion

With just a few extra steps, we've been able to extend our MySQL HeatWave setup to support GenAI capabilities directly from our MySQL connection, and even leverage the power of the OCI Generative AI Service when needed. Whether you are working with the built-in HeatWave LLMs or accessing more advanced models running on GPUs, the integration remains seamless and fully managed within the database layer.

This approach not only simplifies development but also unlocks new possibilities for AI-powered applications, from natural language chat interfaces to embedding-based search, without orchestrating external services or data pipelines. As HeatWave continues to expand its supported models and capabilities, it is becoming a compelling solution for bringing generative AI directly to your data.