Earth observation (EO) constellations capture enormous volumes of high-resolution imagery every day, but most of it never reaches the ground in time for model training. Downlink bandwidth is the main bottleneck. Images can sit in orbit for days while ground models train on partial and delayed data.

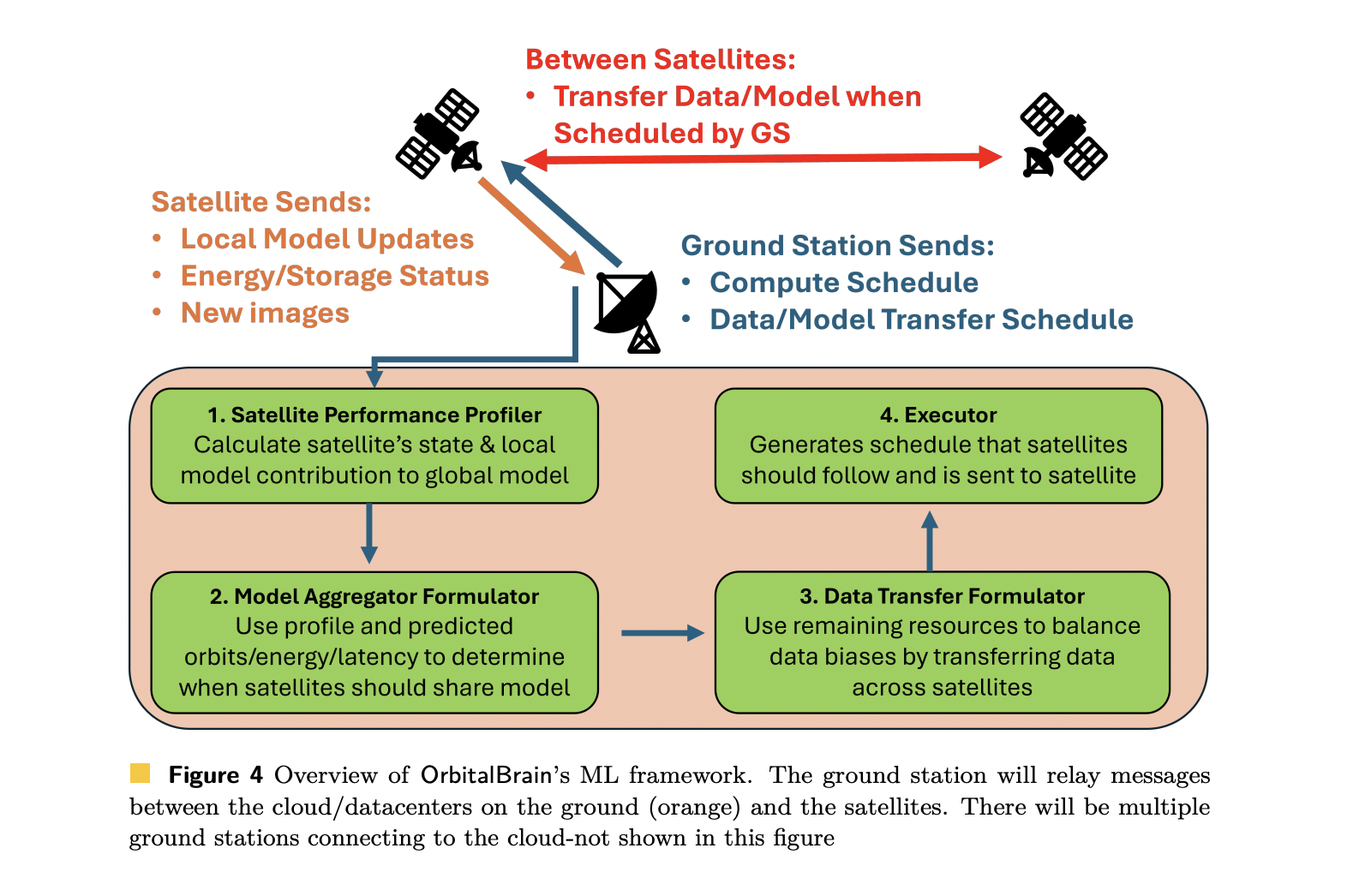

Microsoft researchers introduced the ‘OrbitalBrain’ framework as a different approach. Instead of using satellites only as sensors that relay data to Earth, it turns a nanosatellite constellation into a distributed training system. Models are trained, aggregated, and updated directly in space, using onboard compute, inter-satellite links, and predictive scheduling of power and bandwidth.

The BentPipe Bottleneck

Most commercial constellations use the BentPipe model. Satellites collect images, store them locally, and dump them to ground stations whenever they pass overhead.

The research team evaluates a Planet-like constellation with 207 satellites and 12 ground stations. At the maximum imaging rate, the system captures 363,563 images per day. With 300 MB per image and realistic downlink constraints, only 42,384 images can be transmitted in that period, around 11.7% of what was captured. Even if images are compressed to 100 MB, only 111,737 images, about 30.7%, reach the ground within 24 hours.
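The percentages above follow directly from the quoted image counts. The short sketch below reproduces that arithmetic; the function names are illustrative, and the daily volume figure is only what the quoted counts imply, not a number stated in the article.

```python
# Figures quoted in the article for the Planet-like constellation.
CAPTURED_PER_DAY = 363_563


def downlink_fraction(delivered: int, captured: int = CAPTURED_PER_DAY) -> float:
    """Fraction of captured images that reach the ground within the day."""
    return delivered / captured


def implied_daily_volume_tb(delivered: int, image_mb: int) -> float:
    """Downlinked volume implied by the counts, in decimal TB (1 TB = 1e6 MB)."""
    return delivered * image_mb / 1e6


# (image size in MB, images delivered per day), as quoted in the article.
for image_mb, delivered in [(300, 42_384), (100, 111_737)]:
    pct = 100 * downlink_fraction(delivered)
    volume = implied_daily_volume_tb(delivered, image_mb)
    print(f"{image_mb} MB images: {pct:.1f}% delivered, ~{volume:.1f} TB/day implied")
```

Both cases imply a similar daily volume, which is consistent with a downlink budget that is roughly fixed regardless of how large each image is.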

Limited onboard storage adds another constraint. Old images must be deleted to make room for new ones, which means many potentially useful samples are never available for ground-based training.

Why Conventional Federated Learning isn’t Enough

Federated learning (FL) seems like an obvious fit for satellites. Each satellite could train locally and send model updates to a ground server for aggregation. The research team evaluates several FL baselines adapted to this setting:

- AsyncFL

- SyncFL

- FedBuff

- FedSpace

However, these methods assume more stable communication and more flexible power than satellites can provide. When the research team simulates realistic orbital dynamics, intermittent ground contact, limited power, and non-i.i.d. data across satellites, these baselines show unstable convergence and large accuracy drops, in the range of 10%–40% compared to idealized conditions.

The time-to-accuracy curves flatten and oscillate, especially when satellites are isolated from ground stations for long periods. Many local updates become stale before they can be aggregated.
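To make the staleness problem concrete, here is a minimal sketch of one common way asynchronous FL schemes discount late updates: a polynomial decay on the mixing weight. This is a generic illustration, not the rule used by any of the baselines above or by OrbitalBrain; `staleness_weight`, `merge_update`, the exponent, and `base_mix` are all assumptions made for the example.

```python
from typing import List


def staleness_weight(staleness: int, exponent: float = 0.5) -> float:
    """Polynomial discount: 1.0 for a fresh update, shrinking as rounds pass.

    `staleness` is the number of global rounds elapsed since the satellite
    last synchronized (a hypothetical bookkeeping value).
    """
    return (1.0 + staleness) ** -exponent


def merge_update(
    global_params: List[float],
    local_params: List[float],
    staleness: int,
    base_mix: float = 0.5,
) -> List[float]:
    """Blend a local model into the global one, trusting stale updates less."""
    mix = base_mix * staleness_weight(staleness)
    return [(1.0 - mix) * g + mix * l for g, l in zip(global_params, local_params)]


# A fresh update moves the global model halfway; one that is 3 rounds old
# moves it only a quarter of the way.
print(merge_update([0.0], [1.0], staleness=0))
print(merge_update([0.0], [1.0], staleness=3))
```

When a satellite is out of ground contact for many rounds, the discounted weight approaches zero, so its local training effort contributes little to the global model.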

OrbitalBrain: Constellation-Centric Training in Space

OrbitalBrain starts from 3 observations:

- Constellations are typically operated by a single commercial entity, so raw data can be shared across satellites.

- Orbits, ground station visibility, and solar power are predictable from orbital elements and power models.

- Inter-satellite links (ISLs) and onboard accelerators are now practical on nanosatellites.

The framework exposes 3 actions for each satellite in a scheduling window:

- Local Compute (LC): train the local model on stored images.

- Model Aggregation (MA): exchange and aggregate model parameters over ISLs.

- Data Transfer (DT): exchange raw images between satellites to reduce data skew.

A controller running in the cloud, reachable via ground stations, computes a predictive schedule for each satellite. The schedule decides which action to prioritize in each future window, based on forecasts of energy, storage, orbital visibility, and link opportunities.
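The per-window choice among LC, MA, and DT can be pictured with a small sketch. It assumes a simple greedy rule: take the highest-utility action that fits the forecast energy budget and, for MA and DT, has an ISL available. The `WindowForecast` fields, the utility values, and the greedy rule itself are hypothetical, since the article does not spell out the controller's actual optimization.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class Action(Enum):
    LC = "local_compute"
    MA = "model_aggregation"
    DT = "data_transfer"


@dataclass
class WindowForecast:
    """Hypothetical forecast for one satellite in one scheduling window."""
    energy_wh: float              # energy expected to be available
    has_isl: bool                 # whether an inter-satellite link is predicted
    utility: Dict[Action, float]  # estimated benefit of each action
    cost_wh: Dict[Action, float]  # estimated energy cost of each action


def pick_action(window: WindowForecast) -> Optional[Action]:
    """Greedy choice: best-utility action that is feasible in this window."""
    feasible = [
        action
        for action in Action
        if window.cost_wh[action] <= window.energy_wh
        and (action is Action.LC or window.has_isl)  # MA and DT need a link
    ]
    if not feasible:
        return None  # idle, e.g. to recharge
    return max(feasible, key=lambda action: window.utility[action])


window = WindowForecast(
    energy_wh=5.0,
    has_isl=True,
    utility={Action.LC: 0.4, Action.MA: 0.7, Action.DT: 0.2},
    cost_wh={Action.LC: 4.0, Action.MA: 1.0, Action.DT: 2.0},
)
print(pick_action(window))  # Action.MA
```

A real controller would plan across many future windows at once, but the single-window view shows why predictable orbits and power matter: every input to the decision can be forecast ahead of time.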

Core Components: Profiler, MA, DT, Executor

- Guided performance profiler

- Model aggregation over ISLs

- Data transferrer for label rebalancing

- Executor
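The data transferrer is described in the takeaways as using Jensen–Shannon divergence on label histograms to decide where raw-image exchanges help. The sketch below computes that divergence for two per-satellite label counts; how OrbitalBrain turns the score into an actual transfer plan is not covered here, and the function names are illustrative.

```python
import math
from typing import List, Sequence


def normalize(hist: Sequence[float]) -> List[float]:
    """Turn raw label counts into a probability distribution."""
    total = sum(hist)
    if total <= 0:
        raise ValueError("histogram must contain at least one sample")
    return [h / total for h in hist]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in bits; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(hist_a: Sequence[float], hist_b: Sequence[float]) -> float:
    """Jensen-Shannon divergence between two label histograms, in [0, 1]."""
    p, q = normalize(hist_a), normalize(hist_b)
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]  # mixture distribution
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Two satellites that have seen completely different classes are maximally
# divergent; identical label mixes score zero.
print(js_divergence([10, 0], [0, 10]))  # 1.0
print(js_divergence([5, 5], [50, 50]))  # 0.0
```

A high score between two satellites suggests their local datasets are skewed in different directions, which is the situation where swapping raw images would most reduce non-i.i.d. effects.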

Experimental setup

OrbitalBrain is implemented in Python on top of the CosmicBeats orbital simulator and the FLUTE federated learning framework. Onboard compute is modeled as an NVIDIA Jetson Orin Nano 4GB GPU, with power and communication parameters calibrated from public satellite and radio specifications.

The research team simulates 24-hour traces for 2 real constellations:

- Planet: 207 satellites with 12 ground stations.

- Spire: 117 satellites.

They evaluate 2 EO classification tasks:

- fMoW: around 360k RGB images, 62 classes, DenseNet-161 with the last 5 layers trainable.

- So2Sat: around 400k multispectral images, 17 classes, ResNet-50 with the last 5 layers trainable.

Results: faster time-to-accuracy and higher accuracy

OrbitalBrain is compared with BentPipe, AsyncFL, SyncFL, FedBuff, and FedSpace under full physical constraints.

For fMoW, after 24 hours:

- Planet: OrbitalBrain reaches 52.8% top-1 accuracy.

- Spire: OrbitalBrain reaches 59.2% top-1 accuracy.

For So2Sat:

- Planet: 47.9% top-1 accuracy.

- Spire: 47.1% top-1 accuracy.

These results improve over the best baseline by 5.5%–49.5%, depending on dataset and constellation.

In terms of time-to-accuracy, OrbitalBrain achieves a 1.52×–12.4× speedup compared to state-of-the-art ground-based or federated learning approaches. The gain comes from keeping satellites that cannot currently reach a ground station in the training loop through aggregation over ISLs, and from rebalancing data distributions via DT.
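For readers unfamiliar with the metric, time-to-accuracy is the first time at which a training run reaches a target accuracy, and the speedup is the ratio of those times between two runs. The sketch below shows the calculation on made-up curves; the numbers are placeholders for illustration only and are not taken from the paper.

```python
from typing import List, Optional, Tuple

# A training curve as (hours elapsed, top-1 accuracy) pairs, sorted by time.
Curve = List[Tuple[float, float]]


def time_to_accuracy(curve: Curve, target: float) -> Optional[float]:
    """First recorded time at which accuracy meets the target, else None."""
    for hours, accuracy in curve:
        if accuracy >= target:
            return hours
    return None


def speedup(baseline: Curve, candidate: Curve, target: float) -> Optional[float]:
    """How many times faster the candidate reaches the target accuracy."""
    t_base = time_to_accuracy(baseline, target)
    t_cand = time_to_accuracy(candidate, target)
    if t_base is None or t_cand is None or t_cand == 0:
        return None  # undefined if either run never reaches the target
    return t_base / t_cand


# Placeholder curves, invented for this example.
baseline_curve = [(6.0, 0.30), (12.0, 0.40), (24.0, 0.45)]
candidate_curve = [(3.0, 0.35), (6.0, 0.42), (12.0, 0.50)]
print(speedup(baseline_curve, candidate_curve, target=0.40))  # 2.0
```

Note that the speedup is undefined when a baseline never reaches the target within the trace, which is one reason such comparisons are reported for a specific target accuracy.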

Ablation studies show that disabling MA or DT significantly degrades both convergence speed and final accuracy. Additional experiments indicate that OrbitalBrain remains robust when cloud cover hides part of the imagery, when only a subset of satellites participates, and when image sizes and resolutions vary.

Implications for satellite AI workloads

OrbitalBrain demonstrates that model training can move into space and that satellite constellations can act as distributed ML systems, not just data sources. By coordinating local training, model aggregation, and data transfer under strict bandwidth, power, and storage constraints, the framework enables fresher models for tasks like forest fire detection, flood monitoring, and climate analytics, without waiting days for data to reach terrestrial data centers.

Key Takeaways

- BentPipe downlink is the core bottleneck: Planet-like EO constellations can only downlink about 11.7% of captured 300 MB images per day, and about 30.7% even with 100 MB compression, which severely limits ground-based model training.

- Standard federated learning fails under real satellite constraints: AsyncFL, SyncFL, FedBuff, and FedSpace degrade by 10%–40% in accuracy when realistic orbital dynamics, intermittent links, power limits, and non-i.i.d. data are applied, leading to unstable convergence.

- OrbitalBrain co-schedules compute, aggregation, and data transfer in orbit: A cloud controller uses forecasts of orbit, power, storage, and link opportunities to select Local Compute, Model Aggregation via ISLs, or Data Transfer per satellite, maximizing a utility function per action.

- Label rebalancing and model staleness are handled explicitly: A guided profiler tracks model staleness and loss to define compute utility, while the data transferrer uses Jensen–Shannon divergence on label histograms to drive raw-image exchanges that reduce non-i.i.d. effects.

- OrbitalBrain delivers higher accuracy and up to 12.4× faster time-to-accuracy: In simulations on Planet and Spire constellations with fMoW and So2Sat, OrbitalBrain improves final accuracy by 5.5%–49.5% over BentPipe and FL baselines and achieves 1.52×–12.4× speedups in time-to-accuracy.
