Introduction

It has been a year and a half since I set up GitLab. In that time, I've used it to store my personal code, keep track of features I want to add to my own projects, and generally loved it. One thing I haven't done, though, is play with the CI/CD features. I've been wanting to, but never got around to it.

To take advantage of the CI/CD features, you need to set up a GitLab runner.

Setup

Docker

I'll be running my runners in Docker containers. There are other options, but for my home environment, this is the easiest to set up and maintain.

Let's start by installing Docker:

```shell
sudo apt-get install apt-transport-https ca-certificates curl software-properties-common -y
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
sudo apt update
sudo apt install docker-ce -y
```

This adds the Docker repository and installs the Community Edition.

GitLab Runner

Once Docker is installed and working, we can install the GitLab runner.

```shell
sudo wget -O /usr/local/bin/gitlab-runner https://gitlab-runner-downloads.s3.amazonaws.com/latest/binaries/gitlab-runner-linux-amd64
sudo chmod +x /usr/local/bin/gitlab-runner
sudo useradd --comment 'GitLab Runner' --create-home gitlab-runner --shell /bin/bash
sudo gitlab-runner install --user=gitlab-runner --working-directory=/home/gitlab-runner
sudo gitlab-runner start
```

This downloads the runner binary and makes it executable. Then it adds a new user that the runner will run as. This is a good security precaution to limit what the runner has access to on the system. It isn't complete security, but it does allow me to restrict what that particular user has access to on the server.

Finally, the runner is installed as a service and started.

Next, the runner needs to be registered. Log into GitLab and navigate to the project that will be using this runner. Its CI/CD settings should be available at this link:

https://url.to.your.gitlab.install///settings/ci_cd

Grab the GitLab CI token; you'll need it in the next step. Run the gitlab-runner register command and fill in your values as required.

```text
$ gitlab-runner register
Please enter the gitlab-ci coordinator URL (e.g. https://gitlab.com/):
https://url.to.your.gitlab.install/
Please enter the gitlab-ci token for this runner:
[redacted]
Please enter the gitlab-ci description for this runner:
[my-runner]: python-runner
Please enter the gitlab-ci tags for this runner (comma separated):
python
Registering runner... succeeded runner=66m_339h
Please enter the executor: docker-ssh+machine, docker, docker-ssh, parallels, shell, ssh, virtualbox, docker+machine, kubernetes:
docker
Please enter the default Docker image (e.g. ruby:2.1):
alpine:latest
Runner registered successfully. Feel free to start it, but if it's running already the config should be automatically reloaded!
```

My experience with Docker is limited, so I'm using the latest Alpine image. I'm sure there are better ones for a simple Python application, but this works for me, and I'm not concerned about having the absolute fastest CI/CD pipeline on my server.
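If you ever need to register the runner again (say, after rebuilding the server), the same answers can be passed as flags instead of typed at the prompts. Below is a small Python sketch that assembles that non-interactive command from the values I entered above. The flags are the ones gitlab-runner register accepts for the registration-token workflow used in this post; newer GitLab versions move to runner authentication tokens, so check them against your version. The helper name register_command and the REDACTED token are placeholders of my own.

```python
import shlex


def register_command(url, token, description, tags, image, executor="docker"):
    """Build a non-interactive `gitlab-runner register` call.

    Hypothetical helper for illustration; each flag mirrors one of the
    interactive prompts shown above.
    """
    return [
        "gitlab-runner", "register", "--non-interactive",
        "--url", url,
        "--registration-token", token,  # the CI token from Settings->CI/CD
        "--description", description,
        "--tag-list", ",".join(tags),   # comma separated, like the prompt
        "--executor", executor,
        "--docker-image", image,        # default image for jobs without one
    ]


argv = register_command(
    url="https://url.to.your.gitlab.install/",
    token="REDACTED",  # placeholder; never commit a real token
    description="python-runner",
    tags=["python"],
    image="alpine:latest",
)
# Print a copy-pasteable shell command.
print(shlex.join(argv))
```

Passing argv to subprocess.run(argv, check=True) would run the registration directly instead of printing it.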

Using the CI/CD pipeline

At this point, the GitLab runner is registered to a single project and is ready to use. You can enable your project to use this pipeline by adding a .gitlab-ci.yml file to the root of your repository.

I used it to set up the following pipeline.

This pipeline has four stages.

Static Analysis

In the Static Analysis stage, I run various analysis tools over my code. The goal here is to ensure that my code, YAML documents, and documentation are easy to read and use.

You'll notice the xenon task has a warning in the image. I have the settings fairly strict in this pipeline, allowing only a very low cyclomatic complexity. In this project, I have one function that's just barely failing my strict settings, so I've allowed the pipeline to continue if this one particular task fails.

I could either increase the allowed complexity for the code base so the warning goes away, or refuse to let the pipeline continue until I fix this function. This was one of those instances where refactoring the code hasn't been worth it yet, but I want to be reminded that I should fix it, so I let the pipeline continue and show me the error.
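To put the strict complexity setting in numbers: radon, which xenon builds on, grades each block from A to F, and rank B covers complexities 6 through 10. The sketch below reproduces radon's documented rank bands and a check in the spirit of xenon's --max-absolute B. It deliberately ignores the --max-modules and --max-average checks, and the function names and scores in the example are hypothetical.

```python
from bisect import bisect_left

# Upper bound of each rank band as documented by radon:
# A 1-5, B 6-10, C 11-20, D 21-30, E 31-40, F 41 and above.
_UPPER_BOUNDS = [5, 10, 20, 30, 40]


def cc_rank(complexity: int) -> str:
    """Map a cyclomatic complexity score to radon's letter rank."""
    return "ABCDEF"[bisect_left(_UPPER_BOUNDS, complexity)]


def blocks_over_limit(complexities: dict, max_rank: str = "B") -> list:
    """Return blocks ranked worse than max_rank (similar in spirit to
    xenon --max-absolute). Letters sort A < B < ... < F, so a plain
    string comparison is enough."""
    return [name for name, cc in complexities.items() if cc_rank(cc) > max_rank]


# Hypothetical per-function scores for my_package.
scores = {"load_config": 3, "parse_args": 7, "build_report": 11}
print(blocks_over_limit(scores))  # ['build_report']
```

A function scoring 11 lands in C, one point past the B limit, which is exactly the "barely failing" situation described above.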

Test

The Test stage should be fairly self-explanatory: it's where I run my test suite. It only runs if the Static Analysis stage completes successfully (or if I allow a specific job to fail, as with the xenon task).
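The gating between stages can be modeled in a few lines. This is a simplified sketch, not GitLab's scheduler: it assumes every job in a stage has already finished, and it treats a stage as successful when each job either passed or is marked allow_failure: true. The job names match my pipeline; the pass/fail outcomes are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    passed: bool                # outcome of the job's script
    allow_failure: bool = False


def stage_succeeded(jobs) -> bool:
    # Only failures from jobs without allow_failure block the pipeline.
    return all(job.passed or job.allow_failure for job in jobs)


def stages_reached(pipeline: dict) -> list:
    """Return the stages that get to run, in order.

    `pipeline` maps stage name -> list of Job outcomes; dicts keep
    insertion order, mirroring the `stages:` list.
    """
    reached = []
    for stage, jobs in pipeline.items():
        reached.append(stage)
        if not stage_succeeded(jobs):
            break  # later stages are skipped
    return reached


pipeline = {
    "Static Analysis": [
        Job("flake8", passed=True),
        Job("xenon", passed=False, allow_failure=True),
    ],
    "Test": [Job("pytest", passed=True)],
    "Deploy Testing": [Job("deploy_testing", passed=True)],
}
print(stages_reached(pipeline))  # ['Static Analysis', 'Test', 'Deploy Testing']
```

Flip xenon's allow_failure to False and the same failure stops the pipeline after Static Analysis.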

Deploy Testing

Once the test suite has completed successfully, I deploy the package to the test instance of PyPI. This confirms that both the build and the upload work.

Deploy Production

Deployment to production is a manual step. I did this because I don't always want to push changes to production PyPI. This is especially true if I'm only making small fixes, such as whitespace changes. I don't want to skip the whole CI process, but I also don't want to push out to production for something so minor.

Environment setup

One of my biggest complaints about the Travis CI integration with GitHub is that you can't trust environment variables in Travis CI. Since I control the server this runner is connected to, I have no problem adding environment variables into GitLab.

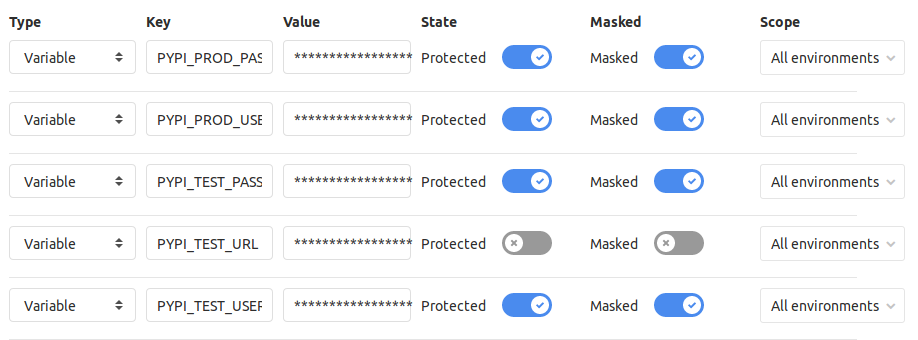

In the project, I was able to add these by going to Settings->CI/CD and expanding the Variables section. I needed to store credentials for both the test and production instances of PyPI, plus the upload URL for the test instance.

With these added, they're now available to my .gitlab-ci.yml file.
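The deploy jobs in .gitlab-ci.yml use lines like TWINE_USERNAME: $PYPI_TEST_USERNAME to point twine's environment variables at these project variables. The sketch below models that substitution with Python's string.Template, which understands the same $NAME and ${NAME} forms. It is a simplification: it ignores protected and masked variables, and leaving unknown references untouched is a choice of this sketch that may not match GitLab exactly. The credential values are placeholders.

```python
from string import Template


def resolve_job_variables(job_vars: dict, project_vars: dict) -> dict:
    """Expand $NAME / ${NAME} references in a job's variables: block
    using project-level CI/CD variables (simplified model)."""
    # safe_substitute leaves unknown references as-is instead of raising.
    return {key: Template(value).safe_substitute(project_vars)
            for key, value in job_vars.items()}


# Placeholder values standing in for what I entered under Settings->CI/CD.
project_vars = {
    "PYPI_TEST_USERNAME": "example-user",
    "PYPI_TEST_PASSWORD": "example-password",
}
deploy_testing_vars = {
    "TWINE_USERNAME": "$PYPI_TEST_USERNAME",
    "TWINE_PASSWORD": "$PYPI_TEST_PASSWORD",
}
resolved = resolve_job_variables(deploy_testing_vars, project_vars)
print(resolved["TWINE_USERNAME"])  # example-user
```

twine reads TWINE_USERNAME and TWINE_PASSWORD from the environment, which is why the jobs only need to map those names rather than pass credentials on the command line.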

.gitlab-ci.yml

The script I use to build the pipeline is as follows:

```yaml
image: "python:3.7"

.python-tag:
  tags:
    - python

before_script:
  - python --version
  - pip install .[dev]

stages:
  - Static Analysis
  - Test
  - Deploy Testing
  - Deploy Production

flake8:
  stage: Static Analysis
  extends:
    - .python-tag
  script:
    - flake8 --max-line-length=120

doc8:
  stage: Static Analysis
  extends:
    - .python-tag
  allow_failure: true
  script:
    - doc8 --max-line-length=100 --ignore-path=docs/_build/* docs/

yamllint:
  stage: Static Analysis
  extends:
    - .python-tag
  allow_failure: true
  script:
    - yamllint .

bandit:
  stage: Static Analysis
  extends:
    - .python-tag
  allow_failure: true
  script:
    - bandit -r -s B101 my_package

radon:
  stage: Static Analysis
  extends:
    - .python-tag
  script:
    - radon cc my_package/ -s -a -nb
    - radon mi my_package/ -s -nb

xenon:
  stage: Static Analysis
  extends:
    - .python-tag
  allow_failure: true
  script:
    - xenon --max-absolute B --max-modules B --max-average B my_package/

pytest:
  stage: Test
  extends:
    - .python-tag
  script:
    - pytest

deploy_testing:
  stage: Deploy Testing
  extends:
    - .python-tag
  variables:
    TWINE_USERNAME: $PYPI_TEST_USERNAME
    TWINE_PASSWORD: $PYPI_TEST_PASSWORD
  script:
    - python setup.py sdist
    - twine upload --repository-url $PYPI_TEST_URL dist/*

deploy_production:
  stage: Deploy Production
  extends:
    - .python-tag
  when: manual
  variables:
    TWINE_USERNAME: $PYPI_PROD_USERNAME
    TWINE_PASSWORD: $PYPI_PROD_PASSWORD
  script:
    - python setup.py sdist
    - twine upload dist/*
```

.gitlab-ci.yml explanation

There are a few areas of the script above that I feel need explaining.

Tags

```yaml
.python-tag:
  tags:
    - python
```

This section is a template that's used to add the python tag to a job. Because its name starts with a dot, GitLab treats it as a hidden job and never runs it on its own. The runner will only run jobs that have a tag you assigned to it. This is useful if you're building across multiple operating systems or multiple languages: you don't want a runner set up to test against a Windows environment picking up jobs meant for a Linux environment. In my case, everything runs on a single runner.
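The matching rule can be written down as a small predicate. This is a simplified model based on my reading of GitLab's behavior: a runner takes a job only if it has every tag the job lists, and untagged jobs only go to runners allowed to run untagged jobs. The run_untagged parameter is a stand-in I made up for that runner setting.

```python
def runner_can_pick(job_tags, runner_tags, run_untagged=False) -> bool:
    """Simplified model of runner/job tag matching."""
    if not job_tags:
        # Untagged jobs depend on the runner's "run untagged jobs" setting.
        return run_untagged
    # Every tag on the job must be present on the runner.
    return set(job_tags) <= set(runner_tags)


my_runner = ["python"]
print(runner_can_pick(["python"], my_runner))   # True
print(runner_can_pick(["windows"], my_runner))  # False
```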

In each job, I need to add an extends entry that lists .python-tag, as every job in the file above does. With this, the python tag is automatically applied to the job. For a simple pipeline like mine, it may seem like overkill, but it's useful if I want to apply other tags or shared steps later.
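Here is roughly what extends does with that template, as a simplified model: GitLab deep-merges the template into the job, nested mappings are merged key by key, and lists or scalars defined in the job replace the template's values rather than being concatenated. The sketch skips templates that themselves use extends, and the precedence between several templates is an assumption noted in the code.

```python
import copy


def deep_merge(base: dict, override: dict) -> dict:
    """Merge nested dicts; values from `override` win. Lists are replaced."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


def apply_extends(job: dict, templates: dict) -> dict:
    """Resolve a job's `extends` against hidden template jobs."""
    names = job.get("extends", [])
    if isinstance(names, str):  # extends accepts a single name or a list
        names = [names]
    merged = {}
    for name in names:  # assumed: later templates override earlier ones
        merged = deep_merge(merged, templates[name])
    own_keys = {k: v for k, v in job.items() if k != "extends"}
    return deep_merge(merged, own_keys)  # the job's own keys win


templates = {".python-tag": {"tags": ["python"]}}
pytest_job = {"stage": "Test", "extends": [".python-tag"], "script": ["pytest"]}
print(apply_extends(pytest_job, templates))
# {'tags': ['python'], 'stage': 'Test', 'script': ['pytest']}
```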

Allow failures

```yaml
xenon:
  stage: Static Analysis
  extends:
    - .python-tag
  allow_failure: true
  script:
    - xenon --max-absolute B --max-modules B --max-average B my_package/
```

I mentioned earlier that I allowed the xenon step to fail. This is done with the allow_failure: true setting you see here. Because this setting is in place, the pipeline will continue even if this single task fails.

Manual deployment

```yaml
deploy_production:
  stage: Deploy Production
  extends:
    - .python-tag
  when: manual
  variables:
    TWINE_USERNAME: $PYPI_PROD_USERNAME
    TWINE_PASSWORD: $PYPI_PROD_PASSWORD
  script:
    - python setup.py sdist
    - twine upload dist/*
```

The deploy to production is a manual step. I use when: manual to ensure this step doesn't happen automatically. At some point, this project will be stable enough that it won't get minor fixes anymore, and I'll want to deploy to production automatically without interaction. When I reach that point, I'll just need to remove this line. As long as the previous stages succeed, this one will then run as well.
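A toy model of what that one line changes, assuming the earlier stages have already succeeded: a job without a when: key defaults to on_success and starts on its own, while a when: manual job waits until someone triggers it from the pipeline view. The function name split_by_trigger is my own; the job dictionaries mirror the deploy_production job above.

```python
def split_by_trigger(jobs: dict):
    """Split a ready stage's jobs into those that start automatically
    and those waiting for a manual trigger (simplified model)."""
    automatic, manual = [], []
    for name, config in jobs.items():
        # `when` defaults to on_success when the key is absent.
        if config.get("when", "on_success") == "manual":
            manual.append(name)
        else:
            automatic.append(name)
    return automatic, manual


today = {"deploy_production": {"stage": "Deploy Production", "when": "manual"}}
later = {"deploy_production": {"stage": "Deploy Production"}}  # when: manual removed
print(split_by_trigger(today))  # ([], ['deploy_production'])
print(split_by_trigger(later))  # (['deploy_production'], [])
```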

Next Steps

Now that I've set this up and come to rely on it, I think my next step will be converting my home Minecraft server to use GitLab pipelines to automatically deploy updates. Look for that post in the future.