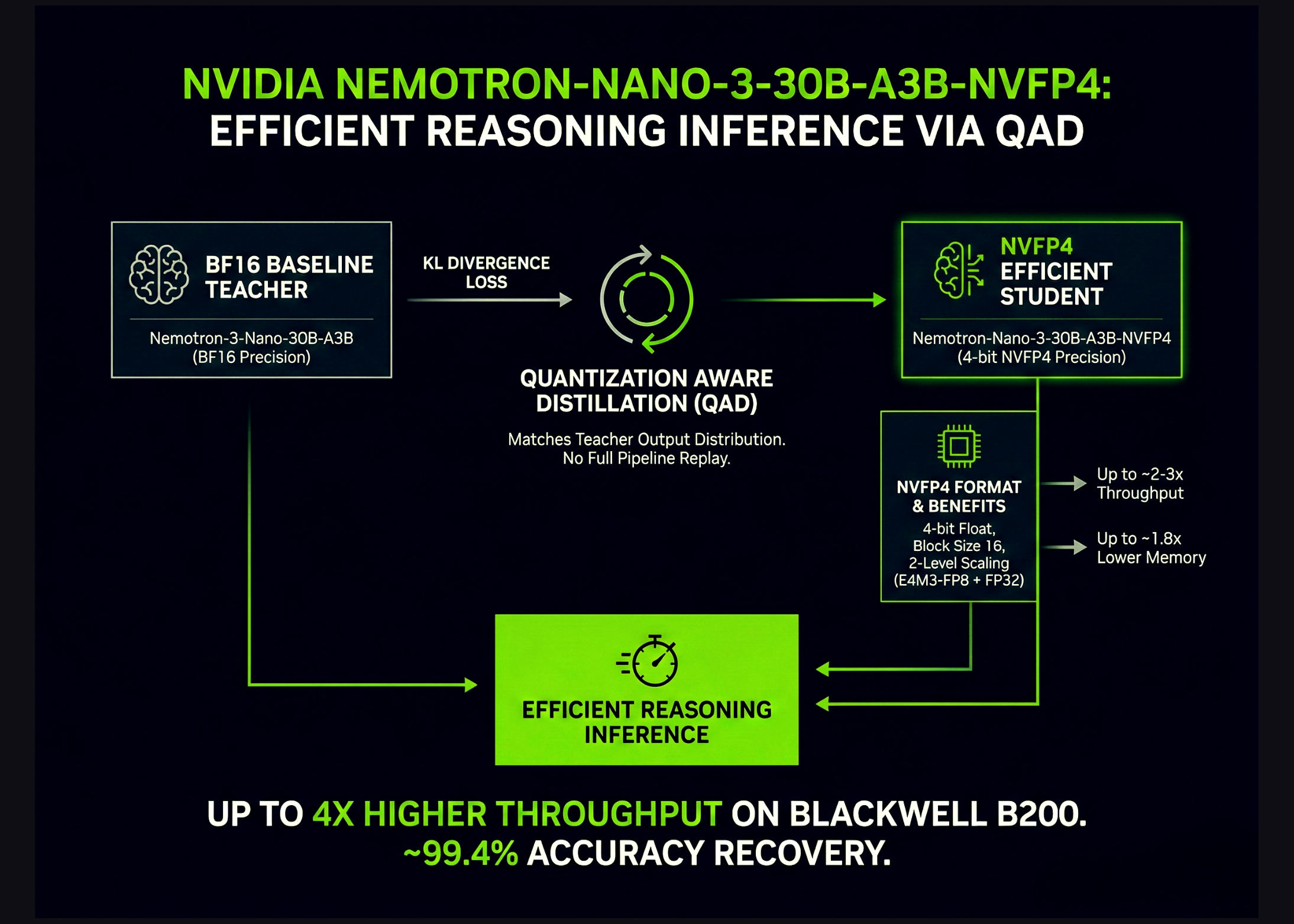

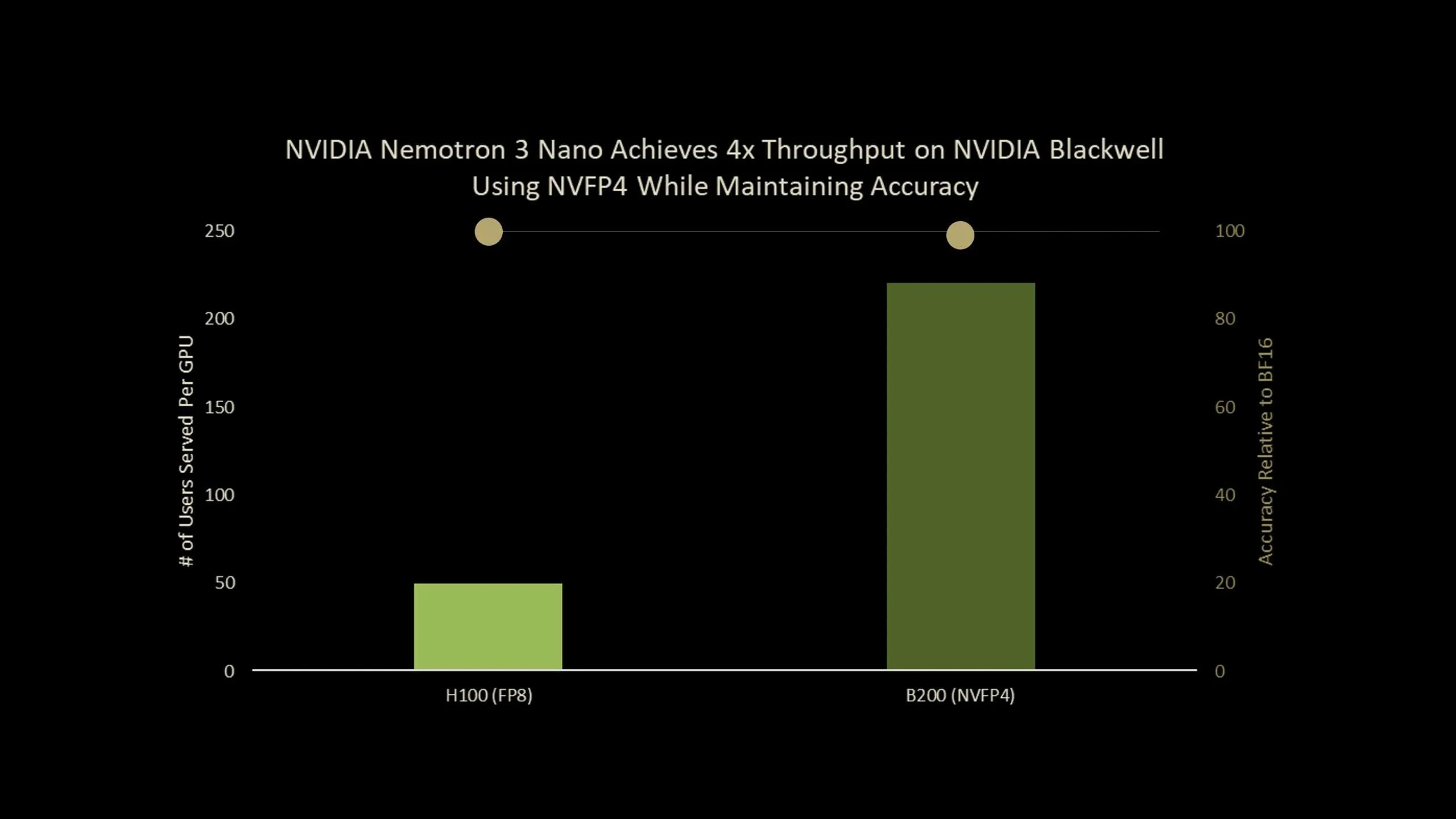

NVIDIA has released Nemotron-Nano-3-30B-A3B-NVFP4, a production checkpoint that runs a 30B parameter reasoning model in 4 bit NVFP4 format while keeping accuracy close to its BF16 baseline. The model combines a hybrid Mamba2 Transformer Mixture of Experts architecture with a Quantization Aware Distillation (QAD) recipe designed specifically for NVFP4 deployment. Overall, it is an ultra-efficient NVFP4 precision version of Nemotron-3-Nano that delivers up to 4x higher throughput on Blackwell B200.

What is Nemotron-Nano-3-30B-A3B-NVFP4?

Nemotron-Nano-3-30B-A3B-NVFP4 is a quantized version of Nemotron-3-Nano-30B-A3B-BF16, trained from scratch by the NVIDIA team as a unified reasoning and chat model. It is built as a hybrid Mamba2 Transformer MoE network:

- 30B parameters in total

- 52 layers in depth

- 23 Mamba2 and MoE layers

- 6 grouped query attention layers with 2 groups

- Each MoE layer has 128 routed experts and 1 shared expert

- 6 experts are active per token, which gives about 3.5B active parameters per token
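The sparse activation described in the list above can be illustrated with a small top-k routing sketch. The expert counts (128 routed, 6 active) come from the article; the gating rule (softmax over the selected logits) and the names `route_tokens` and `router_logits` are assumptions for illustration, not the model's confirmed router.

```python
import numpy as np

NUM_ROUTED = 128  # routed experts per MoE layer (from the article)
TOP_K = 6         # routed experts active per token (from the article)

def route_tokens(router_logits, top_k=TOP_K):
    """Pick top_k experts per token and return (expert_ids, weights).

    Assumption: weights are a softmax over the selected logits only.
    The shared expert is not routed; it would process every token.
    """
    # Indices of the top_k largest logits per token (order inside the set is arbitrary).
    ids = np.argpartition(router_logits, -top_k, axis=-1)[:, -top_k:]
    picked = np.take_along_axis(router_logits, ids, axis=-1)
    # Numerically stable softmax over the selected experts.
    picked = picked - picked.max(axis=-1, keepdims=True)
    weights = np.exp(picked)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return ids, weights

rng = np.random.default_rng(0)
logits = rng.normal(size=(4, NUM_ROUTED))  # 4 tokens, hypothetical router outputs
expert_ids, expert_weights = route_tokens(logits)
```

Each token touches only 6 of the 128 routed experts plus the shared expert, which is why the active parameter count stays far below the 30B total.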

The model is pre-trained on 25T tokens using a Warmup Stable Decay learning rate schedule with a batch size of 3072, a peak learning rate of 1e-3 and a minimum learning rate of 1e-5.
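A Warmup Stable Decay schedule has three phases: ramp up to the peak rate, hold it, then decay to the minimum. The sketch below uses the peak and minimum rates quoted above; the linear warmup, the linear decay shape and the step counts are assumptions, since the article does not state them.

```python
def wsd_lr(step, total_steps, warmup_steps, decay_steps,
           peak_lr=1e-3, min_lr=1e-5):
    """Learning rate at `step` under a Warmup Stable Decay schedule.

    Assumptions: linear warmup and linear decay; the phase lengths are
    hypothetical parameters, not values from the article.
    """
    if step < warmup_steps:
        # Warmup: ramp linearly up to the peak rate.
        return peak_lr * (step + 1) / warmup_steps
    decay_start = total_steps - decay_steps
    if step < decay_start:
        # Stable: hold the peak rate.
        return peak_lr
    # Decay: interpolate linearly from peak_lr down to min_lr.
    frac = min(1.0, (step - decay_start) / decay_steps)
    return peak_lr + (min_lr - peak_lr) * frac

# Hypothetical toy run: 1000 steps, 100 warmup, 200 decay.
schedule = [wsd_lr(s, 1000, 100, 200) for s in range(1001)]
```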

Post training follows a 3 stage pipeline:

- Supervised fine tuning on synthetic and curated data for code, math, science, tool calling, instruction following and structured outputs.

- Reinforcement learning with synchronous GRPO across multi step tool use, multi turn chat and structured environments, and RLHF with a generative reward model.

- Post training quantization to NVFP4 with FP8 KV cache and a selective high precision layout, followed by QAD.

The NVFP4 checkpoint keeps the attention layers and the Mamba layers that feed into them in BF16, quantizes the remaining layers to NVFP4 and uses FP8 for the KV cache.
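The selective precision layout can be pictured as a per-layer plan. The sketch below is only an illustration: the layer order in `toy_layers` is hypothetical, the function name `assign_precisions` is invented here, and reading "Mamba layers that feed into them" as "the nearest Mamba layer before each attention layer" is an assumption.

```python
def assign_precisions(layer_types):
    """Return a storage format per layer: "bf16" or "nvfp4".

    layer_types: list of strings such as "mamba", "moe", "attention".
    Assumption: the Mamba layer feeding an attention layer is the nearest
    Mamba layer that precedes it in the stack.
    """
    plan = ["nvfp4"] * len(layer_types)
    for i, kind in enumerate(layer_types):
        if kind != "attention":
            continue
        plan[i] = "bf16"  # attention layers stay in high precision
        for j in range(i - 1, -1, -1):
            if layer_types[j] == "mamba":
                plan[j] = "bf16"  # keep the feeding Mamba layer in BF16 too
                break
    return plan

# Hypothetical layer order, not the real 52-layer pattern.
toy_layers = ["mamba", "moe", "mamba", "moe", "attention", "moe"]
toy_plan = assign_precisions(toy_layers)
```

The FP8 KV cache is a separate runtime setting and is not part of this per-layer weight plan.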

NVFP4 format and why it matters

NVFP4 is a 4 bit floating point format designed for both training and inference on recent NVIDIA GPUs. The main properties of NVFP4:

- Compared with FP8, NVFP4 delivers 2 to 3 times higher arithmetic throughput.

- It reduces memory usage by about 1.8 times for weights and activations.

- It extends MXFP4 by reducing the block size from 32 to 16 and introduces two level scaling.

The two level scaling uses E4M3-FP8 scales per block and an FP32 scale per tensor. The smaller block size allows the quantizer to adapt to local statistics, and the dual scaling increases dynamic range while keeping quantization error low.
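To make the two level scaling concrete, here is a minimal NumPy sketch of NVFP4 style "fake quantization" (quantize, then dequantize). It is a simulation under stated assumptions, not NVIDIA's kernel: the function names are invented for this example, the E4M3 rounding helper ignores subnormals and the lower exponent limit, and the way the tensor scale is chosen is one reasonable convention. The helper `bits_per_element` also shows one accounting that is consistent with the roughly 1.8 times memory saving over FP8.

```python
import numpy as np

# Magnitudes representable by a 4 bit E2M1 element (sign is stored separately).
E2M1_GRID = np.array([0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0], dtype=np.float32)
E2M1_MAX = 6.0
E4M3_MAX = 448.0
BLOCK = 16

def round_to_e4m3(x):
    """Approximate rounding of non-negative values to FP8 E4M3.

    Simplification: subnormals and the lower exponent limit are ignored.
    """
    x = np.clip(x, 0.0, E4M3_MAX)
    m, e = np.frexp(x)               # x = m * 2**e with m in [0.5, 1)
    m = np.round(m * 16.0) / 16.0    # keep 4 significant bits (1 implicit + 3 stored)
    return np.ldexp(m, e).astype(np.float32)

def fake_quant_nvfp4(x):
    """Quantize then dequantize an array whose size is a multiple of 16."""
    x = np.asarray(x, dtype=np.float32)
    blocks = x.reshape(-1, BLOCK)
    # Level 1: one FP32 scale per tensor, chosen so block scales fit in E4M3.
    amax = np.abs(x).max()
    tensor_scale = amax / (E2M1_MAX * E4M3_MAX) if amax > 0 else np.float32(1.0)
    # Level 2: one E4M3 scale per 16 element block.
    block_amax = np.abs(blocks).max(axis=1, keepdims=True)
    block_scale = round_to_e4m3(block_amax / (E2M1_MAX * tensor_scale))
    scale = block_scale * tensor_scale
    safe = np.where(scale > 0, scale, 1.0)   # avoid dividing by zero on all-zero blocks
    scaled = np.abs(blocks) / safe
    # Snap each magnitude to the nearest E2M1 grid point.
    idx = np.abs(scaled[..., None] - E2M1_GRID).argmin(axis=-1)
    q = np.sign(blocks) * E2M1_GRID[idx]
    return (q * scale).reshape(x.shape)

def bits_per_element(elem_bits=4, scale_bits=8, block=BLOCK):
    """Average storage per value: 4 bit element plus an 8 bit scale shared by 16 values."""
    return elem_bits + scale_bits / block

x = np.linspace(-3.0, 3.0, 32)
x_hat = fake_quant_nvfp4(x)
fp8_vs_nvfp4 = 8 / bits_per_element()   # 8 / 4.5, the FP32 tensor scale is negligible
```

With a block of 16, the shared scale adds half a bit per value, so each value costs 4.5 bits instead of 8.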

For very large LLMs, simple post training quantization (PTQ) to NVFP4 already gives decent accuracy across benchmarks. For smaller models, especially those that went through heavy post training pipelines, the research team notes that PTQ causes non-negligible accuracy drops, which motivates a training based recovery method.

From QAT to QAD

Standard Quantization Aware Training (QAT) inserts a pseudo quantization into the forward pass and reuses the original task loss, such as next token cross entropy. This works well for convolutional networks, but the research team lists 2 main issues for modern LLMs:

- Complex multi stage post training pipelines with SFT, RL and model merging are hard to reproduce.

- Original training data for open models is often unavailable in public form.

Quantization Aware Distillation (QAD) changes the objective instead of the full pipeline. A frozen BF16 model acts as the teacher and the NVFP4 model is the student. Training minimizes KL divergence between their output token distributions, not the original supervised or RL objective.
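The difference between the two objectives can be sketched in a few lines of NumPy. This is only an illustration of the loss functions, with no quantization or gradient step: the function names are hypothetical, and the KL direction (teacher to student) is the usual distillation convention, assumed here because the article does not state it.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log softmax over the vocabulary axis."""
    z = logits - logits.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def qat_loss(student_logits, labels):
    """QAT style: next token cross entropy against ground truth labels."""
    logp = log_softmax(student_logits)
    return float(-logp[np.arange(len(labels)), labels].mean())

def qad_loss(student_logits, teacher_logits):
    """QAD style: mean KL(teacher || student) over token positions.

    Needs only the teacher's outputs on the same inputs, no labels.
    """
    t_logp = log_softmax(teacher_logits)
    s_logp = log_softmax(student_logits)
    kl = (np.exp(t_logp) * (t_logp - s_logp)).sum(axis=-1)
    return float(kl.mean())

rng = np.random.default_rng(0)
teacher = rng.normal(size=(5, 10))                   # 5 positions, toy vocabulary of 10
student = teacher + 0.1 * rng.normal(size=(5, 10))   # stand-in for a quantized model
distill = qad_loss(student, teacher)
```

The distillation loss is zero exactly when the student reproduces the teacher's distribution at every position, which is the sense in which QAD targets the final teacher behavior rather than the original training signal.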

The research team highlights 3 properties of QAD:

- It aligns the quantized model with the high precision teacher more accurately than QAT.

- It stays stable even when the teacher has already gone through several stages, such as supervised fine tuning, reinforcement learning and model merging, because QAD only tries to match the final teacher behavior.

- It works with partial, synthetic or filtered data, because it only needs input text to query the teacher and student, not the original labels or reward models.

Benchmarks on Nemotron-3-Nano-30B

Nemotron-3-Nano-30B-A3B is one of the RL heavy models in the QAD research. The table below shows accuracy on AA-LCR, AIME25, GPQA-D, LiveCodeBench-v5 and SciCode for BF16, NVFP4-PTQ, NVFP4-QAT and NVFP4-QAD.

Key Takeaways

- Nemotron-3-Nano-30B-A3B-NVFP4 is a 30B parameter hybrid Mamba2 Transformer MoE model that runs in 4 bit NVFP4 with FP8 KV cache and a small set of BF16 layers preserved for stability, while keeping about 3.5B active parameters per token and supporting context windows up to 1M tokens.

- NVFP4 is a 4 bit floating point format with block size 16 and two level scaling, using E4M3-FP8 per block scales and an FP32 per tensor scale, which gives about 2 to 3 times higher arithmetic throughput and about 1.8 times lower memory cost than FP8 for weights and activations.

- Quantization Aware Distillation (QAD) replaces the original task loss with KL divergence to a frozen BF16 teacher, so the NVFP4 student directly matches the teacher’s output distribution without replaying the full SFT, RL and model merge pipeline or needing the original reward models.

- Using the new Quantization Aware Distillation method, the NVFP4 version achieves up to 99.4% of BF16 accuracy.

- On AA-LCR, AIME25, GPQA-D, LiveCodeBench and SciCode, NVFP4-PTQ shows noticeable accuracy loss and NVFP4-QAT degrades further, while NVFP4-QAD recovers performance to near BF16 levels, reducing the gap to only a few points across these reasoning and coding benchmarks.
