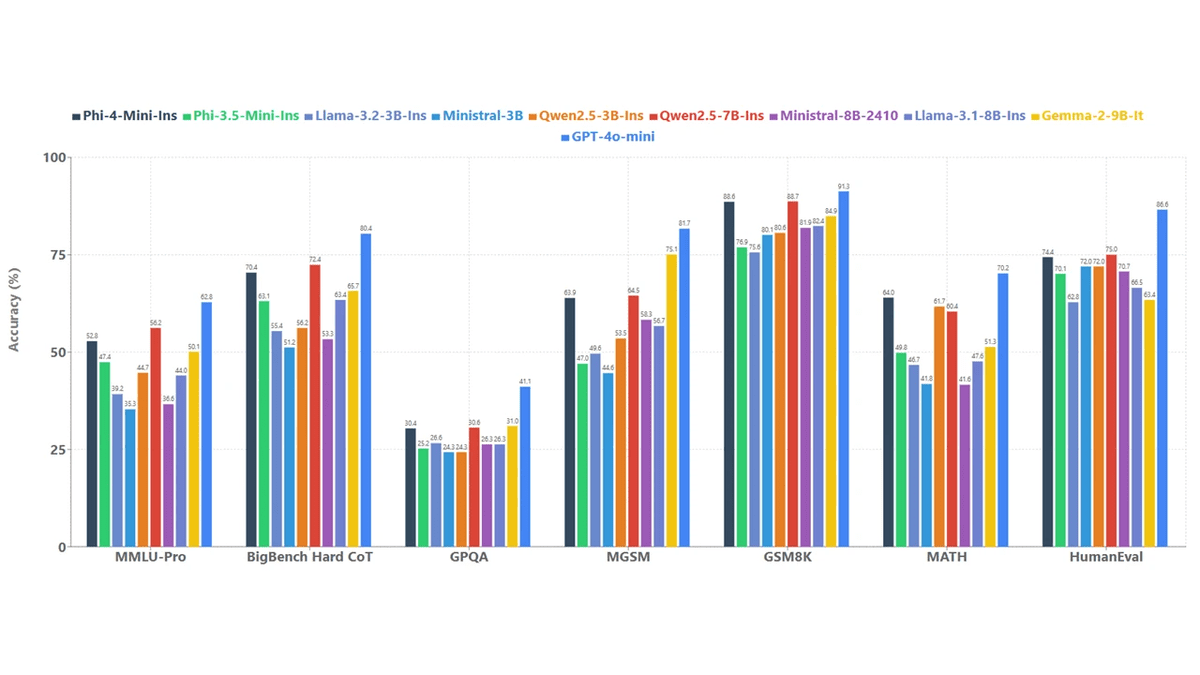

Image based on Artificial Analysis

# Introduction

We often talk about small AI models. But what about tiny models that can actually run on a Raspberry Pi with limited CPU power and very little RAM?

Thanks to modern architectures and aggressive quantization, models around 1 to 2 billion parameters can now run on extremely small devices. When quantized, these models can run almost anywhere, even on your smart fridge. All you need is llama.cpp, a quantized model from the Hugging Face Hub, and a simple command to get started.
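To see why quantization matters so much on a board with a few gigabytes of RAM, here is a rough back-of-the-envelope sketch. It assumes weight memory is simply parameter count times bits per weight; the helper name `weight_memory_gib` and the parameter counts and bit widths in the loop are illustrative choices, not measurements of any particular model file.

```python
def weight_memory_gib(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the weights alone, in GiB."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


# Illustrative sizes only: compare 16-bit, 8-bit, and 4-bit storage.
for params in (1.2, 4.0):
    for bits in (16, 8, 4):
        size = weight_memory_gib(params, bits)
        print(f"{params}B parameters at {bits}-bit: {size:.2f} GiB")
```

Treat the result as a lower bound. Real quantized files store scaling factors alongside the weights, and inference also needs room for the context cache and runtime buffers, so actual usage will be somewhat higher.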

What makes these tiny models exciting is that they are not weak or outdated. Many of them outperform much older large models in real-world text generation. Some also support tool calling, vision understanding, and structured outputs. These are not small and dumb models. They are small, fast, and surprisingly intelligent, capable of running on devices that fit in the palm of your hand.

In this article, we will explore 7 tiny AI models that run well on a Raspberry Pi and other low-power machines using llama.cpp. If you want to experiment with local AI without GPUs, cloud costs, or heavy infrastructure, this list is a great place to start.

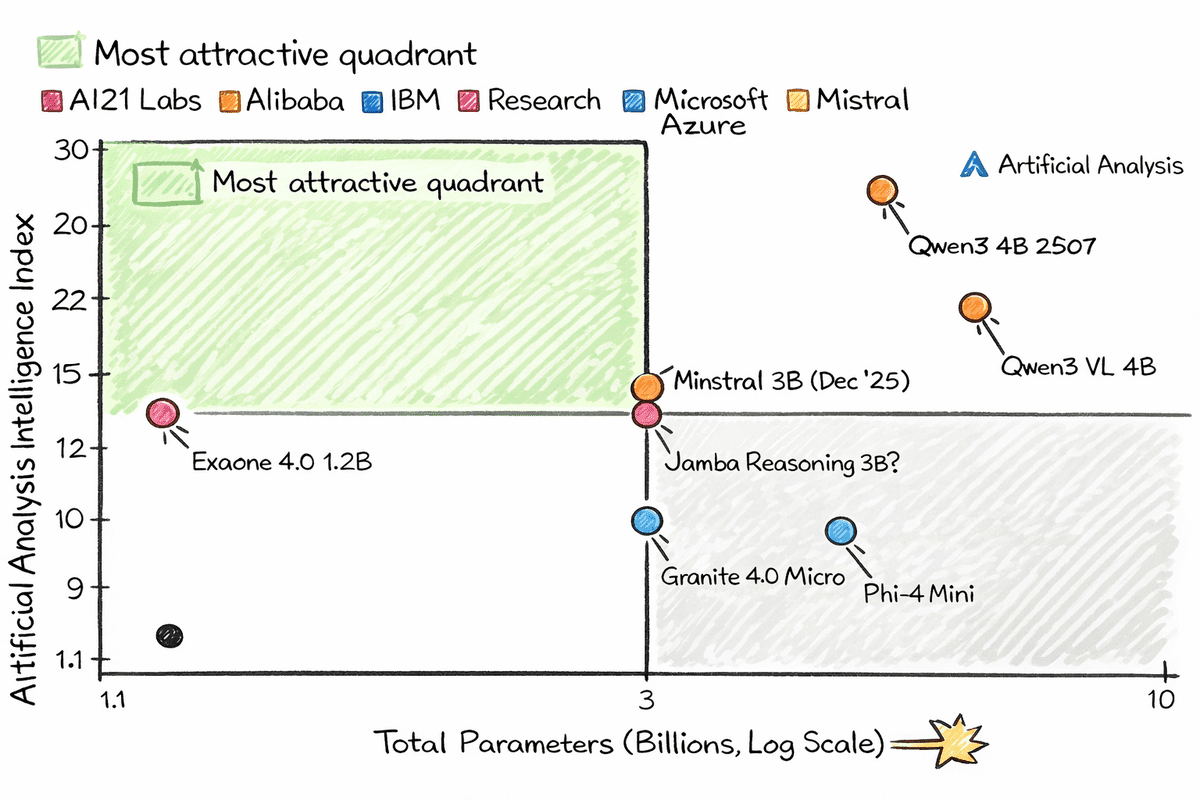

# 1. Qwen3 4B 2507

Qwen3-4B-Instruct-2507 is a compact yet highly capable non-thinking language model that delivers a major leap in performance for its size. With just 4 billion parameters, it shows strong gains across instruction following, logical reasoning, mathematics, science, coding, and tool usage, while also expanding long-tail knowledge coverage across many languages.

The model demonstrates notably improved alignment with user preferences in subjective and open-ended tasks, resulting in clearer, more helpful, and higher-quality text generation. Its support for an impressive 256K native context length allows it to handle extremely long documents and conversations efficiently, making it a practical choice for real-world applications that demand both depth and speed without the overhead of larger models.

# 2. Qwen3 VL 4B

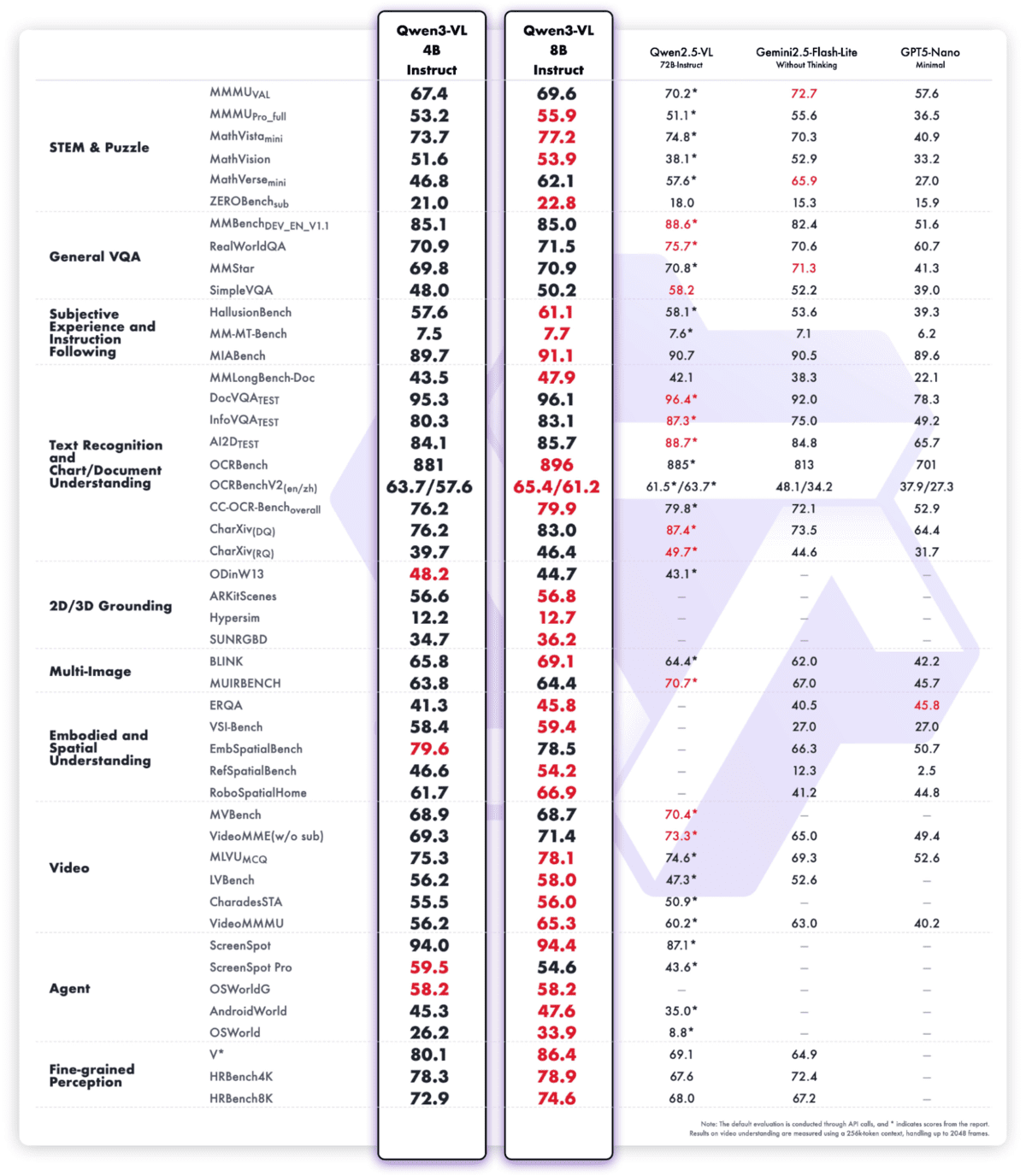

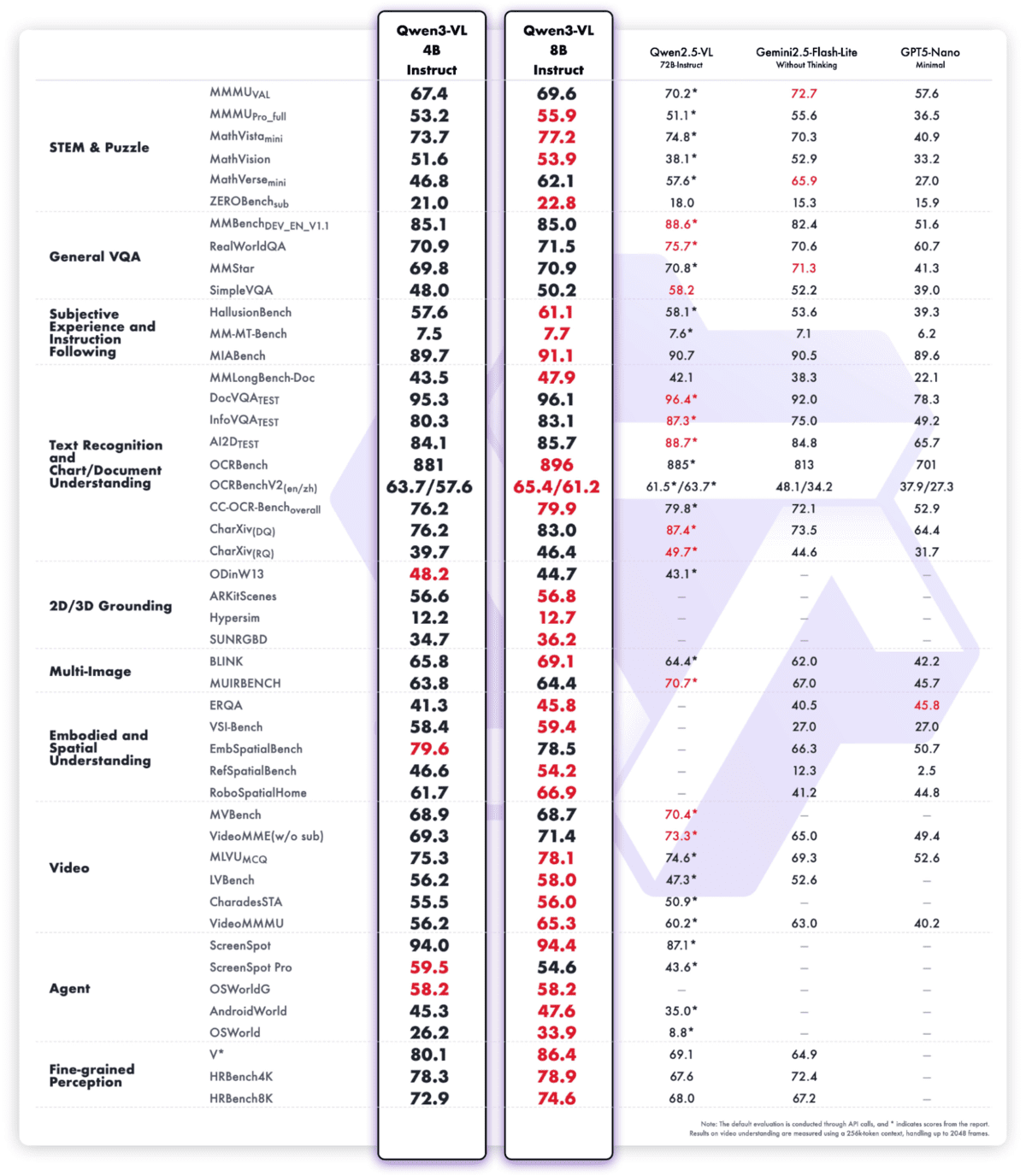

Qwen3-VL-4B-Instruct is the most advanced vision-language model in the Qwen family to date, packing state-of-the-art multimodal intelligence into a highly efficient 4B-parameter form factor. It delivers superior text understanding and generation, combined with deeper visual perception, reasoning, and spatial awareness, enabling strong performance across images, video, and long documents.

The model supports native 256K context (expandable to 1M), allowing it to process entire books or hours-long videos with accurate recall and fine-grained temporal indexing. Architectural upgrades such as Interleaved-MRoPE, DeepStack visual fusion, and precise text–timestamp alignment significantly improve long-horizon video reasoning, fine-detail recognition, and image–text grounding.

Beyond perception, Qwen3-VL-4B-Instruct functions as a visual agent, capable of operating PC and mobile GUIs, invoking tools, generating visual code (HTML/CSS/JS, Draw.io), and handling complex multimodal workflows with reasoning grounded in both text and vision.

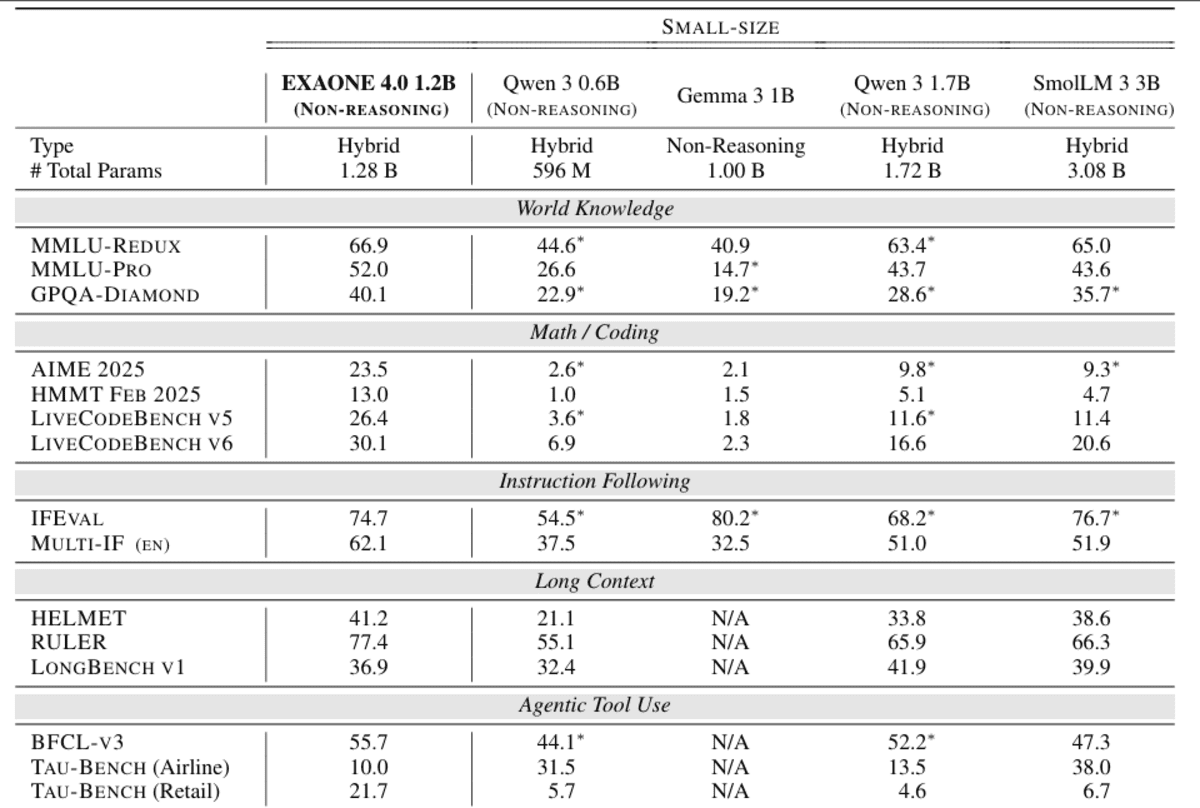

# 3. Exaone 4.0 1.2B

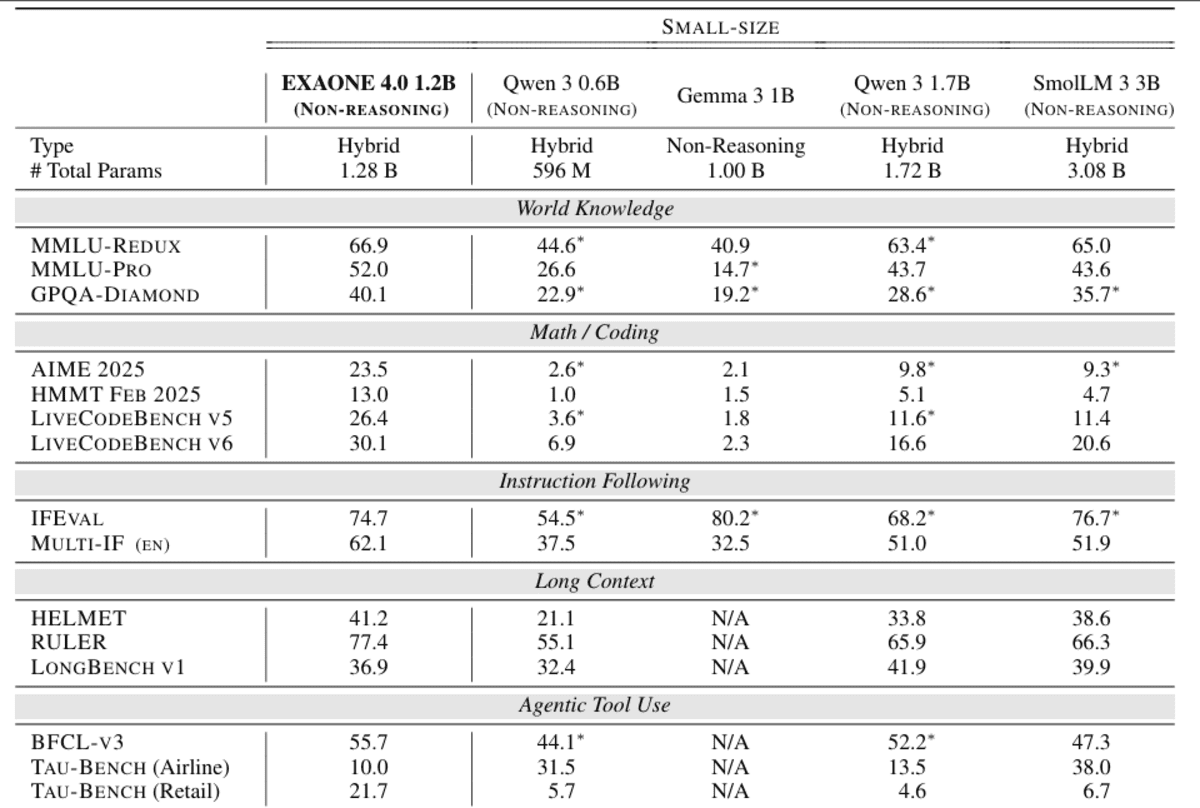

EXAONE 4.0 1.2B is a compact, on-device-friendly language model designed to bring agentic AI and hybrid reasoning into extremely resource-efficient deployments. It integrates both a non-reasoning mode for fast, practical responses and an optional reasoning mode for complex problem solving, allowing developers to trade off speed and depth dynamically within a single model.

Despite its small size, the 1.2B variant supports agentic tool use, enabling function calling and autonomous task execution, and offers multilingual capabilities in English, Korean, and Spanish, extending its usefulness beyond monolingual edge applications.

Architecturally, it inherits EXAONE 4.0’s advances such as hybrid attention and improved normalization schemes, while supporting a 64K token context length, making it unusually strong for long-context understanding at this scale.

Optimized for efficiency, it is explicitly positioned for on-device and low-cost inference scenarios, where memory footprint and latency matter as much as model quality.

# 4. Ministral 3B

Ministral-3-3B-Instruct-2512 is the smallest member of the Ministral 3 family and a highly efficient tiny multimodal language model purpose-built for edge and low-resource deployment. It is an FP8 instruct-fine-tuned model, optimized specifically for chat and instruction-following workloads, while maintaining strong adherence to system prompts and structured outputs.

Architecturally, it combines a 3.4B-parameter language model with a 0.4B vision encoder, enabling native image understanding alongside text reasoning.

Despite its compact size, the model supports a large 256K context window, strong multilingual coverage across dozens of languages, and native agentic capabilities such as function calling and JSON output, making it well suited to real-time, embedded, and distributed AI systems.
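Function calling is easier to picture with a concrete request. The sketch below builds an OpenAI-style chat payload that offers one tool, which is the request shape accepted by llama.cpp's server on its OpenAI-compatible chat endpoint, and then parses a tool call from a reply. The tool name `get_weather`, its schema, the helper names, and the sample reply are all hypothetical and written by hand for illustration; nothing here is real model output.

```python
import json


def build_tool_request(user_message: str) -> dict:
    """Build an OpenAI-style chat request that offers one tool to the model."""
    get_weather_tool = {
        "type": "function",
        "function": {
            "name": "get_weather",  # hypothetical tool, for illustration only
            "description": "Look up the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    return {
        "messages": [{"role": "user", "content": user_message}],
        "tools": [get_weather_tool],
    }


def parse_tool_call(message: dict):
    """Return (name, arguments) for the first tool call, or None for a plain text reply."""
    calls = message.get("tool_calls") or []
    if not calls:
        return None
    function = calls[0]["function"]
    # Arguments arrive as a JSON-encoded string, so decode them into a dict.
    return function["name"], json.loads(function["arguments"])


request = build_tool_request("What is the weather in Seoul?")

# Hand-written example of an assistant message that requests a tool call.
sample_reply = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "type": "function",
            "function": {"name": "get_weather", "arguments": '{"city": "Seoul"}'},
        }
    ],
}

print(json.dumps(request, indent=2))
print(parse_tool_call(sample_reply))
```

In a real loop, your application would run the named function with the decoded arguments, append the result as a tool message, and send the conversation back to the model for a final answer.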

Designed to fit within 8GB of VRAM in FP8 (and even less when quantized), Ministral 3 3B Instruct delivers strong performance per watt and per dollar for production use cases that demand efficiency without sacrificing capability.

# 5. Jamba Reasoning 3B

Jamba-Reasoning-3B is a compact yet exceptionally capable 3-billion-parameter reasoning model designed to deliver strong intelligence, long-context processing, and high efficiency in a small footprint.

Its defining innovation is a hybrid Transformer–Mamba architecture, where a small number of attention layers capture complex dependencies while the majority of layers use Mamba state-space models for highly efficient sequence processing.

This design dramatically reduces memory overhead and improves throughput, enabling the model to run smoothly on laptops, GPUs, and even mobile-class devices without sacrificing quality.

Despite its size, Jamba Reasoning 3B supports 256K token contexts, scaling to very long documents without relying on massive attention caches, which makes long-context inference practical and cost-effective.
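A quick calculation shows why fewer attention layers help at long context. An attention layer caches one key and one value vector per token, so its cache grows linearly with context length, whereas a state-space layer keeps a fixed-size state. The sketch below uses the standard key-value cache size formula; the layer counts, head counts, and head dimension are hypothetical round numbers chosen for illustration and are not Jamba's actual configuration.

```python
def kv_cache_gib(
    attention_layers: int,
    kv_heads: int,
    head_dim: int,
    context_tokens: int,
    bytes_per_value: int = 2,  # 2 bytes assumes a 16-bit cache
) -> float:
    """Approximate key-value cache size in GiB for the attention layers only."""
    # The leading 2 accounts for one key tensor plus one value tensor per layer.
    total_bytes = (
        2 * attention_layers * kv_heads * head_dim * context_tokens * bytes_per_value
    )
    return total_bytes / 2**30


CONTEXT = 256 * 1024  # a 256K-token context

# Hypothetical configurations: the same shapes, differing only in attention layer count.
all_attention = kv_cache_gib(attention_layers=28, kv_heads=8, head_dim=128, context_tokens=CONTEXT)
mostly_mamba = kv_cache_gib(attention_layers=4, kv_heads=8, head_dim=128, context_tokens=CONTEXT)

print(f"28 attention layers: {all_attention:.1f} GiB")
print(f" 4 attention layers: {mostly_mamba:.1f} GiB")
```

With these assumed shapes, cutting the attention layers from 28 to 4 shrinks the cache by the same factor of seven, which is the kind of saving that makes very long contexts feasible on small devices.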

On intelligence benchmarks, it outperforms comparable small models such as Gemma 3 4B and Llama 3.2 3B on a combined score spanning multiple evaluations, demonstrating unusually strong reasoning ability for its class.

# 6. Granite 4.0 Micro

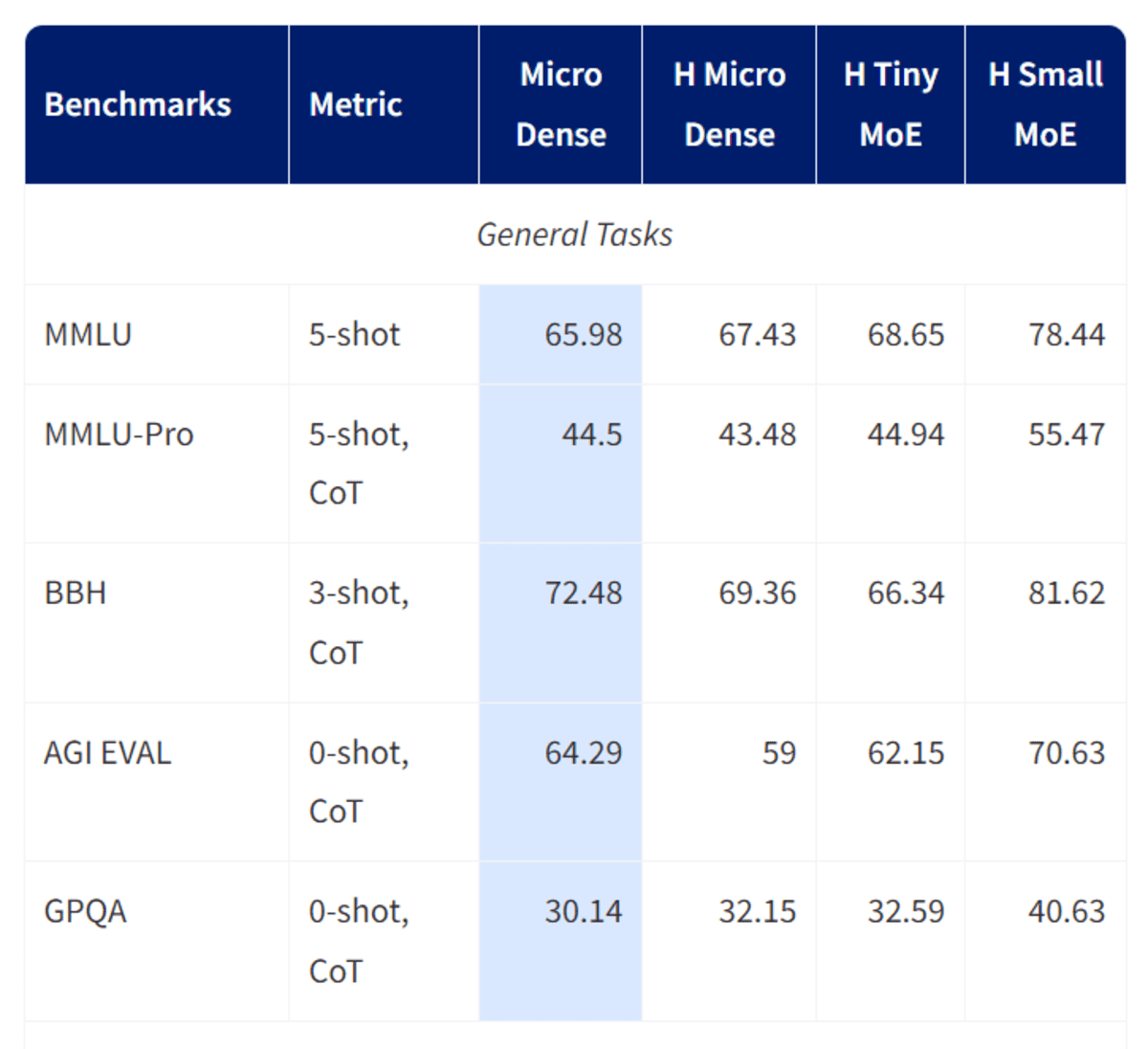

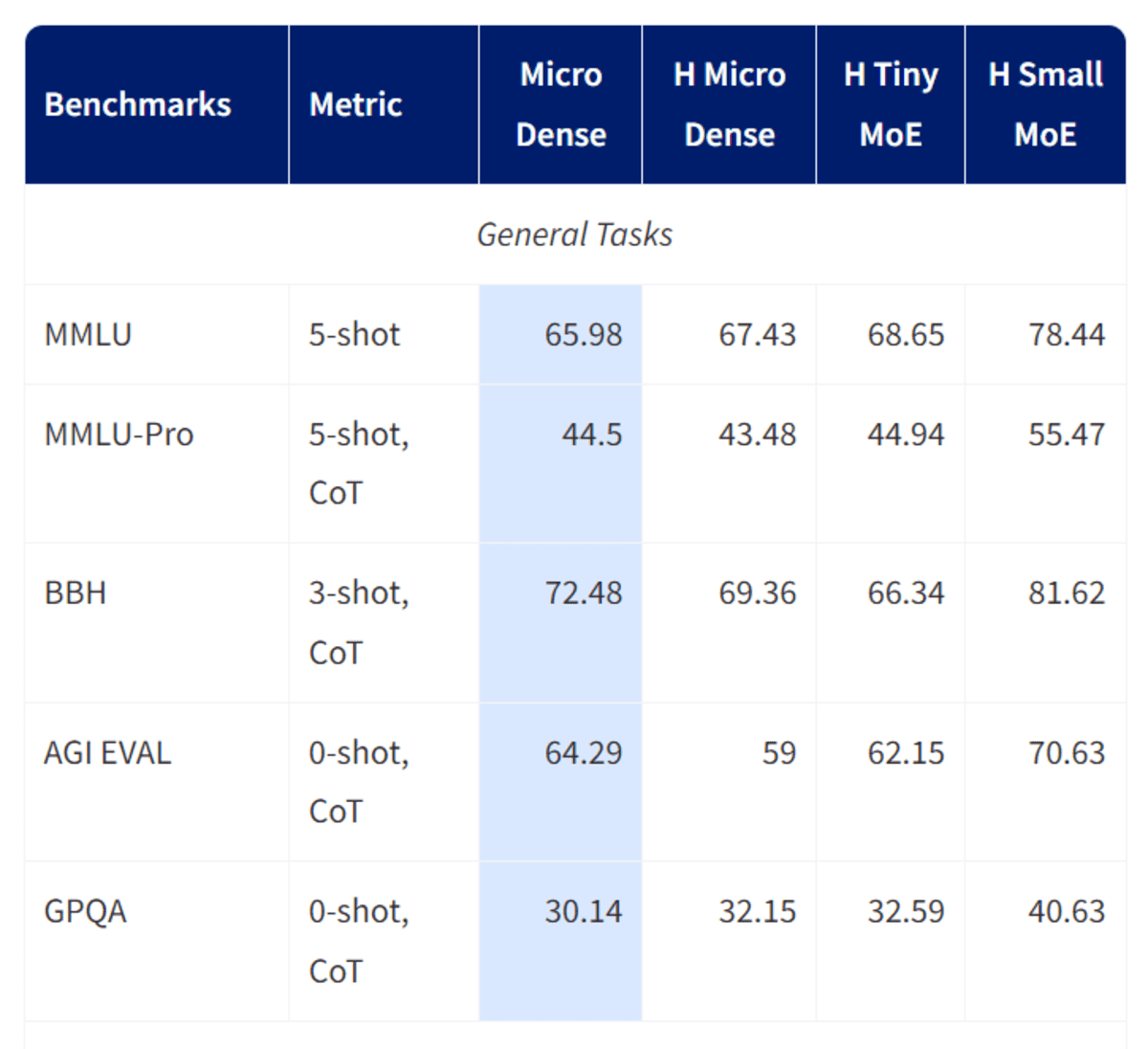

Granite-4.0-micro is a 3B-parameter long-context instruct model developed by IBM’s Granite team and designed specifically for enterprise-grade assistants and agentic workflows.

Fine-tuned from Granite-4.0-Micro-Base using a mix of permissively licensed open datasets and high-quality synthetic data, it emphasizes reliable instruction following, professional tone, and safe responses, reinforced by a default system prompt added in its October 2025 update.

The model supports a very large 128K context window, strong tool-calling and function-execution capabilities, and broad multilingual support spanning major European, Middle Eastern, and East Asian languages.

Built on a dense decoder-only transformer architecture with modern components such as GQA, RoPE, SwiGLU MLPs, and RMSNorm, Granite-4.0-Micro balances robustness and efficiency, making it well suited as a foundation model for enterprise applications, RAG pipelines, coding tasks, and LLM agents that must integrate cleanly with external systems under an Apache 2.0 open-source license.

# 7. Phi-4 Mini

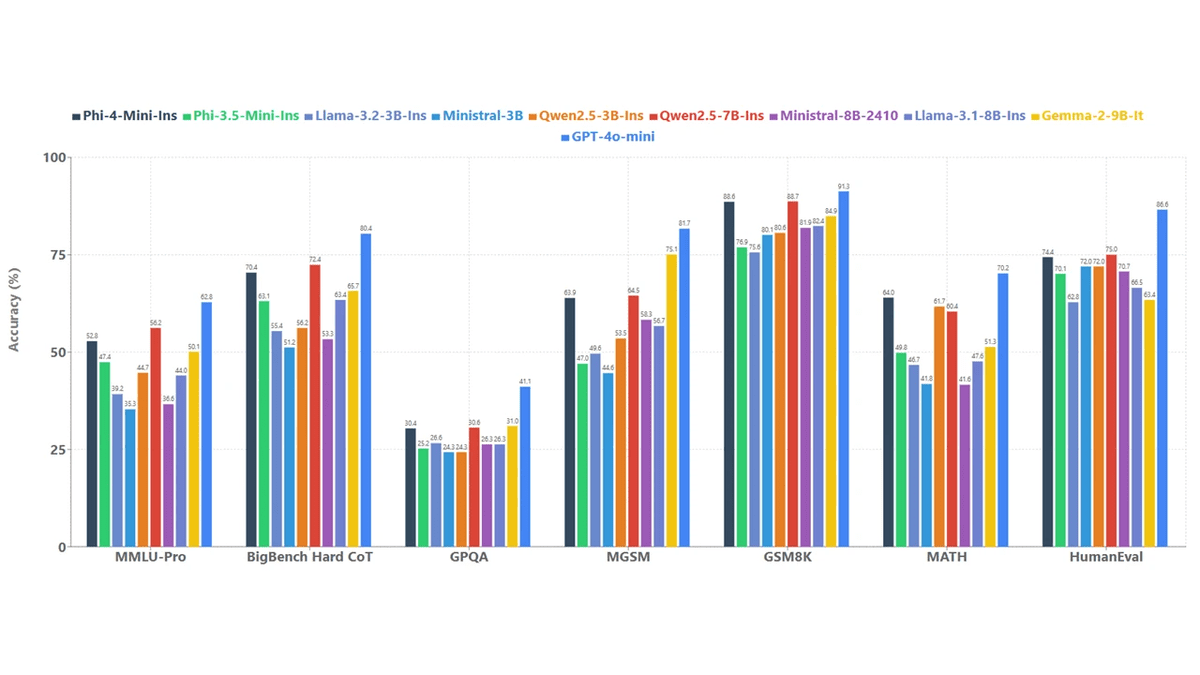

Phi-4-mini-instruct is a lightweight, open 3.8B-parameter language model from Microsoft designed to deliver strong reasoning and instruction-following performance under tight memory and compute constraints.

Built on a dense decoder-only Transformer architecture, it is trained primarily on high-quality synthetic “textbook-like” data and carefully filtered public sources, with a deliberate emphasis on reasoning-dense content over raw factual memorization.

The model supports a 128K token context window, enabling long-document understanding and extended conversations uncommon at this scale.

Post-training combines supervised fine-tuning and direct preference optimization, resulting in precise instruction adherence, robust safety behavior, and effective function calling.

With a large 200K-token vocabulary and broad multilingual coverage, Phi-4-mini-instruct is positioned as a practical building block for research and production systems that must balance latency, cost, and reasoning quality, particularly in memory- or compute-constrained environments.

# Final Thoughts

Tiny models have reached a point where size is no longer a limitation to capability. The Qwen 3 series stands out in this list, delivering performance that rivals much larger language models and even challenges some proprietary systems. If you are building applications for a Raspberry Pi or other low-power devices, Qwen 3 is an excellent starting point and well worth integrating into your setup.

Beyond Qwen, the EXAONE 4.0 1.2B models are particularly strong at reasoning and non-trivial problem solving, while remaining significantly smaller than most alternatives. The Ministral 3B also deserves attention as the latest release in its series, offering an updated knowledge cutoff and solid general-purpose performance.

Overall, many of these models are impressive, but if your priorities are speed, accuracy, and tool calling, the Qwen 3 LLM and VLM variants are hard to beat. They clearly show how far tiny, on-device AI has come and why local inference on small hardware is no longer a compromise.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.