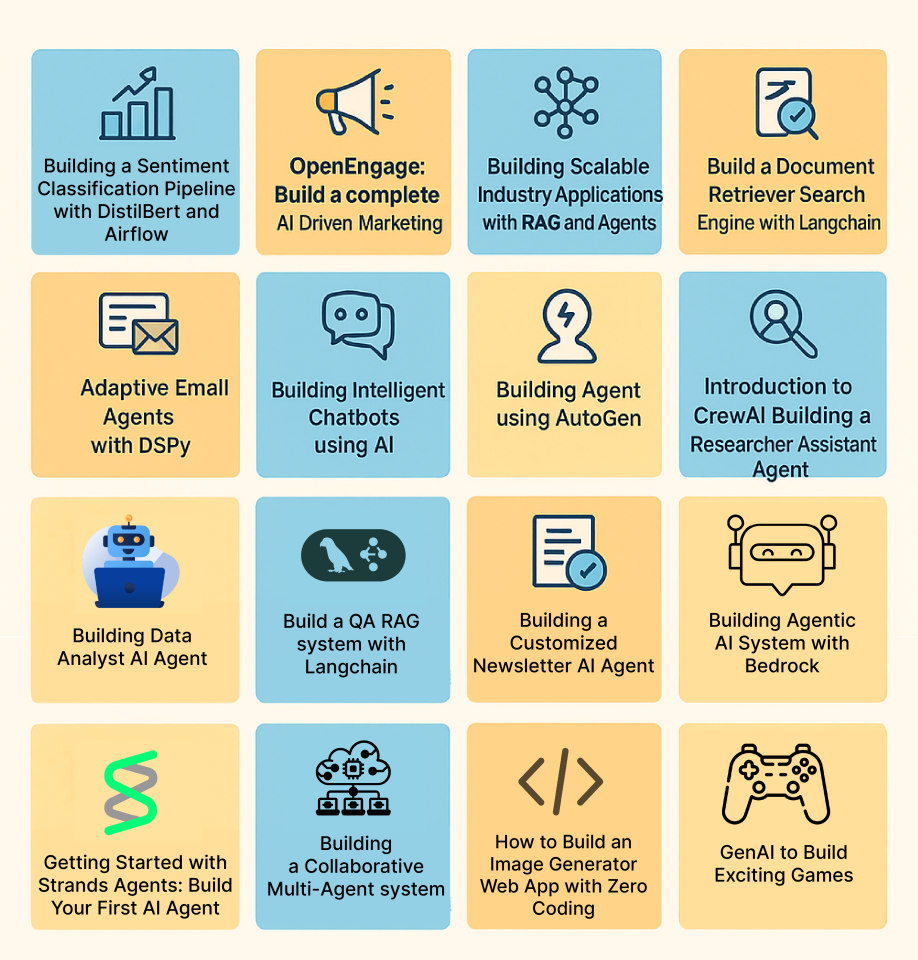

AI and data science are two of the fastest-growing fields in the world right now. If you know that and are aiming to level up your portfolio, stand out in interviews, or simply understand them in depth, here is your ultimate wrap-up for 2025. In this article, we bring you 25+ fully solved, end-to-end AI and data science projects. These projects span machine learning, NLP, computer vision, RAG systems, automation, multi-agent collaboration, and more (read their beginner’s guides in the link). While each AI and data science project listed here covers different topics, they all follow one structured format. With this, you can quickly understand what you’ll learn, what tools you’ll master, and how exactly to solve these AI and data science projects with a step-by-step approach.

Taken together, these projects will help beginners and professionals alike in the field of AI and data science hone their skills, build production-grade applications, and stay ahead of the industry curve.

Ideally, I’d suggest you bookmark this article and learn any or every project as per your liking, one at a time. For this, I’ve also shared the link to each of the projects. So without any delay, let’s dive right into the best AI and Data Science projects of 2025.

Classical ML / Core Data Science

1. Loan Prediction Practice Problem (Using Python)

This project takes a real-world loan-approval scenario and guides you to build a binary classification model in Python. You’ll predict whether a loan application gets approved or not based on applicant data. It gives you hands-on experience in an end-to-end data science workflow: from data exploration to model building and evaluation.

Key Skills to Learn

- Understanding binary classification and its use in real-life problems like loan approval.

- Exploratory Data Analysis (EDA): univariate and bivariate analysis to understand data distributions and relationships.

- Data preprocessing: handling missing values, outlier treatment, encoding categorical variables, and preparing data for modelling.

- Building classification models in Python (e.g., logistic regression, decision trees, random forest, etc.).

- Model evaluation & validation: using train-test split, metrics like accuracy (and optionally precision/recall), and comparing multiple models to choose the best performer.

Project Workflow

- Define the problem statement: decide to predict whether a loan application should be approved or denied based on applicant attributes (income, credit history, loan amount, etc.).

- Load the dataset in Python (e.g., with pandas) and perform an initial inspection: checking data types, missing values, and summary statistics.

- Perform Exploratory Data Analysis (EDA): analyse distributions and the relationships between features and target to get insights.

- Preprocess the data: handle missing values/outliers, encode categorical variables, and prepare data for modelling.

- Build multiple classification models: start with simple ones (like logistic regression), then try more advanced models (decision tree, random forest, etc.) to see which works best.

- Evaluate and compare models: split data into train and test sets, compute performance metrics, validate stability, and choose the model with the best performance.

- Interpret results and draw insights: understand which features influence loan approval predictions most, and reflect on the implications for real-world loan-approval systems.
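
To make the evaluation step concrete, here is a minimal, dependency-free sketch of a train-test split and the accuracy, precision, and recall metrics mentioned above. The helper names and the toy labels are illustrative assumptions, not part of the original project; in practice you would likely use scikit-learn's equivalents.

```python
import random


def train_test_split(rows, test_ratio=0.25, seed=42):
    """Shuffle a copy of `rows` and split it into (train, test) lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_ratio))
    return shuffled[:cut], shuffled[cut:]


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical labels: 1 = loan approved, 0 = loan denied.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Reporting precision and recall next to accuracy matters here because approvals and denials are rarely balanced, so accuracy alone can look better than the model really is.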

2. Twitter Sentiment Analysis (Using Python)

This project teaches you how to perform sentiment analysis on Twitter data using Python. You’ll learn to fetch tweets, clean and preprocess text, build machine-learning models, and classify sentiments (positive, negative, neutral). It’s one of the most popular NLP starter projects because it combines real-world noisy text data with practical ML workflows.

Key Skills to Learn

- Text preprocessing: cleaning tweets, removing noise, tokenization, stop-word removal

- Understanding sentiment analysis fundamentals using NLP

- Feature engineering using techniques like TF-IDF or Bag-of-Words

- Building ML models for text classification (Logistic Regression, Naive Bayes, SVM, etc.)

- Evaluating NLP models using accuracy, F1-score, and confusion matrix

- Working with Python libraries like pandas, scikit-learn, and NLTK

Project Workflow

- Collect tweets: either using sample datasets or by fetching live tweets via APIs.

- Preprocess the text data: clean URLs, hashtags, mentions, emojis; tokenize and normalize the text.

- Convert text to numerical features using TF-IDF, Bag-of-Words, or other vectorization techniques.

- Build sentiment classification models: start with baseline algorithms like Logistic Regression or Naive Bayes.

- Train and evaluate the model using accuracy and F1-score to measure performance.

- Interpret results: understand the most influential words, patterns in sentiment, and how your model responds to different tweet types.

- Apply the model to unseen tweets to generate insights from live or stored Twitter data.
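
The preprocessing step is where most of the tweet-specific work happens. Below is a small sketch of what it could look like using only Python's `re` module; the regular expressions, the tiny stop-word list, and the sample tweet are all assumptions made for illustration (a real project would likely use a fuller stop-word list, such as the one shipped with NLTK).

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
NON_ALPHA_RE = re.compile(r"[^a-z\s]")

# Tiny illustrative stop-word list, not a complete one.
STOP_WORDS = {"a", "an", "the", "is", "it", "to", "and", "of", "this"}


def clean_tweet(text):
    """Lower-case a tweet, strip noise, and return a list of tokens."""
    text = text.lower()
    text = URL_RE.sub(" ", text)        # remove links
    text = MENTION_RE.sub(" ", text)    # remove @mentions
    text = text.replace("#", " ")       # keep the hashtag word, drop the symbol
    text = NON_ALPHA_RE.sub(" ", text)  # drop digits, punctuation, emojis
    return [tok for tok in text.split() if tok not in STOP_WORDS]


tokens = clean_tweet("Loving the new phone!!! 😍 @brand #happy https://t.co/abc123")
print(tokens)
```

Dropping emojis is a simplification: they often carry sentiment, so you may prefer to map them to words instead of deleting them.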

3. Building Text Classification Models in NLP

This project helps you understand how to build end-to-end text classification systems using core NLP techniques. You’ll work with raw text data, clean and transform it, and train machine-learning models that can automatically classify text into predefined categories. The project focuses on the fundamentals of NLP and serves as a strong entry point for anyone learning how text-based ML pipelines work.

Key Skills to Learn

- Text preprocessing: cleaning, tokenization, normalization

- Converting text into numerical features (TF-IDF, Bag-of-Words, etc.)

- Building ML models for text classification (Logistic Regression, Naive Bayes, SVM, etc.)

- Understanding evaluation metrics for NLP classification tasks

- Structuring an end-to-end NLP pipeline from data loading to model deployment

Project Workflow

- Start by loading and exploring the text dataset and understanding the target labels.

- Clean and preprocess the text: remove noise, tokenize, normalize, and prepare it for modelling.

- Convert text into numeric representations using TF-IDF or Bag-of-Words.

- Train classification models on the processed data and tune basic parameters.

- Evaluate model performance and compare results to choose the best approach.
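
If you are wondering what TF-IDF actually computes, the sketch below implements the plain textbook form (term frequency times the log of inverse document frequency) in pure Python. The function name and toy documents are assumptions for illustration; libraries such as scikit-learn use a smoothed variant, so their numbers will differ.

```python
import math
from collections import Counter


def tfidf(docs):
    """docs: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


docs = [
    ["good", "movie"],
    ["bad", "movie"],
    ["good", "plot", "good", "cast"],
]
vectors = tfidf(docs)
print(vectors[1])
```

In the second document, "bad" gets a higher weight than "movie" because it appears in fewer documents, which is exactly the signal a classifier benefits from.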

4. Building Your First Computer Vision Model

This project guides you to build your very first computer vision model using deep learning. You’ll learn how digital images are processed, how convolutional neural networks (CNNs) work, and then train a vision model on real image data. It’s designed for beginners – a strong entry point into image-based ML and deep learning.

Key Skills to Learn

- Fundamentals of image processing and how images are represented digitally (pixels, channels, arrays)

- Understanding Convolutional Neural Networks (CNNs): convolution layer, pooling/striding, downsampling, etc.

- Building deep-learning-based vision models using Python frameworks (e.g. TensorFlow, OpenCV)

- Training and evaluating image classification models on real datasets

- End-to-end CV pipeline: data loading, preprocessing, model design, training, inference

Project Workflow

- Load and preprocess the image dataset: read images, convert to arrays, normalize, and resize as needed.

- Build a CNN model: define convolution, pooling, and fully-connected layers to learn from image data.

- Train the model on training images and validate on a hold-out set to monitor performance.

- Evaluate results: check model accuracy (or other appropriate metrics), analyze misclassifications, iterate if needed.

- Use the trained model for inference on new/unseen images to test real-world performance.
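
Before reaching for a framework, it can help to see what a convolution and a pooling layer do to an image. The following is a pure-Python sketch, assuming a single-channel image stored as a list of lists; the kernel and image values are made up for illustration, and a real model would learn its kernels during training.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; incomplete trailing windows are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# A 5x5 image: dark on the left (0), bright on the right (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # responds to dark-to-bright transitions

features = conv2d(image, vertical_edge)
pooled = max_pool2d(features)
print(features)
print(pooled)
```

The feature map lights up only where the image goes from dark to bright, and pooling shrinks that map while keeping the strongest response in each window.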

GenAI, LLMs, and RAG

5. Building a Deep Research AI Agent

This AI project walks you through building a full-blown research-and-report generation agent using a graph-based agent framework (like LangGraph). Such an agent can automatically fetch data from the web, analyze it, and compile a structured research report. The project gives you a hands-on understanding of agentic AI workflows – where the agent autonomously breaks down a research task, gathers sources, and assembles a readable report.

Key Skills to Learn

- Understanding agent-based AI design: planning agents, task decomposition, sub-agent orchestration

- Integrating web-search tools/APIs to fetch real-time data for analysis

- Designing pipelines combining search, data collection, content generation, and report assembly

- Orchestrating parallel execution: enabling sub-tasks to run concurrently for faster results

- Prompt engineering and template design for structured report generation

Project Workflow

- Define your research objective: pick a topic or question for the agent to explore (e.g. “Latest developments in AI agents in 2025”).

- Set up the agent framework using a graph-based agent tool; create core modules such as a planner node, section-builder sub-agents, and a final report compiler.

- Integrate web-search capabilities so the agent can dynamically fetch data from the internet when needed.

- Design a report template that defines sections like introduction, background, insights, and conclusion, so the agent knows the structure ahead of time.

- Run the agent workflow: planner decomposes tasks > sub-agents fetch data, write sections > final compiler collates sections into a full report.

- Review and refine the generated report, validate sources/data, and tweak prompts or workflow for better coherence and reliability.
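
The planner > sub-agents > compiler flow can be sketched in plain Python before wiring it into a real framework. Everything below is a stand-in: the three functions are hypothetical placeholders for LLM and web-search calls, and the code does not use LangGraph's API. It only shows the shape of the workflow, including running section writers in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def plan_sections(topic):
    # Stand-in for an LLM planner; here it returns the fixed report template.
    return ["Introduction", "Background", "Insights", "Conclusion"]


def write_section(topic, section):
    # Stand-in for a sub-agent that would search the web and draft text.
    return f"## {section}\nNotes on {topic} for the {section.lower()} section."


def compile_report(topic, drafts):
    # Final compiler: collate section drafts into one document.
    return f"# {topic}\n\n" + "\n\n".join(drafts)


def run_research_agent(topic):
    sections = plan_sections(topic)
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Executor.map returns results in input order, so sections stay ordered.
        drafts = list(pool.map(lambda s: write_section(topic, s), sections))
    return compile_report(topic, drafts)


report = run_research_agent("AI agents in 2025")
print(report)
```

Threads suit this step because real section writers spend most of their time waiting on network calls to search APIs and LLMs.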

6. Build your first RAG system using LlamaIndex

This project helps you build a full Retrieval-Augmented Generation (RAG) system using LlamaIndex. You’ll learn how to ingest documents (PDFs, text, etc.), split them into manageable chunks, build a semantic search index (often vector-based), and then connect that index with a language model to serve context-aware responses or QA. The result: a system that can answer user queries based on your document collection. Such a system is smarter, more accurate, and grounded in actual data.

Key Skills to Learn

- Document ingestion and preprocessing: loading docs, cleaning text, chunking/splitting for indexing

- Working with indexing & embedding/vector stores to enable semantic retrieval

- Building a retrieval + generation pipeline: using LlamaIndex to fetch relevant context and feeding it to an LLM for answer synthesis

- Configuring retrieval parameters: chunk size, embedding model, and query engine settings to optimize retrieval quality

- Integrating retrieval and LLM-based generation into a seamless QA/application flow

Project Workflow

- Prepare your document corpus: PDFs, text files or any unstructured content you want the system to “know.”

- Preprocess and split documents into chunks or nodes so they can be effectively indexed.

- Build an index using LlamaIndex (vector-based or semantic), embedding the document chunks into a searchable store.

- Set up a query engine that retrieves relevant chunks given a user’s question or prompt.

- Integrate the index with an LLM: feed the retrieved context + user query to the LLM, let it generate a context-aware response.

- Test the system: ask varied questions, check response correctness and relevance. Adjust indexing or retrieval settings if needed (chunk size, embedding model, etc.).
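
Chunk size comes up repeatedly above, so here is a rough sketch of word-based chunking with overlap. This is not LlamaIndex's splitter; the function name and parameters are assumptions chosen to show the idea that neighbouring chunks share some words so context is not cut off at a boundary.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_text(sample, chunk_size=4, overlap=1)
print(chunks)
```

Smaller chunks tend to give more precise retrieval but less context per hit, which is why the workflow suggests revisiting this setting during testing.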

7. Build a Document Retriever Search Engine with LangChain

This project helps you build a document retriever–style search engine using LangChain. You’ll learn how to process large text corpora, break them into chunks, create embeddings, and connect everything to a vector database so that user queries return the most relevant documents. It’s a compact but powerful introduction to retrieval systems that sit at the core of modern RAG applications.

Key Skills to Learn

- Fundamentals of document retrieval and search engines

- Using LangChain for document loading, chunking, and embedding generation

- Indexing documents into a vector database for efficient similarity search

- Implementing retrievers that fetch the most relevant chunks for a given query

- Understanding how such retrieval systems plug into larger RAG or QA pipelines

Project Workflow

- Load a text corpus (for example, Wikipedia-like documents or knowledge base content) using LangChain document loaders.

- Chunk documents into smaller pieces and generate embeddings for each chunk.

- Store these embeddings in a vector database or in-memory vector store.

- Implement a retriever that, given a user query, finds and returns the most relevant document chunks.

- Test the search engine with different queries and refine chunking, embeddings, or retrieval settings to improve relevance.
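
To see what a retriever does under the hood, the sketch below builds a toy in-memory version. It is an illustration only: the "embedding" is a simple bag-of-words count vector rather than a learned embedding, and the class is hypothetical, not LangChain's retriever interface. The ranking logic (cosine similarity, top-k) is the same idea a vector store applies.

```python
import math
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * \
        math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryRetriever:
    """Hypothetical minimal retriever: stores chunks with their vectors."""

    def __init__(self, chunks):
        self.index = [(chunk, embed(chunk)) for chunk in chunks]

    def retrieve(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.index, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


retriever = InMemoryRetriever([
    "Paris is the capital of France",
    "The Nile is a river in Africa",
    "France is famous for cheese and wine",
])
top = retriever.retrieve("capital of France", k=1)
print(top)
```

Swapping `embed` for a real embedding model is what lets the retriever match on meaning rather than on shared words.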

8. Build a QA RAG system with LangChain

This project walks you through building a complete Question-Answering RAG system using LangChain. You’ll combine retrieval (vector search) with an LLM to create a powerful pipeline where the model answers questions using context pulled from your documents – making responses factual, grounded, and context-aware.

Key Skills to Learn

- Fundamentals of Retrieval-Augmented Generation (RAG)

- Integrating LLMs with vector databases for context-aware QA

- Using LangChain’s retrievers, indexes, and chains

- Building end-to-end QA pipelines with prompt templates and retrieval logic

- Improving RAG performance through chunking, embedding choice, and prompt design

Project Workflow

- Load documents, chunk them, and embed them for vector storage.

- Build a retriever that fetches the most relevant chunks for any query.

- Connect the retriever with an LLM using LangChain’s QA or RAG-style chains.

- Configure prompts so that the model uses the retrieved context while answering.

- Test the QA system with various questions and refine chunking, retrieval, or prompts to improve accuracy.
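
The prompt-configuration step is easy to picture as string assembly. Here is a minimal sketch, assuming the retrieved chunks arrive as a list of strings; the template wording and the `build_prompt` helper are hypothetical and not LangChain's prompt classes.

```python
PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context is not sufficient, say you do not know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\nAnswer:"
)


def build_prompt(question, retrieved_chunks):
    """Number each retrieved chunk and place it ahead of the user question."""
    context = "\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    return PROMPT_TEMPLATE.format(context=context, question=question)


prompt = build_prompt(
    "What is the capital of France?",
    ["Paris is the capital of France", "France is famous for cheese and wine"],
)
print(prompt)
```

Numbering the chunks makes it possible to ask the model to cite which passage it used, which helps when you debug wrong answers.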

9. Coding a ChatGPT-style Language Model From Scratch in PyTorch

This project shows you how to build a transformer-based language model similar to ChatGPT from the ground up using PyTorch. You get hands-on with all components: tokenization, embeddings, positional encodings, masked self-attention, and a decoder-only transformer. By the end, you’ll have coded, trained, and even deployed your own simple language model capable of generating text.

Key Skills to Learn

- Fundamentals of transformer-based language models: embeddings, positional encoding, masked self-attention, decoder-only transformer architecture

- Practical PyTorch skills: data preparation, model coding, training, and fine-tuning

- NLP fundamentals for generative tasks: handling tokenization, language model inputs & outputs

- Training and evaluating a custom LLM: loss functions, overfitting avoidance, and inference pipeline setup

- Deploying a custom language model: understanding how to go from prototype code to an inference-ready model

Project Workflow

- Prepare your text dataset and build tokenization + input-label pipelines.

- Implement core model components: embeddings, positional encodings, attention layers, and the decoder-only transformer.

- Train your model on the prepared data, monitoring training progress and tuning hyperparameters if needed.

- Validate the model’s text generation capability: sample outputs, check coherence, check for typical errors.

- Optionally fine-tune or iterate on model parameters/data to improve generation quality before deployment.
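
Masked self-attention is the piece that most people find hardest, so here is a framework-free sketch of it. It is written in pure Python for readability rather than PyTorch, handles a single head, and skips the learned query/key/value projections; those simplifications are mine, not the project's.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v: lists of T vectors (each a list of floats).
    Returns (outputs, attention_weights).
    """
    d = len(q[0])
    outputs, weights = [], []
    for t in range(len(q)):
        # Causal mask: position t may only attend to positions 0..t.
        scores = [
            sum(qi * ki for qi, ki in zip(q[t], k[s])) / math.sqrt(d)
            for s in range(t + 1)
        ]
        w = softmax(scores)
        weights.append(w + [0.0] * (len(q) - t - 1))  # masked positions get 0
        outputs.append([
            sum(w[s] * v[s][j] for s in range(t + 1))
            for j in range(len(v[0]))
        ])
    return outputs, weights


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = causal_self_attention(x, x, x)
for row in attn:
    print([round(w, 3) for w in row])
```

The printed weight matrix is lower-triangular: the first token can only look at itself, which is what stops the model from peeking at future tokens during training.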

10. Building Intelligent Chatbots using AI

This project teaches you how to build a modern AI-powered chatbot capable of understanding user queries, retrieving relevant information, and generating intelligent, context-aware responses. You’ll work with LLMs, retrieval pipelines, and chatbot frameworks. You’ll create an assistant that can answer accurately, handle documents, and support multimodal interactions depending on your setup.

Key Skills to Learn

- Designing end-to-end conversational AI systems

- Building retrieval-augmented chatbot pipelines using embeddings and semantic search

- Loading documents, generating embeddings, and enabling contextual question-answering

- Structuring conversational flows and maintaining context

- Incorporating responsible-AI practices: safety, bias checks, and transparent responses

Project Workflow

- Start by loading your knowledge base: PDFs, text documents, or custom datasets.

- Preprocess your content and generate embeddings for semantic retrieval.

- Build a retrieval-plus-generation pipeline: retrieval provides context, and the LLM generates accurate answers.

- Integrate the pipeline into a chatbot interface that supports conversational interactions.

- Test the chatbot end-to-end, evaluate response accuracy, and refine prompts and retrieval settings for better performance.

Agentic / Multi-Agent / Automation

11. Building a Collaborative Multi-Agent System

This project teaches you how to build a collaborative multi-agent AI system using a graph-based framework. Instead of a single agent doing all tasks, you design multiple agents (nodes) that communicate, coordinate, and share tasks. Such cross-communication and coordinated action enable modular, scalable AI workflows for complex, multi-step problems.

Key Skills to Learn

- Understanding multi-agent architecture: how agents function as nodes and coordinate via message passing.

- Using LangGraph to define agents, their roles, dependencies, and interactions.

- Designing workflows where different agents specialize (for example: data retrieval, processing, summarization, or decision-making) and collaborate.

- Managing state and context across agents, enabling sequences of operations, information flow, and context sharing.

- Building modular and maintainable AI systems, which are easier to extend or debug compared to monolithic agent setups.

Project Workflow

- Define the overall task or problem that needs multiple capabilities (e.g., research + summarization + reporting, or data pipeline + analysis + alerting).

- Decompose the problem into sub-tasks, then design a set of agents where each agent handles a specific sub-task or role.

- Model the agents and their dependencies using LangGraph: set up nodes, define inputs/outputs, and specify communication or data flow between them.

- Implement agent logic for each node: for example, data fetcher agent, analyzer agent, summarizer agent, etc.

- Run the multi-agent system end-to-end: supply input, let agents collaborate according to the flow defined in the graph, and capture the final output/result.

- Test and refine the workflow: evaluate output quality, debug agent interactions, and adjust data flows or agent responsibilities for better performance.
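
The "agents as nodes sharing state" idea can be prototyped in a few lines of plain Python. The sketch below deliberately does not use LangGraph's API; the node functions, the `NODES` and `EDGES` tables, and the state keys are all hypothetical names chosen to mirror the fetcher > analyzer > summarizer example above.

```python
def fetcher(state):
    # Stand-in for a data-retrieval agent.
    state["documents"] = [f"raw note about {state['task']}"]
    return state


def analyzer(state):
    # Stand-in for a processing agent that reads the fetcher's output.
    state["findings"] = [doc.upper() for doc in state["documents"]]
    return state


def summarizer(state):
    # Stand-in for a summarization agent.
    state["summary"] = f"{len(state['findings'])} finding(s) on {state['task']}"
    return state


NODES = {"fetcher": fetcher, "analyzer": analyzer, "summarizer": summarizer}
EDGES = {"fetcher": "analyzer", "analyzer": "summarizer", "summarizer": None}


def run_graph(task, entry="fetcher"):
    """Walk the graph from `entry`, passing one shared state dict between nodes."""
    state = {"task": task, "trace": []}
    node = entry
    while node is not None:
        state = NODES[node](state)
        state["trace"].append(node)  # record execution order for debugging
        node = EDGES[node]
    return state


result = run_graph("quarterly sales")
print(result["trace"], result["summary"])
```

Keeping the edges in a separate table is what makes the design modular: you can insert or reorder agents without touching their logic.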

12. Creating Problem-Solving Agents with GenAI for Actions

This project teaches you how to build GenAI-powered problem-solving agents that can think, plan, and execute actions autonomously. Instead of simply generating responses, these agents learn to break down tasks into smaller steps, compose actions intelligently, and complete end-to-end workflows. It’s an essential foundation for modern agentic AI systems used in automation, assistants, and enterprise workflows.

Key Skills to Learn

- Understanding agentic AI: how reasoning-driven agents differ from traditional ML models

- Task decomposition: breaking large problems into action-level steps

- Designing agent architectures that plan and execute actions

- Using GenAI models to enable reasoning, planning, and dynamic decision-making

- Building real, action-based AI workflows instead of static prompt-response systems

Project Workflow

- Start with the fundamentals of agentic systems. These include what agents are, how multi-agent structures work, and why reasoning matters.

- Define a clear problem the agent should solve, such as data extraction, chained automation, or multi-step tasks.

- Design the action-composition framework: how the agent decides steps, plans execution, and handles branching logic.

- Implement the agent using GenAI models to enable reasoning and action selection.

- Test the agent end-to-end and refine its planning or execution logic based on performance.

13. Build a Resume Review Agentic System with CrewAI

This project guides you to build an AI-powered resume review system using an agent framework. The system automatically analyses submitted resumes, evaluates key attributes (skills, experience, relevance), and provides structured feedback or scoring. It mimics how a recruiter would screen applications, but in an automated, scalable way.

Key Skills to Learn

- Building agentic systems tailored for document analysis and evaluation

- Parsing and extracting structured information from unstructured documents (resumes)

- Designing evaluation criteria and scoring logic aligned with job requirements

- Combining NLP techniques with agent orchestration to assess content (skills, experience, education, etc.)

- Automating feedback generation and structured output (review reports)

Project Workflow

- Begin by defining the evaluation criteria or rubric your resume-review agent should apply (e.g. skill match, years of experience, role relevance).

- Build or configure the agent framework (using CrewAI) to accept resumes as input: PDF, DOCX or text.

- Implement parsing logic to extract relevant fields (skills, experience, education, etc.) from the resume.

- Have the agent evaluate the extracted data against your criteria and generate structured feedback/scoring.

- Test the system with multiple resumes to check consistency, accuracy, and robustness – refine parsing and evaluation logic as needed.
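
A rubric is easier to reason about once it is written down as code. The sketch below scores an already-parsed resume against a rubric; the field names, weights, and sample data are assumptions for illustration, and in the actual project an LLM-driven agent would produce the parsed fields and the written feedback.

```python
def score_resume(resume, rubric):
    """Score parsed resume fields against a rubric; returns structured feedback."""
    required = set(rubric["required_skills"])
    matched = sorted(required & {skill.lower() for skill in resume["skills"]})
    skill_score = len(matched) / len(required) if required else 0.0

    min_years = rubric["min_years"]
    exp_score = min(resume["years_experience"] / min_years, 1.0) if min_years else 1.0

    weight = rubric["skill_weight"]  # share of the total given to skill match
    total = round(100 * (weight * skill_score + (1 - weight) * exp_score))
    return {
        "score": total,
        "matched_skills": matched,
        "missing_skills": sorted(required - set(matched)),
    }


# Hypothetical rubric and parsed resume.
rubric = {
    "required_skills": ["python", "sql", "nlp", "docker"],
    "min_years": 4,
    "skill_weight": 0.6,
}
resume = {"skills": ["Python", "SQL", "Excel"], "years_experience": 2}

feedback = score_resume(resume, rubric)
print(feedback)
```

Returning the matched and missing skills alongside the number keeps the output explainable, which matters when a score decides who gets screened out.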

14. Building a Data Analyst AI Agent

This project teaches you how to build an AI-powered data analyst agent that can automate your entire data workflow. This spans from loading raw datasets to generating insights, visualizations, summaries, and reports. The agent can interpret user queries in natural language, decide what analytical steps to perform, and return meaningful results without requiring manual coding.

Key Skills to Learn

- Understanding the fundamentals of agentic AI and how agents can automate analytical tasks

- Building data-oriented agent workflows for cleaning, preprocessing, analysis, and reporting

- Automating core analytics functions: EDA, summarisation, visualization, and pattern detection

- Designing decision-making logic so the agent chooses the right analytical operation based on user queries

- Integrating natural-language interfaces so users can ask questions in plain English and get data insights

Project Workflow

- Define the analysis scope: the dataset, the types of insights needed, and typical questions the agent should answer.

- Set up the agent framework and configure modules for data loading, cleaning, transformation, and analysis.

- Implement analytical functions: summaries, correlations, charts, trend analysis, etc.

- Build a natural-language query interface that maps user questions to the relevant analytical steps.

- Test using real queries and refine the agent’s decision logic for accuracy and reliability.
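
The decision logic that maps a question to an analytical step can start out very simple. Below is a keyword-routing sketch using only the standard library; the routes, function names, and sample data are hypothetical, and a real agent would typically let an LLM pick the tool instead of matching keywords.

```python
import statistics


def summarize(values):
    """Basic summary statistics for a numeric series."""
    return {"mean": statistics.mean(values), "min": min(values), "max": max(values)}


def trend(values):
    """Crude trend check: compare the last value with the first."""
    if values[-1] > values[0]:
        return "up"
    if values[-1] < values[0]:
        return "down"
    return "flat"


# Each route pairs trigger keywords with the analysis function to run.
ROUTES = [
    (("average", "mean", "summary"), summarize),
    (("trend", "growing", "increase"), trend),
]


def answer(query, values):
    """Pick the first analysis whose keywords appear in the query."""
    q = query.lower()
    for keywords, func in ROUTES:
        if any(keyword in q for keyword in keywords):
            return func(values)
    return "Sorry, I do not know how to answer that yet."


sales = [100, 120, 90, 150]
print(answer("What is the average sales figure?", sales))
print(answer("Is there a trend in sales?", sales))
```

The fallback message is worth keeping even in an LLM-based version, since an agent that guesses at an unsupported analysis is harder to trust than one that says so.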

15. Constructing Agent utilizing AutoGen

This project teaches you how to use AutoGen, a multi-agent AI framework, to build intelligent agents that can plan, communicate, and solve tasks collaboratively. You’ll learn how to structure agents with specific roles, enable them to exchange messages, integrate tools or models, and orchestrate full end-to-end workflows using agentic intelligence.

Key Skills to Learn

- Fundamentals of agentic AI and multi-agent system design

- Creating AutoGen agents with defined roles and capabilities

- Structuring communication flows between agents

- Integrating tools, LLMs, and external functions into agents

- Designing multi-agent workflows for research, automation, coding tasks, and reasoning-heavy problems

Project Workflow

- Set up the AutoGen environment and understand how agents, messages, and tools fit together.

- Define agent roles such as planner, assistant, or executor based on the task you want to automate.

- Build a minimal agent team and configure their communication logic.

- Integrate tools (like code execution or retrieval functions) to extend agent capabilities.

- Run a collaborative workflow: let agents plan, delegate, and execute tasks through structured interactions.

- Refine prompts, agent roles, and workflow steps to improve reliability and performance.

16. Getting Started with Strands Agents: Build Your First AI Agent

This project helps you build your first AI agent using Strands, a framework that enables agents to perform tasks, reason, and act. It’s designed for beginners, offering a hands-on introduction to building agentic systems that can perform structured tasks and workflows.

Key Skills to Learn

- Fundamentals of agentic AI: what agents are, how they reason and act.

- Understanding the Strands framework for building AI agents.

- Setting up an agent pipeline: from input intake to output/action.

- Designing tasks and actions: how to define what the agent needs to do.

- Testing and refining agent behaviour for reliability and correctness.

Project Workflow

- Install and configure the Strands environment and dependencies.

- Define a simple task you want your agent to perform (e.g. information retrieval, data summarization, simple automation).

- Build the agent logic: define inputs, expected actions or outputs, and how the agent processes requests.

- Run and test the agent: feed sample input, observe outputs, evaluate correctness.

- Iterate and refine: adjust prompt logic, input/output formatting or agent behaviour for better results.

17. Building a Customized Newsletter AI Agent

This project teaches you how to build an AI-powered system that automatically generates customized newsletters. Using an agent framework, you’ll create a pipeline that fetches content, summarises and formats it, and delivers a ready-to-send newsletter, automating what’s traditionally a tedious, manual process.

Key Skills to Learn

- Understanding agentic AI design: goal-setting, constraint modelling, task orchestration

- Using modern frameworks (e.g. for agents + LLMs) to build workflow-based AI systems for content automation

- Automating content gathering and summarisation for dynamic content sources

- Deploying and delivering results: integration with deployment platforms (e.g. via Replit/Streamlit), generating output in newsletter format

- Hands-on practical pipeline creation: from data ingestion to final newsletter output

Project Workflow

- Define the newsletter’s objective: what content you want (e.g. news summary, AI-trends roundup, curated articles), frequency, and target audience.

- Fetch or ingest content: gather articles/news/posts from web sources or datasets.

- Use an AI agent to process content: summarise, filter, and format the information as per newsletter requirements.

- Generate the newsletter: compile summaries into a structured newsletter layout.

- Deploy the system – optionally on a platform (e.g. via a simple web app) so you can trigger newsletter generation and delivery easily.

18. Adaptive Email Agents with DSPy

This project teaches you how to build adaptive, context-aware email agents using DSPy. Unlike fixed prompt-based responders, these agents dynamically select relevant context, retrieve past interactions, optimize prompts, and generate polished email replies automatically. The focus is on making email automation smarter, adaptive, and more reliable using DSPy’s structured framework.

Key Skills to Learn

- Designing adaptive agents that can retrieve, filter, and use context intelligently

- Understanding DSPy workflows for building robust LLM pipelines

- Implementing context-engineering techniques: context selection, compression, and relevance filtering

- Using DSPy optimization techniques (like MePro-style refinement) to improve output quality

- Automating email responses end-to-end: reading inputs, retrieving context, generating coherent replies

Project Workflow

- Set up the DSPy environment and understand its core workflow components.

- Build the context-handling logic: how the agent selects emails, threads, and relevant information from past conversations.

- Create the adaptive email pipeline: retrieval → prompt formation → optimization → response generation.

- Test the agent on example email threads and evaluate the quality, tone, and relevance of responses.

- Refine the agent by tuning context rules and improving prompt-optimization techniques for more adaptive behaviour.

19. Constructing an Agentic AI System with Bedrock

This project shows you how to build production-ready agentic AI systems using Amazon Bedrock as the backend. You’ll learn how to combine multi-agent design, managed LLM services, orchestration and deployment to create intelligent, context-aware agents that can reason, collaborate, and execute complex workflows, all without heavy infrastructure overhead.

Key Skills to Learn

- Fundamentals of agentic AI systems: what makes an agentic system different from simple LLM apps

- How to use Bedrock for agents: creating agents, setting up agent orchestration, and leveraging managed AI services

- Multi-agent orchestration: designing workflows where multiple agents collaborate to solve tasks

- Integrating external tools/APIs with agents: enabling agents to interact with data stores, databases or other services for real-world use cases

- Building scalable, production-ready AI systems by combining agents + managed cloud infrastructure

Project Workflow

- Start by understanding the concept: what “agentic AI” is, and how Bedrock supports building such systems.

- Design the agent architecture: define the number of agents, their roles, and how they’ll communicate or collaborate to achieve goals.

- Set up agents on Bedrock: configure and initialize agents using Bedrock’s agent-management capabilities.

- Integrate required external tools/services (APIs, databases, etc.) as per task requirements, so agents can fetch data, persist state, or interact with external systems.

- Implement orchestration logic so agents coordinate: pass context/state, trigger sub-agents, and handle dependencies.

- Test the full agentic workflow end-to-end: feed inputs, let agents collaborate, and inspect outputs.

- Iterate to refine logic, error-handling, orchestration, and integration to make the system robust and production-ready.
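
The orchestration step (passing state, triggering the next agent, handling dependencies) can be pictured independently of any cloud service. The sketch below uses plain Python functions as stand-ins for agents. In a real build each function body would call an agent hosted on Bedrock, which is not shown here. The agent names and the shape of the shared `state` dictionary are assumptions made for illustration.

```python
def research_agent(state):
    """Stand-in for an agent that gathers facts for the task."""
    state["facts"] = [f"fact about {state['task']}"]
    return state


def writer_agent(state):
    """Stand-in for an agent that drafts an answer from the gathered facts."""
    if not state.get("facts"):
        raise ValueError("writer_agent needs facts from research_agent")
    state["draft"] = "Summary: " + "; ".join(state["facts"])
    return state


def reviewer_agent(state):
    """Stand-in for an agent that approves or rejects the draft."""
    state["approved"] = state["draft"].startswith("Summary:")
    return state


def run_workflow(task, agents):
    """Pass one shared state dict through the agents in order.

    Errors are recorded in the state instead of crashing the workflow,
    so the caller can inspect which step failed.
    """
    state = {"task": task, "errors": []}
    for agent in agents:
        try:
            state = agent(state)
        except Exception as exc:
            state["errors"].append(f"{agent.__name__}: {exc}")
            break
    return state


result = run_workflow("quarterly sales", [research_agent, writer_agent, reviewer_agent])
print(result["draft"], result["approved"])

# Skipping the research step shows the dependency handling.
broken = run_workflow("quarterly sales", [writer_agent, reviewer_agent])
print(broken["errors"])
```

Keeping all context in one explicit state object makes it easy to log, persist, or replay a run, which is useful when iterating on error handling.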

20. Introduction to CrewAI: Building a Researcher Assistant Agent

This project teaches you how to build a “Researcher Assistant” AI agent using CrewAI. You learn how agents are defined, how they collaborate within a crew, and how to automate research tasks such as information gathering, summarization, and structured note creation. It’s the perfect starting point for understanding CrewAI’s agent-based workflow.

Key Skills to Learn

- Fundamentals of agentic AI and how CrewAI structures agents, tasks, and crews

- Defining agent roles and responsibilities within a research workflow

- Using CrewAI components to orchestrate multi-step research tasks

- Automating research tasks such as data retrieval, summarization, note-making, and report generation

- Building a functional Research Assistant that can handle end-to-end research prompts

Project Workflow

- Understand CrewAI’s architecture: how agents, tasks, and crews interact to form a workflow.

- Define the Research Assistant Agent’s scope: what information it should gather, summarize, or compile.

- Set up agents and tools within CrewAI, assigning each agent a clear role within the research flow.

- Assemble your agents into a crew so they can collaborate and pass information between steps.

- Run the agent on a research prompt: observe how it retrieves data, summarizes content, and generates structured output.

- Refine agent prompts, behaviour, or crew structure to improve accuracy and output quality.
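
To make the agent / task / crew relationship concrete, here is a small sketch written with plain dataclasses. The class names mirror the concepts described above, but they are local stand-ins defined in this snippet, not imports from the CrewAI library. The `handler` functions are hypothetical placeholders for the LLM calls a real agent would make.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    role: str
    # handler stands in for the LLM call a real agent would make
    handler: Callable[[str], str]


@dataclass
class Task:
    description: str
    agent: Agent


@dataclass
class Crew:
    tasks: List[Task]

    def kickoff(self, topic: str) -> List[str]:
        """Run tasks in order; each task receives the previous output."""
        outputs = []
        current = topic
        for task in self.tasks:
            current = task.agent.handler(current)
            outputs.append(f"[{task.agent.role}] {current}")
        return outputs


def gather(topic: str) -> str:
    """Pretend to collect raw notes on the topic."""
    return f"notes on {topic}: point A. point B. point C."


def summarize(notes: str) -> str:
    """Keep only the first sentence as a crude summary."""
    return notes.split(". ")[0] + "."


researcher = Agent(role="researcher", handler=gather)
summarizer = Agent(role="summarizer", handler=summarize)
crew = Crew(tasks=[
    Task("Collect raw notes on the topic", researcher),
    Task("Condense the notes into one line", summarizer),
])
report = crew.kickoff("vector databases")
for line in report:
    print(line)
```

The point of the sketch is the hand-off: the summarizer never sees the original topic, only what the researcher produced, which is why clear roles and well-scoped tasks matter when refining a crew.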

Applied / Domain-Specific AI

21. No Code Predictive Analytics with Orange

One of the most beginner-friendly data science projects, this one teaches you how to perform predictive analytics using Orange. For those unaware, Orange is a no-code, drag-and-drop data-mining platform. You’ll learn to build machine-learning workflows, run experiments, compare models, and extract insights from data without writing a single line of code. It’s perfect for learners who want to understand ML concepts through visual, interactive workflows rather than programming.

Key Skills to Learn

- Core machine-learning concepts: supervised & unsupervised learning

- Data preprocessing and feature exploration

- Building regression, classification, and clustering models

- Model evaluation: accuracy, RMSE, train-test split, cross-validation

- Visual, workflow-based ML experimentation with Orange

Project Workflow

- Start with the problem statement: understand what you want to predict using your dataset.

- Load your data into Orange using its simple drag-and-drop widgets.

- Preprocess your dataset by handling missing values, selecting features, and visualizing patterns.

- Choose your ML approach: regression, classification, or clustering, depending on your task.

- Experiment with multiple models by connecting different model widgets and observing how each performs.

- Evaluate the results using built-in evaluation widgets, comparing accuracy or error metrics.

- Interpret the insights and learn how predictive analytics can guide decision-making in real-world scenarios.
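
Orange itself needs no code, but it can help to see what the evaluation metrics named above actually compute. The following is a small illustrative sketch in plain Python. It is not how Orange implements these metrics, and the helper names and sample values are made up for the example.

```python
import math


def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error for numeric predictions."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def k_fold_indices(n_rows, k):
    """Yield (train, test) index lists for k contiguous folds."""
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_rows) if i < start or i >= start + size]
        yield train, test
        start += size


print(accuracy(["yes", "no", "yes", "no"], ["yes", "no", "no", "no"]))
print(rmse([3.0, 5.0], [1.0, 5.0]))
for train, test in k_fold_indices(5, 2):
    print(train, test)
```

Accuracy applies to classification and RMSE to regression. Cross-validation repeats the train-test split so that every row is used for testing exactly once. Real tools typically shuffle or stratify the rows before splitting, which this sketch omits.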

22. Generative AI on AWS (Case Study Project)

This project walks you through building generative AI applications on cloud infrastructure using AWS services. You’ll learn how to leverage AWS’s AI/ML stack, including foundation model services, inference endpoints, and AI-driven tools. This will help you build, host, and deploy gen-AI apps in a scalable, production-ready environment.

Key Skills to Learn

- Working with AWS AI/ML services, especially SageMaker and Amazon Bedrock

- Building and deploying generative AI applications (text, language, potentially multimodal) on AWS

- Integrating AWS tools/services (model hosting, inference, storage, API endpoints)

- Managing real-world deployment constraints: scalability, resource management, environment setup

- Understanding cloud-based ML workflows: from model selection to deployment and inference

Project Workflow

- Define your generative AI use case: decide what kind of gen-AI app you want (for example: text generation, summarisation, content creation).

- Select models via AWS services: use Bedrock (or SageMaker) to pick or load foundation / pre-trained models suitable for your use case.

- Configure cloud infrastructure: set up compute resources, storage (for data and model artifacts), and inference endpoints via AWS.

- Deploy the model to AWS: host the model on AWS, create endpoints or APIs so the model can serve real requests.

- Integrate input/output pipelines: manage user inputs (text, prompts, data), feed them to the model endpoint, and handle generated outputs.

- Test and iterate on the system: run generative tasks, check results for correctness, latency, and reliability; tweak parameters or prompts as needed.

- Scale & optimize deployment: make sure the system is production-ready by managing security, efficient resource usage, cost optimization, and reliability.
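
One way to approach the latency and reliability checks in the testing step is to wrap the endpoint call in a small helper that times the request and retries on transient failures. The sketch below assumes the model call is available as a Python callable. `FlakyEndpoint` is a hypothetical stand-in that fails once and then succeeds, and no AWS API is called. Retrying with exponential backoff is a common pattern chosen here for illustration, not something the original project prescribes.

```python
import time


def call_with_retries(invoke, prompt, max_attempts=3, base_delay=0.01):
    """Call invoke(prompt), retrying on failure with exponential backoff.

    Returns (output, attempts_used, elapsed_seconds).
    """
    start = time.perf_counter()
    for attempt in range(1, max_attempts + 1):
        try:
            output = invoke(prompt)
            return output, attempt, time.perf_counter() - start
        except RuntimeError:
            if attempt == max_attempts:
                raise
            # Wait base_delay, then 2x, 4x, ... before the next attempt.
            time.sleep(base_delay * 2 ** (attempt - 1))


class FlakyEndpoint:
    """Hypothetical stand-in for a hosted model endpoint that fails once."""

    def __init__(self):
        self.calls = 0

    def __call__(self, prompt):
        self.calls += 1
        if self.calls == 1:
            raise RuntimeError("throttled")
        return f"generated text for: {prompt}"


endpoint = FlakyEndpoint()
output, attempts, elapsed = call_with_retries(endpoint, "Summarise this report")
print(output, attempts, round(elapsed, 3))
```

Logging the attempt count and elapsed time per request gives a simple baseline for spotting throttling or slow responses before scaling up.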

23. Building a Sentiment Classification Pipeline with DistilBERT and Airflow

This project teaches you how to build an end-to-end sentiment-analysis pipeline using a modern transformer model (DistilBERT) combined with Apache Airflow for workflow automation. You’ll work with real review data, clean and preprocess it, fine-tune a transformer for sentiment prediction, and then orchestrate the entire pipeline so it runs in a structured, automated way. You also build a simple local interface so users can enter text and instantly get sentiment results.

Key Skills to Learn

- Using DistilBERT for transformer-based sentiment classification

- Text preprocessing and cleaning for real-world review datasets

- Workflow orchestration with Airflow: DAG creation, task scheduling, dependencies

- Automating ML pipelines end-to-end (data → model → inference)

- Building a simple local prediction interface for user-friendly model interaction

Project Workflow

- Load and clean the review dataset; preprocess text and prepare it for transformer inputs.

- Fine-tune or train a DistilBERT-based sentiment classifier on the cleaned data.

- Create an Airflow DAG that automates all steps: ingestion, preprocessing, inference, and output generation.

- Build a minimal local application to enter new text and retrieve sentiment predictions from the model.

- Test the full pipeline end-to-end and refine steps for stability, accuracy, and efficiency.
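
The idea behind the DAG step (tasks declared with dependencies, then run in a valid order) can be shown with Python’s standard library alone. This sketch uses `graphlib.TopologicalSorter` as a stand-in for the Airflow scheduler and a keyword rule as a stand-in for the fine-tuned DistilBERT model. The task names and sample reviews are assumptions for illustration, not code from the project.

```python
from graphlib import TopologicalSorter


def ingest(data):
    """Load raw reviews (hard-coded here in place of a real dataset)."""
    data["raw"] = ["  Great product!  ", "terrible SERVICE"]


def preprocess(data):
    """Trim whitespace and lowercase each review."""
    data["clean"] = [text.strip().lower() for text in data["raw"]]


def infer(data):
    """Keyword rule standing in for the fine-tuned DistilBERT classifier."""
    data["labels"] = [
        "positive" if "great" in text else "negative" for text in data["clean"]
    ]


def write_output(data):
    """Pair each cleaned review with its predicted label."""
    data["report"] = list(zip(data["clean"], data["labels"]))


tasks = {
    "ingest": ingest,
    "preprocess": preprocess,
    "infer": infer,
    "write_output": write_output,
}
# Each key lists the tasks that must finish before it can start.
dependencies = {
    "preprocess": {"ingest"},
    "infer": {"preprocess"},
    "write_output": {"infer"},
}

order = list(TopologicalSorter(dependencies).static_order())
data = {}
for name in order:
    tasks[name](data)
print(order)
print(data["report"])
```

In Airflow, the same structure would be expressed as operators inside a DAG definition, with the scheduler handling retries, logging, and timed runs on top of the ordering shown here.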

24. OpenEngage: Build a Complete AI-Driven Marketing Engine

This project/course shows you how to build an end-to-end AI-powered marketing engine that automates personalized customer journeys, engagement, and campaign management. You learn how large language models (LLMs) and automation can transform traditional marketing workflows into scalable, data-driven, and personalized marketing systems.

Key Skills to Learn

- How LLMs can be used to generate personalized content and tailor marketing messages at scale

- Designing and orchestrating customer journeys: mapping user behaviours to automated engagement flows

- Building AI-driven marketing pipelines: data capture, tracking user behaviour, segmentation, and multi-channel delivery (email, messages, etc.)

- Integrating AI-based personalization with traditional marketing/CRM workflows to optimize engagement and conversions

- Understanding how to build an AI marketing engine that reduces manual effort and scales with the user base

Project Workflow

- Understand the role of AI and LLMs in modern marketing systems and how they can improve personalization and engagement.

- Define marketing objectives and the customer journey: which campaigns, which user interactions, which personalization logic.

- Build or configure the marketing engine’s components: data capture/tracking, user segmentation, content generation via LLMs, and delivery mechanisms.

- Design automated pipelines that trigger personalized messages based on user behaviour or segments, leveraging AI for content and timing.

- Test the pipelines with sample users/data, monitor performance (engagement, response rates), and refine segmentation or content logic.
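
The segmentation-then-trigger logic described in this workflow can be sketched in a few lines. The example below assumes three hand-written segments and uses static templates where the real engine would call an LLM. The `User` fields, the segment rules, and the message text are hypothetical and are not taken from OpenEngage.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    days_since_last_visit: int
    purchases: int


def segment(user):
    """Assign a user to one segment using simple behaviour rules."""
    if user.purchases == 0:
        return "new"
    if user.days_since_last_visit > 30:
        return "lapsed"
    return "active"


# Placeholder templates; an LLM call would generate this text in practice.
TEMPLATES = {
    "new": "Hi {name}, here is a welcome offer for your first order.",
    "lapsed": "Hi {name}, we miss you. Here is what changed since your last visit.",
    "active": "Hi {name}, thanks for being a regular. New arrivals are in.",
}


def build_message(user):
    """Pick the template for the user's segment and fill in the name."""
    return TEMPLATES[segment(user)].format(name=user.name)


users = [
    User("Asha", days_since_last_visit=2, purchases=0),
    User("Ben", days_since_last_visit=45, purchases=3),
    User("Chen", days_since_last_visit=5, purchases=7),
]
for user in users:
    print(segment(user), "->", build_message(user))
```

Separating the segment rules from the content step means either can be refined on its own when engagement numbers suggest a change.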

25. How to Build an Image Generator Web App with Zero Coding

This project guides you to build a web application that generates images using generative AI, all without writing any code. It’s a drag-and-drop, no-programming route for anyone who wants to launch an image generator web app quickly, using prebuilt components and interfaces.

Key Skills to Learn

- Understanding generative AI for images: how AI models can create visuals from prompts

- Using no-code or low-code tools to build web applications that integrate AI image generation

- Designing the user interface and user flow for a web app without coding

- Deploying a functioning web app that connects to an AI backend for real-time image generation

- Managing image input/output, prompt handling, and user requests in a no-code environment

Project Workflow

- Choose a no-code/low-code platform or tool that supports AI image generation + web-app building.

- Configure the backend with a pre-trained generative AI model that can generate images based on user prompts.

- Design the front-end using drag-and-drop UI components: input prompt field, generate button, display area for results.

- Link the front-end to the AI backend: ensure user inputs are passed correctly, and generated images are returned and displayed.

- Test the app thoroughly by submitting different prompts, checking output images, and verifying usability and performance.

- Optionally deploy/publish the web app so others can use it (on a hosting platform or a web-app hosting service).

26. GenAI to Build Exciting Games

This project teaches you how to build fun and interactive games powered by Generative AI. You’ll explore how AI can drive game logic, generate dynamic content, respond to player inputs, and create engaging experiences, all without needing an advanced game-development background. It’s a creative, hands-on way to understand how GenAI can be used beyond traditional data or text applications.

Key Skills to Learn

- Applying Generative AI models to design game mechanics

- Integrating AI tools/APIs to create dynamic, responsive gameplay

- Designing user interaction flows for AI-powered games

- Handling prompt-based generation and varied user inputs

- Building lightweight interactive applications using AI as the core engine

Project Workflow

- Start by choosing a simple game concept where AI generation adds value, for example a guessing game, storytelling challenge, or AI-generated puzzle.

- Define the game loop: how the user interacts, what input they give, and what the AI generates in response.

- Integrate a generative AI model to provide dynamic content, hints, storylines, or choices.

- Build the interaction flow: capture user input, call the AI model, format outputs, and return results to the player.

- Test the game with different inputs, refine prompts for better responses, and improve the overall gameplay experience.
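
The game loop described above (capture input, call the model, return a response) can be prototyped before any model is connected. This is a minimal sketch of a number-guessing game in which `generate_hint` is a hypothetical rule-based stand-in for the generative model call. Swapping in a real model would mean replacing only that function.

```python
def generate_hint(secret, guess):
    """Stand-in for a generative model call that returns a hint."""
    if guess == secret:
        return "Correct!"
    return "Too low." if guess < secret else "Too high."


def play(secret, guesses, hint_fn=generate_hint):
    """Run the game loop over a scripted list of guesses.

    Returns the transcript as (guess, response) pairs and stops
    as soon as the player finds the secret number.
    """
    transcript = []
    for guess in guesses:
        response = hint_fn(secret, guess)
        transcript.append((guess, response))
        if guess == secret:
            break
    return transcript


for guess, response in play(secret=7, guesses=[3, 9, 7, 1]):
    print(guess, response)
```

Passing the hint function as a parameter keeps the loop testable with scripted inputs, which helps when refining prompts later.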

Conclusion

If you have managed to follow all or any of the AI and data science projects above, I’m sure you have gained far more practical skills than you would have from a purely theoretical understanding of these topics. The best part: these projects cover everything from classical ML to advanced agentic systems, RAG pipelines, and even game-building with GenAI. Each project is designed to help you turn skills into real, portfolio-ready outcomes. Whether you’re just starting out or levelling up as a professional, these projects are sure to help you understand how modern AI systems work in a whole new way.

This is your 2025 blueprint for learning AI and data science. Now dive into the ones that excite you most, follow the structured workflows, and create something extraordinary.


![25+ AI and Data Science Solved Projects [2025 Wrap-up]](https://cdn.analyticsvidhya.com/wp-content/uploads/2025/12/25-AI-and-Data-Science-Solved-Projects-2025-Wrap-up.png)